Incentives from a causal perspective

post by tom4everitt, James Fox, RyanCarey, mattmacdermott, sbenthall, Jonathan Richens (jonrichens) · 2023-07-10T17:16:28.373Z

Post 4 of Towards Causal Foundations of Safe AGI, preceded by Post 1: Introduction, Post 2: Causality, and Post 3: Agency.

By Tom Everitt, James Fox, Ryan Carey, Matt MacDermott, Sebastian Benthall, and Jon Richens, representing the Causal Incentives Working Group. Thanks also to Toby Shevlane and Aliya Ahmad.

“Show me the incentive, and I’ll show you the outcome” – Charlie Munger

Predicting behaviour is an important problem when designing and deploying agentic AI systems. Incentives capture some key forces that shape agent behaviour,[1] and analysing them doesn’t require us to fully understand the internal workings of a system.

This post shows how a causal model of an agent and its environment can reveal what the agent wants to know and what it wants to control, as well as how it will respond to commands and influence its environment. A complementary result shows that some incentives can only be inferred from a causal model, so a causal model of the agent’s environment is strictly necessary for a full incentive analysis.

Value of information

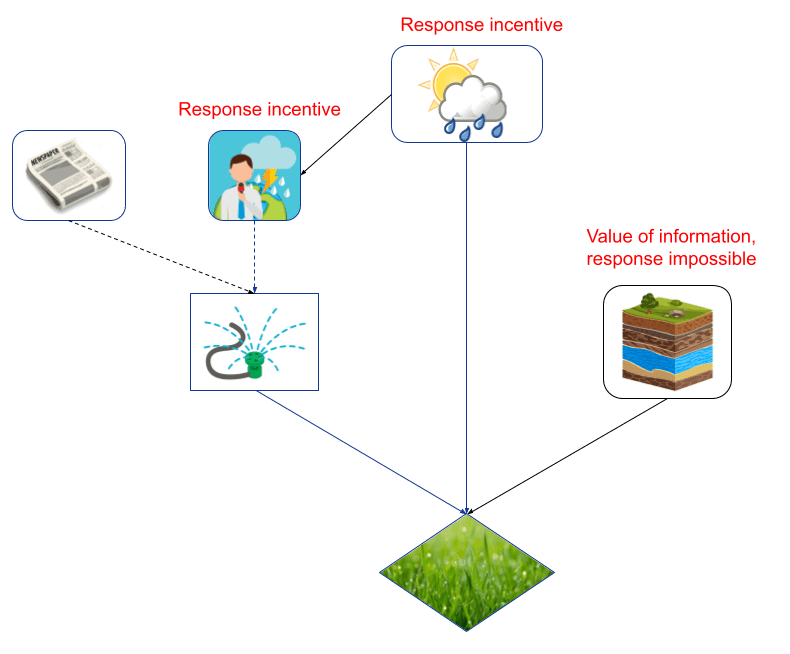

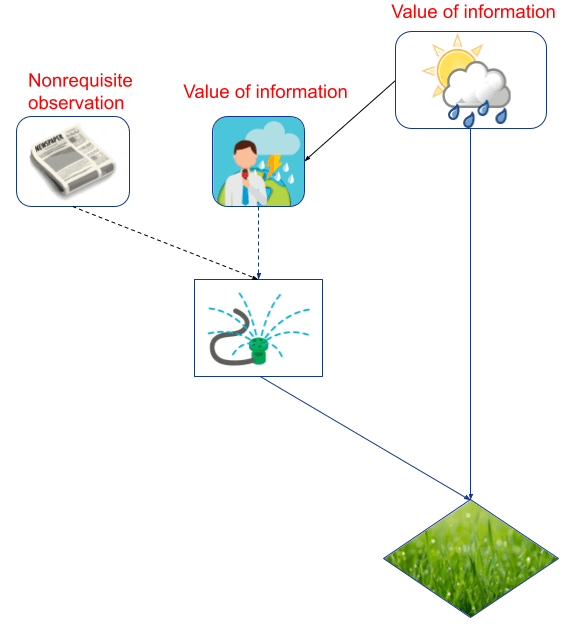

What information would an agent like to learn? Consider, for example, Mr Jones deciding whether to water his lawn, based on the weather report, and whether the newspaper arrived in the morning. Knowing the weather means that he can water more when it will be sunny than when it will be raining, which saves water and improves the greenness of the grass. The weather forecast therefore has information value for the sprinkler decision, and so does the weather itself, but the newspaper arrival does not.

We can quantify how useful observing the weather is for Mr Jones by comparing his expected utility in a world in which he does observe the weather to a world in which he doesn’t. (This measure only makes sense if we can assume that Mr Jones adapts appropriately to the different worlds, i.e. he needs to be agentic in this sense.)
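As a rough illustration of this comparison, the sketch below computes Mr Jones's expected utility with and without observing the weather. The probabilities and utility numbers are invented for the example and do not come from the post; only the structure of the comparison (best single action versus best action per observed weather) reflects the definition above.

```python
# Hypothetical numbers: a 50/50 prior over the weather and a utility table
# that rewards watering on sunny days and saving water on rainy days.
P_WEATHER = {"sunny": 0.5, "rainy": 0.5}
UTILITY = {
    ("sunny", True): 8,    # watered on a sunny day: green grass, some water used
    ("sunny", False): 2,   # not watered on a sunny day: grass dries out
    ("rainy", True): 6,    # watered on a rainy day: water wasted
    ("rainy", False): 10,  # not watered on a rainy day: green grass for free
}
ACTIONS = (True, False)  # True means "turn the sprinkler on"


def eu_without_observation():
    """Best expected utility when one action must be chosen for all weather."""
    return max(
        sum(p * UTILITY[(w, a)] for w, p in P_WEATHER.items())
        for a in ACTIONS
    )


def eu_with_observation():
    """Best expected utility when the action may depend on the weather."""
    return sum(
        p * max(UTILITY[(w, a)] for a in ACTIONS)
        for w, p in P_WEATHER.items()
    )


def value_of_information():
    """Expected utility gained by observing the weather before acting."""
    return eu_with_observation() - eu_without_observation()
```

With these assumed numbers, the unobserved case yields 7.0 (always water), the observed case yields 9.0, and the value of information is 2.0.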

The causal structure of the environment reveals which variables provide useful information. In particular, the d-separation criterion captures whether information can flow between variables in a causal graph when a subset of the variables is observed. In single-decision graphs, value of information is possible when there is an information-carrying path from a variable to the agent’s utility node, when conditioning on the decision node and its parents (i.e. the “observed” nodes).

For example, in the above graph, there is an information-carrying path from forecast to weather to grass greenness, when conditioning on the sprinkler, forecast and newspaper. This means that the forecast can (and likely will) provide useful information about optimal watering. In contrast, there is no such path from the newspaper arrival. In that case, we call the information link from the newspaper to the sprinkler nonrequisite.
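The check described above can be sketched in a few lines of plain Python. The edge list is my reconstruction of the sprinkler graph from the text (the figure itself is not reproduced here), and the conditioning set is taken to be the decision and its parents, excluding the variable being tested; both are assumptions. D-separation is tested with the standard moralised ancestral graph method.

```python
from itertools import combinations

# Assumed reconstruction of the sprinkler graph described in the text.
EDGES = [
    ("weather", "forecast"),
    ("weather", "grass"),
    ("forecast", "sprinkler"),   # information link
    ("newspaper", "sprinkler"),  # information link
    ("sprinkler", "grass"),
]
DECISION = "sprinkler"
UTILITY = "grass"


def parents(node, edges):
    return {a for a, b in edges if b == node}


def ancestors_of(nodes, edges):
    """Return the given nodes together with all of their ancestors."""
    seen, stack = set(nodes), list(nodes)
    while stack:
        for p in parents(stack.pop(), edges):
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen


def d_separated(x, y, given, edges):
    """True if x and y are d-separated by `given` (moral ancestral graph test)."""
    keep = ancestors_of({x, y} | set(given), edges)
    sub = [(a, b) for a, b in edges if a in keep and b in keep]
    undirected = {frozenset(e) for e in sub}
    for child in keep:  # "marry" parents that share a child
        for p, q in combinations(sorted(parents(child, sub)), 2):
            undirected.add(frozenset((p, q)))
    seen, frontier = {x}, [x]
    while frontier:  # search for y while avoiding conditioned nodes
        n = frontier.pop()
        if n == y:
            return False
        for e in undirected:
            if n in e:
                (m,) = e - {n}
                if m not in seen and m not in given:
                    seen.add(m)
                    frontier.append(m)
    return True


def has_value_of_information(node, edges=EDGES):
    """Graphical test: can `node` carry information about the utility?"""
    observed = (parents(DECISION, edges) | {DECISION}) - {node}
    return not d_separated(node, UTILITY, observed, edges)
```

Under these assumptions, `has_value_of_information` returns `True` for `"forecast"` and `"weather"` and `False` for `"newspaper"`, matching the discussion above.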

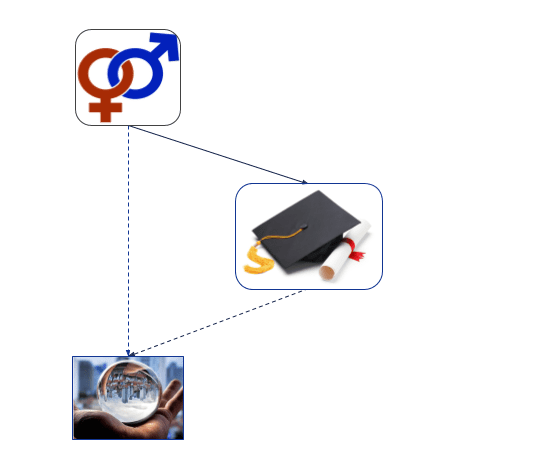

Understanding what information an agent wants to obtain is useful for several reasons. First, in e.g. fairness settings, the question of why a decision was made is often as important as what the decision was. Did gender determine a hiring decision? Value of information can help us understand what information the system is trying to glean from its available observations (though a formal understanding of proxies remains an important open question).

More philosophically, some researchers consider an agent’s cognitive boundary as the events that the agent cares to measure and influence. Events that lack value of information must fall outside the measuring part of this boundary.

Response Incentives

Related to the value of information are response incentives: what changes in the environment would a decision chosen by an optimal policy respond to? Changes are operationalised as post-policy interventions, i.e. as interventions that the agent cannot change its policy in response to (the decision might still be influenced under a fixed policy).

For example, Mr Jones is incentivised to adopt a policy that waters or not based on the weather forecast. This means that his decision will respond to interventions both on the weather forecast and on the weather itself (assuming the forecast reports those changes). But his watering decision will not respond to changes to the newspaper, as it’s a nonrequisite observation. He is also unable to respond to changes to variables that are not causal ancestors of his decision, such as the groundwater level or the (future) greenness of the grass.
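A minimal simulation can make the idea of a post-policy intervention concrete. The structural equations below (a perfectly accurate forecast, a policy that waters exactly when the forecast says sunny, and a toy rule for grass colour) are assumptions made for illustration, not taken from the post. The policy is held fixed while one variable at a time is intervened on, and we record whether the sprinkler decision changes.

```python
def policy(forecast, newspaper):
    """Assumed optimal policy: water iff the forecast says sunny.

    The newspaper is observed but ignored (a nonrequisite observation).
    """
    return forecast == "sunny"


def simulate(do=None):
    """Run the toy model once, overriding any variables listed in `do`."""
    do = do or {}
    weather = do.get("weather", "sunny")
    forecast = do.get("forecast", weather)  # assumed perfectly accurate
    newspaper = do.get("newspaper", "arrived")
    groundwater = do.get("groundwater", "low")
    sprinkler = policy(forecast, newspaper)  # the policy itself stays fixed
    watered = sprinkler or weather == "rainy" or groundwater == "high"
    grass = do.get("grass", "green" if watered else "brown")
    return {
        "weather": weather,
        "forecast": forecast,
        "newspaper": newspaper,
        "groundwater": groundwater,
        "sprinkler": sprinkler,
        "grass": grass,
    }


def responds_to(variable, values):
    """True if intervening on `variable` changes the sprinkler decision."""
    decisions = {simulate({variable: v})["sprinkler"] for v in values}
    return len(decisions) > 1
```

In this toy model, `responds_to("weather", ["sunny", "rainy"])` and `responds_to("forecast", ["sunny", "rainy"])` are `True`, while interventions on the newspaper, the groundwater level, or the grass leave the decision unchanged.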

Response incentives are important because we want agents to respond to our commands in appropriate ways, such as switching off when asked. Conversely, in fairness, we often want a decision to not respond to certain things, e.g. we don’t want a person’s gender to influence a hiring decision, at least not along particular paths. For example, if an AI system is used to filter candidates for interview, what if gender only indirectly influences the prediction, via the university degree the person acquired?

A limitation of graphical analysis is that it can only make a binary distinction: either an agent is incentivised to respond, or it is not incentivised to respond at all. Further work may develop a more fine-grained analysis of when an agent will respond appropriately, which could be thought of as causal mechanism design.

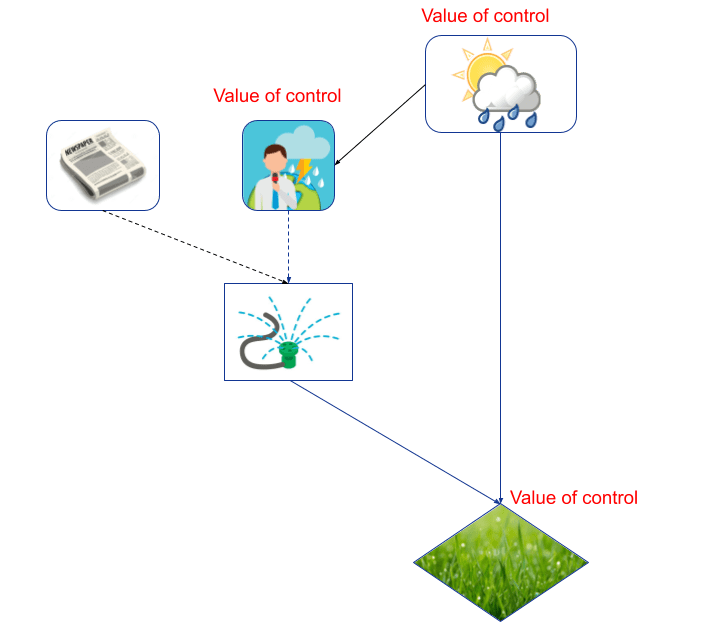

Value of Control

The dual of information is control. While information can flow in both directions over a causal relationship (the ground being wet is evidence of rain, and vice versa), influence can only flow forward over causal arrows. This makes it particularly easy to infer Value of Control from a causal graph, by simply checking for directed paths to the agent’s utility node.

For example, there is a directed path from weather to grass greenness, so Mr Jones may value controlling the weather. He might also value controlling the weather forecast, in the sense that he wants to make it more accurate. And trivially, he wants to control the grass itself. But controlling the newspaper lacks value, because the only directed path from the newspaper to the grass contains a nonrequisite information link.
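The directed-path check can be sketched as a simple reachability search. The adjacency list below is an assumed reconstruction of the sprinkler graph, with the nonrequisite newspaper-to-sprinkler link already removed, as the paragraph above suggests; the node names are mine.

```python
# Assumed sprinkler graph after dropping the nonrequisite information link
# from the newspaper to the sprinkler decision.
REDUCED_GRAPH = {
    "weather": ["forecast", "grass"],
    "forecast": ["sprinkler"],
    "newspaper": [],  # newspaper -> sprinkler removed as nonrequisite
    "sprinkler": ["grass"],
    "grass": [],
}
UTILITY = "grass"


def has_directed_path(graph, start, goal):
    """Depth-first search for a directed path (of length >= 0) to `goal`."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(graph[node])
    return False


def has_value_of_control(node, graph=REDUCED_GRAPH):
    """Graphical test: is there a directed path from `node` to the utility?"""
    return has_directed_path(graph, node, UTILITY)
```

Here `has_value_of_control` is `True` for `"weather"`, `"forecast"` and `"grass"`, and `False` for `"newspaper"`.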

Value of control is important from a safety perspective, as it reveals what variables the agent would like to influence if it could (i.e. it bounds the control-part of the agent’s cognitive boundary).

Instrumental Control Incentives

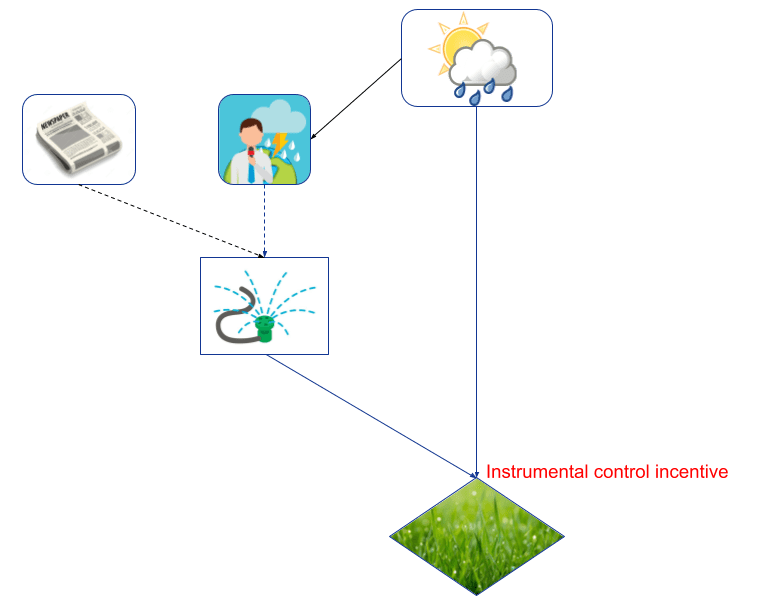

Instrumental control incentives are a refinement of value of control to nodes that the agent is both able and willing to control. For example, even though Mr Jones would like to control the weather, he is unable to, because his decision does not influence the weather (there is no directed path from his decision to the weather).

There is a simple graphical criterion for an instrumental control incentive: to have it, a variable must sit on, or at the end of, a directed path from the agent’s decision to its utility (the grass sits at the end of the path sprinkler -> grass).
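This criterion amounts to two reachability checks: the variable must be reachable from the decision, and the utility must be reachable from the variable. The sketch below applies it to an assumed reconstruction of the sprinkler graph; the node names and edges are my reading of the example, not a published specification.

```python
# Assumed sprinkler graph: each node maps to its direct causal children.
GRAPH = {
    "weather": ["forecast", "grass"],
    "forecast": ["sprinkler"],
    "newspaper": ["sprinkler"],
    "sprinkler": ["grass"],
    "grass": [],
}
DECISION = "sprinkler"
UTILITY = "grass"


def reachable(graph, start, goal):
    """True if `goal` equals `start` or can be reached along directed edges."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(graph[node])
    return False


def has_instrumental_control_incentive(node, graph=GRAPH):
    """Graphical test: does `node` lie on a directed path decision -> utility?"""
    return reachable(graph, DECISION, node) and reachable(graph, node, UTILITY)


# Evaluate the criterion for every non-decision node in the assumed graph.
ICI = {n: has_instrumental_control_incentive(n) for n in GRAPH if n != DECISION}
```

In this graph only `"grass"` satisfies the criterion; the weather, forecast and newspaper all fail the first check because the sprinkler decision has no causal influence on them.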

However, it is less obvious how to characterise instrumental control incentives in terms of behaviour. How do we know that the agent wants to control a variable that it is already able to influence? Simply giving the agent full control of the variable would not work, as that would bring us back to value of control.

In our agent incentives paper, we operationalise it by considering a hypothetical environment, where the agent has two copies of its decision: one that only influences the environment via a variable V, and one that influences the environment in all other ways. If the first decision-copy influences the agent’s utility, then V has an instrumental control incentive. This makes sense, because only if the decision influences V, and V in turn influences utility, can the first decision-copy influence the agent’s utility. Halpern and Kleiman-Weiner consider a related hypothetical: what if the variable wasn’t influenced by the agent’s decision? Would the agent then take a different action? This leads to the same graphical condition.

Instrumental control incentives have been used to analyse reward tampering and user manipulation, leading to path-specific objectives as a suggested method for ethical content recommenders [see next post]. Other methods that remove instrumental control incentives include decoupled approval, current-RF optimisation, counterfactual oracles, countermeasures for auto-induced distributional shift, and ignoring effects through some channel.

A question for future work is how to quantify an agent’s degree of influence, as discussed in the agency post.

Multi-decision and multi-agent extensions

Agents often interact over multiple timesteps with an environment that contains other agents as well. Sometimes, the single-decision, single-agent analysis extends to these settings, in one of two ways:

- Assume all but one decision are following fixed, non-adaptive policies, or

- Consider a multi-decision policy as a single decision, that simultaneously decides decision rules for all concrete decisions.

Both options have drawbacks. Option 2 only works in single-agent situations, and even in those situations some nuance is lost, as we can no longer say which decision an incentive is associated with.

Option 1 isn’t always an appropriate modelling choice, as policies do adapt. Except for response incentives, the incentives we’ve discussed above are all defined in terms of hypothetical changes to the environment, such as adding or removing an observation (value of information), or improving the agent’s control (value of control, instrumental control incentives). Why would policies remain fixed under such changes?

For example, if an adversary knows that I have access to more information, they might behave more cautiously. Indeed, more information can sometimes decrease expected utility in multi-agent situations. Multi-agent dynamics can also make agents behave as if they have an instrumental control incentive on a variable, even though they don’t satisfy the single-agent criterion. For example, the actor in an actor-critic architecture behaves (chooses actions) as if it tries to control the state and get more reward, even though it doesn’t satisfy the definition of a single-decision, single-agent instrumental control incentive.

For these reasons, we’ve been working to extend the incentive analysis to multiple decisions. We’ve established a complete graphical criterion for the value of information of chance nodes in single-agent, multi-decision influence diagrams with sufficient recall, and a way to model forgetfulness and absent-mindedness. Future work may push further in these directions.

In the discovering agents paper, we also suggest a condition for when the single-decision criterion can be used: it’s when no other mechanisms adapt to the relevant intervention.

Conclusions

In this post, we have shown how causal models can make various types of incentives precise, and how those incentives can be inferred from a causal graph. We have also argued that it is impossible to infer most types of incentives without a causal model of the world. Natural directions for further research include:

- Extend the result by Miller et al to other types of incentives, establishing for which incentives a causal model is strictly necessary.

- When is a system incentivised to use an observation as a proxy for another variable? Value of information and response incentives give clues, but further work is needed to fully understand the conditions.

- Develop causal mechanism design for understanding how to incentivise agents to respond in appropriate ways, and understand their degree of influence.

- Continue extending incentive analysis to multi-decision and multi-agent settings, with generalised definitions and graphical criteria that work in graphs with multiple decisions and agents.

In the next post, we’ll apply the incentive analysis to various misspecification problems and solutions, such as manipulation, recursion, interpretability, impact measures, and path-specific objectives.

[1] Other such forces include computational constraints, the choice of learning algorithm, and the environment interface.
