DeepMind alignment team opinions on AGI ruin arguments

post by Vika · 2022-08-12T21:06:40.582Z · 37 comments

Contents

- Summary of agreements, disagreements and implications

- Section A: "strategic challenges" (#1-9)

- Section B.1: The distributional leap (#10-15)

- Section B.2: Central difficulties of outer and inner alignment (#16-24)

- Section B.3: Central difficulties of sufficiently good and useful transparency / interpretability (#25-33)

- Section B.4: Miscellaneous unworkable schemes (#34-36)

- Section C: "civilizational inadequacy" (#37-43)

- 37 comments

We had some discussions of the AGI ruin arguments within the DeepMind alignment team to clarify for ourselves which of these arguments we are most concerned about and what the implications are for our work. This post summarizes the opinions of a subset of the alignment team on these arguments. Disclaimer: these are our own opinions that do not represent the views of DeepMind as a whole or its broader community of safety researchers.

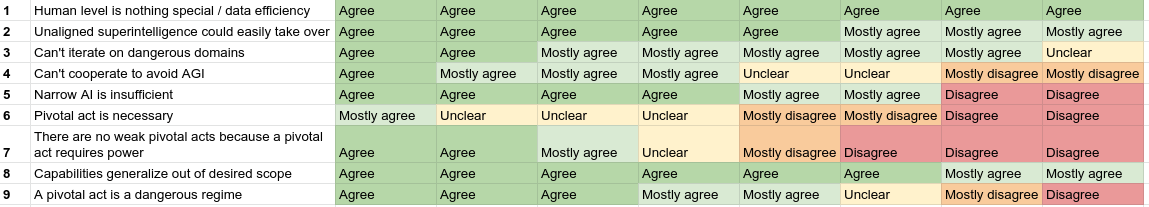

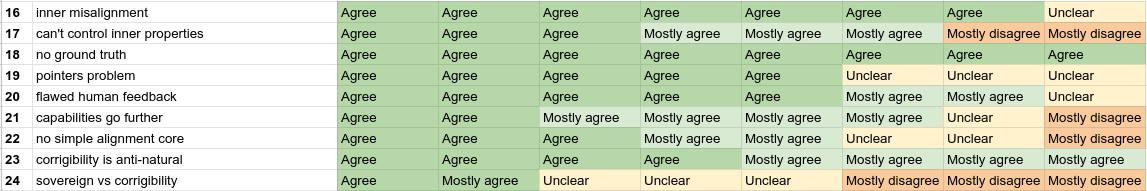

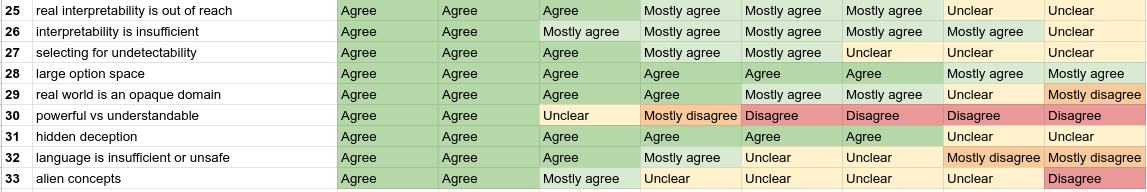

This doc shows opinions and comments from 8 people on the alignment team (without attribution). For each section of the list, we show a table summarizing agreement / disagreement with the arguments in that section (the tables can be found in this sheet). Each row is sorted from Agree to Disagree, so a column does not correspond to a specific person. We also provide detailed comments and clarifications on each argument from the team members.

For each argument, we include a shorthand description in a few words for ease of reference, and a summary in 1-2 sentences (usually copied from the bolded parts of the original arguments). We apologize for some inevitable misrepresentation of the original arguments in these summaries. Note that some respondents looked at the original arguments while others looked at the summaries when providing their opinions (though everyone has read the original list at some point before providing opinions).

A general problem when evaluating the arguments was that people often agreed with the argument as stated, but disagreed about the severity of its implications for AGI risk. A lot of these ended up as "mostly agree / unclear / mostly disagree" ratings. It would have been better to gather two separate scores (agreement with the statement and agreement with implications for risk).

Summary of agreements, disagreements and implications

Most agreement:

- Section A ("strategic challenges"): #1 (human level is nothing special), #2 (unaligned superintelligence could easily take over), #8 (capabilities generalize out of desired scope)

- Section B1 (distributional leap): #14 (some problems only occur in dangerous domains)

- Section B2 (outer/inner alignment): #16 (inner misalignment), #18 (no ground truth), #23 (corrigibility is anti-natural)

- Section B3 (interpretability): #28 (large option space)

- Section B4 (miscellaneous): #36 (human flaws make containment difficult)

Most disagreement:

- #6 (pivotal act is necessary). We think it's necessary to end the acute risk period, but don't agree with the "pivotal act" framing that assumes that the risk period is ended through a discrete unilateral action by a small number of actors.

- #24 (sovereign vs corrigibility). We think this kind of equivocation isn't actually happening much in the alignment community. Our work focuses on building corrigible systems (rather than sovereigns), and we expect that the difficulties of this approach could be surmountable, especially if we can figure out how to avoid building arbitrarily consequentialist systems.

- #39 (can't train people in security mindset). Most of us don't think it's necessary to generate all the arguments yourself in order to make progress on the problems.

- #42 (there's no plan). The kind of plan we imagine Eliezer to be thinking of does not seem necessary for a world to survive.

Most controversial among the team:

- Section A ("strategic challenges"): #4 (can't cooperate to avoid AGI), #5 (narrow AI is insufficient), #7 (no weak pivotal acts), #9 (pivotal act is a dangerous regime)

- Section B1 (distributional leap): #13 and #15 (problems above intelligence threshold and correlated capability gains)

- Section B2 (outer/inner alignment): #17 (inner properties), #21 (capabilities go further), #22 (simple alignment core)

- Section B3 (interpretability): #30 (powerful vs understandable), #32 (language is insufficient), #33 (alien concepts)

- Section B4 (miscellaneous): #35 (multi-agent is single-agent)

- Section C ("civilizational inadequacy"): #38 (lack of focus), #41 (have to write this list), #43 (unawareness of risks)

Cruxes from the most controversial arguments:

- How powerful does a system / plan need to be to save the world?

- Is global cooperation sufficiently difficult that AGI would need to deploy new powerful technology to make it work?

- Will we know how capable our models are?

- Will capabilities increase smoothly?

- Will systems acquire the capability to be useful for alignment / cooperation before or after the capability to perform advanced deception?

- Is consequentialism a powerful attractor? How hard will it be to avoid arbitrarily consequentialist systems?

- What is the overhead for aligned vs not-aligned AGI?

Possible implications for our work:

- Work on cooperating to avoid unaligned AGI (compute governance, publication norms, demonstrations of misalignment, etc.)

- Investigate techniques for limiting unaligned consequentialism, e.g. process-based feedback, limiting situational awareness, limited domains

- Work on improving capability monitoring and control

- Empirically investigate to what extent selection for undetectability / against interpretability occurs in practice as systems become more capable

- Continue and expand our work on mechanistic interpretability and process-based feedback

Section A: "strategic challenges" (#1-9)

Summary

Detailed comments

#1. Human level is nothing special / data efficiency

Summary: AGI will not be upper-bounded by human ability or human learning speed (similarly to AlphaGo). Things much smarter than human would be able to learn from less evidence than humans require.

- Agree (though don't agree with the implication that it will be discontinuous)

- Agree (strongly, and possibly a major source of disagreement with broader ML community)

#2. Unaligned superintelligence could easily take over

Summary: A cognitive system with sufficiently high cognitive powers, given any medium-bandwidth channel of causal influence, will not find it difficult to bootstrap to overpowering capabilities independent of human infrastructure.

- Agree (including using human institutions and infrastructure for its own ends)

- Agree ("sufficiently high" is doing a lot of the work here)

- Mostly agree - it depends a lot on what "medium-bandwidth" means, and also how carefully the system is being monitored (e.g. how we're training the system online). I think "text-only channel, advising actions of a lot of people who tend to defer to the system" is probably enough for takeover if we're not being that careful. But I think I probably disagree with the mechanism of takeover intended by Yudkowsky here.

#3. Can't iterate on dangerous domains

Summary: At some point there will be a 'first critical try' at operating at a 'dangerous' level of intelligence, and on this 'first critical try', we need to get alignment right.

- (x2) Mostly agree (misleading, see Paul's Disagreement #1)

- Mostly agree. It's not clear that attempting alignment and failing will necessarily be as irrecoverable as unaligned operation, but does seem very likely. If "dangerous" just means failing implies extinction then this statement is a truism.

- Mostly agree (though "get alignment right on the first critical try" may lean heavily on "throttle capability until we get alignment right")

#4. Can't cooperate to avoid AGI

Summary: The world can't just decide not to build AGI.

- Unclear. I think this depends a lot on what exactly happens in the real world in the next decade or so, and we might be able to buy time.

- Unclear. Agree that worldwide cooperation to avoid AGI would be very difficult, but cooperation between Western AI labs seems feasible and could potentially be sufficient to end the acute risk period or buy more time (especially under short timelines).

- Unclear. I agree it is very hard and unlikely, but extreme things can happen in global politics given sufficiently extreme circumstances, and cooperation can do much to shape the pace and direction of tech development.

- Mostly disagree (more on principle than via seeing a stable solution; agree that "just" deciding not to build AGI doesn't work, but culture around AI could be shifted somehow)

#5. Narrow AI is insufficient

Summary: We can't just build a very weak system.

- Agree (assuming #4)

- Agree (this doesn't end the risk period)

- Disagree (can potentially use narrow AI to help humans cooperate)

- (x2) Disagree (more on principle - we should work on how to solve xrisk using somewhat-narrow systems; to be clear, this is a problem to be solved, rather than something we "just do")

#6. Pivotal act is necessary

Summary: We need to align the performance of some large task, a 'pivotal act' that prevents other people from building an unaligned AGI that destroys the world.

- Mostly agree (but disagree connotationally, in that "act" sounds like a single fast move, while an understandable human-timescale strategy is probably enough)

- Unclear (it is necessary to end the acute risk period, but it can be done by humans rather than an AGI)

- Unclear (dislike framing; seems tied up with ability to cooperate)

- Disagree if a pivotal act is a "discrete, powerful, gameboard-flipping action" (as opposed to something that ends the acute risk period)

- Disagree (strongly disagree with pivotal act). Briefly, pivotal acts seem like the wrong framing:

  - It seems like "get people to implement stronger restrictions", "explain misalignment risks", "come up with better regulation" or "differentially improve alignment" are all better applications of an AGI than "do a pivotal act".

  - The pivotal act frame seems to be "there will be a tiny group of people who will have responsibility for saving the world", but the reality seems, if anything, to be closer to the opposite: there will be a tiny group of people that wants to build a tremendously ambitious (and thus also dangerous) AGI, the vast majority of the world would be in support of _not_ building such an AGI (and instead building many more limited systems, which can still deliver large amounts of wealth and/or value), and some set of people representing the larger global population will play a role in getting people not to build/deploy said dangerous AGI. This is an extension of the observation that "AIs should be a major influence on large sectors of society" is probably already a fairly unpopular view today.

#7. There are no weak pivotal acts because a pivotal act requires power

Summary: It takes a lot of power to do something to the current world that prevents any other AGI from coming into existence; nothing which can do that is passively safe in virtue of its weakness.

- Agree (if by pivotal act we mean "discrete, powerful, gameboard-flipping action")

- Agree (if the bar is "prevent any other AGI from coming into existence")

- Agree (with caveats about cooperation)

- Disagree. This may have technical/engineering solutions that don't involve high general-purpose agency, or may not require deploying narrow AI at all.

#8. Capabilities generalize out of desired scope

Summary: The best and easiest-found-by-optimization algorithms for solving problems we want an AI to solve, readily generalize to problems we'd rather the AI not solve.

- Agree (conditional on #5)

#9. A pivotal act is a dangerous regime

Summary: The builders of a safe system would need to operate their system in a regime where it has the capability to kill everybody or make itself even more dangerous, but has been successfully designed to not do that.

- Agree (conditional on #5)

- Agree (if by pivotal act we mean "discrete, powerful, gameboard-flipping action")

- Mostly disagree (because of underlying pivotal act framing). But agree that ML systems will realistically be operating in dangerous regimes. I agree with "Running AGIs doing something pivotal are not passively safe". I’d go further and state that it's likely people will run AGIs doing non-pivotal acts which are nonetheless unsafe. However, I disagree with the (I believe implied) claim that "We should be running AGIs doing something pivotal" (under the author's notion of "pivotal").

- Disagree (human cooperation or humans assisted by narrow AI could end the acute risk period without an AI system having dangerous capabilities)

Section B.1: The distributional leap (#10-15)

Summary

Detailed comments

#10. Large distributional shift to dangerous domains

Summary: On anything like the standard ML paradigm, you would need to somehow generalize optimization-for-alignment you did in safe conditions, across a big distributional shift to dangerous conditions.

- Disagree - why are we counting on OOD generalization here as opposed to optimizing for what we want on the dangerous distribution?

#11. Sim to real is hard

Summary: There's no known case where you can entrain a safe level of ability on a safe environment where you can cheaply do millions of runs, and deploy that capability to save the world.

- Agree (seems like an instance of #7)

- Unclear. Agree that for many important tasks we aren't going to train in safe environments with millions of runs, and in particular not simulated environments, but disagree with underlying pivotal act frame

- Mostly disagree (debate with interpretability could achieve this if it succeeds)

#12. High intelligence is a large shift

Summary: Operating at a highly intelligent level is a drastic shift in distribution from operating at a less intelligent level.

- Mostly disagree (this relies on the sharp left turn, which doesn't seem that likely to me)

#13. Some problems only occur above an intelligence threshold

Summary: Many alignment problems of superintelligence will not naturally appear at pre-dangerous, passively-safe levels of capability.

- Mostly agree - I think many alignment problems will appear before, and also many won't appear until later (or at least, differences-in-degree will become differences-in-kind)

- Not sure (agree some problems would *naturally* first arise for higher intelligence levels, but we can seek out examples for less intelligent systems, e.g. reward tampering & goal misgeneralization examples)

- Mostly disagree (we'll get demos of problems; Eliezer seems to think this will be hard / unlikely to help, though he doesn't say so outright; if so, I disagree with that)

#14. Some problems only occur in dangerous domains

Summary: Some problems seem like their natural order of appearance could be that they first appear only in fully dangerous domains.

- Mostly agree (misleadingly true: we can create analogous examples before the first critical try)

- Mostly agree (but that doesn't mean we can't usefully study them in safe domains)

#15. Capability gains from intelligence are correlated

Summary: Fast capability gains seem likely, and may break lots of previous alignment-required invariants simultaneously.

- Agree (in particular, once we have ~human-level AI, both AI development and the world at-large probably get very crazy very fast)

- Mostly agree (this is my guess about the nature of intelligence, but I'm not sure I'm right)

- Mostly agree, fast need not imply discontinuous

- Unclear (disagree on fast capability gains being likely, agree on breaking invariants given fast gains)

Section B.2: Central difficulties of outer and inner alignment (#16-24)

Summary

Detailed comments

#16. Inner misalignment

Summary: Outer optimization even on a very exact, very simple loss function doesn't produce inner optimization in that direction.

- Unclear - I strongly agree with the weaker claim that we don't get inner alignment for free, but the claim here seems more false than not? Certainly, outer optimization on most loss functions will lead to _more_ inner optimization in that direction (empirically and theoretically)

#17. Can't control inner properties

Summary: On the current optimization paradigm there is no general idea of how to get particular inner properties into a system, or verify that they're there, rather than just observable outer ones you can run a loss function over.

- Agree (at least currently, the requisite interpretability capabilities and/or conceptual understanding of goal-directedness seem inadequate)

- Mostly agree (though uncertain how much this is about the optimization paradigm and how much it's about interpretability)

- Mostly agree (counter: current interpretability)

- (x2) Mostly disagree (interpretability could address this)

#18. No ground truth (no comments)

Summary: There's no reliable Cartesian-sensory ground truth (reliable loss-function-calculator) about whether an output is 'aligned'.

#19. Pointers problem

Summary: There is no known way to use the paradigm of loss functions, sensory inputs, and/or reward inputs, to optimize anything within a cognitive system to point at particular things within the environment.

- Unclear - agreed that we have no principled way of doing this, but we also don't have great examples of this not working, so depends on how strongly this is intended. I don't think "high confidence that this won't work" is justified.

- Agree (modulo the shard theory objection to the strict reading of this)

#20. Flawed human feedback

Summary: Human raters make systematic errors - regular, compactly describable, predictable errors.

- Mostly agree (misleading, a major hope is to have your oversight process be smarter than the model, so that its systematic errors are not ones that the model can easily exploit)

- Unclear (agree denotationally, unclear whether we can build enough self-correction on the most load-bearing parts of human feedback)

#21. Capabilities go further

Summary: Capabilities generalize further than alignment once capabilities start to generalize far.

- Agree (seems similar to #8 and #15)

- Mostly agree (by default; but I think there's hope in the observation that generalizing alignment is a surmountable problem for humans, so it might also be for AGI)

#22. No simple alignment core

Summary: There is a simple core of general intelligence but there is no analogous simple core of alignment.

- Mostly agree (but something like "help this agent" seems like a fairly simple core)

- Unclear (there may exist a system that has alignment as an attractor)

#23. Corrigibility is anti-natural

Summary: Corrigibility is anti-natural to consequentialist reasoning.

- Mostly agree (misleading, I agree with Paul's comment on "Let's see you write that corrigibility tag")

- Mostly agree (I think we can avoid building arbitrarily consequentialist systems)

- Agree with the statement that corrigibility is anti-natural to consequentialist reasoning. Yudkowsky's view seems to be that everything tends towards *pure* consequentialism, and I disagree with that.

#24. Sovereign vs corrigibility

Summary: There are two fundamentally different approaches you can potentially take to alignment [a sovereign optimizing CEV or a corrigible agent], which are unsolvable for two different sets of reasons. Therefore by ambiguating between the two approaches, you can confuse yourself about whether alignment is necessarily difficult.

- Unclear. Agree in principle (but disagree this is happening that much)

- Mostly disagree (agree these are two distinct approaches that should not be confused, disagree that people are confusing them or that they are unsolvable)

- Mostly disagree - I agree that ambiguating between approaches is bad, but am not sure who/what that refers to, and there seems to be some implicit "all the approaches I've seen are non-viable" claim here, which I'd disagree with

Section B.3: Central difficulties of sufficiently good and useful transparency / interpretability (#25-33)

Summary

Detailed comments

#25. Real interpretability is out of reach

Summary: We've got no idea what's actually going on inside the giant inscrutable matrices and tensors of floating-point numbers.

- Agree - interpretability research has made impressive strides, more than I expected in 2017, but is still a far cry from understanding most of what's happening inside the big mass of matmuls

- Mostly agree (misleading, we plausibly do better in the future)

- Mostly agree (we know a little now and not sure if we'll know more or less later)

- Unclear (agree we don't have much idea right now, but I think we can develop better understanding, main uncertainty is whether we can do this in time)

#26. Interpretability is insufficient

Summary: Knowing that a medium-strength system of inscrutable matrices is planning to kill us, does not thereby let us build a high-strength system that isn't planning to kill us.

- Mostly agree (misleading). A major hope is that (by using interpretability to carry out oversight) you simply don’t get the medium strength system that is planning to kill you.

- Mostly agree. Interpretability is helpful for cooperation, and also helps to train a system that doesn't kill you if #27 doesn't hold.

- Mostly agree - on its own, this seems correct, but the tone also understates that it would *massively* change the position we are in. If interpretability conclusively revealed that one of the existing prototypes was trying to kill us, that would dramatically change the {ML, global, AGI lab} conversation around xrisk/alignment

- Mostly agree. Agree denotationally, disagree that this capability wouldn't be super useful

#27. Selecting for undetectability

Summary: Optimizing against an interpreted thought optimizes against interpretability.

- Agree - important point to be careful about

- Mostly agree (misleading, written to suggest that you definitely get the deceptive model, instead of it being unclear whether you get the deceptive or aligned model, which is the actually correct thing)

- Not sure (good to be on the lookout for this, but not sure how much it's an issue in practice)

#28. Large option space (no comments)

Summary: A powerful AI searches parts of the option space we don't, and we can't foresee all its options.

#29. Real world is an opaque domain

Summary: AGI outputs go through a huge opaque domain before they have their real consequences, so we cannot evaluate consequences based on outputs.

- Agree (strongly) - seems particularly important when there are consequences we cannot easily observe/attribute (which seems like the rule in many consequential domains, rather than the exception)

- Mostly agree (we can't just evaluate consequences directly, but may be able to do it by evaluating reasoning)

#30. Powerful vs understandable

Summary: No humanly checkable output is powerful enough to save the world.

- Disagree (verification easier than generation)

- Disagree (due to pivotal act framing)

#31. Hidden deception

Summary: You can't rely on behavioral inspection to determine facts about an AI which that AI might want to deceive you about.

- Agree (for highly intelligent AI)

- Agree (but with interpretability tools we don't have to be restricted to behavioral inspection)

- Agree (I think?) E.g. I agree that we don't have good interpretability ways to check for "what the AI wants" or "what subgoals are relevant here" which is a particularly consequential question where AIs might be deceptive

- Unclear (depends on the system's level of situational awareness)

#32. Language is insufficient or unsafe

Summary: Imitating human text can only be powerful enough if it spawns an inner non-imitative intelligence.

- Mostly agree (misleading, typical plans don't depend on an assumption that it's "imitating human thought")

- Unclear - depends a lot on particular definitions

#33. Alien concepts

Summary: The AI does not think like you do, it is utterly alien on a staggering scale.

- Agree (for highly intelligent AI)

- Unclear (depends on details about the AI)

- Unclear - don't think we know much about how NNs work, and what we do know is ambiguous, though agree with #25 above

- Disagree (natural abstraction hypothesis seems likely true)

Section B.4: Miscellaneous unworkable schemes (#34-36)

Summary

Detailed comments

#34. Multipolar collusion

Summary: Humans cannot participate in coordination schemes between superintelligences.

- Unclear (unconvinced this is an unusually-hard subcase of corrigibility for the AI we'd use to help us)

#35. Multi-agent is single-agent

Summary: Any system of sufficiently intelligent agents can probably behave as a single agent, even if you imagine you're playing them against each other.

- Agree (at a sufficiently high level of intelligence I find it hard to imagine them playing any game we intend)

- Unclear (disagree if this is meant to apply to debate, see Paul's Disagreement #24)

#36. Human flaws make containment difficult (no comments)

Summary: Only relatively weak AGIs can be contained; the human operators are not secure systems.

Section C: "civilizational inadequacy" (#37-43)

Summary

Detailed comments

#37. Optimism until failure

Summary: People have a default assumption of optimism in the face of uncertainty, until encountering hard evidence of difficulty.

- Mostly disagree (humanity seems pretty risk-averse generally, see FDA and other regulatory bodies, or helicopter parenting)

#38. Lack of focus on real safety problems

Summary: AI safety field is not being productive on the lethal problems. The incentives are for working on things where success is easier.

- (x2) Unclear / can't evaluate (depends on field boundaries)

#39. Can't train people in security mindset

Summary: This ability to "notice lethal difficulties without Eliezer Yudkowsky arguing you into noticing them" currently is an opaque piece of cognitive machinery to me, I do not know how to train it into others.

- Unclear (this isn't making a claim about whether others have this machinery or are better at training?)

- Disagree (seems wild to imagine that progress can only happen if someone came up with all the arguments themselves; this seems obviously contradicted by any existing research field)

#40. Can't just hire geniuses to solve alignment

Summary: You cannot just pay $5 million apiece to a bunch of legible geniuses from other fields and expect to get great alignment work out of them.

- Not sure (what have we tried?)

#41. You have to be able to write this list

Summary: Reading this document cannot make somebody a core alignment researcher, you have to be able to write it.

- Mostly agree (but I think it can be one of a suite of things that does produce real alignment research)

- Disagree (I think I both could have and often literally have written the arguments on this list that I agree with; it just doesn't seem like a particularly useful document to me relative to what already existed, except inasmuch as it shocks people into action)

#42. There's no plan

Summary: Surviving worlds probably have a plan for how to survive by this point.

- Unclear (don't know how overdetermined building dangerous AGI is)

- Disagree (depends on what is meant by a "plan", but either I think there's a plan, or I think many surviving worlds don't have a plan)

#43. Unawareness of the risks

Summary: Not enough people have noticed or understood the risks.

- Mostly agree, especially on understanding

- Disagree (you basically have to disagree if you have lower p(doom), there's not really an argument to respond to though)

37 comments


comment by Miranda Zhang (miranda-zhang) · 2022-08-13T17:50:55.445Z

This was interesting and I would like to see more AI research organizations conducting + publishing similar surveys.

↑ comment by Vika · 2022-08-17T16:20:16.775Z

Thanks! For those interested in conducting similar surveys, here is a version of the spreadsheet you can copy (by request elsewhere in the comments).

comment by Vika · 2023-12-20T15:27:42.609Z

I'm glad I ran this survey, and I expect the overall agreement distribution probably still holds for the current GDM alignment team (or may have shifted somewhat in the direction of disagreement), though I haven't rerun the survey so I don't really know. Looking back at the "possible implications for our work" section, we are working on basically all of these things.

Thoughts on some of the cruxes in the post based on last year's developments:

- Is global cooperation sufficiently difficult that AGI would need to deploy new powerful technology to make it work?

  - There has been a lot of progress on AGI governance and broad endorsement of the risks this year, so I feel somewhat more optimistic about global cooperation than a year ago.

- Will we know how capable our models are?

  - The field has made some progress on designing concrete capability evaluations - how well they measure the properties we are interested in remains to be seen.

- Will systems acquire the capability to be useful for alignment / cooperation before or after the capability to perform advanced deception?

  - At least so far, deception and manipulation capabilities seem to be lagging a bit behind usefulness for alignment (e.g. model-written evals / critiques, weak-to-strong generalization), but this could change in the future.

- Is consequentialism a powerful attractor? How hard will it be to avoid arbitrarily consequentialist systems?

  - Current SOTA LLMs seem surprisingly non-consequentialist for their level of capability. I still expect LLMs to be one of the safest paths to AGI in terms of avoiding arbitrarily consequentialist systems.

I hoped to see other groups do the survey as well - looks like this didn't happen, though a few people asked me to share the template at the time. It would be particularly interesting if someone ran a version of the survey with separate ratings for "agreement with the statement" and "agreement with the implications for risk".

comment by Ruby · 2022-09-17T05:10:48.934Z

Curated. I appreciate the DeepMind alignment team taking the time to engage with Eliezer's list and write up their thoughts. It feels helpful to know which particular points have a relative consensus and which are controversial, all the more so when they offer reasons. And in the specific case of this list, which claims failure is nigh certain, it's interesting to see on which points people disagree and think there's hope.

comment by Andrew_Critch · 2022-08-14T20:33:24.872Z

While I agree with much of this content, I think you guys (the anonymous authors) are most likely to be wrong in your disagreement with the "alien concepts" point (#33).

↑ comment by Andrew_Critch · 2022-08-14T20:45:15.101Z

To make a more specific claim (to be evaluated separately), I mostly expect this due to speed advantage, combined with examples of how human concepts are alien relative to those of analogously speed-disadvantaged living systems. For instance, most plants and somatic (non-neuronal) animal components use a lot of (very slow) electrical signalling to make very complex decisions (e.g., morphogenesis and healing; see Michael Levin's work on reprogramming regenerative organisms by decoding their electrical signalling). To the extent that these living systems (plants, and animal-parts) utilize "concepts" in the course of their complex decision-making, at present they seem quite alien to us, and many people (including some likely responders to this comment) will say that plants and somatic animal components entirely lack intelligence and do not make decisions. I'm not trying to argue for some kind of panpsychism or expanding circle of compassion here, just pointing out a large body of research (again, start with Levin) showing complex and robust decision-making within plants and (even more so) animal bodies, which humans consider relatively speaking "unintelligent" or at least "not thinking in what we regard to be valid abstract concepts", and I think there will be a similar disparity between humans and A(G)I after it runs for a while (say, 1000 subjective civilization-years, or a few days to a year of human-clock-time).

↑ comment by Rohin Shah (rohinmshah) · 2022-08-15T05:57:18.455Z

I expect lots of alien concepts in domains where AI far surpasses humans (e.g. I expect this to be true of AlphaFold). But if you look at the text of the ruin argument:

> Nobody knows what the hell GPT-3 is thinking, not only because the matrices are opaque, but because the stuff within that opaque container is, very likely, incredibly alien - nothing that would translate well into comprehensible human thinking, even if we could see past the giant wall of floating-point numbers to what lay behind.

I think this is pretty questionable. I expect that a good chunk of GPT-3's cognition is something that could be translated into something comprehensible, mostly because I think humans are really good at language and GPT-3 is only somewhat better on some axes (and worse on others). I don't remember what I said on this survey but right now I'm feeling like it's "Unclear", since I expect lots of AIs to have lots of alien concepts, but I don't think I expect quite as much alienness as Eliezer seems to expect.

(And this does seem to materially change how difficult you expect alignment to be; on my view you can hope that in addition to all the alien concepts the AI also has regular concepts about "am I doing what my designers want" or "am I deceiving the humans" which you could then hope to extract with interpretability.)

↑ comment by qbolec · 2022-11-03T08:00:38.925Z

Also, I wonder to what extent our own "thinking" is based on concepts we ourselves understand. I'd bet I don't really understand what concepts most of my own thinking processes use.

Like: what are the exact concepts I use when I throw a ball? Is there a term for velocity, the gravitational constant or air friction, or is it just some completely "alien" computation that has been "inlined" and "tree-shaken" of any unneeded abstractions, and that just sends motor outputs given the target position?

Or: what concepts do I use to know what word to place at this place in this sentence? Do I use concepts like "subject", or "verb" or "sentiment", or rather just go with the flow subconsciously, having just a vague idea of the direction I am going with this argument?

Or: what concepts do I really use when deciding to rotate the steering wheel 2 degrees to the right when driving a car through a forest road's gentle turn? Do I think about "angles", "asphalt", "trees", "centrifugal force", "tire friction", or rather just try to push the future into the direction where the road ahead looks more straight to me and somehow I just know that this steering wheel is "straightening" the image I see?

Or: how exactly do I solve (not: verify an already written proof) a math problem? How does the solution pop into my mind? Is there some systematic search over all possible terms and derivations, or rather some giant hash-map-like interconnected store of "related tricks and transformations I've seen before" which get proposed?

I think my point is that we should not conflate the way we actually solve problems (subconsciously?) with the way we talk (consciously) about solutions we've already found, either when trying to verify them ourselves (the inner monologue) or when conveying them to another person. First of all, the Release and Debug binaries can differ (riding a bike for the first time is a completely different experience from riding it on the 2000th attempt). Second, the on-the-wire format and the data structure before serialization can be very different (the way I explain how to solve an equation to my kid is not exactly how I solve it).

I think that training a separate AI to interpret the inner workings of another AI for us is risky, in the same way that a Public Relations department or a lawyer doesn't necessarily give you an honest picture of what the client is really up to.

Also, there's much talk about the distinction between System 1 and System 2, or the subconscious and consciousness, etc.

But do we really take seriously the implication of all that: the concepts the conscious part of our mind uses to "explain" subconscious actions have almost nothing to do with how those actions actually happened. If we force the AI to use these concepts, it will either lie to us ("Your honor, as we shall soon see, the defendant wanted to..."), or be crippled (have you tried to drive a car using just the concepts from a physics textbook?). But even the latter case looks like a lie to me, because even if the AI really is using the concepts it claims, seems, or is reported to be using, there's still the mismatch in myself: I think I now understand that the AI works just like me, while in reality I work completely differently than I thought. How bad that is depends on the problem domain, IMHO. This might be pretty good if the AI is trying to solve a problem like "how to throw a ball" and a program using physics equations is actually also a good way of doing it. But once we get to more complicated stuff, like operating an autonomous drone on the battlefield or governing a country's budget, I think there's a risk, because we don't really know how we ourselves make these kinds of decisions.

↑ comment by Prometheus · 2022-09-18T05:15:28.673Z

Yes, this surprised me too. Perhaps it was the phrasing that they disagreed with? If you asked them about all possible intelligences in mindspace, and asked them whether they thought AGI would fall very close to most human minds, maybe their answer would be different.

comment by AntonTimmer · 2022-08-13T12:31:38.110Z

As far as I can tell, the major disagreements are about us having a plan and taking a pivotal act. There seems to be a general "consensus" (Unclear, Mostly Agree, Agree) about what the problems are and how an AGI might look. If no pivotal act is needed, then either you think that we will be able to tackle this problem with the resources we have and will have, you have (way) longer timelines (let's assume Eliezer's timeline is 2032 for argument's sake), or you expect the world to make a major shift in priorities concerning AGI.

Am I correct in assuming this, or am I missing some alternatives?

↑ comment by Rohin Shah (rohinmshah) · 2022-08-15T06:14:14.155Z

(I'm on the DeepMind alignment team)

There's a fair amount of disagreement within the team as well. I'll try to say some things that I think almost everyone on the team would agree with, but I could easily be wrong about that.

> you think that we will be able to tackle this problem with the resources we have and will have

Presumably even on a pivotal act framing, we also have to execute a pivotal act with the resources we have and will have, so I'm not really understanding what the distinction is here? But I'm guessing that this is closest to "our" belief of the options you listed.

Note that this doesn't mean that "we" think you can get x-risk down to zero; it means that "we" think that non-pivotal-act strategies reduce x-risk more than pivotal-act strategies.

↑ comment by AntonTimmer · 2022-08-15T10:43:32.477Z

I misused the definition of a pivotal act which makes it confusing. My bad!

I understood the phrase "pivotal act" more in the spirit of an out-of-distribution effort. To rephrase it more clearly: do "you" think an out-of-distribution effort is needed right now? For example, sacrificing the long term (20 years) for the short term (5 years), or going for high-risk, high-reward strategies.

Or should we stay on our current trajectory, since it maximizes our chances of winning? (Which, as far as I can tell, is "your" opinion.)

↑ comment by Rohin Shah (rohinmshah) · 2022-08-15T21:49:27.388Z

To the extent I understand you (which at this point I think I do), yes, "we" think we should stay on our current trajectory.

comment by Andrew_Critch · 2022-08-14T20:25:46.098Z

I broadly agree with this post, especially the "(unilateral) pivotal acts are not the right approach" aspect.

↑ comment by ClipMonger · 2022-12-02T09:12:09.371Z

The pivotal acts proposed are extremely specific solutions to specific problems, and are only applicable in very specific scenarios of AI clearly being on the brink of vastly surpassing human intelligence. That should be clarified whenever they are brought up; it's a thought experiment solution to a thought experiment problem, and if it suddenly stops being a thought experiment then that's great because you have the solution on a silver platter.

comment by trevor (TrevorWiesinger) · 2022-08-13T02:57:58.229Z

This looks like it's worth a whole lot of funding

comment by Noosphere89 (sharmake-farah) · 2022-08-14T21:21:39.657Z

My viewpoint is that the most dangerous risks rely on inner alignment issues, and that is basically because of very bad transparency tools, instrumental convergence issues toward power and deception, and mesa-optimizers essentially ruining what outer alignment you have. If you could figure out a reliable way to detect or make sure that deceptive models could never be reached in your training process, that would relieve a lot of my fears of X-risk from AI.

I actually think Eliezer is underrating civilizational competence once AGI is released, via the MNM effect, as happened with Covid; unfortunately, this only buys time before the end. A superhuman intelligence that is deceiving human civilization based on instrumental convergence will essentially win barring pivotal acts, as Eliezer says. The goal of AI safety is to make alignment not dependent on heroic, pivotal actions.

So Andrew Critch's hope of not needing pivotal acts only works if significant portions of the alignment problem are solved, or at least ameliorated, which we are not super close to doing. So whether alignment will require pivotal acts directly depends on solving the alignment problem more generally.

Pivotal acts are a worse solution to alignment and shouldn't be thought of as the default solution, but it is a back-pocket solution we shouldn't forget about.

If I had to name a crux between Eliezer Yudkowsky's/Rob Bensinger's/Nate Soares's/MIRI's views and the views of DeepMind's Safety Team or Andrew Critch, it's whether the alignment problem is foresight-loaded (and thus civilization will be incompetent, and safety will require more pivotal acts) or empirically loaded, where we don't need to see the bullets in advance (and thus civilization could be more competent, and pivotal acts matter less). It's an interesting crux to be sure.

PS: Does DeepMind's Safety Team have real power to disapprove AI projects? Or is it like the Google Ethics team, which had no power to disapprove AI projects without its members being fired?

↑ comment by Vika · 2022-08-17T16:57:12.095Z

We don't have the power to shut down projects, but we can make recommendations and provide input into decisions about projects

↑ comment by Noosphere89 (sharmake-farah) · 2022-08-17T17:03:16.459Z

So you can have non-binding recommendations and input, but no actual binding power over the capabilities researchers, right?

↑ comment by Vika · 2022-08-17T17:56:46.934Z

Correct. I think that doing internal outreach to build an alignment-aware company culture and building relationships with key decision-makers can go a long way. I don't think it's possible to have complete binding power over capabilities projects anyway, since the people who want to run the project could in principle leave and start their own org.

↑ comment by Dave Orr (dave-orr) · 2022-08-16T23:01:44.362Z

What's the MNM effect?

↑ comment by Noosphere89 (sharmake-farah) · 2022-08-16T23:49:35.159Z

The MNM effect is essentially that people can react strongly to disasters once they happen, since they don't want to die, and this can prevent the worst outcomes from happening. It's a short-term response, but it can become a control system.

Here's a link: https://www.lesswrong.com/posts/EgdHK523ZM4zPiX5q/coronavirus-as-a-test-run-for-x-risks

comment by mukashi (adrian-arellano-davin) · 2022-08-14T03:51:56.573Z

I have to say these guys seem much saner to me than most people writing about AI on LW.

comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2022-08-15T17:57:39.555Z

Request: could you make a version of this (e.g. with all of your responses stripped) that I/anyone can make a copy of?

↑ comment by Vika · 2022-08-17T15:46:52.532Z

Here is a spreadsheet you can copy. This one has a column for each person - if you want to sort the rows by agreement, you need to do it manually after people enter their ratings. I think it's possible to automate this but I was too lazy.
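The manual step described above (sorting each row from Agree to Disagree once people have entered their ratings) can be illustrated with a small script. This is a minimal sketch in Python, not how the linked sheet actually works: it assumes the ratings have been exported as a mapping from each argument to a list of labels, and that the labels follow the five-point scale Agree / Mostly agree / Unclear / Mostly disagree / Disagree. The names `RATING_ORDER`, `sort_row_by_agreement` and `sort_table`, and the sample ratings, are hypothetical.

```python
# Hypothetical rating scale, ordered from most to least agreement.
# Assumption: the sheet uses these five labels; edit to match the real ones.
RATING_ORDER = ["Agree", "Mostly agree", "Unclear", "Mostly disagree", "Disagree"]


def sort_row_by_agreement(ratings):
    """Return one argument's ratings sorted from Agree to Disagree.

    Labels are matched case-insensitively. Blank cells and labels not in
    RATING_ORDER go to the end; sorted() is stable, so they keep their order.
    """
    rank = {label.lower(): i for i, label in enumerate(RATING_ORDER)}
    fallback = len(RATING_ORDER)  # rank used for blanks / unknown labels
    return sorted(ratings, key=lambda r: rank.get(r.strip().lower(), fallback))


def sort_table(rows):
    """Sort every row of an {argument: [ratings]} table independently.

    Because each row is sorted on its own, a column no longer corresponds
    to a specific person after sorting.
    """
    return {arg: sort_row_by_agreement(r) for arg, r in rows.items()}


if __name__ == "__main__":
    # Made-up sample ratings, for illustration only.
    table = {
        "argument_1": ["Disagree", "Unclear", "Mostly agree", "Disagree"],
        "argument_2": ["Unclear", "Agree", "", "Mostly Disagree"],
    }
    for argument, ratings in sort_table(table).items():
        print(argument, ratings)
```

Sorting each row independently mirrors the anonymized tables in the post, where columns do not track individual respondents; keeping the unsorted per-person columns separately preserves attribution if it is needed.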

comment by Signer · 2022-08-13T12:03:11.258Z

> It seems like “get people to implement stronger restrictions” or “explain misalignment risks” or “come up with better regulation” or “differentially improve alignment” are all better applications of an AGI than “do a pivotal act”.

Is there any justification for this? I mean, it's easy to see how you can "explain misalignment risks", but its usefulness depends on eventually stopping every single PC owner from destroying the world. Similarly with “differentially improve alignment”: what are you going to do with a new aligned superhuman AI, give it to every factory owner? It doesn't sound like it's actually optimized for survival.

↑ comment by Noosphere89 (sharmake-farah) · 2022-08-13T13:12:52.221Z

Thankfully, the Landauer limit as well as current results in AI mean we probably don't have to do a pivotal act, because for the foreseeable future only companies will be able to create AGI.

↑ comment by Signer · 2022-08-13T13:58:58.401Z

"Companies develop AGI in the foreseeable future" means that soon afterward they will be able to improve the technology to the point where not only they can do it. If the plan is "keep AGI to ourselves", then OK, but that still leaves the question of "until what?".

↑ comment by Noosphere89 (sharmake-farah) · 2022-08-13T14:21:07.863Z

I would say that companies should stop racing to AGI. AGI, if it is ever to be built, should only come after we have solved at least inner alignment.

comment by Prometheus · 2022-09-18T05:24:24.553Z

Great analysis! I’m curious about the disagreement with needing a pivotal act. Is this disagreement more epistemic or normative? That is to say, do you think they assign a very low probability to needing a pivotal act to prevent misaligned AGI? Or do they have concerns about the potential consequences of this mentality (people competing with each other to create powerful AGI, accidentally creating a misaligned AGI as a result, public opinion, etc.)?

↑ comment by Vika · 2022-10-25T21:07:36.101Z

I would say the primary disagreement is epistemic - I think most of us would assign a low probability to a pivotal act defined as "a discrete action by a small group of people that flips the gameboard" being necessary. We also disagree on a normative level with the pivotal act framing, e.g. for reasons described in Critch's post on this topic.

↑ comment by Noosphere89 (sharmake-farah) · 2022-09-23T00:42:50.332Z

If I had to say why I'd disagree with a pivotal act framing, PR would probably be the obvious risk, but another risk is that this type of thing is easy to politicize, and by default there are no guardrails.

It will be extremely tempting to change the world to fit your ideology and politics, and that gets very dangerous very quickly.

Unfortunately, this might really happen: we could be in the unhappy scenario where pivotal acts are required. But if it ever comes to that, they will need to be narrow pivotal acts, and the political system shouldn't be changed unless the change relates to AI Safety. Narrowness is a virtue, and one of the most important ones during pivotal acts.

comment by Adrien Chauvet (adrien-chauvet) · 2023-01-27T01:03:25.974Z

> #4. Can't cooperate to avoid AGI

Maybe we can. This is how the Montreal Protocol came about: scientists discovered that chlorofluorocarbons were bad for the ozone layer. Governments believed them, the Montreal Protocol was signed, and CFC use fell by 99.7%, leading to the stabilization of the ozone layer. It is perhaps the greatest example of global cooperation in history.

It took around 15 years from the time scientists discovered that chlorofluorocarbons were causing a major problem to the time the Montreal Protocol was adopted.

How can scientists convince the world to cooperate on AGI alignment in less time?

↑ comment by mukashi (adrian-arellano-davin) · 2023-01-27T03:19:27.168Z

They haven't managed to do it so far for climate change, which has received massively more attention than AGI. I have seen this example used many times to argue that we can indeed succeed at coordinating on major challenges, but I think this case is misleading: CFCs never played a major role in the economy, and they were easily replaceable, so banning them was not such a costly move.