(The) Lightcone is nothing without its people: LW + Lighthaven's big fundraiser

post by habryka (habryka4) · 2024-11-30T02:55:16.077Z · LW · GW · 269 comments

Update Jan 19th 2025: The Fundraiser is over! We had raised over $2.1M when the fundraiser closed, and have a few more irons in the fire that I expect will get us another $100k-$200k. This is short of our $3M goal, which I think means we will have some difficulties in the coming year, but it is over our $2M goal, which, had we missed it, would probably have meant we would stop existing or have to make very extensive cuts. Thank you so much to everyone who contributed. Seeing so many people give so much has been very heartening.

TLDR: LessWrong + Lighthaven need about $3M for the next 12 months. Donate or send me an email, DM, Signal message (+1 510 944 3235), or public comment on this post, if you want to support what we do. We are a registered 501(c)(3), have big plans for the next year, and due to a shifting funding landscape need support from a broader community more than in any previous year.[1]

I've been running LessWrong/Lightcone Infrastructure for the last 7 years. During that time we have grown into the primary infrastructure provider for the rationality and AI safety communities. "Infrastructure" is a big fuzzy word, but in our case, it concretely means:

- We build and run LessWrong.com and the AI Alignment Forum.[2]

- We built and run Lighthaven (lighthaven.space), a ~30,000 sq. ft. campus in downtown Berkeley where we host conferences, research scholars, and various programs dedicated to making humanity's future go better.

- We are active leaders of the rationality and AI safety communities, running conferences and retreats, participating in discussion on various community issues, noticing and trying to fix bad incentives, building grantmaking infrastructure, talking to people who want to get involved, and lots of other things.

In general, Lightcone considers itself responsible for the end-to-end effectiveness of the extended rationality and AI safety community. If there is some kind of coordination failure, or part of the engine of impact that is missing, I aim for Lightcone to be an organization that can jump in and fix that, whatever it is.

Doing that requires a non-trivial amount of financial capital. For the next 12 months, we expect to spend around $3M, and in subsequent years around $2M (though we have lots of opportunities to scale up if we can get more funding for it). We currently have around $200k in the bank.[3]

Lightcone is, as far as I can tell, considered cost-effective by the large majority of people who have thought seriously about how to reduce existential risk and have considered Lightcone as a donation target, including all of our historical funders. Those funders can largely no longer fund us, or expect to fund us less, for reasons mostly orthogonal to cost-effectiveness (see the section below on "Lightcone and the funding ecosystem" for details on why). Additionally, many individuals benefit from our work, and I think it makes sense for those people to support the institutions that provide them value.

This, I think, creates a uniquely strong case for people reading this to donate to us.[4]

I personally think there exists no organization that has been more cost-effective at reducing AI existential risk in the last 5 years, and I think that's likely to continue to be the case in the coming 5 years. Our actions seem to me responsible for a substantial fraction of the positive effects of the field of AI safety, and have also substantially alleviated the negative effects of our extended social cluster (which I think are unfortunately in-expectation of comparable magnitude to the positive effects, with unclear overall sign).

Of course, claiming to be the single most cost-effective intervention out there is a big claim, and one I definitely cannot make with great confidence. But the overall balance of evidence seems to me to lean this way, and I hope in this post to show you enough data and arguments that you feel comfortable coming to your own assessment.

This post is a marathon, so strap in and get comfortable. Feel free to skip to any section of your choice (the ToC on the left, or in the hamburger menu is your friend). Also, ask me questions in the comments (or in DMs), even if you didn't read the whole post.

Now let's zoom out a bit and look at some of the big picture trends and data of the projects we've been working on in the last few years and see what they tell us about Lightcone's impact:

LessWrong

Here are our site metrics from 2017 to 2024:

On almost all metrics, we've grown the activity levels of LessWrong by around 4-5x since 2017 (and ~2x since the peak of LW 1.0). In more concrete terms, this has meant something like the following:

- ~30,000 additional[5] posts with ~50,000,000 words written

- ~100,000 additional comments with (also) ~50,000,000 words written

- ~20,000,000 additional unique users have visited LessWrong with ~70,000,000 additional post visits

You will also quickly notice that many metrics peaked in 2023, not 2024. This is largely downstream of the launch of ChatGPT, Eliezer's "List of Lethalities" and Eliezer's TIME article, which caused a pretty huge spike in traffic and activity on the site. That spike is now over and we will see where things settle in terms of growth and activity. The collapse of FTX also caused a reduction in traffic and activity of practically everything Effective Altruism-adjacent, and I expect we are also experiencing some of that (though much less than more centrally EA-associated platforms like 80,000 Hours and the EA Forum, as far as I can tell).

While I think these kinds of traffic statistics are a very useful "sign of life" and sanity-check that what we are doing is having any effect at all in the grand scale of things, I don't think they are remotely sufficient for establishing we are having a large positive impact.

One way to get closer to an answer to that question is to decompose it into two questions: "Do the writings and ideas from LessWrong influence important decision-makers?" and "Does LessWrong make its readers & writers more sane?".

I expect the impact of LessWrong to end up extremely heavy-tailed, with a large fraction of the impact coming from a very small number of crucial decision-makers having learned something of great importance on a highly leveraged issue (e.g. someone like Geoffrey Hinton becoming concerned about AI existential risk, or an essay on LW opening the Overton window at AI capability companies to include AI killing everyone, or someone working on an AI control strategy learning about some crucial component of how AIs think that makes things work better).

Does LessWrong influence important decisions?

It's tricky to establish whether reading LessWrong causes people to become more sane and better informed on key issues. It is however relatively easy to judge whether LessWrong is being read by some of the most important decision-makers of the 21st century, or whether it is indirectly causing content to be written that is being read by the most important decision-makers of the 21st century.

I think the extent of our memetic reach was unclear for a few years, but there is now less uncertainty. Among the leadership of the biggest AI capability companies (OpenAI, Anthropic, Meta, DeepMind, xAI), at least 4/5 have clearly been heavily influenced by ideas from LessWrong.[6] While the effect outside of Silicon Valley tech and AI is less clear, things look promising to me there too:

Matt Clifford, CEO of Entrepreneur First and Chair of the UK’s ARIA, recently said on a podcast (emphasis mine):

Jordan Schneider: What was most surprising to you in your interactions during the build-up to the summit, as well as over the course of the week?

Matt Clifford: When we were in China, we tried to reflect in the invite list a range of voices, albeit with some obvious limitations. This included government, but also companies and academics.

But one thing I was really struck by was that the taxonomy of risks people wanted to talk about was extremely similar to the taxonomy of risks that you would see in a LessWrong post or an EA Forum post.

I don't know enough about the history of that discourse to know how much of that is causal. It's interesting that when we went to the Beijing Academy of AI and got their presentation on how they think about AI risk safety governance, they were talking about autonomous replication and augmentation. They were talking about CBRN and all the same sort of terms. It strikes me there has been quite a lot of track II dialogue on AI safety, both formal and informal, and one of the surprises was that we were actually starting with a very similar framework for talking about these things.

Patrick Collison talks on the Dwarkesh podcast about Gwern’s writing on LW and his website:

How are you thinking about AI these days?

Everyone has to be highly perplexed, in the sense that the verdict that one might have given at the beginning of 2023, 2021, back, say, the last eight years — we're recording this pretty close to the beginning of 2024 — would have looked pretty different.

Maybe Gwern might have scored the best from 2019 or something onwards, but broadly speaking, it's been pretty difficult to forecast.

Lina Khan (head of the FTC) answering a question about her “p(doom)”, a concept that originated in LessWrong comments.

Does LessWrong make its readers/writers more sane?

I think this is a harder question to answer. I think online forums and online discussion tend to have a pretty high-variance effect on people's sanity and quality of decision-making. Many people's decision-making seems to have gotten substantially worse by becoming very involved with Twitter, and many subreddits seem to me to have similarly well-documented cases of smart people becoming markedly less sane.

We have tried a lot of things to make LessWrong have less of these sorts of effects, though it is hard to tell how much we have succeeded. We definitely have our own share of frustrating flame wars and tribal dynamics that make reasoning hard.

One proxy that seems useful to look at is something like, "did the things that LessWrong paid attention to before everyone else turn out to be important?". This isn't an amazing proxy for sanity, but it does tell you whether you are sharing valuable information. In market terms, it tells you how much alpha there is in reading LessWrong.

I think on information alpha terms, LessWrong has been knocking it out of the park over the past few years. Its very early interest in AI, early interest in deep learning, early interest in crypto, early understanding of the replication crisis, early interest in the COVID pandemic and early interest in prediction markets all have paid off handsomely, and indeed many LessWrong readers have gotten rich off investing in the beliefs they learned from the site (buying crypto and Nvidia early, and going long volatility before the pandemic, sure gives you high returns).[7]

On a more inside-view-y dimension, I have enormously benefitted from my engagement with LessWrong, and many of the people who seem to me to be doing the best work on reducing existential risk from AI and improving societal decision-making seem to report the same. I use many cognitive tools I learned on LessWrong on a daily level, and rarely regret reading things written on the site.

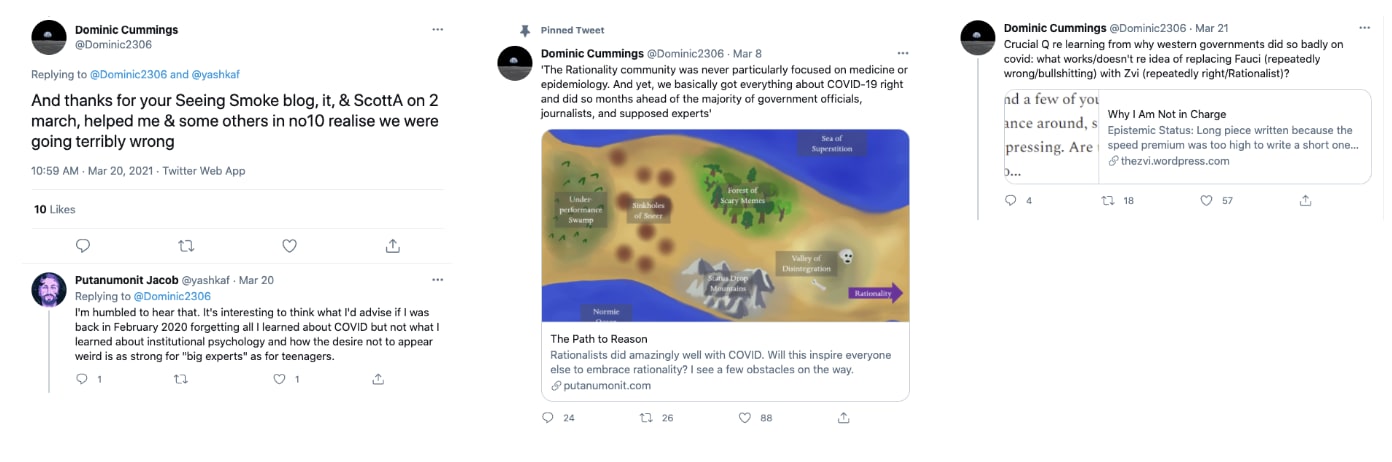

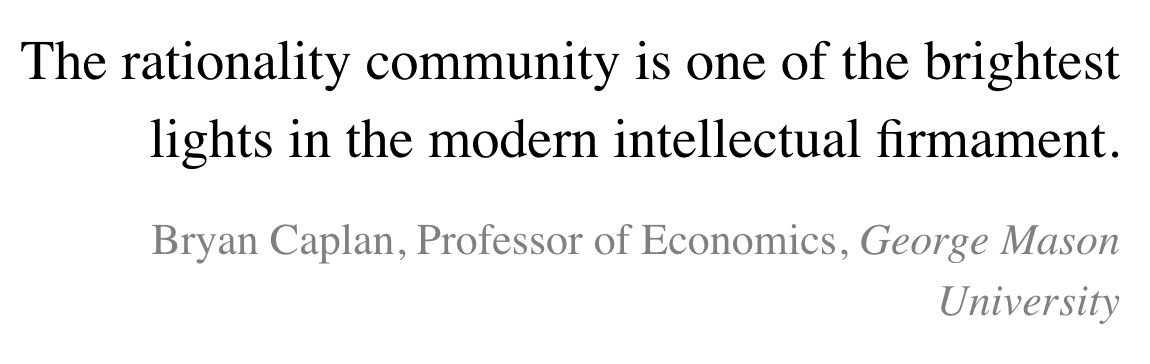

Some quotes and endorsements to this effect:


LessWrong and intellectual progress

While I think ultimately things on LessWrong have to bottom out in people making better decisions of some kind, I often find it useful to look at a proxy variable of something like "intellectual progress". When I think of intellectual progress, I mostly think about either discovering independently verifiable short descriptions of phenomena that previously lacked good explanations, or distilling ideas in ways that are clearer and more approachable than any previous explanation.

LessWrong hosts discussion about a very wide variety of interesting subjects (genetic engineering, obesity, US shipping law, Algorithmic Bayesian Epistemology, anti-aging, homemade vaccines, game theory, and of course the development of the art of rationality), but the single biggest topic on LessWrong is artificial intelligence and its effects on humanity's long term future. LessWrong is the central discussion and publication platform for a large ecosystem of people who discover, read, and write research about the problems facing us in the development of AI.

I think the ideas developed here push the frontier of human civilization's understanding of AI, how it will work, and how to navigate its development.

- I primarily think this because I read the essays and I build my own models of AI and how the work here connects, and I find useful insights.

- I also believe it because I see people here engaged in answering the kinds of questions I would want to be researching if I were working on the problem (e.g. building concrete threat models involving AIs, trying to get a better fundamental understanding of agency and optimization and intelligence, analyzing human civilization's strategic position with respect to developing AI and negotiating with AI, and more).

- I also believe it because of the reports I read from other people who I respect.

This next section primarily consists of the latter sort of evidence, which is the only one I can really give you in a short amount of space.

Public Accessibility of the Field of AI Alignment

In 2017, trying to understand and contribute to the nascent field of AI alignment using the public written materials was basically not possible (or took 200+ hrs). Our goal with the AI Alignment Forum was to move the field of AI alignment from primarily depending on people's direct personal conversations with a few core researchers (at the time focused around MIRI and Paul Christiano) to being a field whose core ideas could be learned via engaging with the well-written explanations and discussions online.

I think we largely achieved this basic goal. By 2020 many people had a viable route into the field by spending 20-30 hours engaging with the best LessWrong content. DeepMind's Rohin Shah agreed, writing in 2020 that “the AI Alignment Forum improved our pedagogic materials from 0.1 to 3 out of 10.”

To show this, below I've collected some key posts, along with testimonials about them from researchers and LW contributors.

Paul Christiano's Research Agenda FAQ was published in 2018 by Alex Zhu (independent).

Evan Hubinger (Anthropic): “Reading Alex Zhu's Paul agenda FAQ was the first time I felt like I understood Paul's agenda in its entirety as opposed to only understanding individual bits and pieces. I think this FAQ was a major contributing factor in me eventually coming to work on Paul's agenda.”

Eli Tyre: “I think this was one of the big, public, steps in clarifying what Paul is talking about.”

An overview of 11 proposals for building safe advanced AI by Evan Hubinger (Anthropic) in May 2020

Daniel Kokotajlo (AI Futures Project): “This post is the best overview of the field so far that I know of… Since it was written, this post has been my go-to reference both for getting other people up to speed on what the current AI alignment strategies look like (even though this post isn't exhaustive). Also, I've referred back to it myself several times. I learned a lot from it.”

Niplav: “I second Daniel's comment and review, remark that this is an exquisite example of distillation, and state that I believe this might be one of the most important texts of the last decade.”

It Looks Like You're Trying To Take Over The World by Gwern (Gwern.net) in March 2022

Garrett Baker (Independent): "Clearly a very influential post on a possible path to doom from someone who knows their stuff about deep learning! There are clear criticisms, but it is also one of the best of its era. It was also useful for even just getting a handle on how to think about our path to AGI."[8]

Counterarguments to the basic AI x-risk case by Katja Grace in October 2022

Vika Krakovna (DeepMind safety researcher, cofounder of the Future of Life Institute): “I think this is still one of the most comprehensive and clear resources on counterpoints to x-risk arguments. I have referred to this post and pointed people to a number of times. The most useful parts of the post for me were the outline of the basic x-risk case and section A on counterarguments to goal-directedness (this was particularly helpful for my thinking about threat models and understanding agency).”

If you want to read more examples of this sort of thing, ten more posts with testimonials follow.

10 more LW posts with testimonials

Embedded Agency is a mathematical cartoon series published in 2018 by MIRI researchers Scott Garrabrant and Abram Demski.

Rohin Shah (DeepMind): “I actually have some understanding of what MIRI's Agent Foundations work is about.”

John Wentworth (Independent): “This post (and the rest of the sequence) was the first time I had ever read something about AI alignment and thought that it was actually asking the right questions.”

David Manheim (FHI): “This post has significantly changed my mental model of how to understand key challenges in AI safety… the terms and concepts in this series of posts have become a key part of my basic intellectual toolkit.”

Risks from Learned Optimization is the canonical explanation of the concept of inner optimizers, by Hubinger et al in 2019.

Daniel Filan (Center for Human-Compatible AI): “I am relatively convinced that mesa-optimization… is a problem for AI alignment, and I think the arguments in the paper are persuasive enough to be concerning… Overall, I see the paper as sketching out a research paradigm that I hope to see fleshed out.”

Rohin Shah (DeepMind): “...it brought a lot more prominence to the inner alignment problem by making an argument for it in a lot more detail than had been done before… the conversation is happening at all is a vast improvement over the previous situation of relative (public) silence on the problem.”

Adam Shimi (Conjecture): “For me, this captures what makes this sequence and corresponding paper a classic in the AI Alignment literature: it keeps on giving, readthrough after readthrough.”

Inner Alignment: Explain like I'm 12 Edition by Rafael Harth (Independent) in August 2020

David Manheim (FHI): "This post is both a huge contribution, giving a simpler and shorter explanation of a critical topic, with a far clearer context, and has been useful to point people to as an alternative to the main sequence"

The Solomonoff Prior is Malign by Mark Xu (Alignment Research Center) in October 2020

John Wentworth: “This post is an excellent distillation of a cluster of past work on malignness of Solomonoff Induction, which has become a foundational argument/model for inner agency and malign models more generally.”

Vanessa Kosoy (MIRI): “This post is a review of Paul Christiano's argument that the Solomonoff prior is malign, along with a discussion of several counterarguments and countercounterarguments. As such, I think it is a valuable resource for researchers who want to learn about the problem. I will not attempt to distill the contents: the post is already a distillation, and does a fairly good job of it.”

Fun with +12 OOMs of Compute by Daniel Kokotajlo (AI Futures Project) in March 2021

Zach Stein-Perlman (AI Lab Watch): “The ideas in this post greatly influence how I think about AI timelines, and I believe they comprise the current single best way to forecast timelines.”

nostalgebraist: “This post provides a valuable reframing of a common question in futurology: "here's an effect I'm interested in -- what sorts of things could cause it?"”

Another (outer) alignment failure story by Paul Christiano (US AISI) in April 2021

1a3orn: “There's a scarcity of stories about how things could go wrong with AI which are not centered on the "single advanced misaligned research project" scenario. This post (and the mentioned RAAP post by Critch) helps partially fill that gap.”

What Multipolar Failure Looks Like, and Robust Agent-Agnostic Processes (RAAPs) by Andrew Critch (Center for Human-Compatible AI) in April 2021

Adam Shimi: “I have made every person I have ever mentored on alignment study this post. And I plan to continue doing so. Despite the fact that I'm unconvinced by most timeline and AI risk scenarios post. That's how good and important it is.”

Selection Theorems: A Program For Understanding Agents by John Wentworth (Independent) in September 2021

Vika Krakovna (DeepMind safety researcher, cofounder of the Future of Life Institute): “I like this research agenda because it provides a rigorous framing for thinking about inductive biases for agency and gives detailed and actionable advice for making progress on this problem. I think this is one of the most useful research directions in alignment foundations since it is directly applicable to ML-based AI systems.”

MIRI announces new "Death With Dignity" strategy by Eliezer Yudkowsky (MIRI) in April 2022

John Wentworth: "Based on occasional conversations with new people, I would not be surprised if a majority of people who got into alignment between April 2022 and April 2023 did so mainly because of this post. Most of them say something like "man, I did not realize how dire the situation looked" or "I thought the MIRI folks were on it or something"."

Let’s think about slowing down AI by Katja Grace (AI Impacts) in December 2022

Eli Tyre: “This was counter to the prevailing narrative at the time, and I think did some of the work of changing the narrative. It's of historical significance, if nothing else.”

Larks: “This post seems like it was quite influential.”

LessWrong's influence on research

I think one of the main things LessWrong gives writers and researchers is an intelligent and philosophically mature audience who want to read great posts. This pulls writing out of authors that they wouldn't write if this audience wasn't here. A majority of high-quality alignment research on LessWrong is solely written for LessWrong, and not published elsewhere.

As an example, one of Paul Christiano’s most influential essays is What Failure Looks Like, and while Christiano does have his own AI alignment blog, this essay was only written on the AI Alignment Forum.

As further evidence on this point, here is a quote from Rob Bensinger (from the MIRI staff) in 2021:

“LW made me feel better about polishing and posting a bunch of useful dialogue-style writing that was previously private (e.g., the 'security mindset' dialogues) or on Arbital (e.g., the 'rocket alignment problem' dialogue).”

“LW has helped generally expand my sense of what I feel happy posting [on the internet]. LW has made a lot of discourse about AI safety more open, candid, and unpolished; and it's increased the amount of that discussion a great deal, so MIRI can more readily release stuff that's 'of a piece' with LW stuff, and not worry as much about having a big negative impact on the overall discourse.”

So I think that the vast majority of this work wouldn't have been published if not for the Forum, and would've been done to a lower quality had the Forum not existed. For example, with the 2018 FAQ above on Christiano's research agenda, even though Alex Zhu may well have spent the same time understanding Paul Christiano’s worldview, Eliezer Yudkowsky would not have been able to get the benefit of reading Zhu’s write-up, and the broader research community would have seen neither Zhu’s understanding nor Yudkowsky’s response.

Lighthaven

Since mid-2021 the other big thread in our efforts has been building in-person infrastructure. After successfully reviving LessWrong, we noticed that in more and more of our user interviews "finding collaborators" and "getting high-quality high-bandwidth feedback" were highlighted as substantially more important bottlenecks to intellectual progress than the kinds of things we could really help with by adding marginal features to our website. After just having had a year of pandemic lockdown with very little of that going on, we saw an opportunity to leverage the end of the pandemic into substantially better in-person infrastructure for people working on stuff we care about than existed before.

After a year or two of exploring by running a downtown Berkeley office space, we purchased a $16.5M hotel property, renovated it for approximately $6M and opened it up to events, fellowships, research collaborators and occasional open bookings under the name Lighthaven.

I am intensely proud of what we have built with Lighthaven and think of it as a great validation of Lightcone's organizational principles. A key part of Lightcone's philosophy is that I believe most cognitive skills are general in nature. IMO the key requirement to building great things is not to hire the best people for the specific job you are trying to get done, but to cultivate general cognitive skills and hire the best generalists you can find, who can then bring their general intelligence to bear on whatever problem you decide to focus on. Seeing the same people who built LessWrong, the world's best discussion platform, pivot to managing a year-long $6M construction project, and seeing it succeed in quality beyond anything else I've seen in the space, fills me with pride about the flexibility and robustness of our ability to handle whatever challenges stand between us and our goals (which I expect will be myriad and similarly varied).

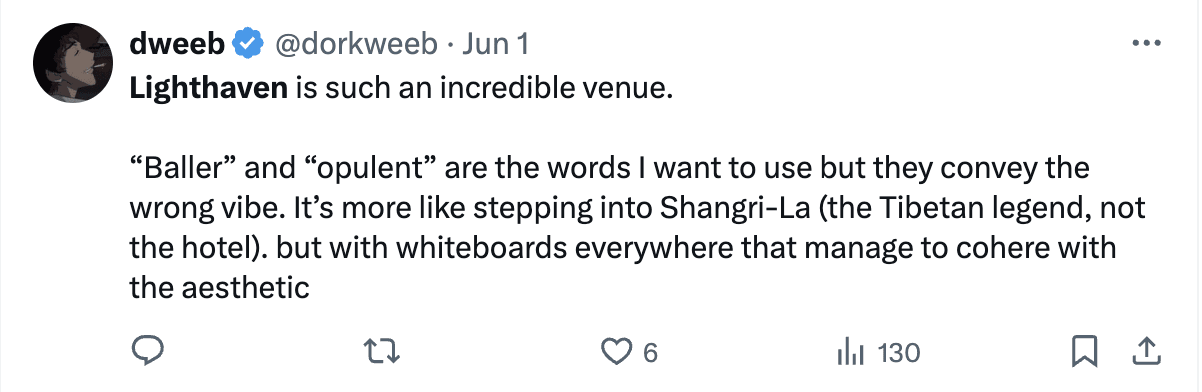

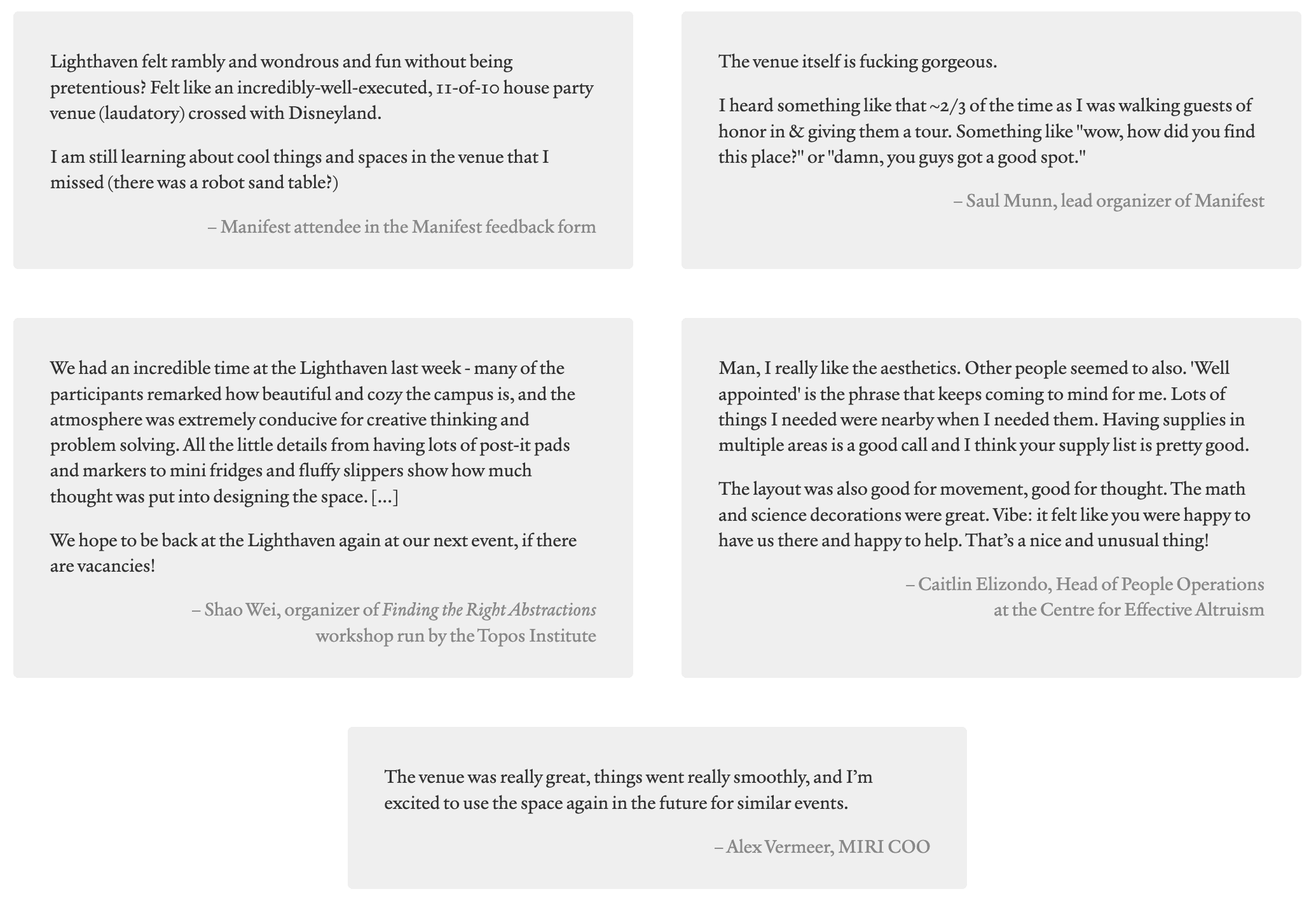

Others seem to think the same:


And a quick collage of events we've hosted here (not comprehensive):

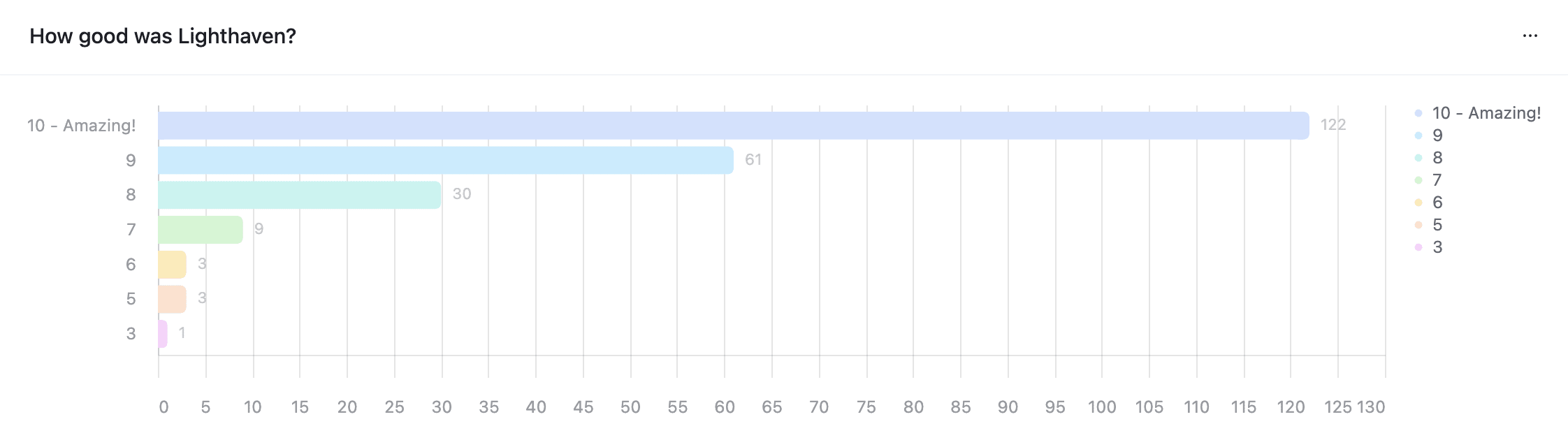

At conferences where we managed to sneak in a question about the venue quality, we've received a median rating of 10/10, with an average of 9.4. All annual conferences organized here wanted to come back the following year, and as far as I know we've never had a client who was not hoping to run more events at Lighthaven in the future (in Lighthaven's admittedly short life so far).

Lighthaven is a very capital-intensive project, and in contrast to our ambitions with LessWrong, is a project where we expect to recoup a substantial chunk of our costs by people just paying us. So a first lens to analyze Lighthaven through is to look at how we are doing in economic terms.

The economics of Lighthaven

We started Lighthaven when funding for work on rationality community building, existential risk, and AI safety was substantially more available. While FTX never gave us money directly for Lighthaven, they encouraged us to expand aggressively, and so I never intended it to be in a position to break even on purely financial grounds.

Luckily, despite hospitality and conferencing not generally being known as an industry with amazing margins, we made it work. I originally projected an annual shortfall of $1M per year, which we would need to make up with philanthropic donations. However, demand has been substantially higher than I planned for, and correspondingly our revenue has been much higher than I was projecting.

| | Projections for 2024 | Actuals for 2024 |
|---|---|---|
| Upkeep | $800,000 | $1,600,000 |
| Interest payment | $1,000,000 | $1,000,000 |
| Revenue | ($1,000,000) | ($1,800,000) |
| Totals | -$800,000 | -$800,000 |

| | Last year's projections for 2025 | New projections for 2025 |
|---|---|---|
| Upkeep | $800,000 | $1,600,000 |
| Interest | $1,000,000 | $1,000,000 |
| Revenue | ($1,200,000) | ($2,600,000) |
| Totals | -$600,000 | $0 |

Last year, while fundraising, I projected that we would spend about $800k on the upkeep, utilities and property taxes associated with Lighthaven in 2024 and 2025, as well as $1M on our annual interest payment. I expected we would make about $1M in revenue, resulting in a net loss of ~$500k - $800k.

Since demand was substantially higher, we instead spent ~$1.6M on improvements, upkeep, staffing and taxes, plus an additional $1M in interest payment, against a total of around $1.8M in revenue. Overall, revenue came in much above my expectations, in a year in which the campus wasn't operational for a substantial fraction of the time.

My best projections for 2025 are that we will spend the same amount[9], but this time make ~$2.6M in revenue—breaking even—and if we project that growth out a bit more, we will be in a position to subsidize and fund other Lightcone activities in subsequent years. At this level of expenditure we are also making substantial ongoing capital investments into the venue, making more of our space usable and adding new features every month[10].
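As a quick check on the arithmetic, here is a minimal Python sketch that recomputes the "Totals" rows from the upkeep, interest and revenue figures in the tables above. The dollar amounts are copied from those tables; the names `BUDGETS`, `net_result` and `summarize` are made up for this illustration and do not correspond to any tool we actually use.

```python
# Figures copied from the Lighthaven tables above, in US dollars.
# The scenario labels and all identifiers here are illustrative only.
BUDGETS = {
    "2024 projected": {"upkeep": 800_000, "interest": 1_000_000, "revenue": 1_000_000},
    "2024 actual": {"upkeep": 1_600_000, "interest": 1_000_000, "revenue": 1_800_000},
    "2025 projected last year": {"upkeep": 800_000, "interest": 1_000_000, "revenue": 1_200_000},
    "2025 new projection": {"upkeep": 1_600_000, "interest": 1_000_000, "revenue": 2_600_000},
}


def net_result(upkeep: int, interest: int, revenue: int) -> int:
    """Revenue minus costs; a negative value is a shortfall to cover with donations."""
    return revenue - (upkeep + interest)


def summarize(budgets: dict) -> dict:
    """Map each scenario label to its net result."""
    return {label: net_result(**row) for label, row in budgets.items()}


if __name__ == "__main__":
    for label, net in summarize(BUDGETS).items():
        print(f"{label}: {net:+,}")
```

A net of zero in the last scenario is what "breaking even" means here: projected revenue exactly covers upkeep plus the interest payment.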

Here is a graph of our 2024 + 2025 monthly income with conservative projections:

How does Lighthaven improve the world?

The basic plan for Lighthaven to make the world better is roughly:

- Improve the quality of events and fellowships that are hosted here, or cause additional high-quality events to happen (or save them time and money by being cheaper and easier to work with than equally good alternatives).

- From the people who attend fellowships and events here, we pick the best and grow a high-quality community of more permanent residents, researchers, and regulars at events.

I think the impact of in-person collaborative spaces on culture and effective information exchange can be very large. The exact models of how Lightcone hopes to do that are hard to communicate and are something I could write many posts about, but we can do a quick case study of how Lighthaven differs from other event venues:

Nooks nooks nooks nooks nooks

One of the central design principles of Lighthaven is that we try to facilitate small 2-6 person conversations in a relaxed environment, with relative privacy from each other, while making it as easy as possible to still find anyone you might be looking for. One of the central ways Lighthaven achieves that is by having a huge number of conversational nooks both on the inside and outside of the space. These nooks tend to max out at being comfortable for around 8 people, naturally causing conversations to break up into smaller chunks.

Conferences at Lighthaven therefore cause people to talk much more to each other than in standard conference spaces, in which the primary context for conversation might be the hallways, usually forcing people to stand, and often ballooning into large conversations of 20+ people, as the hallways provide no natural maximum for conversation size.

More broadly, my design choices for Lighthaven have been heavily influenced by Christopher Alexander's writing on architecture and the design of communal spaces. I recommend skimming through A Pattern Language and reading sections that spark your interest if you are interested in how Lighthaven was designed (I do not recommend trying to read the book from front to back, it will get boring quickly).

Lighthaven "permanent" residents and the "river and shore" metaphor

In the long run, I want Lighthaven to become a thriving campus with occupants at many different timescales:

- Single-weekend event attendees

- Multi-week program fellows

- Multi-month visiting fellows

- Multi-year permanent researchers, groups and organizations

The goal is for each of these to naturally feed into the following ones, creating a mixture of new people and lasting relationships across the campus. Metaphorically the flow of new people forms a fast-moving and ever-changing "river", with the "shore" being the aggregated sediment of the people who stuck around as a result of that flow.

Since we are just getting started, we have been focusing on the first and second of these, with only a small handful of permanently supported people on our campus (at present John Wentworth [LW · GW], David Lorell, Adam Scholl, Aysja Johnson, Gene Smith [LW · GW] and Ben Korpan).

On the more permanent organizational side, I hope that the campus will eventually house an organization worthy of an informal title like "FHI of the West" [LW · GW], either directly run by Lightcone, or heavily supported by us, but I expect to grow such an organization slowly and incrementally, instead of in one big push (which I initially considered, and might still do in the future, but for now decided against).

Does Lighthaven improve the events we run here?

I've run a lot of conferences and events over the years (I was in charge of the first EA Global conference, and led the team that made EA Global into a global annual conference series with thousands of attendees).[11] I designed Lighthaven to really leverage the lessons I learned from doing that, and I am pretty confident I succeeded, based on my own experiences of running events here, and the many conversations I've had with event organizers here.

The data also seems to back this up (see also my later section [LW · GW] on estimating the value of Lighthaven's surplus based on what people have told us they would be willing to pay to run events here):

I expect a number of people who have run events at Lighthaven will be in the comments and will be happy to answer questions about what it's been like.[12]

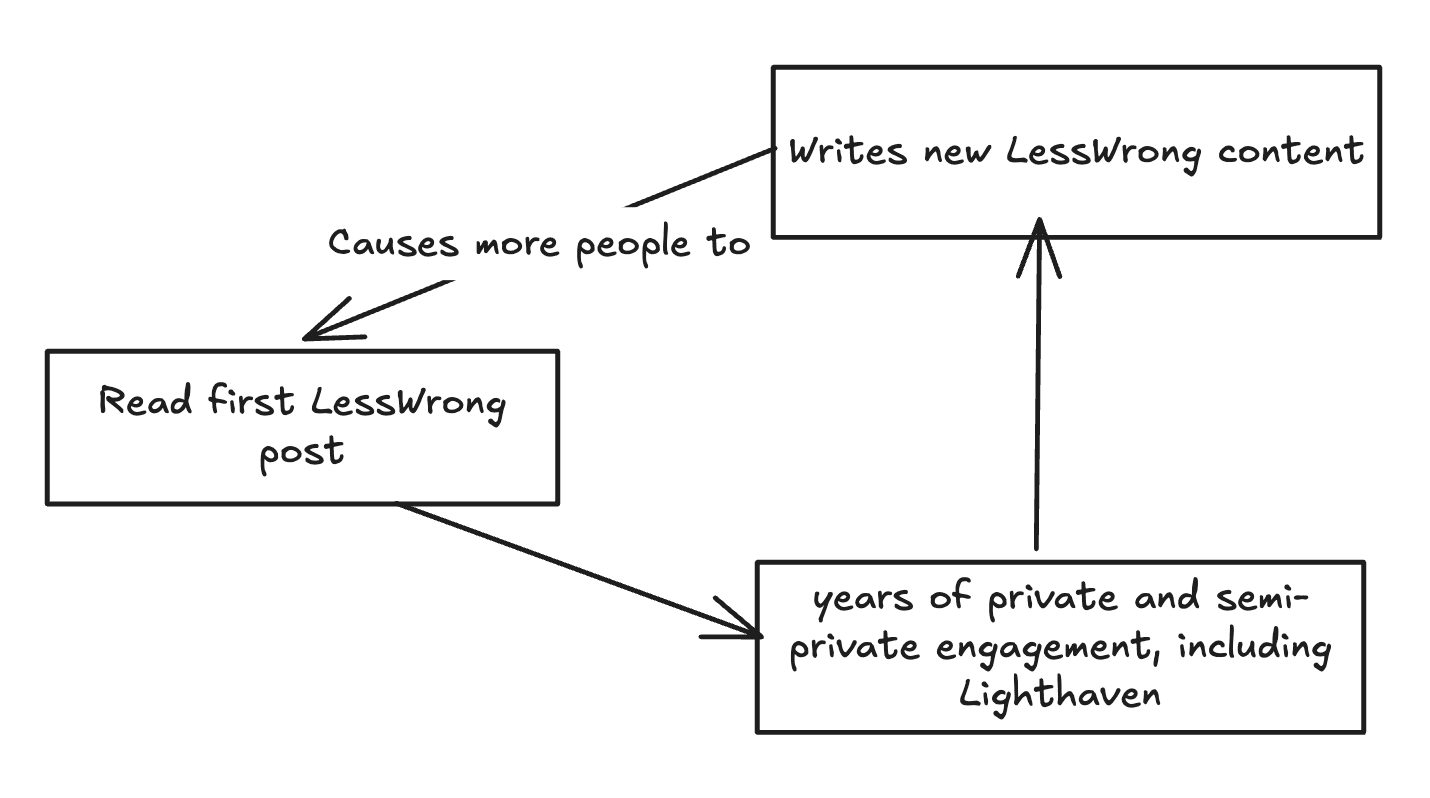

The relationship between Lighthaven and LessWrong

The most popular LessWrong posts, SSC posts or books like HPMoR are usually people's first exposure to core rationality ideas and concerns about AI existential risk. LessWrong is also the place where many people who have spent years thinking about these topics write and share their ideas, which then attracts more people, which in some sense forms the central growth loop of the rationalist ecosystem. Lighthaven and the in-person programs it supports are one of the many components of what happens between someone reading LessWrong for the first time and someone becoming an active intellectual contributor to the site. When that happens, I think it usually takes about 3-4 years of lots of in-person engagement, orienting, talking to friends, and getting a grip on these ideas.

This means in some sense the impact of Lighthaven should in substantial parts be measured by its effects on producing better research and writing on LessWrong and other parts of public discourse.

Of course, the intellectual outputs in the extended rationality and AI safety communities are far from being centralized on LessWrong, and much good being done does not route through writing blog posts or research papers. This makes the above a quite bad approximation of our total impact, but I would say that if I saw no positive effects of Lighthaven on what happens on LessWrong and the AI Alignment Forum, something would have gone quite wrong.

On this matter, I think it's quite early to tell whether Lighthaven is working. I currently feel optimistic that we are seeing a bunch of early signs of a rich intellectual community sprouting up around Lighthaven, but I think we won't know for another 2-3 years whether LessWrong and other places for public intellectual progress have gotten better as a result of our efforts here.

Lightcone and the funding ecosystem

Having gone through some of our historical impact, and big projects, let's talk about funding.

Despite what I, and basically all historical funders in the ecosystem, consider to be a quite strong track record, practically all mechanisms by which we have historically received funding are unable to fund us going forward, or can only give us substantially reduced funding.

Here is a breakdown of who we received funding from over the last few years:

You might notice the three big items in this graph, FTX Future Fund[13], Open Philanthropy, and the Survival and Flourishing Fund.

FTX Future Fund is no more, and indeed we ended up returning around half of the funding we received from them[14], and spent another 15% of the amount they gave to us in legal fees, and I spent most of my energy last year figuring out our legal defense and handling the difficulties of being sued by one of the most successful litigators of the 21st century, so that was not very helpful. And of course the Future Fund is even less likely to be helpful going forward.

Good Ventures will not accept future Open Philanthropy recommendations to fund us and Open Phil generally seems to be avoiding funding anything that might have unacceptable reputational costs for Dustin Moskovitz. Importantly, Open Phil cannot make grants through Good Ventures to projects involved in almost any amount of "rationality community building", even if that work is only a fraction of the organization's efforts and even if there still exists a strong case on grounds unrelated to any rationality community building. The exact lines here seem somewhat confusing and unclear and, my sense is, are still being figured out, but Lightcone seems solidly out.

This means we aren't getting any Open Phil/Good Ventures money anymore, while as far as I know, most Open Phil staff working on AI safety and existential risk think LessWrong is very much worth funding, and our other efforts at least promising (and many Open Phil grantees report being substantially helped by our work).

This leaves the Survival and Flourishing Fund, who have continued to be a great funder to us. And 2/3 of our biggest funders disappearing would already be enough to force us to seriously change how we go about funding our operations, but there are additional reasons why it's hard for us to rely on SFF funding:

- Historically on the order of 50% of SFF recommenders[15] are recused from recommending us money. SFF is quite strict about recusals, and we are friends with many of the people that tend to be recruited for this role. The way SFF is set up, this causes a substantial reduction in funding allocated to us (compared to the recommenders being fully drawn from the set of people who are not recused from recommending to us).

- Jaan and SFC[16] helped us fund the above-mentioned settlement with the FTX estate (providing $1.7M in funding). This was structured as a virtual "advance" against future potential donations, where Jaan expects to only donate 50% of future recommendations made to us via things like the SFF, until the other 50% add up to $1.29M[17] in "garnished" funding. This means for the foreseeable future, our funding from the SFF is cut in half.

Speaking extremely roughly, this means compared to 2022, two thirds of our funders have completely dropped out of funding us, and another sixth is going to be used to pay for work that we had originally done under an FTX Future Fund grant, leaving us with one sixth of the funding, which is really not very much.

This all, importantly, is against a backdrop where none of the people or institutions that have historically funded us have updated against the cost-effectiveness of our operations. To the contrary, my sense is the people at Open Philanthropy, SFF and Future Fund have positively updated on the importance of our work, while mostly non-epistemic factors have caused the people involved to be unable to recommend funding to us.

This I think is a uniquely important argument for funding us. I think Lightcone is in the rare position of being considered funding-worthy by many of the key people that tend to try to pick up the most cost-effective interventions, while being de-facto unable to be funded by them.

I do want to express extreme gratitude for the individuals that have helped us survive throughout 2023 when most of these changes in the funding landscape started happening, and Lightcone transitioned from being an $8M/yr organization to a $3M/yr organization. In particular, I want to thank Vitalik Buterin and Jed McCaleb who each contributed $1,000,000 in 2023, Scott Alexander who graciously donated $100,000, Patrick LaVictoire who donated $50,000, and many others who contributed substantial amounts.

Lightcone's budget

While I've gone into our spending in a few disparate ways in other sections of this post, at this point I think it makes sense to give a quick overview of our spending in a more consolidated place. At a high level, here is our forecasted annual budget:

| Type | Cost |
|---|---|
| Core Staff Salaries, Payroll, etc. (6 people) | $1.4M |
| Lighthaven (Upkeep) | |
| Operations & Sales | $240k |
| Repairs & Maintenance Staff | $200k |
| Porterage & Cleaning Staff | $320k |
| Property Tax | $300k |
| Utilities & Internet | $180k |
| Additional Rental Property | $180k |
| Supplies (Food + Maintenance) | $180k |
| Lighthaven Upkeep Total | $1.6M |
| Lighthaven Mortgage | $1M |
| LW Hosting + Software Subscriptions | $120k |
| Dedicated Software + Accounting Staff | $330k |
| Total Costs | $4.45M |
| Expected Lighthaven Income | ($2.55M) |
| Annual Shortfall | $1.9M |

And then, as explained in the "The Economics of Lighthaven" section [? · GW], in the coming year, we will have an additional mortgage payment of $1M due in March.
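For anyone who wants to check the arithmetic in the budget table, here is a minimal Python sketch that re-adds the line items. The figures are copied from the table (in thousands of USD); the variable names and the grouping into two dictionaries are just illustrative choices, not anything Lightcone uses internally.

```python
# All figures in thousands of USD, copied from the budget table above.
lighthaven_upkeep = {
    "Operations & Sales": 240,
    "Repairs & Maintenance Staff": 200,
    "Porterage & Cleaning Staff": 320,
    "Property Tax": 300,
    "Utilities & Internet": 180,
    "Additional Rental Property": 180,
    "Supplies (Food + Maintenance)": 180,
}
other_costs = {
    "Core Staff Salaries, Payroll, etc.": 1400,
    "Lighthaven Mortgage": 1000,
    "LW Hosting + Software Subscriptions": 120,
    "Dedicated Software + Accounting Staff": 330,
}
expected_lighthaven_income = 2550
one_off_mortgage_payment = 1000  # the additional payment due in March

# Sub-total for the venue, then the overall totals.
upkeep_total = sum(lighthaven_upkeep.values())
total_costs = upkeep_total + sum(other_costs.values())
annual_shortfall = total_costs - expected_lighthaven_income
shortfall_with_one_off = annual_shortfall + one_off_mortgage_payment

print(f"Lighthaven upkeep total: ${upkeep_total}k")
print(f"Total costs:             ${total_costs}k")
print(f"Annual shortfall:        ${annual_shortfall}k")
print(f"Including March payment: ${shortfall_with_one_off}k")
```

The sub-total, total and shortfall match the table ($1.6M, $4.45M and $1.9M). Adding the one-off March payment on top of the annual shortfall is my own reading of how the two numbers combine for the coming year.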

The core staff consists of generalists who work on a very wide range of different projects. My best guess is about 65% of the generalist labor in the coming year will go into LW, but that might drastically change depending on what projects we take on.

Our work on funding infrastructure

Now that I've established some context on the funding ecosystem, I also want to go a bit into the work that Lightcone has done on funding around existential risk reduction, civilizational sanity and rationality development.

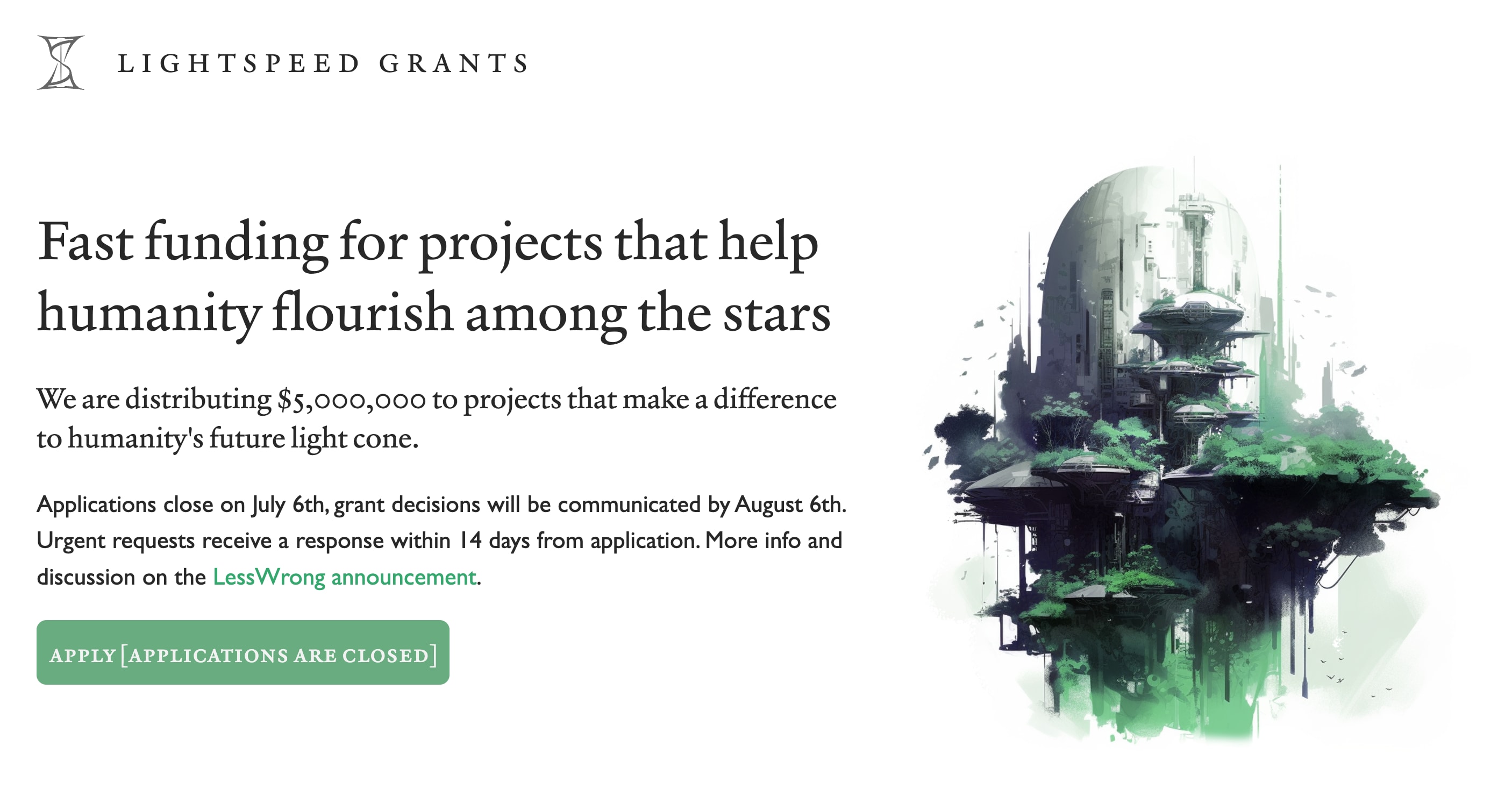

The third big branch of historical Lightcone efforts has been to build the S-Process, a funding allocation mechanism used by SFF, FLI and Lightspeed Grants.

Together with the SFF, we built an app and set of algorithms that allows for coordinating a large number of independent grant evaluators and funders much more efficiently than anything I've seen before, and it has successfully been used to distribute over $100M in donations over the last 5 years. Internally I feel confident that we substantially increased the cost-effectiveness of how that funding was allocated—my best guess is on the order of doubling it, but more confidently by at least 20-30%[18], which I think alone is a huge amount of good done.[19]

Earlier this year, we also ran our own funding round owned end-to-end under the banner of "Lightspeed Grants":

Somewhat ironically, the biggest bottleneck to us working on funding infrastructure has been funding for ourselves. Working on infrastructure that funds ourselves seems rife with potential concerns about corruption and bad incentives, and so I have not felt comfortable applying for funding from a program like Lightspeed Grants ourselves. Our non-SFF funders historically were also less enthusiastic about us working on funding infrastructure for the broader ecosystem than our other projects.

This means that in many ways, working on funding infrastructure reduces the amount of funding we receive, by reducing the pots of money that could potentially go to us. As another instance of this, I have been spending around 10%-20% of my time over the past 5 years working as a fund manager on the Long Term Future Fund. As a result, Lightcone has never applied to the LTFF, or the EA Infrastructure Fund, as my involvement with EA Funds would pose too tricky of a COI in evaluating our application. But I am confident that both the LTFF and the EAIF would evaluate an application by Lightcone quite favorably, if I had never been involved with EA Funds.

(The LTFF and the EAIF are therefore two more examples of funders that usually pick up the high cost-effectiveness fruit, but for independent reasons are unable to give to Lightcone Infrastructure, leaving us underfunded relative to our perceived cost-effectiveness.)

If it's worth doing it's worth doing with made-up statistics

Thus is it written: “It’s easy to lie with statistics, but it’s easier to lie without them.”

Ok, so I've waffled about with a bunch of high-level gobbledigosh, but as spreadsheet altruists the only arguments we are legally allowed to act on must involve the multiplication of at least 3 quantities and at least two google spreadsheets.

So here is the section where I make some terrible quantitative estimates which will fail to model 95% of the complexity of the consequences of any of our actions, but which I have found useful in thinking about our impact, and which you will maybe find useful too, and which you can use to defend your innocence when the local cost-effectiveness police demands your receipts.

The OP GCR capacity building team survey

Open Philanthropy has run two surveys in the last few years in which they asked people they thought were now doing good work on OP priority areas like AI safety what interventions, organizations and individuals were particularly important for people getting involved, or helped people to be more productive and effective.

Using that survey, and weighting respondents by how impactful Open Phil thought their work was going to be, they arrived at cost-effectiveness estimates for various organizations (to be clear, this is only one of many inputs in OP's grantmaking).

In their first 2020 survey, here is the table they produced[20]:

| Org | $/net weighted impact points (approx; lower is better) |
|---|---|
| SPARC | $9 |
| LessWrong 2.0 | $46 |
| 80,000 Hours | $88 |
| CEA + EA Forum | $223 |
| CFAR | $273 |

As you can see, LessWrong 2.0's estimated cost-effectiveness was second only to SPARC (which is a mostly volunteer-driven program, and this estimate does not take into account opportunity cost of labor).

In their more recent 2023 survey, Lightcone's work performed similarly well. While the data they shared didn't include any specific cost-effectiveness estimates, they did include absolute estimates on the number of times that various interventions showed up in their data:

These are the results from the section where we asked about a ton of items one by one and by name, then asked for the respondent’s top 4 out of those. I’ve included all items that were listed more than 5 times.

These are rounded to the nearest multiple of 5 to avoid false precision.

| Item | Mentions |
|---|---|
| 80,000 Hours | 125 |
| University groups | 90 |
| EAGs/EAGxes | 70 |
| Open Philanthropy | 60 |
| Eliezer's writing | 45 |
| LessWrong (non-Eliezer) | 40 |
| [...] | |
| Lightcone (non-LW) | 15 |

To get some extremely rough cost-effectiveness numbers out of this, we can divide the numbers here by the budget for the associated organizations, though to be clear, this is definitely an abuse of numbers.

Starting from the top, during the time the survey covered (2020 - early 2023) the annual budget of 80,000 Hours averaged ~$6M. Lightcone's spending (excluding Lighthaven construction, which can't have been relevant by then) averaged around $2.3M. University groups seem to have been funded at around $5M/yr[21], and my best guess is that EAG events cost around $6M a year during that time [EA · GW]. I am going to skip Open Philanthropy because that seems like an artifact of the survey, and Eliezer, because I don't know how to estimate a reasonable number for him.

This produces this table (which I will again reiterate is a weird thing to do):

| Project | Mentions | Mentions / $M |
|---|---|---|
| 80,000 Hours | 125 | 6.4 |
| University groups | 90 | 5.5 |
| EAGs/EAGxes | 70 | 3.6 |
| Lightcone (incl. LW) | 40 + 15 | 6.8 |

As you can see, my totally objective table says that we are the most cost-effective intervention that you can fund out there (to be clear, I think the central takeaway here is more "by this very narrow methodology Lightcone is competitive with the best interventions; I think the case for it being the very best is kind of unstable").
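To make the division behind the "Mentions / $M" column explicit, here is a rough Python sketch. It assumes the survey window "2020 - early 2023" is about 3.25 years and divides mentions by total spending over that window; the window length and the function name are my assumptions, since the post only gives the annual budgets.

```python
# Assumption: "2020 - early 2023" is treated as roughly 3.25 years.
SURVEY_YEARS = 3.25

# (mentions, average annual budget in $M), taken from the text above.
projects = {
    "80,000 Hours": (125, 6.0),
    "University groups": (90, 5.0),
    "EAGs/EAGxes": (70, 6.0),
    "Lightcone (incl. LW)": (40 + 15, 2.3),
}

def mentions_per_million(mentions, annual_budget_musd, years=SURVEY_YEARS):
    """Mentions divided by total spending (in $M) over the survey window."""
    return mentions / (annual_budget_musd * years)

ratios = {
    name: round(mentions_per_million(mentions, budget), 1)
    for name, (mentions, budget) in projects.items()
}

for name, ratio in ratios.items():
    print(f"{name:22s} {ratio:.1f} mentions / $M")
```

Under these assumptions the first three rows come out at about 6.4, 5.5 and 3.6, in line with the table. The Lightcone row comes out somewhat higher (about 7.4 rather than 6.8), so the table presumably used a slightly different budget or time window for that row. Either way the ordering is unchanged, and the whole exercise remains an abuse of numbers.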

Lightcone/LessWrong cannot be funded by just running ads

An IMO reasonable question to ask is "could we fund LessWrong if we just ran ads?". It's not fully clear how that relates to our cost-effectiveness, but I still find it a useful number to look at as a kind of lower-bound on the value that LessWrong could produce, with a small change.

LessWrong gets around 20 million views a year, for around 3 million unique users and 12 million engagement minutes. For our audience (mostly American and English-speaking) using Google AdSense, you would make about $2 per 1000 views, resulting in a total ad revenue of around $40,000, a far cry from the >$1,000,000 that LessWrong spends a year.

Using YouTube as another benchmark, YouTubers are paid about $15 for 1000 U.S.-based ad impressions, with my best guess of ad frequency on YouTube being about once every 6 minutes, resulting in 2 million ad impressions and therefore about $30,000 in ad revenue (this is ignoring sponsorship revenue for YouTube videos, which differs widely between channels, but where my sense is it tends to roughly double or triple the default YouTube ad revenue, so a somewhat more realistic number here is $60,000 or $90,000).

Interestingly, this does imply that if you were willing to buy advertisements that just consisted of getting people in the LessWrong demographic to read LessWrong content, that would easily cover LessWrong's budget. A common cost per click for U.S.-based ads is around $2, and it costs around $0.3 to get someone to watch a 30-second ad on YouTube, resulting in estimates of around $40,000,000 to $4,000,000 to get people to read/watch LessWrong content by just advertising for it.
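The two ad-revenue estimates above are simple enough to spell out. Here is a minimal Python sketch that uses only the numbers quoted in the text; the variable names are illustrative, and the rates are the rough guesses from the post rather than measured figures.

```python
# Traffic figures quoted above.
annual_views = 20_000_000
engagement_minutes = 12_000_000

# AdSense-style estimate: about $2 per 1000 views.
adsense_usd_per_1000_views = 2
adsense_revenue = annual_views // 1000 * adsense_usd_per_1000_views

# YouTube-style estimate: one ad roughly every 6 minutes of engagement,
# paid at about $15 per 1000 impressions.
minutes_per_ad = 6
youtube_usd_per_1000_impressions = 15
ad_impressions = engagement_minutes // minutes_per_ad
youtube_revenue = ad_impressions // 1000 * youtube_usd_per_1000_impressions

# Sponsorships are guessed to double or triple the default ad revenue.
youtube_with_sponsorship = (2 * youtube_revenue, 3 * youtube_revenue)

print(f"AdSense estimate:       ${adsense_revenue:,}")
print(f"YouTube ad impressions: {ad_impressions:,}")
print(f"YouTube estimate:       ${youtube_revenue:,}")
print(f"With sponsorships:      ${youtube_with_sponsorship[0]:,} to ${youtube_with_sponsorship[1]:,}")
```

Both estimates land one to two orders of magnitude below the >$1,000,000 a year that LessWrong costs to run, which is the point of the comparison.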

Comparing LessWrong to other websites and apps

Another (bad) way of putting an extremely rough number on the value LessWrong provides to the people on it is to compare it against revenue-per-active-user numbers for other websites and social networks.

| Platform | U.S. ARPU (USD) | Year | Source |
|---|---|---|---|
| | $226.93 (Annual) | 2023 | Statista |
| | $56.84 (Annual) | 2022 | Statista |
| Snapchat | $29.98 (Annual) | 2020 | Search Engine Land |
| | $25.52 (Annual) | 2023 | Stock Dividend Screener |
| | $22.04 (Annual) | 2023 | Four Week MBA |

I think by the standards of usual ARPU numbers, LessWrong has between 3,000 and 30,000 active users. So if we use Reddit as a benchmark this would suggest something like $75,000 - $750,000 per year in revenue, and if we use Facebook as a benchmark, this would suggest something like $600,000 - $6,000,000.

Again, it's not enormously clear what exactly these numbers mean, but I still find them useful as very basic sanity-checks on whether we are just burning money in highly ineffectual ways.

Lighthaven event surplus

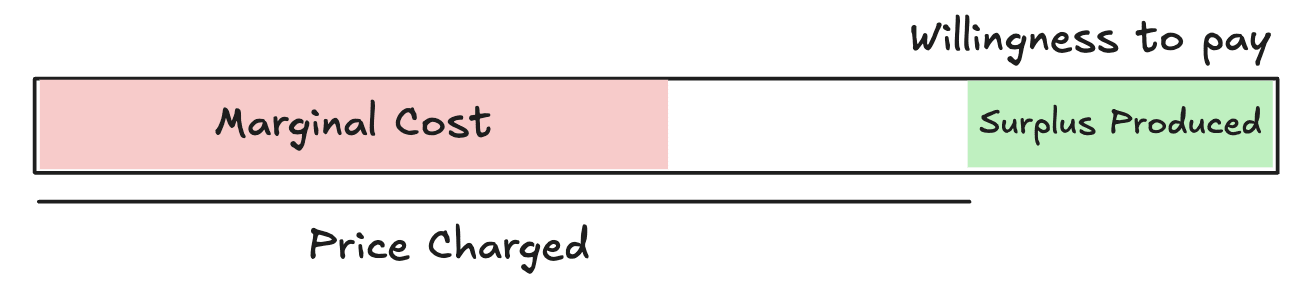

Over the last year, we negotiated pricing with many organizations that we have pre-existing relationships with using the following algorithm:

- Please estimate your maximum willingness to pay for hosting your event at Lighthaven (i.e. at what price would you be indifferent between Lighthaven and your next best option)

- We will estimate the marginal cost to us of hosting your event

- We use the difference between these as an estimate of the surplus produced by Lighthaven and we split it 50/50, i.e. you pay us halfway between our marginal cost and your maximum willingness to pay

This allows a natural estimate of the total surplus generated by Lighthaven, measured in donations to the organizations that have hosted events here.

On average, event organizers estimated total value generated at around 2x our marginal cost.

Assuming this ratio also holds for all events organized at Lighthaven, which seems roughly right to me, we can estimate the total surplus generated by Lighthaven. Also, many organizers adjusted the value-add from Lighthaven upwards after the event, suggesting this is an underestimate of the value we created (and we expect to raise prices in future years to account for that).

This suggests that our total value generated this way is ~1.33x our revenue from Lighthaven, which is likely to be around $2.8M in the next 12 months. This suggests that as long as Lighthaven costs less than ~$3.72M, it should be worth funding if you thought it was worth funding the organizations that have hosted events and programs here (and that in some sense historical donations to Lighthaven operate at least at a ~1.33x multiplier compared to the average donation to organizations that host events here).
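The pricing rule and the resulting ~1.33x multiplier can be written out as a short Python sketch. It normalizes marginal cost to 1 and takes the average reported 2x ratio of willingness to pay to marginal cost; the function name is hypothetical, and exact fractions are used only to keep the rounding visible.

```python
from fractions import Fraction

def negotiated_price(marginal_cost, max_willingness_to_pay):
    """Split the surplus 50/50: charge halfway between cost and willingness to pay."""
    return (marginal_cost + max_willingness_to_pay) / 2

# Normalize marginal cost to 1; organizers valued events at about 2x that on average.
marginal_cost = Fraction(1)
willingness_to_pay = 2 * marginal_cost

price = negotiated_price(marginal_cost, willingness_to_pay)  # 3/2 of marginal cost
value_per_revenue_dollar = willingness_to_pay / price        # 4/3, i.e. about 1.33x

# Projected Lighthaven revenue over the next 12 months, in $M (from the text).
projected_revenue = Fraction(28, 10)
break_even_cost = value_per_revenue_dollar * projected_revenue

print(f"Price as multiple of marginal cost: {float(price):.2f}")
print(f"Value generated per $ of revenue:   {float(value_per_revenue_dollar):.2f}")
print(f"Break-even Lighthaven cost:         ${float(break_even_cost):.2f}M")
```

With the exact 4/3 ratio the break-even cost comes out at about $3.73M; the ~$3.72M in the text corresponds to using the rounded 1.33.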

To help get a sense of what kind of organizations do host events here, here is an annotated calendar of all the events hosted here in 2024, and our (charitable) bookings for 2025:

The future of (the) Lightcone

Now that I have talked extensively about all the things we have done in the past, and how you should regret not giving to us last year, here comes the part where I actually describe what we might do in the future. In past fundraising documents to funders and the public, I have always found this part the hardest. I value flexibility and adaptability very highly, and with charities, even more so than with investors in for-profit companies, I have a feeling that people who give to us often get anchored on the exact plans and projects that we were working on when they did.

I think to predict what we will work on in the future, it is helpful to think about Lightcone at two different levels: What are the principles behind how Lightcone operates, and what are the concrete projects that we are considering working on?

Lightcone culture and principles

Lightcone has grown consistently but extremely slowly over the last 7 years. There are some organizations I have had a glimpse into that have seen less net-growth, but I can’t think of an organization that has added as few hires (including people who later left) to their roster that now still work there. I’ve consistently hired ~1 person per year to our core team for the six years Lightcone has existed (resulting in a total team size of 7 core team members).

This is the result of the organization being quite deeply committed to changing strategies when we see the underlying territory shift. Having a smaller team, and having long-lasting relationships, makes it much easier for us to pivot, and allows important strategic and conceptual updates to propagate through the organization more easily.[22]

Another result of the same commitment is that we basically don’t specialize into narrow roles, but instead are aiming to have a team of generalists where, if possible, everyone in the organization can take on almost any other role in the organization. This enables us to shift resources between different parts of Lightcone depending on which part of the organization is under the most stress, and to feel comfortable considering major pivots that would involve doing a very different kind of work, without this requiring major staff changes every time. I don't think we have achieved full universal generality among our staff, but it is something we prioritize and have succeeded at much more than basically any other organization I can think of.

Another procedural commitment is that we try to automate as much of our work as possible, and aim for using software whenever possible to keep our total staff count low, and create processes to handle commitments and maintain systems, instead of having individuals who perform routine tasks on an ongoing basis (or at the very least try our best to augment the individuals doing routine tasks using software and custom tools).

There is of course lots more to our team culture. For a glimpse into one facet of it, see our booklet "Adventures of the Lightcone Team".

Things I wish I had time and funding for

AGI sure looks to me like it's coming, and it's coming uncomfortably fast. While I expect the overall choice to build machine gods beyond our comprehension and control will be quite bad for the world, the hope that remains routes in substantial chunks through leveraging the nascent AGI systems that we have access to today and will see in the coming years.

Concretely, one of the top projects I want to work on is building AI-driven tools for research and reasoning and communication, integrated into LessWrong and the AI Alignment Forum. If we build something here, it will immediately be available to and can easily be experimented with by people working on reducing AI existential risk, and I think has a much larger chance than usual of differentially accelerating good things.

We've already spent a few weeks building things in the space, but our efforts here are definitely still at a very early stage. Here is a quick list of things I am interested in exploring, though I expect most of these to not be viable, and the right solutions and products to probably end up being none of these:

Building an LLM-based editor.

LessWrong admins currently have access to a few special features in our editor that I have found invaluable. Chief among them is having built-in UI for "base-model Claude 3.5 Sonnet"[23] and Llama 405b-base continuing whatever comment or post I am in the middle of writing, using my best LessWrong comments and posts as a style and content reference (as well as some selected posts and comments by other top LW authors). I have found this to be among the best tools against writer's block, where every time I solidly get stuck, I generate 5-10 completions of what the rest of my post could look like, use it as inspiration of all kinds of different directions my post could go, then delete them and keep writing.

Using base models has at least so far been essential for getting any useful writing work out of LLMs, with the instruction-tuned models reliably producing obtuse corpo-speak when asked to engage in writing tasks.

Similarly LLMs are now at a point where they can easily provide high-level guidance to drafts of yours, notice sections where your explanations are unclear, fix typos, shorten and clean up extremely long left-branching sentences, and do various other straightforward improvements to the quality of your writing.

AI prompts and tutors as a content type on LW

LLM systems are really good tutors. They are not as good as human instructors (yet), but they are (approximately) free, eternally patient, and have a breadth of knowledge vastly beyond that of any human alive. With knowledge and skill transfer being one of the key goals for LessWrong, I think we should try to leverage that.

I would like to start with iterating on getting AI systems to teach the core ideas on LW, then after doing it successfully, experiment with opening up the ability to create tutors like that to authors on LessWrong, who would like to get AI assistance explaining and teaching the concepts they would like to communicate.

Authors and the LessWrong team can read the chats people had with our AI tutors[24], giving authors the ability to correct anything wrong that the AI systems said, and then using those corrections as part of the prompt to update how the tutor will do things in the future. I feel like this unlocks a huge amount of cool pedagogical content knowledge [LW · GW] that has previously been inaccessible to people writing on LessWrong, and gives you a glimpse into how people fail to understand (or successfully apply) your concepts in ways that previously could have only been achieved by teaching people one on one.

Building something like an FHI of the West

But AI things are not the only things I want to work on. In a post a few months ago I said:

The Future of Humanity Institute is dead:

I knew that this was going to happen in some form or another for a year or two, having heard through the grapevine and private conversations of FHI's university-imposed hiring freeze and fundraising block, and so I have been thinking about how to best fill the hole in the world that FHI left behind.

I think FHI was one of the best intellectual institutions in history. Many of the most important concepts in my intellectual vocabulary were developed and popularized under its roof, and many crucial considerations that form the bedrock of my current life plans were discovered and explained there (including the concept of crucial considerations [? · GW] itself).

With the death of FHI (as well as MIRI moving away from research towards advocacy), there no longer exists a place for broadly-scoped research on the most crucial considerations for humanity's future. The closest place I can think of that currently houses that kind of work is the Open Philanthropy worldview investigation team, which houses e.g. Joe Carlsmith, but my sense is Open Philanthropy is really not the best vehicle for that kind of work.

While many of the ideas that FHI was working on have found traction in other places in the world (like right here on LessWrong), I do think that with the death of FHI, there no longer exists any place where researchers who want to think about the future of humanity in an open ended way can work with other people in a high-bandwidth context, or get operational support for doing so. That seems bad.

So I am thinking about fixing it (and have been jokingly internally referring to my plans for doing so as "creating an FHI of the West")

Since then, we had the fun and privilege of being sued by FTX, which made the umbrella of Lightcone a particularly bad fit for making things happen in the space, but now that that is over, I am hoping to pick this project back up again.

As I said earlier in this post, I expect that if we do this, I would want to go about it in a pretty incremental and low-key way, but I do think it continues to be one of the best things that someone could do, and with our work on LessWrong and ownership of a world-class 20,000 sq. ft. campus in the most important geographical region of the world, I think we are among the best placed people to do this.

Building funding infrastructure for AI x-risk reduction

There currently doesn't really exist any good way for people who want to contribute to AI existential risk reduction to give money in a way that meaningfully gives them assistance in figuring out what things are good to fund. This is particularly sad since I think there is now a huge amount of interest from funders and philanthropists who want to somehow help with AI x-risk stuff, as progress in capabilities has made work in the space a lot more urgent, but the ecosystem is currently at a particularly low point in terms of trust and ability to direct that funding towards productive ends.

I think our work on the S-Process and SFF has been among the best work in the space. Similarly, our work on Lightspeed Grants helped, and I think could grow into a systemic solution for distributing hundreds of millions of dollars a year, at substantially increased cost-effectiveness.

Something something policy

Figuring out how to sanely govern the development of powerful AI systems seems like a top candidate for the most important thing going on right now. I do think we have quite a lot of positive effect on that already, via informing people who work in the space and causing a bunch of good people to start working in the space, but it is plausible that we want to work on something that is substantially more directed towards that.

This seems particularly important to consider given the upcoming conservative administration, as I think we are in a much better position to help with this conservative administration than the vast majority of groups associated with AI alignment stuff. We've never associated ourselves very much with either party, have consistently been against various woke-ish forms of mob justice for many years, and have clearly been read a non-trivial amount by Elon Musk (and probably also some by JD Vance).

I really don't know what doing more direct work in the space would look like. The obvious thing to do is to produce content that is more aimed at decision-makers in government, and to just talk to various policy people directly, but it might also involve doing things like designing websites for organizations that work more directly on influencing policy makers (like our recently-started collaborations with Daniel Kokotajlo's research team "AI Futures Project" and Zach Stein-Perlman's AI Lab Watch to help them with their website designs and needs).

A better review system for AI Alignment research

I do not believe in pre-publication private anonymous peer-review. I think it's dumb to gate access to articles behind submissions to journals, and I think in almost all circumstances it's not worth it for reviewers to be anonymous, both because I think great reviewers should be socially rewarded for their efforts, and bad reviewers should be able to be weeded out.

But I do think there is a kind of work that is often undersupplied: engaging critically with research, suggesting improvements, helping the author and the reader discover related work, and successfully replicating, or failing to replicate, key results. Right now, the AI Alignment field has very little incentive for that kind of work, which I think is sad.

I would like to work on making more of that kind of review happen. I have various schemes and ideas in mind for how to facilitate it, and think we are well-placed to do it.

Again, our operating philosophy values pivoting to whatever we end up thinking is best and I think it's quite likely we will not make any of the above a substantial focus of the next 1-2 years, but it still seemed useful to list.

What do you get from donating to Lightcone?

I think the best reason to donate to us is because you think that doing so will cause good things to happen in the world (like it becoming less likely that you and all your friends will die from a rogue AI). That said, credit allocation is important, and I think over the past few years there has been too little credit given to people donating to keep our community institutions intact, and I personally have been too blinded by my scope-sensitivity[25] and so ended up under-investing in my relationships to anyone but the very largest donors.

I think many things would be better if projects like LessWrong and Lighthaven were supported more by the people who are benefitting from them instead of large philanthropists giving through long chains of deference with only thin channels of evidence about our work. This includes people who benefitted many years ago when their financial means were much less, and now are in a position to help the institutions that allowed them to grow.

That means if you've really had your thinking or life-path changed by the ideas on LessWrong or by events and conversations at Lighthaven, then I'd make a small request for you to chip in to keep the infrastructure alive for you and for others.

If you donate to us, I will try to ensure you get appropriate credit (if you desire). I am still thinking through the best ways to achieve that, but some things I feel comfortable committing to (and more to come):

- If you donate at least $1,000 we will send you a special-edition Lightcone or LessWrong t-shirt

- If you donate at least $5,000, we will add you to the Lightcone donor leaderboard, under whatever name you desire (to be created at lesswrong.com/leaderboard)

- We will also add a plaque celebrating you to our Lighthaven legacy wall, with a sigil and name of your choice (also currently being built, but I'll post comments with pictures as donations come in!)

- Various parts of Lighthaven are open to be named after you! You can get a bench (or similarly prominent object) with a nice plaque with a dedication of your choice if you donate at least $2,000 (raised from $1,000)[26], or you can get a whole hall or area of the campus named after you at higher numbers.[27]

As the first instance of this, I'd like to give enormous thanks to @drethelin [LW · GW] for opening our fundraiser with a $150,000 donation, in thanks for which we have renamed our northwest gardens to "The Drethelin Gardens" for at least the next 2 years.

If you can come up with any ways that you think would be cool to celebrate others who have given to Lightcone, or have any ideas for how you want your own donation to be recognized, please reach out! I wasn't really considering naming campus sections after people until drethelin reached out, and I am glad we ended up going ahead with that.

Goals for the fundraiser

We have three fundraising milestones for this fundraiser, one for each million dollars:

- May. The first million dollars will probably allow us to continue operating after our first (deferred) interest payment on our loan, and continue until May.

- November. The second million dollars gets us all the way to our second interest payment, in November.

- 2026. The third million dollars allows us to make our second interest payment, and make it to the end of the year.

We'll track our progress through each goal with a fundraising thermometer on the front page[28]. Not all of Lightcone's resources will come from this fundraiser, of course. Whenever we receive donations (from any source), we'll add the funds to the "Raised" total on the front page.

Logistics of donating to Lightcone

We are a registered 501(c)(3) in the US, and can receive tax-deductible donations in Germany and Switzerland via Effektiv-Spenden.de. To donate to us through effektiv-spenden.de, send an email to me (habryka@lightconeinfrastructure.com) and Johanna Schröder (johanna.schroeder@effektiv-spenden.org) and we will send you the info you need to send the funds and get that sweet sweet government tax reduction.

If there is enough interest, we can probably also set up equivalence determinations in most other countries that have a similar concept of tax-deductibility (I am working on getting us set up for UK gift aid).

We can also accept donations of any appreciated asset that you might want to donate. We are set up to receive crypto, stocks, stock options, and if you want to donate your appreciated Magic the Gathering collection, we can figure out some way of giving you a good donation receipt for that. Just reach out (via email, DM [LW · GW], or text/signal at +1 510 944 3235) and I will get back to you ASAP with the logistics.

Also, please check if your employer has a donation matching program! Many big companies double the donations made by their employees to nonprofits (for example, if you work at Google and donate to us, Google will match your donation up to $10k). Here is a quick list of organizations with matching programs I found, but I am sure there are many more. If you donate through one of these, please also send me an email. I almost never get access to your email through these programs, which makes it hard for me to reach out and thank you.

If you want to donate less than $5k in cash, I recommend our every.org donation link. We lose about 2-3% of that in fees if you use a credit card, and 1% if you use bank transfer, so if you want to donate more and want us to lose less to fees, you can reach out and I'll send you our wire transfer details.
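To make the fee difference concrete, here is a minimal sketch of the arithmetic, assuming the fees are a flat percentage of the donation. The function name and the 2.5% card rate (the midpoint of the 2-3% range above) are illustrative assumptions, not every.org's actual fee schedule.

```python
def amount_received(donation: float, fee_rate: float) -> float:
    """Return what is left of a donation after a proportional processing fee."""
    return donation * (1 - fee_rate)


# Assumed rates: 2.5% is the midpoint of the quoted "2-3%" card fee,
# 1% is the quoted bank transfer fee.
CARD_FEE = 0.025
BANK_FEE = 0.01

donation = 5_000
print(f"card: ${amount_received(donation, CARD_FEE):,.2f}")
print(f"bank transfer: ${amount_received(donation, BANK_FEE):,.2f}")
```

Under these assumptions, a $5,000 donation nets roughly $4,875 by credit card and $4,950 by bank transfer, so the gap only becomes large in absolute terms for bigger donations.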

If you want to send us BTC, we have a wallet! The address is 37bvhXnjRz4hipURrq2EMAXN2w6xproa9T.

Tying everything together

Whew, that was a marathon of a post. I had to leave out a huge number of things that we've done, and a huge number of hopes and aims and plans I have for the future. Feel free to ask me in the comments about any details.

I hope this all helps explain what Lightcone's deal is and gives you the evidence you need to evaluate my bold claims of cost-effectiveness.

So thank you all. I think with help from the community and the recent reinvigorated interest in AI x-risk stuff, we can pull the funds together to continue Lightcone's positive legacy.

If you can and want to be a part of that, donate to us here. We need to raise $3M to survive the next 12 months, and can productively use a lot of funding beyond that.

- ^

Donations are tax-deductible in the US. Reach out for other countries, we can likely figure something out.

- ^

Our technical efforts here also contribute to the EA Forum, which started using our code in 2019.

- ^

Why more money this year than next year? The reason is that we have an annual interest payment of $1M on our Lighthaven mortgage that was due in early November, which we negotiated to be deferred to March. This means this twelve month period will have double our usual mortgage payments.

We happen to also own a ~$1M building adjacent to Lighthaven in full, so we have a bit of slack. We are looking into taking out a loan on that property, but we are a non-standard corporate entity from the perspective of banks so it has not been easy. If for some reason you want to arrange a real-estate insured loan for us, instead of donating to us, that would also be quite valuable.

- ^

I am also hoping to create more ways of directing appreciation and recognition to people whose financial contributions allow us to have good things (see the section below on "What do you get from donating to Lightcone?" [LW · GW]).

- ^

What does "additional" mean here? That's of course quite tricky, since it's really hard to establish what would have happened if we hadn't worked on LessWrong. I am not trying to answer that tricky question here, I just mean "more content was posted to LW".

- ^

As a quick rundown: Shane Legg is a DeepMind cofounder and early LessWrong poster [LW · GW] directly crediting Eliezer for working on AGI. Demis has also frequently referenced LW ideas and presented at both FHI and the Singularity Summit. OpenAI's founding team and early employees were heavily influenced by LW ideas (and Ilya was at my CFAR workshop in 2015). Elon Musk has clearly read a bunch of LessWrong, and was strongly influenced by Superintelligence, which itself was heavily influenced by LW. A substantial fraction of Anthropic's leadership team actively read and/or write on LessWrong.

- ^

For a year or two I maintained a simulated investment portfolio at investopedia.com/simulator/ with the primary investment thesis "whenever a LessWrong comment with investment advice gets over 40 karma, act on it". I made 80% returns over the first year (half of which was buying early shorts in the company "Nikola" after a user posted a critique of them on the site).

After loading up half of my portfolio on some option calls with expiration dates a few months into the future, I then forgot about it, only to come back to see all my options contracts expired and value-less, despite the sell-price at the expiration date being up 60%, wiping out most of my portfolio. This has taught me both that LW is amazing alpha for financial investment, and that I am not competent enough to invest on it (luckily other people [LW(p) · GW(p)] have [LW(p) · GW(p)] done [LW · GW] reasonable [LW(p) · GW(p)] things [LW(p) · GW(p)] based on things said on LW and do now have a lot of money, so that's nice, and maybe they could even donate some back to us!)

- ^

This example is especially counterfactual on Lightcone's work. Gwern wrote the essay at a retreat hosted by Lightcone, partly in response to people at the retreat saying they had a hard time visualizing a hard AI takeoff; and Garrett Baker was a MATS fellow at office space run by Lightcone and provided (at the time freely) to MATS.

- ^

It might be a bit surprising to read that I expect the upkeep costs to stay the same, despite revenue increasing ~35%. The reason I expect this is that I see a large number of inefficiencies in our upkeep, and we also had a number of fixed costs that we had to pay this year that I don't expect to need to pay next year.

- ^

Yes, I know that you for some reason aren't supposed to use the word "feature" to describe improvements to anything but software, but it's clearly the right word.

"We shipped the recording feature in Eigen Hall and Ground Floor Bayes, you can now record your talks by pressing the appropriate button on the wall iPad"

- ^

Leverage Research had started the tradition of annual EA conferences 2-3 years earlier, under the name "EA Summit". CEA took over that tradition in 2014/2015 and started the "EA Global" series, which has been the annual EA conference since then (after coordinating some with Leverage about this, though I am confused on how much).

- ^

Austin Chen from Manifold, Manifund and Manifest says: