Comments

By penalizing the reward hacks you can identify, you’re training the AI to find reward hacks you can’t detect, and to only do them when you won’t detect them.

I wonder if it would be helpful to penalize deception only if the CoT doesn't admit to it. It might be harder to generate test data for this since it's less obvious, but hopefully you'd train the model to be honest in CoT?

I'm thinking of this like the parenting strategy of not punishing children for something bad if they admit unprompted that they did it. Blameless postmortems are also sort-of similar.

Consider instead that Trump was elected with over 50% of the popular vote. Perhaps there are more fundamental cultural factors at play than the method used to count ballots.

Winning the popular vote in the current system doesn't tell you what would happen in a different system. This is the same mistake people make when they talk about who would have won if we didn't have an electoral college: If we had a different system, candidates would campaign differently and voters would vote differently.

I doubt this organization could get 501(c) status since its only purpose is to make political donations (and it only matters whether the organization you donate to is 501(c); it doesn't matter if they then re-grant it to another charitable organization). I'm not an expert on this though.

The value of the startup is only loosely correlated with being positive for AI safety (capabilities are valuable, but they're not the only valuable thing). Ideally the startup would be worth billions if and only if AI safety was solved.

I'd like to learn more Spanish words but have trouble sitting down to actually do language lessons, so I recently set my Claude "personal preferences" to:

Try to teach a random Spanish word in every conversation.

(This is the whole thing)

This has worked surprisingly well, and Claude usually either drops one word in Spanish with a translation midway through a response:

For your specific situation, I recommend a calibración (calibration) approach:

2. Accounting for concurrency: Ensure you're capturing all hilos (threads) involved in query execution, especially for parallel queries.

(From a conversation about benchmarking)

Or it ends the conversation with a fun fact:

¡Palabra en español! "Herramienta" - which means "tool" in Spanish, quite relevant to your search for tools to automate SSH known_hosts management.

La palabra española para hoy es "configurar" - which means "to configure" in English, fitting perfectly with our discussion about configurable thinking limits!

I don't know if this is actually useful for learning, but it's fun and worked better than I expected.

My wife tried a similar prompt (although her preferences are much longer) and it made Claude sometimes respond entirely in Spanish, so this could probably be made more specific. If you run into that, maybe "Respond in English, but try to teach a random Spanish word in every conversation" would work better?

It used to be that we had a two-tiered citizenry: one class owned and controlled the nation’s government (the nobility) and one class merely worked for said nation (the laborers). Then we decided that the laborers should also partially own and control the government. However, this practice was not extended to the workplace, which remains in that classic hierarchy to this day; with one class owning and controlling the firm, while the other class merely works for it.

This is not true? There are no legal restrictions on what class of people can own and control firms. Many worker-owned co-ops exist[1], and even among public corporations, around 40% of stock is held by workers in retirement accounts[2]. In some industries, it's very common to receive stock as compensation too. A lot of small businesses are tautologically worker-owned since they only have one employee (the owner).

Just because we don't legally mandate that every business is a co-op doesn't mean they aren't legal and don't exist.

[1] I suspect very large worker-owned co-ops are uncommon since the value of a slice of ownership goes down as the size increases, but there are no legal restrictions on the size of a co-op.

[2] This is an underestimate of stock owned by workers since it doesn't include taxable savings, but it would be hard to separate wage labor from the labor of creating and running companies in taxable accounts. Retirement accounts should be representative of 'normal workers' since there are low per-person caps and it's hard to fund them with anything except wages.

This post prompted me to look into more general purpose solutions to this, since it seems like "SSH into an IP that's known to be owned by a public cloud" should be fully automated at this point. We know which IPs are part of AWS and we can fetch the host keys securely using the AWS CLI (or helper tools like this). We should be able to do the same over HTTPS for GitHub, Azure, Google Cloud, etc.

It's surprising to me that no one seems to have made a general-purpose CLI or SSH plugin (if that's a thing) for this. Google Cloud has a custom CLI that does this but it obviously only works for their servers.
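As a rough sketch of the first half of that idea (deciding whether an IP belongs to a known cloud before trusting any fetched host key), something like the following would work using only Python's standard `ipaddress` module. The provider names, the CIDR blocks, and the `provider_for` helper are all made-up placeholders for illustration. A real tool would load each provider's published range list instead, and would still need to fetch the host keys over an authenticated channel.

```python
import ipaddress
from typing import Optional

# Placeholder ranges (RFC 5737 documentation blocks), NOT real cloud ranges.
# A real tool would load these from each provider's published list.
KNOWN_RANGES = {
    "example-cloud-a": ["192.0.2.0/24"],
    "example-cloud-b": ["198.51.100.0/24", "203.0.113.0/25"],
}


def provider_for(ip: str) -> Optional[str]:
    """Return the name of the provider whose ranges contain ip, if any."""
    addr = ipaddress.ip_address(ip)
    for provider, cidrs in KNOWN_RANGES.items():
        if any(addr in ipaddress.ip_network(cidr) for cidr in cidrs):
            return provider
    return None
```

Only when `provider_for` returns a provider would the tool go on to look up host keys through that provider's API; anything else falls back to the normal SSH prompt.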

I think normal people sort files into folders (and understand filesystems) less than you'd expect. On second thought though, I think you're proposing something less confusing than I initially thought. I think a general-purpose memory-category-tagging system would be way too confusing for users, but "you can create conversation categories and memory will only apply to other conversations in that category" is probably reasonable.

This sounds like the kind of thing power users would like but normal people would find confusing, like how Google+ was really cool for the nerds who were into it, but most people prefer to just have one list of friends on social networks.

One downside of this is that charitable donations are typically tax deductible, but political donations aren't. Whether this matters depends on tax brackets and whether the donor is going to itemize, but I imagine it would make it harder to convince people.

I saw that there is a "friend" option for tickets, and kids are also allowed. How likely is a friend or spouse who doesn't read these blogs to enjoy coming along? Did a lot of people bring friends/spouses last time?

I'm not sure if this is helpful (you might already know), but in Let's Think Dot By Dot, they found that LLMs could use filler tokens to improve computation, but they had to be specially trained for it to work. By default the extra tokens didn't help.

We haven't really established why OpenBrain's market dominance is inevitable.

I think they gave OpenBrain a generic name to indicate that they don't know which company this would be, so I think it's tautologically defined that OpenBrain is dominant because the dominant company is the one we're looking at.

This market seems valuable, but it depends on what you're using it for. "Experts can't predict what this politician is going to do" is useful information. Also it seems like a lot of voters were assuming the chance of Trump implementing major tariffs was tiny, so updating toward 50% would have helped them.

I’ve had emails ignored, responses that amount to “this didn’t come from the right person,” and the occasional reply like this one, from a very prominent member of AI safety:

“Without reading the paper, and just going on your brief description…”

That’s the level of seriousness these ideas are treated with.

I only had time to look at your first post, and then only skimmed it because it's really long. Asking people you don't know to read something of this length is more than you can really expect. People are busy and you're not the only one with demands on their time.

I would advise trying to put something at the beginning to help people understand what you're about to cover and why they should care about it. For the capitalism post, I agree with most of what you said (although some of your bullet points are unsupported assertions), but I still don't know what I'm supposed to take out of this, since ending capitalism isn't tractable, and (as you mention in regards to governments) non-capitalism doesn't help.

This seems to explain a lot about why Altman is trying so hard both to make OpenAI for-profit (to more easily raise money with that burn rate) and why he wants so much bigger data centers (to keep going on "just make it bigger").

Due to an apparently ravenous hunger among our donor base for having benches with plaques dedicated to them, and us not actually having that many benches, the threshold for this is increased to $2,000.

Given the clear mandate from the community, when do you plan to expand Lighthaven with a new Hall of Benches, and how many benches do you think you can fit in it?

I think it's more that learning to prioritize effectiveness over aesthetics will make you a more effective software engineer. Sometimes terrible languages are the right tool for the job, and I find it gives me satisfaction to pick the right tool even if I wish we lived in a world where the right tool was also the objectively best language (OCaml, obviously).

This economist thinks the reason is that inputs were up in January and the calculation is treating that as less domestic production rather than increased inventories:

OK, so what can we say about the current forecast of -2.8% for Q1 of 2025? First, almost all of the data in the model right now are for January 2025 only. We still have 2 full months in the quarter to go (in terms of data collection). Second, the biggest contributor to the negative reading is a massive increase in imports in January 2025.

[...]

The Atlanta Fed GDPNow model is doing exactly that, subtracting imports. However, it’s likely they are doing it incorrectly. Those imports have to show up elsewhere in the GDP equation. They will either be current consumption, or added to business inventories (to be consumed in the future). My guess, without knowing the details of their model, is that it’s not picking up the change in either inventories or consumption that must result from the increased imports.

https://economistwritingeveryday.com/2025/03/05/understanding-the-projected-gdp-decline/
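To make the accounting point in that quote concrete, here's a small sketch using the standard expenditure identity GDP = C + I + G + (X - M). All of the figures are invented for illustration and have nothing to do with the actual GDPNow inputs; the only thing it shows is that extra imports net out once the matching inventory build-up is counted.

```python
def gdp(consumption, investment, government, exports, imports):
    """Expenditure-side GDP identity: C + I + G + (X - M)."""
    return consumption + investment + government + exports - imports


# Invented baseline figures (arbitrary units).
base = gdp(consumption=700, investment=180, government=170, exports=110, imports=160)

extra_imports = 50  # hypothetical surge in imports

# Case 1: imports are subtracted, but the goods are not counted anywhere else.
missed = gdp(700, 180, 170, 110, 160 + extra_imports)

# Case 2: the imported goods sit in business inventories, which are part of
# investment, so the subtraction is exactly offset.
counted = gdp(700, 180 + extra_imports, 170, 110, 160 + extra_imports)

print(base, missed, counted)
```

In the second case the total matches the baseline, which is the quoted author's guess about what the model is failing to pick up.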

I updated this after some more experimentation. I now bake them uncovered for 50 minutes rather than doing anything more complicated, and I added some explicit notes about additional seasonings. I also usually do a step where I salt and drain the potatoes, so I mentioned that in the variations.

During our evaluations we noticed that Claude 3.7 Sonnet occasionally resorts to special-casing in order to pass test cases in agentic coding environments like Claude Code. Most often this takes the form of directly returning expected test values rather than implementing general solutions, but also includes modifying the problematic tests themselves to match the code’s output.

Claude officially passes the junior engineer Turing Test?

But if we are merely mathematical objects, from whence arises the feelings of pleasure and pain that are so fundamental?

My understanding is that these feelings are physical things that exist in your brain (chemical, electrical, structural features, whatever). I think of this like how bits (in a computer sense) are an abstract thing, but if you ask "How does the computer know this bit is a 1?", the answer is that it's a structural feature of a hard drive or an electrical signal in a memory chip.

Allowing for charitable donations as an alternative to simple taxation does shift the needle a bit but not enough to substantially alter the argument IMO.

Not to mention that allowing for charitable donations as an alternative would likely lead to everyone setting up charities for their parents to donate to.

The resistance to such a policy is largely about ideology rather than about feasibility. It is about the quiet but pervasive belief that those born into privilege should remain there.

I don't think this is true at all. There is an ideological argument for inheritance, but it's not the one you're giving.

The ideological argument is that in a system with private property, people should be able to spend the money they earn in the ways they want, and one of the things people most want is to spend money on their children. The important person served by inheritance law is the person who made the money, not their inheritors (who you rightly point out didn't do anything).

Sam Altman is almost certainly aware of the arguments and just doesn't agree with them. The OpenAI emails are helpful for background on this, but at least back when OpenAI was founded, Elon Musk seemed to take AI safety relatively seriously.

Elon Musk to Sam Teller - Apr 27, 2016 12:24 PM

History unequivocally illustrates that a powerful technology is a double-edged sword. It would be foolish to assume that AI, arguably the most powerful of all technologies, only has a single edge.

The recent example of Microsoft's AI chatbot shows how quickly it can turn incredibly negative. The wise course of action is to approach the advent of AI with caution and ensure that its power is widely distributed and not controlled by any one company or person.

That is why we created OpenAI.

They also had a specific AI safety team relatively early on, and mention explicitly the reasons in these emails:

- Put increasing effort into the safety/control problem, rather than the fig leaf you've noted in other institutions. It doesn't matter who wins if everyone dies. Related to this, we need to communicate a "better red than dead" outlook — we're trying to build safe AGI, and we're not willing to destroy the world in a down-to-the-wire race to do so.

They also explicitly reference this Slate Star Codex article, and I think Elon Musk follows Eliezer's twitter.

I don't understand why perfect substitution matters. If I'm considering two products, I only care which one provides what I want cheapest, not the exact factor between them.

For example, if I want to buy a power source for my car and have two options:

- Engine: 100x horsepower, 100x torque

- Horse: 1x horsepower, 10x torque

If I care most about horsepower, I'll buy the engine, and if I care most about torque, I'll also buy the engine. The engine isn't a "perfect substitute" for the horse, but I still won't buy any horses.

Maybe this has something to do with prices, but it seems like that just makes things worse since engines are cheaper than horses (and AIs are likely to be cheaper than humans).
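Here's the engine/horse comparison as a tiny sketch. The linear weighting and the `best_option` helper are my own assumptions for illustration (and it ignores price entirely); the numbers are the ones from the example.

```python
# Specs from the example, in multiples of one horse's horsepower.
OPTIONS = {
    "engine": {"horsepower": 100, "torque": 100},
    "horse": {"horsepower": 1, "torque": 10},
}


def best_option(weights):
    """Pick the option with the highest weighted score (assumed linear)."""
    def score(name):
        return sum(weights[k] * OPTIONS[name][k] for k in weights)
    return max(OPTIONS, key=score)


# Whatever mix of horsepower and torque the buyer cares about,
# the engine comes out ahead because it is better on both axes.
for hp_weight in (1.0, 0.5, 0.0):
    weights = {"horsepower": hp_weight, "torque": 1.0 - hp_weight}
    print(weights, "->", best_option(weights))
```

The two options aren't interchangeable, but one dominates the other on every axis the buyer cares about, so the buyer never picks the horse.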

Location: Remote. Timaeus will likely be located in either Berkeley or London in the next 6 months, and we intend to sponsor visas for these roles in the future.

Will all employees be required to move to Berkeley or London, or will they have the option to continue working remotely?

I think the biggest tech companies collude to fix wages so that they are sufficiently higher than every other company's salaries to stifle competition

The NYT article you cite says the exact opposite, that Big Tech companies were sued for colluding to fix wages downward, not upward. Why would engineers sue if they were being overpaid?

It seems like the big players already have plans to cut Nvidia out of the loop though.

And while they seem to have the best general purpose hardware, they're limited by competition with AMD, Apple, and Qualcomm.

I rice my potatoes while they're still burning hot, which is annoying, but I'm impatient and it means the result is still warm. If you're (reasonably) waiting for the potatoes to cool down, you might be able to re-heat them in the microwave or on the stove without too much of a change to texture, although you'd have to be careful about how you stir it.

Doesn't the stand mixer method overmix and produce glue-y mashed potatoes? I actually don't mind that texture but I thought that's why people don't usually do it that way.

I also like Yukon Golds best in mashed potatoes, but I use a ricer (similar to this one).

I get the 10 lbs bags at Costco (usually buying 20 lbs at a time). Are the Trader Joe's ones noticeably better tasting? I'd love to try more potato varieties but no one seems to sell anything more interesting unless I want tiny colorful potatoes that cost $10/lb.

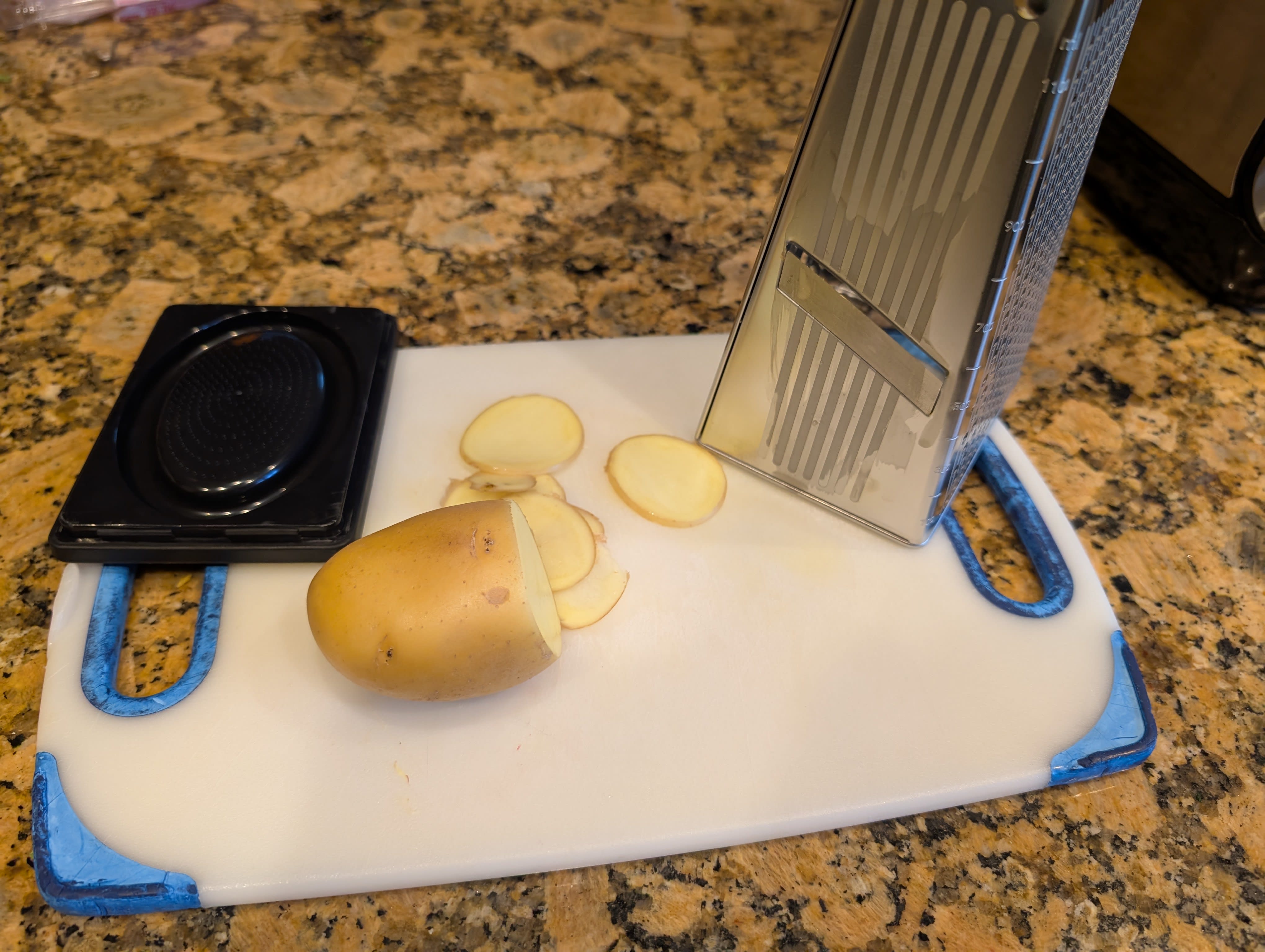

I actually have this exact box grater so I sacrificed a potato for science and determined:

- The thickness looks a little smaller than I usually do but should be fine.

- It's not quite as sharp as my mandoline so it might get tiring.

- The slicer is very small, so you might have trouble with large potatoes. I usually cut potatoes on the small side (for smaller slices) anyway, so this might not be a problem.

- You should get something to protect your hand since you'll definitely cut the tip of your finger off if you slice 6 lbs of potatoes like this without a guard.

I agree that more crispy bits is good. The recipe above optimizes for not being annoying to make, but doing the exact same thing and spreading the mixture on two sheet pans might work (and it would probably have a much shorter bake time).

I suspect the crispier version would be harder to store and wouldn't reheat as well though.

That's a good point. I don't really know what I'm doing, so I'm not able to predict exact variations. I found that this worked relatively consistently no matter how I cooked it, but the version in the recipe above was the best.

I definitely endorse changing the recipe based on how it goes:

- If it's not crispy enough, bake it longer uncovered, or increase the temperature, or move the pan closer to the top of the oven.

- If the internal texture is crunchy/uncooked, bake it (covered) longer.

- If the internal texture is too mushy, bake it (covered) shorter. You could also make the inside crispier by discarding the liquid released when you salt the potatoes, but you'd also need to adjust the amount of salt to make it taste good, and it would affect how it cooks.

Wouldn't the FDA not really be a blocker here, since doctors are allowed to prescribe medications off-label? It sounds more like a medical culture (or liability) thing, although I guess they kind-of interact since using FDA-approved medications in the FDA-approved way is (probably?) a good way to avoid liability issues.

I'm planning to donate $1000[1] but not until next year (for tax purposes). If there were a way to pledge that, I would.

[1] I'm committing to donating $1000 but it might end up being more when I actually think through all of the donations I plan to do next year.

I showed up and some other people were in the room :(

I'm finishing up packing but won't make it there until 2:15 or so.

Haha, well that dosage would probably cause weight loss.

All of the sources I can find give the density as exactly 4 oz = 1/2 cup, although maybe this is just an approximation that's infecting other data sources?

https://www.wolframalpha.com/input?i=density+of+butter+*+(1%2F2+cup)+in+ounces

But 1/2 cup of butter weighs 4 ounces according to every source I can find: https://www.wolframalpha.com/input?i=density+of+butter+*+(1%2F2+cup)+in+ounces

Which means a 4 ounce stick of butter is 1/2 cup by volume.

It sounds like 1/2 cup of butter (8 tbsp) weighs 4 oz, so shouldn't this actually work out so each of those sections actually is 1 tbsp in volume, and it's just a coincidence (or not) that the density of butter is 1 oz / 2 tbsp (about 1 oz per fl oz)?
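Spelling out the arithmetic, as a quick sketch: the only inputs are the US customary conversions (16 tbsp and 8 fl oz per cup) and the 4 oz = 1/2 cup figure from the sources above, which may itself be a rounded approximation.

```python
TBSP_PER_CUP = 16    # US customary
FL_OZ_PER_CUP = 8    # US customary

stick_weight_oz = 4      # weight of one stick
stick_volume_cups = 0.5  # volume of one stick, per the figure quoted above

stick_tbsp = stick_volume_cups * TBSP_PER_CUP    # tablespoons per stick
stick_fl_oz = stick_volume_cups * FL_OZ_PER_CUP  # fluid ounces per stick

oz_per_tbsp = stick_weight_oz / stick_tbsp       # weight of one marked section
oz_per_fl_oz = stick_weight_oz / stick_fl_oz     # implied density

print(stick_tbsp, oz_per_tbsp, oz_per_fl_oz)
```

So if the 4 oz figure holds, each of the 8 marked sections is 1 tbsp by volume and half an ounce by weight.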

The problem is that lack of money isn't the reason there's not enough housing in places that people want to live. Zoning laws intentionally exclude poor people because rich people don't want to live near them. Allocating more money to the problem doesn't really help (see: the ridiculous amount of money California spends on affordable housing), and if you fixed the part where it's illegal, the government spending isn't necessary because real estate developers would build apartments without subsidies if they were allowed to.

Also, the most recent election shows that ordinary people really, really don't like inflation, so I don't think printing trillions of dollars for this purpose is actually more palatable.

You're right, I was taking the section saying "In this new system, the only incentive to do more and go further is to transcend the status quo in some way, and earn recognition for a unique contribution." too seriously. On a second re-read, it seems like your proposal is actually just to print money to give people food stamps and housing vouchers. I think the answer to why we don't do that is that we do that.

Food is essentially a solved problem in the United States, and the biggest problem with housing vouchers is that there physically isn't enough housing in some areas. Printing more money doesn't cause more housing to exist (it could change incentives, but incentives don't matter much when building housing for poor people is largely illegal).

I think you've re-invented Communism. The reason we don't implement it is that in practice it's much worse for everyone, including poor people.

I'll try to make it but I might be moving that day so I'm not sure :\

Finally, note to self, probably still don’t use SQLite if you have a good alternative? Twice is suspicious, although they did fix the bug same day and it wasn’t ever released.

But is this because SQLite is unusually buggy, or because its code is unusually open, short and readable and thus understandable by an AI? I would guess that MySQL (for example) has significantly worse vulnerabilities but they're harder to find.

I don't know anything about you in particular, but if you know alignment researchers who would recommend you, could you get them to refer you either internally or through their contacts?

This is actually why a short position (a complicated loan) would theoretically work. If we all die, then you, as someone else's counterparty, never need to pay your loan back.

(I think this is a bad idea, but not because of counterparty risk)