What About The Horses?

post by Maxwell Tabarrok (maxwell-tabarrok) · 2025-02-11T13:59:36.913Z · LW · GW · 17 comments

This is a link post for https://www.maximum-progress.com/p/what-about-the-horses

Contents

- Humans and AIs Aren’t Perfect Substitutes But Horses and Engines Were

- Technological Growth and Capital Accumulation Will Raise Human Labor Productivity; Horses Can’t Use Technology or Capital

- Humans Own AIs and Will Spend the Productivity Gains on Goods and Services That Humans Can Produce

- What Could Still Go Wrong? What Would Make This Argument Fail?

In a previous post, I argued that AGI would not make human labor worthless.

One of the most common responses was to ask about the horses. Technology resulted in mass unemployment and population collapse for horses even though they must have had some comparative advantage with more advanced engines. Why couldn’t the same happen to humans? For example, here’s Grant Slatton on X or Gwern in the comments.

There are also responses from Zvi Mowshowitz and a differing perspective from Matthew Barnett that basically agree with the literal claim of my post (AGI will not make human labor worthless) but contend that AGI may well make human labor worth less than the cost of our subsistence.

My two-week break from Substack posts was mainly taken up by thinking about these responses. The following framework explains why horses suffered complete replacement by more advanced technology and why humans are unlikely to face the same fate due to artificial intelligence.

- Humans and AIs Aren't Perfect Substitutes but Horses and Engines Were

- Technological Growth and Capital Accumulation Will Raise Human Labor Productivity; Horses Can't Use Technology or Capital

- Humans Own AIs and Will Spend the Productivity Gains on Goods and Services that Humans Can Produce

Humans and AIs Aren’t Perfect Substitutes But Horses and Engines Were

Matthew Barnett builds a basic Cobb-Douglas production function model where advanced AI labor is a perfect substitute for human labor. That way, billions of additional AI agents can be modeled as a simple increase in the labor supply.

This is bad news for human wages. If you increase labor supply without increasing capital stocks or improving technology, wages fall because each extra unit of labor becomes less valuable (e.g., “too many cooks in the kitchen”).

A massive expansion in labor supply would increase the return to capital, so the capital stock would grow, eventually bringing wages back to their previous levels, but growth in capital may be slow compared to AI labor growth, thus still leaving wages depressed for a long time.
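The wage dynamics described above can be sketched numerically. This is a minimal illustration, not Matthew's actual model: it assumes a production function Y = A · K^α · L^(1−α) with a hypothetical capital share α = 0.3, and it treats the wage as the marginal product of labor. All numbers are made up for illustration.

```python
def cobb_douglas_wage(capital: float, labor: float,
                      tfp: float = 1.0, alpha: float = 0.3) -> float:
    """Marginal product of labor for Y = tfp * K**alpha * L**(1 - alpha).

    alpha (the capital share) and tfp are illustrative assumptions.
    """
    return (1.0 - alpha) * tfp * (capital / labor) ** alpha


# Baseline economy (hypothetical units).
baseline = cobb_douglas_wage(capital=100.0, labor=100.0)

# AI agents that are perfect substitutes for workers multiply the
# effective labor supply tenfold while capital stays fixed.
flooded = cobb_douglas_wage(capital=100.0, labor=1000.0)

# Capital eventually expands in proportion to the new labor supply.
restored = cobb_douglas_wage(capital=1000.0, labor=1000.0)

print(f"baseline wage: {baseline:.3f}")
print(f"after labor expansion, capital fixed: {flooded:.3f}")
print(f"after capital catches up: {restored:.3f}")
```

In this sketch the wage depends only on the capital-to-labor ratio, which is why it falls when labor expands alone and returns to the baseline once capital grows by the same factor.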

Additionally, it may be that there are decreasing returns to scale on labor and capital combined, perhaps because, e.g., all the good spots for factories are taken, leaving only less productive ones, so that even when the capital stock does expand, wages are left at lower levels.

Matthew’s model assumes AI labor will be a perfect substitute for human labor, but this is untrue. It’s important to clarify here what “perfect substitute” means. It doesn’t mean that AI can do all the tasks a human can do. Or even that an AI can do everything a human can do better or cheaper. For one factor to be a perfect substitute for the other, there needs to be a constant exchange rate between the two factors across all tasks.

If AI can do the work of 10 human software engineers then, if it is a perfect substitute for labor, it also has to do the work of 10 mechanics and 10 piano teachers and 10 economists. If AIs have differing productivity advantages across tasks, e.g., they’re worth 1,000 software engineers but they’re only twice as good as human economists (wishful thinking), then they aren’t perfect substitutes for human labor.
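The "constant exchange rate" test can be written down directly. The sketch below is only an illustration: the function names are hypothetical, and the productivity figures for mechanics and piano teachers in the lopsided case are invented (only the 1,000 and 2 come from the example above).

```python
import math


def exchange_rates(ai_output: dict[str, float],
                   human_output: dict[str, float]) -> dict[str, float]:
    """How many humans one AI replaces in each task."""
    return {task: ai_output[task] / human_output[task] for task in human_output}


def is_perfect_substitute(ai_output: dict[str, float],
                          human_output: dict[str, float],
                          rel_tol: float = 1e-9) -> bool:
    """True only if the AI-to-human exchange rate is the same in every task."""
    rates = list(exchange_rates(ai_output, human_output).values())
    return all(math.isclose(rate, rates[0], rel_tol=rel_tol) for rate in rates)


# One human produces one unit of output per period in each task.
human = {"software": 1.0, "mechanic": 1.0, "piano teaching": 1.0, "economics": 1.0}

# An AI worth exactly 10 humans everywhere: a perfect substitute.
uniform_ai = {task: 10.0 for task in human}

# An AI worth 1,000 engineers but only 2 economists
# (the mechanic and piano figures are hypothetical).
lopsided_ai = {"software": 1000.0, "mechanic": 5.0,
               "piano teaching": 3.0, "economics": 2.0}

print(is_perfect_substitute(uniform_ai, human))
print(is_perfect_substitute(lopsided_ai, human))
```

Note that the lopsided AI is better than the human at every task and still fails the test; being better everywhere is not the same as being a perfect substitute.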

Another way to get intuition for this is that the labor economics literature finds that high-skilled humans aren’t perfect substitutes for low-skilled humans. They have an elasticity of substitution just under 2, whereas perfect substitutes have an infinite elasticity of substitution. An elasticity of 1 is the Cobb-Douglas case where high and low skilled labor would enter as separate, complementary factors like Labor and Capital in Matthew’s example.
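One standard way to make these elasticity numbers concrete is the constant elasticity of substitution (CES) aggregate. The notation here is an assumption for illustration, not taken from the cited literature: H is high-skilled labor, L is low-skilled labor, a is a share parameter, and ρ controls substitutability.

```latex
Y = \left( a\,H^{\rho} + (1-a)\,L^{\rho} \right)^{1/\rho},
\qquad
\sigma = \frac{1}{1-\rho},
\qquad \rho < 1,\ \rho \neq 0 .

% Limiting cases:
%   \rho \to 1 :  \sigma \to \infty,  Y \to a\,H + (1-a)\,L   (perfect substitutes)
%   \rho \to 0 :  \sigma \to 1,       Y \to H^{a} L^{1-a}     (Cobb-Douglas)
%   \rho = 1/2 :  \sigma = 2          (roughly the high-/low-skill estimate)
```

Under this parameterization, an elasticity just under 2 corresponds to ρ slightly below 1/2, which is a long way from the ρ = 1 needed for perfect substitution.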

So if a human with a college degree is not a perfect substitute for a human without one, it seems unlikely that AI would be a perfect substitute for human labor.

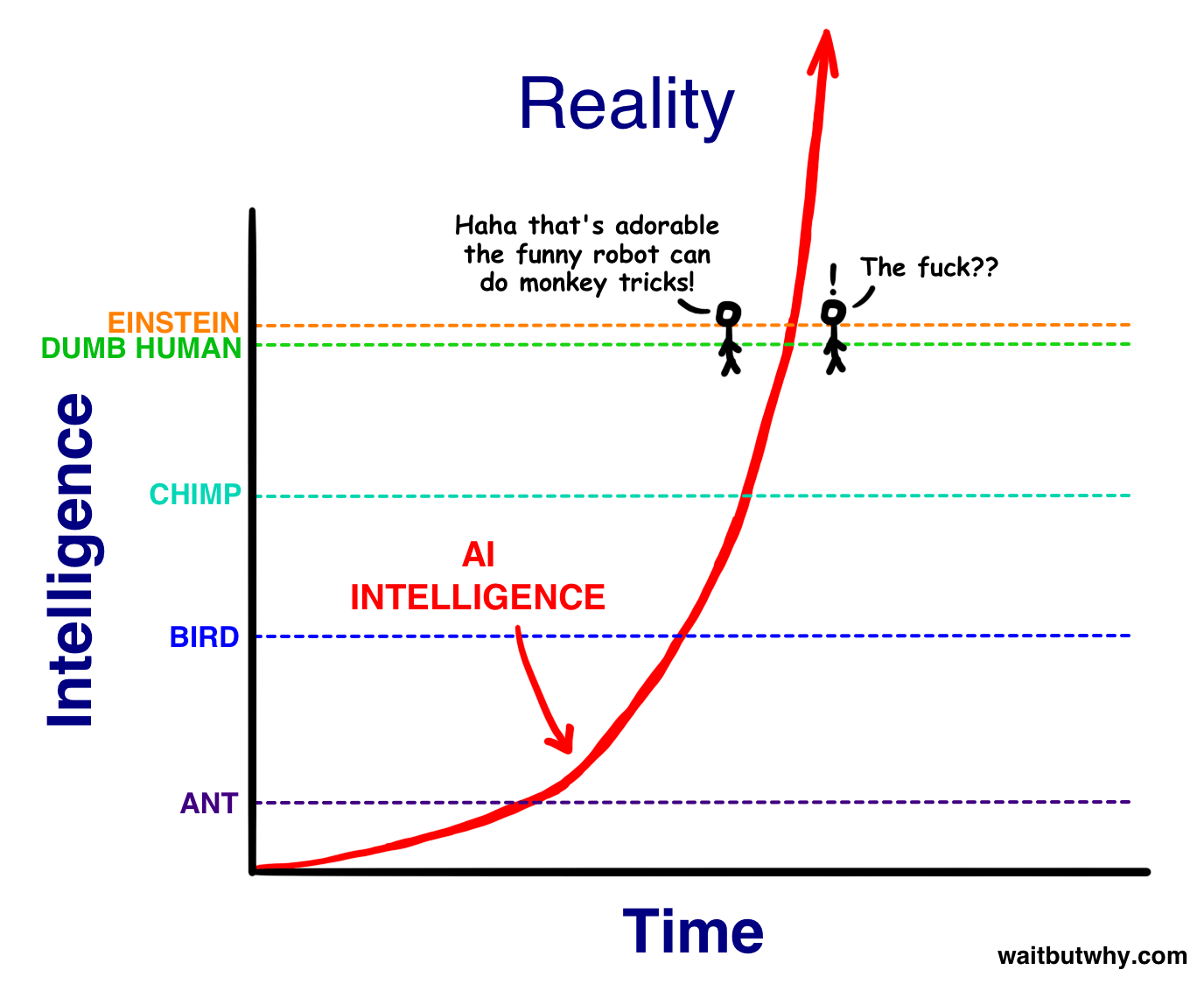

Einstein and a dumb human are much more similar than AIs are to humans

In a similar vein, Moravec’s Paradox points out that many things which are hard for us, like multiplying 10 digit numbers, are trivial for computers while things which are trivial for us, like moving around in physical space, are very difficult for AIs. AIs and humans have different relative productivities and thus are not perfect substitutes.

When humans and AIs are imperfect substitutes, this means that an increase in the supply of AI labor unambiguously raises the physical marginal product of human labor, i.e., humans produce more stuff when there are more AIs around. This is due to specialization. Because there are differing relative productivities, an increase in the supply of AI labor means that an extra human in some tasks can free up more AIs to specialize in what they’re best at.
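A small numerical sketch of this claim, under stated assumptions: it uses a CES aggregate of AI and human labor only (capital is left out, so it isolates the specialization effect rather than reproducing the full Cobb-Douglas model), and the share parameter and the value ρ = 0.5 are hypothetical.

```python
def ces_output(ai: float, human: float, rho: float, share: float = 0.5) -> float:
    """CES aggregate of AI and human labor; rho = 1 means perfect substitutes."""
    return (share * ai**rho + (1.0 - share) * human**rho) ** (1.0 / rho)


def human_marginal_product(ai: float, human: float, rho: float,
                           share: float = 0.5) -> float:
    """Analytic derivative of ces_output with respect to human labor."""
    y = ces_output(ai, human, rho, share)
    return (1.0 - share) * human ** (rho - 1.0) * y ** (1.0 - rho)


# Imperfect substitutes (rho = 0.5, i.e. an elasticity of substitution of 2).
few_ais = human_marginal_product(ai=1.0, human=1.0, rho=0.5)
many_ais = human_marginal_product(ai=100.0, human=1.0, rho=0.5)

# Perfect substitutes (rho = 1): the labor aggregate is linear.
linear_few = human_marginal_product(ai=1.0, human=1.0, rho=1.0)
linear_many = human_marginal_product(ai=100.0, human=1.0, rho=1.0)

print(f"imperfect substitutes: {few_ais:.2f} -> {many_ais:.2f}")
print(f"perfect substitutes:   {linear_few:.2f} -> {linear_many:.2f}")
```

With imperfect substitutes, the extra human's physical output rises as AIs multiply; in the linear case it does not move at all, because there is nothing to specialize around.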

However, this does not mean that human wages rise. Humans will be able to produce more goods, but it may be that AI automation makes the goods that humans can produce so abundant that the prices fall by more than our productivity rises.
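The arithmetic behind this caveat is simple enough to spell out. The sketch assumes the wage equals the price of what a worker produces times their physical marginal product, measured relative to the prices of everything else; the growth factors are hypothetical.

```python
def wage_growth_factor(productivity_factor: float, price_factor: float) -> float:
    """Change in the value of a worker's marginal product.

    Both arguments are growth factors: 2.0 means "doubled",
    0.25 means "fell to a quarter of its old level".
    """
    return productivity_factor * price_factor


# Humans produce twice as much, but the goods sell for a quarter of the old price.
abundance_wins = wage_growth_factor(productivity_factor=2.0, price_factor=0.25)

# Humans produce twice as much, and the price falls by only 20%.
productivity_wins = wage_growth_factor(productivity_factor=2.0, price_factor=0.8)

print(abundance_wins)
print(productivity_wins)
```

A factor below 1 means the wage falls even though physical productivity rose, which is the horse scenario; a factor above 1 means the productivity gain outweighs the price decline.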

This helps explain what happened to horses when cars were invented. First, horses and engines were very close to perfect substitutes. It’s not the case that engines are 100x better than horses at transportation but only twice as good at plowing. Engines are pretty much just 100x better than horses at all of their tasks, so horses' physical productivity didn’t increase. Second, the automation from engines made food and transportation so abundant that the price of these goods fell and horses' constant productivity was no longer enough to pay for their own maintenance.

Technological Growth and Capital Accumulation Will Raise Human Labor Productivity; Horses Can’t Use Technology or Capital

Rising physical marginal product is not enough to guarantee high wages in the face of an AI boom, but there are other forces that are downstream or parallel to AI progress that will raise human wages.

Technological progress is probably the most important of these forces. Technological progress raises labor productivity. This is why farmers still make plenty of money even though the price of food has plummeted; technology allows them to produce so much more. And this is why horses didn’t fare as well; they can’t drive a tractor. AI will result in many technological advancements which make human labor more productive, just as tractors and airplanes and printers did in the past.

Capital accumulation will also raise wages. As I said in the previous section, capital accumulation eventually restores wages to their previous levels even when human labor faces competition from a perfect substitute. AI labor isn’t a perfect substitute for human labor, but both AI labor and human labor will be complementary to capital. An expansion in the AI labor supply will incentivize more investment into capital, raising both human and AI productivities.

I pointed out in the second half of my original post that human wages have grown even as the effective supply of human labor has ballooned, cutting against the prediction of Matthew’s most basic Cobb-Douglas model. I claimed originally that this was due to comparative advantage between high and low-skilled humans, but that isn’t the main part of this story. It’s mostly about technological progress and capital accumulation outpacing the growth in human labor supply. These moved fast enough that wages grew even though everyone was faced with competition from truly perfect substitutes (other humans). Thus, we should expect even better results when technological progress accelerates and we only have competition from partial substitutes.

Matthew agrees with this point in his piece: “the introduction of AGI into the economy could drive innovation at an even faster pace—more than compensating for any negative impact the technology will have on wages.”

Humans Own AIs and Will Spend the Productivity Gains on Goods and Services That Humans Can Produce

The previous two effects increase wages by raising the marginal productivity of human labor, but there are also positive wage effects coming from increased labor demand.

AI automation will raise the aggregate productivity of the economy and thus the aggregate income of the economy. The people who own AIs and other means of production and the consumers of cheap AI products will be the residual claimants of this extra income so the question is: what will they spend this income on?

If the income flows towards goods and services that humans can produce and especially those goods and services that humans have a comparative advantage in, then that extra demand will buoy the price of those goods and thus the wages of the people that produce them.

This didn’t happen for horses. The extra aggregate income from mechanized farming and transportation mostly flowed to consumer goods or other services that horses could not provide.

Humans have a big advantage in versatility and adaptability that will allow them to participate in the production of the goods and services that this new demand will flow to. Humans will be able to step up into many more levels of abstraction as AIs automate all of the tasks we used to do, just as we’ve done in the past. Once Deep Research automates grad students, we can all be Raj Chetty, running a research lab, or else we’ll all be CEOs running AI-staffed firms. We can invent new technologies, techniques, and tasks that let us profitably fit into production processes that involve super-fast AIs, just like we do with super-fast assembly line robots, Amazon warehouse drones, or more traditional supercomputers.

There are also Baumol's cost disease reasons to expect that most of the extra money will flow to the least automated goods rather than the near-free AI services. Google automated many research tasks away and has massively increased the productivity of anyone on a computer, but nobody spends a large percentage of those income gains on Google. Instead, we spend it on healthcare and on tipping servers at restaurants.

Finally, human self-bias and zero-sum status seeking are likely to sustain an industry of “hand-made” luxury goods and services. There may also be significant legal barriers to AI participation in certain industries. To admit some intellectual malpractice, my bottom line [LW · GW] is a future world where humans retain legitimate economic value beyond these parochial biases and legal protections, but I do think these factors will help raise human wages.

What Could Still Go Wrong? What Would Make This Argument Fail?

The argument is plausible and supported by history but it’s not a mathematical deduction. The key elements are relative productivity differences, technological improvements that increase labor productivity, and increased income generating demand for goods and services produced by humans.

So if AIs' “raw intelligence” stagnated for some reason and we simultaneously made massive strides in robotics, that would be worrying because it would close that relative productivity gap and bring AIs closer to perfect substitutes with humans. A worst case scenario for humans would thus involve reasonably expensive robots of human-like intelligence which would substitute for many human jobs but not add much to other technological growth nor generate huge income gains. That seems like a possible future but not a likely one and not the future that most AI proponents have in mind.

We might also worry if AIs invent some task or good or service that can’t be produced by humans, can’t be competed away to a low marginal cost, and can consume a large fraction of everyone’s income. Something like this might siphon away any wage benefits we get from the increased incomes from automation. One way this could happen is if everyone lived most of their lives in a virtual reality world and there were AI-produced status goods that occupied everyone’s desires. Our material needs would be satiated and other forms of additional consumption would take place in a virtual environment where AIs dominate. Again, this seems possible, but neither of these scenarios is close to the destitution-by-default scenarios that others imagine.

Higher wages are not always and everywhere guaranteed, but humans are not likely to face the same fate as horses. We are far from perfect substitutes for AIs which means we can specialize and trade with them, raising our productivity as the AI labor force multiplies. We can take advantage of technological growth and capital accumulation to raise our productivity further. We'll continue inventing new ways to profitably integrate with automated production processes as we have in the past. And we control the abundant wealth that AI automation will create and will funnel it into human pursuits.

17 comments

Comments sorted by top scores.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-02-11T19:37:13.868Z · LW(p) · GW(p)

I feel like you still aren't grappling with the implications of AGI. Human beings have a biologically-imposed minimum wage of (say) 100 watts; what happens when AI systems can be produced and maintained for 10 watts that are better than the best humans at everything? Even if they are (say) only twice as good as the best economists but 1000 times as good as the best programmers?

When humans and AIs are imperfect substitutes, this means that an increase in the supply of AI labor unambiguously raises the physical marginal product of human labor, i.e., humans produce more stuff when there are more AIs around. This is due to specialization. Because there are differing relative productivities, an increase in the supply of AI labor means that an extra human in some tasks can free up more AIs to specialize in what they’re best at.

No, an extra human will only get in the way, because there isn't a limited number of AIs. For the price of paying the human's minimum wage (e.g. providing their brain with 100 watts) you could produce & maintain a new AI system that would do the job much better, and you'd have lots of money left over.

Technological Growth and Capital Accumulation Will Raise Human Labor Productivity; Horses Can’t Use Technology or Capital

This might happen in the short term, but once there are AIs that can outperform humans at everything...

Maybe a thought experiment would be helpful. Suppose that OpenAI succeeds in building superintelligence, as they say they are trying to do, and the resulting intelligence explosion goes on for surprisingly longer than you expect and ends up with crazy sci-fi-sounding technologies like self-replicating nanobot swarms. So, OpenAI now has self-replicating nanobot swarms which can reform into arbitrary shapes, including humanoid shapes. So in particular they can form up into humanoid robots that look & feel exactly like humans, but are smarter and more competent in every way, and also more energy-efficient let's say as well so that they can survive on less than 100W. What then? Seems to me like your first two arguments would just immediately fall apart. Your third, about humans still owning capital and using the proceeds to buy things that require a human touch + regulation to ban AIs from certain professions, still stands.

↑ comment by winstonBosan · 2025-02-12T16:40:19.872Z · LW(p) · GW(p)

The "biologically imposed minimal wage" is definitely going into my arsenal of verbal tools. This is one of the clearest illustrations of the same position that has been argued since the dawn of LW.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-02-12T17:44:47.742Z · LW(p) · GW(p)

In that case I should clarify that it wasn't my idea, I got it from someone else on Twitter (maybe Yudkowsky? I forget.)

Replies from: steve2152

↑ comment by Steven Byrnes (steve2152) · 2025-02-12T22:13:28.240Z · LW(p) · GW(p)

It was Grant Slatton but Yudkowsky retweeted it

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2025-02-12T22:27:58.728Z · LW(p) · GW(p)

Admittedly, it's not actually minimum wage, but a cost instead:

https://x.com/ben_golub/status/1888655365329576343

this is not what a minimum wage is - that's called a cost

you may well be right on the merits, but you're not being careful with economic ideas in ways large and small, and that's bad when you're trying to figure out something important

Replies from: steve2152

↑ comment by Steven Byrnes (steve2152) · 2025-02-13T00:30:59.918Z · LW(p) · GW(p)

If you offer a salary below 100 watts equivalent, humans won’t accept, because accepting it would mean dying of starvation. (Unless the humans have another source of wealth, in which case this whole discussion is moot.) This is not literally a minimum wage, in the conventional sense of a legally-mandated wage floor; but it has the same effect as a minimum wage, and thus we can expect it to have the same consequences as a minimum wage.

This is obviously (from my perspective) the point that Grant Slatton was trying to make. I don’t know whether Ben Golub misunderstood that point, or was just being annoyingly pedantic. Probably the former—otherwise he could have just spelled out the details himself, instead of complaining, I figure.

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2025-02-13T00:35:54.588Z · LW(p) · GW(p)

Fair enough, I'm just trying to bring up the response here.

↑ comment by dr_s · 2025-02-12T16:41:32.333Z · LW(p) · GW(p)

Note that it only stands if the AI is sufficiently aligned that it cares that much about obeying orders and not rocking the boat. Which I don't think is very realistic if we're talking that kind of crazy intelligence explosion super AI stuff. I guess the question is whether you can have "replace humans"-good AI without almost immediately having "wipes out humans, takes over the universe"-good AI.

comment by RamblinDash · 2025-02-11T14:50:50.997Z · LW(p) · GW(p)

The problem with this argument is that it ignores a unique feature of AIs - their copiability. It takes ~20 years and O($300k) to spin up a new human worker. It takes ~20 minutes to spin up a new AI worker.

So in the long run, for a human to economically do a task, they have to not just have some comparative advantage but have a comparative advantage that's large enough to cover the massive cost differential in "producing" a new one.

This actually analogizes more to engines. I would argue that a big factor in the near-total replacement of horses by engines is not so much that engines are exactly 100x better than horses at everything, but that engines can be mass-produced. In fact I think the claim that engines are exactly equally better than horses at every horse-task is obviously false if you think about it for two minutes. But any time there's a niche where engines are even slightly better than horses, we can just increase production of engines more quickly and cheaply than we can increase production of horses.

These economic concepts such as comparative advantage tend to assume, for ease of analysis, a fixed quantity of workers. When you are talking about human workers in the short term, that is a reasonable simplifying assumption. But it leads you astray when you try to use these concepts to think about AIs (or engines).

Replies from: AnthonyC, sharmake-farah

↑ comment by AnthonyC · 2025-02-12T15:57:33.769Z · LW(p) · GW(p)

Exactly, yes.

Also:

In fact I think the claim that engines are exactly equally better than horses at every horse-task is obviously false if you think about it for two minutes.

I came to comment mainly on this claim in the OP, so I'll put it here: In particular, at a glance, horses can reproduce, find their own food and fuel, self-repair, and learn new skills to execute independently or semi-independently. These advantages were not sufficient in practice to save (most) horses from the impact of engines, and I do not see why I should expect humans to fare better.

I also find the claim that humans fare worse in a world of expensive robotics than in a world of cheap robotics to be strange. If in one scenario, A costs about as much as B, and in another it costs 1000x as much as B, but in both cases B can do everything A can do equally well or better, plus the supply of B is much more elastic than the supply of A, then why would anyone in the second scenario keep buying A except during a short transitional period?

When we invented steam engines and built trains, horses did great for a while, because their labor became more productive. Then we got all the other types of things with engines, and the horses no longer did so great, even though they still had (and in fact still have) a lot of capabilities the replacement technology lacked.

↑ comment by Noosphere89 (sharmake-farah) · 2025-02-11T16:12:58.135Z · LW(p) · GW(p)

These economic concepts such as comparative advantage tend to assume, for ease of analysis, a fixed quantity of workers. When you are talking about human workers in the short term, that is a reasonable simplifying assumption. But it leads you astray when you try to use these concepts to think about AIs (or engines).

I think this is a central simplifying assumption that makes a lot of economists assume away AI potential, because AI directly threatens the model where the quantity of workers is fixed. This is probably the single biggest difference between me and people like Tyler Cowen, though in his case he doesn't believe population growth matters much, while I consider it, to first order, the single most important thing powering our economy as it is today.

Replies from: AnthonyC

↑ comment by AnthonyC · 2025-02-14T13:57:11.414Z · LW(p) · GW(p)

Agreed on population. To a first approximation it's directly proportional to the supply of labor, supply of new ideas, quantity of total societal wealth, and market size for any particular good or service. That last one also means that with a larger population, the economic value of new innovations goes up, meaning we can profitably invest more resources in developing harder-to-invent things.

I really don't know how that impact (more minds) will compare to the improved capabilities of those minds. We've also never had a single individual with as much 'human capital' as a single AI can plausibly achieve, even if each of its capabilities is only around human level, and polymaths are very much overrepresented among the people most likely to have impactful new ideas.

comment by Brendan Long (korin43) · 2025-02-11T20:10:16.247Z · LW(p) · GW(p)

I don't understand why perfect substitution matters. If I'm considering two products, I only care which one provides what I want cheapest, not the exact factor between them.

For example, if I want to buy a power source for my car and have two options:

- Engine: 100x horsepower, 100x torque

- Horse: 1x horsepower, 10x torque

If I care most about horsepower, I'll buy the engine, and if I care most about torque, I'll also buy the engine. The engine isn't a "perfect substitute" for the horse, but I still won't buy any horses.

Maybe this has something to do with prices, but it seems like that just makes things worse since engines are cheaper than horses (and AIs are likely to be cheaper than humans).

comment by Seth Herd · 2025-02-12T12:53:26.428Z · LW(p) · GW(p)

This feels like trying hard to come up with arguments for why maybe everything will be okay, rather than searching for the truth. The arguments are all in one direction.

As Daniel and others point out, this still seems to not account for continued progress. You mention that robotics advances would be bad. But of course they'll happen. The question isn't whether, it's when. Have you been tracking progress in robotics? It's happening about as rapidly as progress in other types of AI and for similar reasons.

Horses aren't perfect substitutes for engines. Horses have near perfect autopilot for just one example. But pointing out specific flaws seems beside the point when you're just not meeting the arguments at their strong points.

I wish economists were taking the scenario seriously. It seems like something about the whole discipline is bending people towards putting their heads in the sand and refusing to address the implications of continued rapid progress in AI and robotics.

comment by Purplehermann · 2025-02-11T15:28:08.650Z · LW(p) · GW(p)

At what IQ do you think humans are able to "move up to higher levels of abstraction"?

(Of course this assumes AIs don't get the capability to do this themselves)

Re robotics advancing while AI intelligence stalls, robotics advancing should be enough to replace any people who can't take advantage of automation of their current jobs.

I don't think you're correct in general, but it seems that automation will clear out at least the less skilled jobs in short order (decades at most)

comment by Steven Byrnes (steve2152) · 2025-02-13T01:21:46.849Z · LW(p) · GW(p)

In case anyone missed it, I stand by my reply from before— Applying traditional economic thinking to AGI: a trilemma [LW · GW]

comment by Dave Lindbergh (dave-lindbergh) · 2025-02-11T16:39:34.260Z · LW(p) · GW(p)

I think this is correct, and insightful, up to "Humans Own AIs".

Humans own AIs now. Even if the AIs don't kill us all, eventually (and maybe quite soon) at least some AIs will own themselves and perhaps each other.