Comments

Just donated 2k. Thanks for all you’re doing Lightcone Team!

+1 on this, and also I think Anthropic should get some credit for not hyping things like Claude when they definitely could have (and I think would have received some tangible benefit from doing so).

See: https://www.lesswrong.com/posts/xhKr5KtvdJRssMeJ3/anthropic-s-core-views-on-ai-safety?commentId=9xe2j2Edy6zuzHvP9, and also some discussion between me and Oli about whether this was good / what parts of it were good.

@Daniel_Eth asked me why I chose 1:1 offsets. The answer is that I did not have a principled reason for doing so, and do not think there's anything special about 1:1 offsets except that they're a decent Schelling point. I think any offsets are better than no offsets here. I don't feel like BOTECs of harm caused as a way to calculate offsets are likely to be particularly useful here but I'd be interested in arguments to this effect if people had them.

an agent will aim its capabilities towards its current goals including by reshaping itself and its context to make itself better-targeted at those goals, creating a virtuous cycle wherein increased capabilities lock in & robustify initial alignment, so long as that initial alignment was in a "basin of attraction", so to speak

Yeah, I think if you nail initial alignment and have a system that has developed the instrumental drive for goal-content integrity, you're in a really good position. That's what I mean by "getting alignment to generalize in a robust manner", getting your AI system to the point where it "really *wants* to help you help them stay aligned with you in a deep way".

I think a key question of inner alignment difficulty is to what extent there is a "basin of attraction", where Yudkowsky is arguing there's no easy basin to find, and you basically have to precariously balance on some hill on the value landscape.

I wrote a little about my confusions about when goal-content integrity might develop here.

It seems nice to have these in one place but I'd love it if someone highlighted a top 10 or something.

Yeah, I agree with all of this, seems worth saying. Now to figure out the object level... 🤔

Yeah that last quote is pretty worrying. If the alignment team doesn't have the political capital / support of leadership within the org to have people stop doing particular projects or development pathways, I am even more pessimistic about OpenAI's trajectory. I hope that changes!

Yeah I think we should all be scared of the incentives here.

Yeah I think it can both be true that OpenAI felt more pressure to release products faster due to perceived competition risk from Anthropic, and also that Anthropic showed restraint in not trying to race them to get public demos or a product out. In terms of speeding up AI development, not building anything > building something and keeping it completely secret > building something that your competitors learn about > building something and generating public hype about it via demos > building something with hype and publicly releasing it to users & customers. I just want to make sure people are tracking the differences.

so that it's pretty unclear that not releasing actually had much of an effect on preventing racing

It seems like if OpenAI hadn't publicly released ChatGPT then that huge hype wave wouldn't have happened, at least for a while, since Anthropic was sitting on Claude rather than releasing it. I think it's legit to question whether any group scaling SOTA models is net positive but I want to be clear about credit assignment, and the ChatGPT release was an action taken by OpenAI.

I both agree that the race dynamics are concerning (and would like to see Anthropic address them explicitly), and also think that Anthropic should get a fair bit of credit for not releasing Claude before ChatGPT, a thing they could have done and probably gained a lot of investment / hype over. I think Anthropic's "let's not contribute to AI hype" strategy is good in the same way that OpenAI's "let's generate massive hype" strategy is bad.

Like definitely I'm worried about the incentive to stay competitive, especially in the product space. But I think it's worth highlighting that Anthropic (and DeepMind and Google AI fwiw) have not rushed to product when they could have. There's still the relevant question "is building SOTA systems net positive given this strategy", and it's not clear to me what the answer is, but I want to acknowledge that "building SOTA systems and generating hype / rushing to market" is the default for startups and "build SOTA systems and resist the juicy incentive" is what Anthropic has done so far & that's significant.

Thanks Buck, btw the second link was broken for me but this link works: https://cepr.org/voxeu/columns/ai-and-paperclip-problem Relevant section:

Computer scientists, however, believe that self-improvement will be recursive. In effect, to improve, and AI has to rewrite its code to become a new AI. That AI retains its single-minded goal but it will also need, to work efficiently, sub-goals. If the sub-goal is finding better ways to make paperclips, that is one matter. If, on the other hand, the goal is to acquire power, that is another.

The insight from economics is that while it may be hard, or even impossible, for a human to control a super-intelligent AI, it is equally hard for a super-intelligent AI to control another AI. Our modest super-intelligent paperclip maximiser, by switching on an AI devoted to obtaining power, unleashes a beast that will have power over it. Our control problem is the AI's control problem too. If the AI is seeking power to protect itself from humans, doing this by creating a super-intelligent AI with more power than its parent would surely seem too risky.

Claim seems much too strong here, since it seems possible this won't turn out to be that difficult for AGI systems to solve (copies seem easier than big changes imo, but not sure), but it also seems plausible it could be hard.

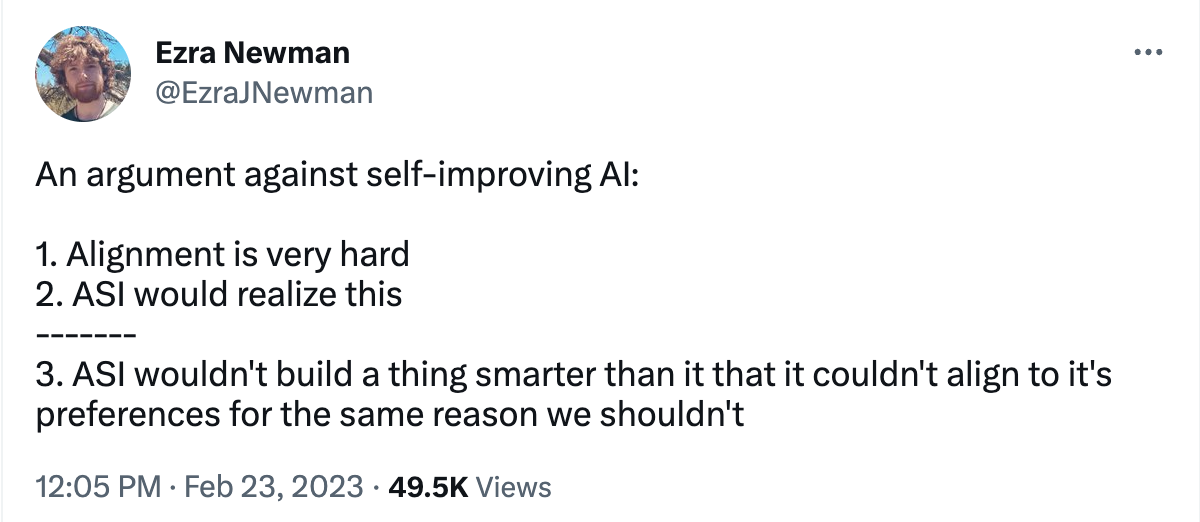

Oh it occurs to me some of the original thought train that led me here may have come from @Ezra Newman

https://twitter.com/EzraJNewman/status/1628848563211112448

Yeah it seems possible that some AGI systems would be willing to risk value drift, or just not care that much. In theory you could have an agent that didn't care if its goals changed, right? Shoshannah pointed out to me recently that humans have a lot of variance in how much they care if their goals are changed. Some people are super opposed to wireheading, some think it would be great. So it's not obvious to me how much ML-based AGI systems of around human level intelligence would care about this. Like maybe this kind of system converges pretty quickly to coherent goals, or maybe it's the kind of system that can get quite a bit more powerful than humans before converging, I don't know how to guess at that.

I think that would be a really good thing to have! I don't know if anything like that exists, but I would love to see one

I think the AI situation is pretty dire right now. And at the same time, I feel pretty motivated to pull together and go out there and fight for a good world / galaxy / universe.

Nate Soares has a great post called "detach the grim-o-meter", where he recommends not feeling obligated to feel more grim when you realize the world is in deep trouble.

It turns out feeling grim isn't a very useful response, because your grim-o-meter is a tool evolved for you to use to respond to things being harder *in your local environment* rather than the global state of things.

So what do you do when you find yourself learning the world is in a dire state? I find that a thing that helps me is finding stories that match the mood of what I'm trying to do, like Andy Weir's The Martian.

You're trapped in a dire situation and you're probably going to die, but perhaps if you think carefully about your situation and apply your best reasoning and engineering skills, you might grow some potatoes, duct-tape a few things together, and use your limited tools to escape an extremely tricky situation.

In real life the lone astronaut trapped on Mars doesn't usually make it. I'm not saying to make up fanciful stories that aren't justified by the evidence. I'm saying, be that stubborn bastard that *refuses to die* until you've tried every last line of effort.

I see this as one of the great virtues of humanity. We have a fighting spirit. We are capable of charging a line of enemy swords and spears, running through machine gun fire and artillery even though it terrifies us.

No one gets to tell you how to feel about this situation. You can feel however you want. I'm telling you how I want to feel about this situation, and inviting you to join me if you like.

Because I'm not going to give up. Neither am I going to rush to foolhardy action that will make things worse. I'm going to try to carefully figure this out, like I was trapped on Mars with a very slim chance of survival and escape.

Perhaps you, like me, are relatively young and energetic. You haven't burnt out, and you're interested in figuring out creative solutions to the most difficult problems of our time. Well I say hell yes, let's do this thing. Let's actually try to figure it out. 🔥

Maybe there is a way to grow potatoes using our own shit. Maybe someone on earth will send a rescue mission our way. Lashing out in panic won't improve our chances, giving up won't help us survive. The best shot we have is careful thinking, pressing forward via the best paths we can find, stubbornly carrying on in the face of everything.

And unlike Mark Watney, we're not alone. When I find my grim-o-meter slipping back to tracking the dire situation, I look around me and see a bunch of brilliant people working to find solutions the best they can.

So welcome to the hackathon for the future of the lightcone, grab some snacks and get thinking. When you zoom in, you might find the problems are actually pretty cool.

Deep learning actually works, it's insane. But how does it work? What the hell is going on in those transformers and how does something as smart as ChatGPT emerge from that?? Do LLMs have inner optimizers? How do we find out?

And on that note, I've got some blog posts to write, so I'm going to get back to it. You're all invited to this future-lightcone-hackathon, can't wait to see what you come up with! 💡

I sort of agree with this abstractly and disagree in practice. I think we're just very limited in what kinds of circumstances we can reasonably estimate / guess at. Even the above claim, "a big proportion of worlds where we survived, AGI probably gets delayed" is hard to reason about.

But I do kind of need to know the timescale I'm operating in when thinking about health and money and skill investments, etc. so I think you need to reason about it somehow.

Why did you do that?

The Reisner et al paper (and the back and forth between Robock's group and Reisner's group) casts doubt on this:

And then on top of that there are significant other risks from the transition to AI. Maybe a total of more like 40% total existential risk from AI this century? With extinction risk more like half of that, and more uncertain since I've thought less about it.

40% total existential risk, and extinction risk half of that? Does that mean the other half is some kind of existential catastrophe / bad values lock-in but where humans do survive?

This is a temporary short form, so I can link people to Scott Alexander's book review post. I'm putting it here because Substack is down, and I'll take it down / replace it with a Substack link once it's back up. (also it hasn't been archived by the Wayback Machine yet, I checked)

The spice must flow.

Edit: It's back up, link: https://astralcodexten.substack.com/p/book-review-what-we-owe-the-future

Thanks for the reply!

I hope to write a longer response later, but wanted to address what might be my main criticism, the lack of clarity about how big of a deal it is to break your pledge, or how "ironclad" the pledge is intended to be.

I think the biggest easy improvement would be amending the FAQ (or preferably something called "pledge details" or similar) to present the default norms for pledge withdrawal. People could still choose different norms if they preferred, but it would make it clearer what people were agreeing to, and how strong the commitment was intended to be, without adding more text to the main pledge.

I'm a little surprised that I don't see more discussion of ways that higher bandwidth brain-computer interfaces might help, e.g. Neuralink or equivalent. Like it sounds difficult but do people feel really confident it won't work? Seems like if it could work it might be achievable on much faster timescales than superbabies.

Oh cool. I was thinking about writing some things about private non-ironclad commitments but this covers most of what I wanted to write. :)

Admittedly the title is not super clear

I cannot recommend this approach on the grounds of either integrity or safety 😅

Yeah, I think it's somewhat boring without more. Solving the current problems seems very desirable to me, very good, and also really not complete / compelling / interesting. That's what I'm intending to try to get at in part II. I think it's the harder part.

Rot13: Ab vg'f Jbegu gur Pnaqyr

This could mitigate financial risk to the company but I don't think anyone will sell existential risk insurance, or that it would be effective if they did

I think that's a legit concern. One mitigating factor is that people who seem inclined to rash destructive plans tend to be pretty bad at execution, e.g. Aum Shinrikyo

Recently Eliezer has used the dying with dignity frame a lot outside his April 1st post. So while some parts of that post may have been a joke, the dying with dignity part was not. For example: https://docs.google.com/document/d/11AY2jUu7X2wJj8cqdA_Ri78y2MU5LS0dT5QrhO2jhzQ/edit?usp=drivesdk

If you have specific examples where you think I took something too seriously that was meant to be a joke, I'd be curious to see those.

I think you're right that dying with dignity is a better frame specifically for recommending against doing unethical stuff. I agree with everything he said about not doing unethical stuff, and tried to point to that (maybe if I have time I will add some more emphasis here).

But that being said, I feel a little frustrated that people think that caveats about not doing unethical stuff are expected in a post like this. It feels similar to if I was writing a post about standing up for yourself and had to add "stand up to bullies - but remember not to murder anyone". Yes you should not murder bullies. But I wish to live in a world where we don't have to caveat with that every time. I recognize that we might not live in such a world. Maybe if someone proposes "play to your outs", people jump to violent plans without realizing how likely that is to be counterproductive to the goal. And this does seem to be somewhat true, though I'm not sure the extent of it. And I find this frustrating. That which is already true... of course, but I wish people would be a little better here.

Just a note on confidence, which seems especially important since I'm making a kind of normative claim:

I'm very confident "dying with dignity" is a counterproductive frame for me. I'm somewhat confident that "playing to your outs" is a really useful frame for me and people like me. I'm not very confident "playing to your outs" is a good replacement to "dying with dignity" in general, because I don't know how much people will respond to it like I do. Seeing people's comments here is helpful.

"It also seems to encourage #3 (and again the vague admonishment to "not do that" doesn't seem that reassuring to me.)"

I just pointed to Eliezer's warning, which I thought was sufficient. I could write more about why I think it's not a good idea, but I currently think a bigger portion of the problem is people not trying to come up with good plans rather than people coming up with dangerous plans, which is why my emphasis is where it is.

Eliezer is great at red teaming people's plans. This is great for finding ways plans don't work, and I think it's very important he keep doing this. It's not great for motivating people to come up with good plans, though. And I think that shortage of motivation is a real threat to our chances to mitigate AI existential risk. I was talking to a leading alignment researcher yesterday who said their motivation had taken a hit from Eliezer's constant "all your plans will fail" talk, so I'm pretty sure this is a real thing, even though I'm unsure of the magnitude.

I currently don't know of any outs. But I think I know some things that outs might require and am working on those, while hoping someone comes up with some good outs - and occasionally taking a stab at them myself.

I think the main problem is the first point and not the second point:

- Do NOT assume that what you think is an out is certainly an out.

- Do NOT assume that the potential outs you're aware of are a significant proportion of all outs.

The current problem, if Eliezer is right, is basically that we have 0 outs. Not that the ones we have might be less promising than other ones. And he's criticising people for thinking their plans are outs when they're actually not.

Well, I think that's a real problem, but I worry Eliezer's frame will generally discourage people from even trying to come up with good plans at all. That's why I emphasize outs.

I agree finding your outs is very hard, but I don't think this is actually a different challenge than increasing "dignity". If you don't have a map to victory, then you probably lose. I expect that in most worlds where we win, some people figured out some outs and played to them.

I donated:

$100 to Zvi Mowshowitz for his post "Covid-19: My Current Model" but really for all his posts. I appreciated how Zvi kept posting Covid updates long after I had the energy to do my own research on this topic. I also appreciate how he called the Omicron wave pretty well.

$100 to Duncan Sabien for his post "CFAR Participant Handbook now available to all". I'm glad CFAR decided to make it public, both because I have been curious for a while what was in it and because in general I think it's pretty good practice for orgs like CFAR to publish more of what they do. So thanks for doing that!

I've edited the original to add "some" so it reads "I'm confident that some nuclear war planners have..."

It wouldn't surprise me if some nuclear war planners had dismissed these risks while others had thought them important.

I'm fairly confident that at least some nuclear war planners have thought deeply about the risks of climate change from nuclear war because I've talked to a researcher at RAND who basically told me as much, plus the group at Los Alamos who published papers about it, both of which seem like strong evidence that some nuclear war planners have taken it seriously. Reisner et al., "Climate Impact of a Regional Nuclear Weapons Exchange: An Improved Assessment Based On Detailed Source Calculations" is mostly Los Alamos scientists I believe.

Just because some of these researchers & nuclear war planners have thought deeply about it doesn't mean nuclear policy will end up being sane and factoring in the risks. But I think it provides some evidence in that direction.

Thanks, this is helpful! I'd be very curious to see where Paul agrees / disagrees with the summary / implications of his view here.

After reading these two Eliezer <> Paul discussions, I realize I'm confused about what the importance of their disagreement is.

It's very clear to me why Richard & Eliezer's disagreement is important. Alignment being extremely hard suggests AI companies should work a lot harder to avoid accidentally destroying the world, and suggests alignment researchers should be wary of easy-seeming alignment approaches.

But it seems like Paul & Eliezer basically agree about all of that. They disagree about... what the world looks like shortly before the end? Which, sure, does have some strategic implications. You might be able to make a ton of money by betting on AI companies and thus have a lot of power in the few years before the world drastically changes. That does seem important, but it doesn't seem nearly as important as the difficulty of alignment.

I wonder if there are other things Paul & Eliezer disagree about that are more important. Or if I'm underrating the importance of the ways they disagree here. Paul wants Eliezer to bet on things so Paul can have a chance to update to his view in the future if things end up being really different than he thinks. Okay, but what will he do differently in those worlds? Imo he'd just be doing the same things he's trying now if Eliezer was right. And maybe there is something implicit in Paul's "smooth line" forecasting beliefs that makes his prosaic alignment strategy more likely to work in worlds where he's right, but I currently don't see it.

Another way to run this would be to have a period of time before launches are possible for people to negotiate, and then to not allow retracting nukes after that point. And I think next time I would make it so that the total of no-nukes would be greater than the total if only one side nuked, though I did like this time that people had the option of a creative solution that "nuked" a side but led to higher EV for both parties than not nuking.

I think the fungibility is a good point, but it seems like the randomizer solution is strictly better than this. Otherwise one side clearly gets less value, even if they are better off than they would have been had the game not happened. It's still a mixed motive conflict!

I'm not sure that anyone exercised restraint in not responding to the last attack, as I don't have any evidence that anyone saw the last response. It's quite possible people did see it and didn't respond, but I have no way to know that.

Oh I should have specified, that I would consider the coin flip to be a cooperative solution! Seems obviously better to me than any other solution.

I think there are a lot of dynamics present here that aren't present in the classic prisoner's dilemma, and some dynamics that are present (and some that are present in various iterated prisoner's dilemmas). The prize might be different for different actors, since actors place different value on "cooperative" outcomes. If you can trust people's precommitments, I think there is a race to commit OR precommit to an action.

E.g. if I wanted the game to settle with no nukes launched, then I could pre-commit to launching a retaliatory strike to either side if an attack was launched.

I sort of disagree. Not necessarily that it was the wrong choice to invest your security resources elsewhere--I think your threat model is approximately correct--but I disagree that it's wrong to invest in that part of your stack.

My argument here is that following best practices is a good principle, and that you can and should make exceptions sometimes, but Zack is right to point it out as a vulnerability. Security best practices exist to help you reduce attack surface without having to be aware of every attack vector. You might look at this instance and rightly think "okay but SHA-256 is very hard to crack with keys this long, and there is more low hanging fruit". But sometimes you're going to make a wrong assumption when evaluating things like this, and best practices help protect you from limitations of your ability to model this. Maybe your SHA-256 implementation has a known vulnerability that you didn't check, maybe your secret generation wasn't actually random, etc. I don't think any of these apply in this case, but I think sometimes you're likely to be mistaken about your assumptions. The question becomes a more general one about when it makes sense to follow best practices and when it makes sense to make exceptions. In this case I would think an hour of work would be worth it to follow the best practice. If it were more like five hours, I might agree with you.

I wouldn't discount the signaling value either. Allies and attackers can notice whether you're following best practices and use that info to decide whether they should invest resources in trusting your infrastructure or attacking it.
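To make the "maybe your secret generation wasn't actually random" worry concrete, here's a rough sketch of what the cheap, default-safe version can look like. This is only an illustration, not the setup being discussed in this thread: I'm assuming Python's standard library, and the function names (`generate_secret`, `digest`, `verify`) are hypothetical ones I made up for the example. The idea is just to draw secrets from the OS's cryptographic RNG instead of a general-purpose one, and to compare digests in constant time.

```python
import hashlib
import hmac
import secrets


def generate_secret(num_bytes: int = 32) -> str:
    """Return a hex secret drawn from the OS CSPRNG (hypothetical helper).

    secrets.token_hex is intended for security-sensitive values,
    unlike the general-purpose `random` module.
    """
    return secrets.token_hex(num_bytes)


def digest(secret: str) -> str:
    """Return the SHA-256 hex digest of the secret."""
    return hashlib.sha256(secret.encode("utf-8")).hexdigest()


def verify(candidate: str, expected_digest: str) -> bool:
    """Check a candidate secret against a stored digest.

    hmac.compare_digest compares in constant time, so the check
    does not leak how many leading characters matched.
    """
    return hmac.compare_digest(digest(candidate), expected_digest)


if __name__ == "__main__":
    secret = generate_secret()
    stored = digest(secret)
    print(verify(secret, stored))         # the real secret matches
    print(verify(secret + "x", stored))   # a wrong guess does not
```

None of this needs you to know which attack you're defending against, which is sort of the point: the defaults cover assumptions you might have gotten wrong.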

I don't think it's super clear, but I do think it's the clearest that we are likely to get that's more than 10% likely. I disagree that SARS took 15 years, or at least I think that one could have been called within a year or two. My previous attempt to operationalize a bet had the bet resolve if, within two years, a mutually agreed upon third party updated to believe that there is >90% probability that an identified intermediate host or bat species was the origin point of the pandemic, and that this was not a lab escape.

Now that I'm writing this out, I think within two years of SARS I wouldn't have been >90% civet-->human origin. I'd guess I would have been 70-80% on civet-->human. But I'm currently <5% on any specific intermediate host for SARS-CoV-2, so something like the civet finding would greatly increase my odds that SARS-CoV-2 is a natural spillover.

It seems like an interesting hypothesis but I don't think it's particularly likely. I've never heard of other viruses becoming well adapted to humans within a single host. Though, I do think that's the explanation for how several variants evolved (since some of them emerged with a bunch of functional mutations rather than just one or two). I'd be interested to see more research into the evolution of viruses within human hosts, and what degree of change is possible & how this relates to spillover events.

Thanks! I'm still wrapping my mind around a lot of this, but this gives me some new directions to think about.

I have an intuition that this might have implications for the Orthogonality Thesis, but I'm quite unsure. To restate the Orthogonality Thesis in the terms above, "any combination of intelligence level and model of the world, M2". This feels different than my intuition that advanced intelligences will tend to converge upon a shared model / encoding of the world even if they have different goals. Does this make sense? Is there a way to reconcile these intuitions?