Estimating the Current and Future Number of AI Safety Researchers

post by Stephen McAleese (stephen-mcaleese) · 2022-09-28T21:11:33.703Z · LW · GW · 14 comments

This is a link post for https://forum.effectivealtruism.org/posts/3gmkrj3khJHndYGNe/estimating-the-current-and-future-number-of-ai-safety

Contents

Summary Introduction Definitions Past estimates Estimating the number of AI safety researchers Organizational estimate Technical AI safety research organizations Non-technical AI safety research organizations Conclusions and notes Comparison of estimates How has the number of technical AI safety researchers changed over time? Technical AI safety research organizations Technical AI safety researchers How could productivity increase in the future? Conclusions

Summary

I estimate that there are about 300 full-time technical AI safety researchers, 100 full-time non-technical AI safety researchers, and 400 AI safety researchers in total today. I also show that the number of technical AI safety researchers has been increasing exponentially over the past few years and could reach 1000 by the end of the 2020s.

Introduction

Many previous posts have estimated the number of AI safety researchers and a generally accepted order-of-magnitude estimate is 100 full-time researchers. The question of how many AI safety researchers there are is important because the value of work in an area on the margin is proportional to how neglected it is.

The purpose of this post is to analyze this question in detail and come up with a reasonably accurate estimate. I'm going to focus mainly on estimating the number of technical researchers because non-technical AI safety research is more varied and difficult to analyze, though I'll create estimates for both types of researchers.

I'll first summarize some recent estimates before coming up with my own estimate. Then I'll compare all the estimates later.

Definitions

I'll be using some specific terms in the post which I think are important to define to avoid misunderstanding or ambiguity. First, I'll define 'AI safety', also known as AI alignment, as work that is done to reduce existential risk from advanced AI. This kind of work tends to focus on the long-term impact of AI rather than short-term problems such as the safety of self-driving cars or AI bias.

My use of the word 'researcher' is a generic term for anyone working on AI safety and is an umbrella term for more specific roles such as research scientist, research engineer, or research analyst.

Also, I'll only be counting full-time researchers. However, since my goal is to estimate research capacity, what I'm really counting is the number of full-time equivalent researchers. For example, two part-time researchers working 20 hours per week can be counted as one full-time researcher.

I'll define technical AI safety research as research that is directly related to AI safety such as technical machine learning work or conceptual research (e.g. ELK). And non-technical research includes research related to AI governance, policy, and meta-level work such as this post.

Past estimates

- 80,000 Hours estimated that there were about 300 people working on reducing existential risk from AI in 2022 with a 90% confidence interval between 100 and 1,500. The estimate used data drawn from the AI Watch database.

- A recent post [EA · GW] (2022) on the EA Forum estimated that there are 100-200 people working full-time on AI safety technical research.

- Another recent post [LW · GW] (2022) on LessWrong claims that there are about 150 people working full-time on technical AI safety.

- In a recent Twitter thread (2022), Benjamin Todd counters the idea that AI safety is saturated and says that there are only about 10 AI safety groups which each have about 10 researchers which is 100 researchers in total. He also states that this number has grown from about 30 in 2017 and that there are 100,000+ researchers working on AI capabilities [1].

- This Vox article said that about 50 people in the world were working full-time on technical AI safety in 2020.

- This presentation estimated that fewer than 100 people were working full-time on technical AI alignment in 2021.

- There were about 2000 'highly engaged' members of Effective Altruism in 2021. 450 of those people were working on AI safety.

Estimating the number of AI safety researchers

Organizational estimate

I'll estimate the number of technical AI safety researchers and then the number of non-technical AI safety researchers. My main estimation method will be what I call an 'organizational estimate' which involves creating a list of organizations working on AI safety and then estimating the number of researchers working full-time in each organization to create a table similar to the one in this post. I'll also estimate the number of independent researchers. Note that I'll only be counting people who work full-time on AI safety.

To create the estimates in the tables below I used the following sources (ordered from most to least reliable):

- Web pages listing all the researchers working at an organization.

- Asking people who work at the organization.

- Scraping publications and posts from sites including The Alignment Forum, DeepMind and OpenAI and analyzing the data [2].

- LinkedIn insights to estimate the number of employees in an organization.

The confidence column shows how much information went into the estimate and how confident I am about the estimate.
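The scraping step in the list above comes down to counting unique authors per source and making sure nobody is counted twice across sources. Here is a minimal sketch, assuming the scraped posts are already available as (source, author) pairs. The records and author names are made-up placeholders, not data from this post.

```python
from collections import defaultdict

# Hypothetical scraped records: (source, author). Placeholder names only.
records = [
    ("alignment_forum", "author_a"),
    ("alignment_forum", "author_b"),
    ("alignment_forum", "author_a"),  # same author, second post
    ("lesswrong", "author_b"),        # also posts on the Alignment Forum
    ("lesswrong", "author_c"),
]


def unique_authors_by_source(records):
    """Group authors into one set per source so repeat posts count once."""
    authors = defaultdict(set)
    for source, author in records:
        authors[source].add(author)
    return dict(authors)


authors = unique_authors_by_source(records)
af = authors["alignment_forum"]
# Keep only LessWrong authors who are not already in the Alignment Forum set.
lw_only = authors["lesswrong"] - af

print(len(af), len(lw_only))
```

Using sets means repeat posts by the same author never inflate the count, and the set difference handles overlap between sources.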

Technical AI safety research organizations

| Name | Estimate | Lower bound (95% CI) | Upper bound (95% CI) | Overall confidence |
|---|---|---|---|---|
| Other | 80 [3] | 15 | 150 | Medium |
| Centre for Human-Compatible AI | 25 | 5 | 50 | Medium |
| DeepMind | 20 | 5 | 60 | Medium |
| OpenAI | 20 | 5 | 50 | Medium |
| Machine Intelligence Research Institute | 10 | 5 | 20 | High |
| Center for AI Safety (CAIS) | 10 | 5 | 14 | High |
| Fund for Alignment Research (FAR) | 10 | 5 | 15 | High |
| GoodAI | 10 | 5 | 15 | High |
| Sam Bowman | 8 | 2 | 10 | Medium |
| Jacob Steinhardt | 8 | 2 | 10 | Medium |
| David Krueger | 7 | 5 | 10 | High |
| Anthropic | 15 | 5 | 40 | Low |
| Redwood Research | 12 | 10 | 20 | High |
| Future of Humanity Institute | 10 | 5 | 30 | Medium |
| Conjecture | 10 | 5 | 20 | High |
| Algorithmic Alignment Group (MIT) | 5 | 3 | 7 | High |
| Aligned AI | 4 | 2 | 5 | High |
| Apart Research | 4 | 3 | 6 | High |
| Foundations of Cooperative AI Lab (CMU) | 3 | 2 | 8 | Medium |
| Alignment of Complex Systems Research Group (Prague) | 2 | 2 | 8 | Medium |
| Alignment Research Center (ARC) | 2 | 2 | 5 | High |
| Encultured AI | 2 | 1 | 5 | High |
| Totals | 277 | 99 | 558 | Medium |

Non-technical AI safety research organizations

| Name | Estimate | Lower bound (95% CI) | Upper bound (95% CI) | Overall confidence |
|---|---|---|---|---|
| Centre for Security and Emerging Technology (CSET) | 10 | 5 | 40 | Medium |
| Epoch AI | 4 | 2 | 10 | High |
| Centre for the Governance of AI | 10 | 5 | 15 | High |
| Leverhulme Centre for the Future of Intelligence | 4 | 3 | 10 | Medium |
| OpenAI | 10 | 1 | 20 | Low |
| DeepMind | 10 | 1 | 20 | Low |
| Center for the Study of Existential Risk (CSER) | 3 | 2 | 7 | Medium |
| Future of Life Institute | 4 | 3 | 6 | Medium |
| Center on Long-Term Risk | 5 | 5 | 10 | High |
| Open Philanthropy | 5 | 2 | 15 | Medium |
| AI Impacts | 3 | 2 | 10 | High |
| Rethink Priorities | 8 | 5 | 10 | High |
| Other [4] | 10 | 5 | 30 | Low |
| Totals | 86 | 41 | 203 | Medium |

Conclusions and notes

Summary of the results in the tables above:

- Technical AI safety researchers:
  - Point estimate: 277
  - Range: 99-558
- Non-technical AI safety researchers:
  - Point estimate: 86
  - Range: 41-203
- Total AI safety researchers:
  - Point estimate: 363
  - Range: 140-761

In conclusion, there are probably around 300 technical AI safety researchers, 100 non-technical AI safety researchers and around 400 AI safety researchers in total.[5]
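The totals above are plain column sums over the two tables. The sketch below reproduces them, with the rows copied from the tables in this post as (name, estimate, lower bound, upper bound). The helper name `column_totals` is just for illustration.

```python
# Rows copied from the technical table: (name, estimate, lower, upper).
technical = [
    ("Other", 80, 15, 150),
    ("Centre for Human-Compatible AI", 25, 5, 50),
    ("DeepMind", 20, 5, 60),
    ("OpenAI", 20, 5, 50),
    ("Machine Intelligence Research Institute", 10, 5, 20),
    ("Center for AI Safety (CAIS)", 10, 5, 14),
    ("Fund for Alignment Research (FAR)", 10, 5, 15),
    ("GoodAI", 10, 5, 15),
    ("Sam Bowman", 8, 2, 10),
    ("Jacob Steinhardt", 8, 2, 10),
    ("David Krueger", 7, 5, 10),
    ("Anthropic", 15, 5, 40),
    ("Redwood Research", 12, 10, 20),
    ("Future of Humanity Institute", 10, 5, 30),
    ("Conjecture", 10, 5, 20),
    ("Algorithmic Alignment Group (MIT)", 5, 3, 7),
    ("Aligned AI", 4, 2, 5),
    ("Apart Research", 4, 3, 6),
    ("Foundations of Cooperative AI Lab (CMU)", 3, 2, 8),
    ("Alignment of Complex Systems Research Group (Prague)", 2, 2, 8),
    ("Alignment Research Center (ARC)", 2, 2, 5),
    ("Encultured AI", 2, 1, 5),
]

# Rows copied from the non-technical table.
non_technical = [
    ("CSET", 10, 5, 40),
    ("Epoch AI", 4, 2, 10),
    ("Centre for the Governance of AI", 10, 5, 15),
    ("Leverhulme Centre for the Future of Intelligence", 4, 3, 10),
    ("OpenAI", 10, 1, 20),
    ("DeepMind", 10, 1, 20),
    ("CSER", 3, 2, 7),
    ("Future of Life Institute", 4, 3, 6),
    ("Center on Long-Term Risk", 5, 5, 10),
    ("Open Philanthropy", 5, 2, 15),
    ("AI Impacts", 3, 2, 10),
    ("Rethink Priorities", 8, 5, 10),
    ("Other", 10, 5, 30),
]


def column_totals(rows):
    """Sum the estimate, lower-bound and upper-bound columns."""
    return (
        sum(r[1] for r in rows),
        sum(r[2] for r in rows),
        sum(r[3] for r in rows),
    )


tech = column_totals(technical)
non_tech = column_totals(non_technical)
total = tuple(a + b for a, b in zip(tech, non_tech))

print(tech, non_tech, total)
# (277, 99, 558) (86, 41, 203) (363, 140, 761)
```

Summing the per-row bounds gives a wide, conservative range rather than a true 95% interval for the total, since the rows are unlikely to all sit at their extremes at the same time.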

Comparison of estimates

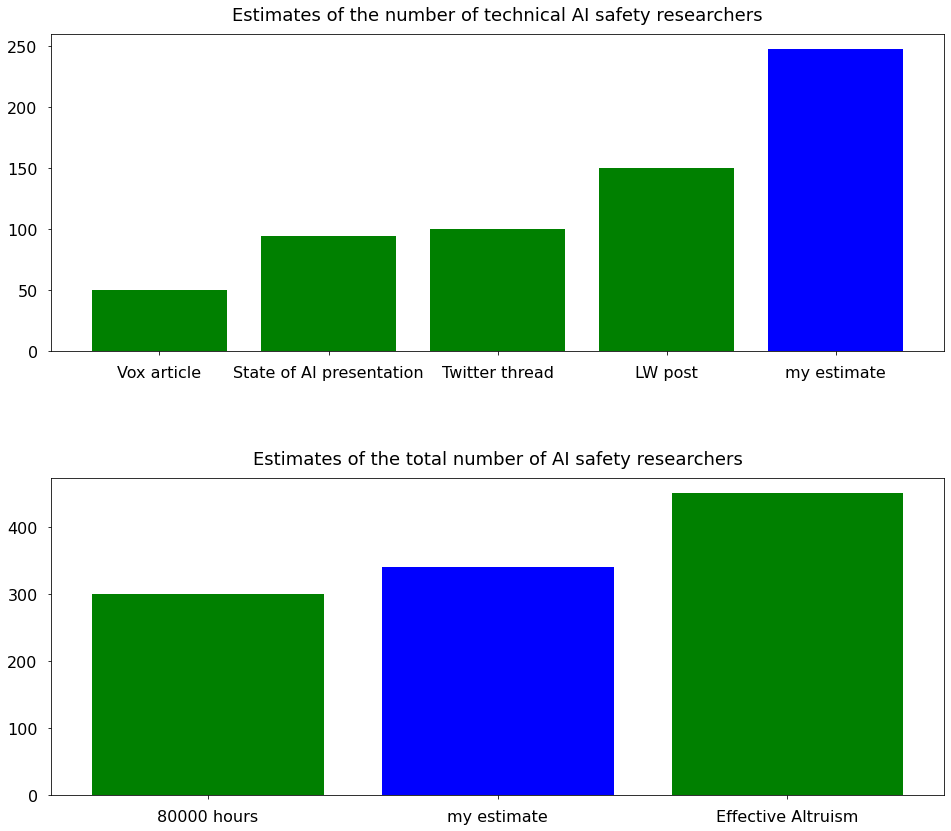

The bar charts below compare my estimates with the estimates from the "Past estimates" section.

In the first chart, my estimate is higher than all the historical estimates possibly because newer estimates will tend to be higher as the number of AI safety researchers increases or because my estimate includes more organizations. My estimate is similar to the other total estimates in the second chart.

How has the number of technical AI safety researchers changed over time?

Technical AI safety research organizations

| Name | Number of researchers | Founding Year |
|---|---|---|
| Center for AI Safety (CAIS) | 10 | 2022 |
| Fund for Alignment Research (FAR) | 10 | 2022 |
| Conjecture | 10 | 2022 |
| Aligned AI | 4 | 2022 |
| Apart Research | 4 | 2022 |
| Encultured AI | 2 | 2022 |
| Anthropic | 15 | 2021 |
| Redwood Research | 12 | 2021 |
| Alignment Research Center (ARC) | 2 | 2021 |
| Alignment Forum | 50 | 2018 |
| Sam Bowman | 8 | 2020[6] |
| Jacob Steinhardt | 8 | 2016[6] |
| David Krueger | 7 | 2016[6] |
| Center for Human-Compatible AI | 30 | 2016 |
| OpenAI | 20 | 2016 |
| DeepMind | 20 | 2012 |
| Future of Humanity Institute (FHI) | 10 | 2005 |
| Machine Intelligence Research Institute (MIRI) | 15 | 2000 |

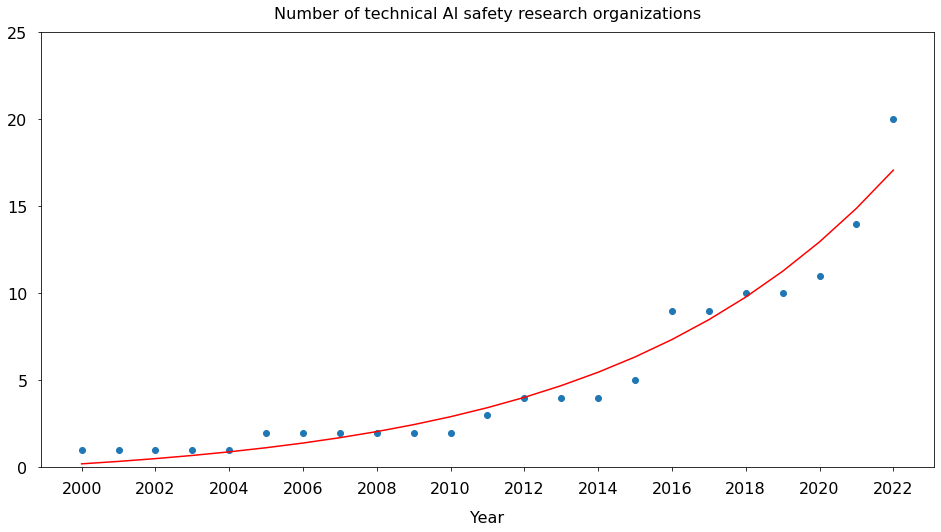

I graphed the data in the table above to show how the total number of technical AI safety organizations has changed over time:

The blue dots are the actual number of organizations in each year and the red line is an exponential model fitting the data.

I found that the number of technical AI safety research organizations is increasing exponentially at about 14% per year which makes sense given that EA funding is increasing and AI safety seems increasingly pressing and tractable.
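One way to get a growth rate like this from the founding years is to count how many organizations existed in each year and fit a straight line to the logarithm of that count. The post doesn't state which fitting method produced the 14% figure, so the log-linear least-squares fit below is an assumption, and its result need not match the post's figures exactly.

```python
import math
from collections import Counter

# Founding years from the table above, one entry per organization or group.
founding_years = (
    [2022] * 6 + [2021] * 3 + [2018, 2020] + [2016] * 4 + [2012, 2005, 2000]
)


def cumulative_counts(years, start, end):
    """Number of organizations founded on or before each year in [start, end]."""
    founded = Counter(years)
    total, out = 0, []
    for year in range(start, end + 1):
        total += founded[year]
        out.append((year, total))
    return out


def annual_growth_rate(points):
    """Fit ln(count) = a + b * year by least squares and return exp(b) - 1."""
    xs = [x for x, y in points if y > 0]
    ys = [math.log(y) for x, y in points if y > 0]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return math.exp(sxy / sxx) - 1


counts = cumulative_counts(founding_years, 2000, 2022)
rate = annual_growth_rate(counts)
print(f"{rate:.1%} per year")
```

With these inputs the fitted rate comes out at roughly 13% per year, close to the figure quoted above. A fit on the raw counts rather than their logarithms would weight recent years more heavily and could give a somewhat different number.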

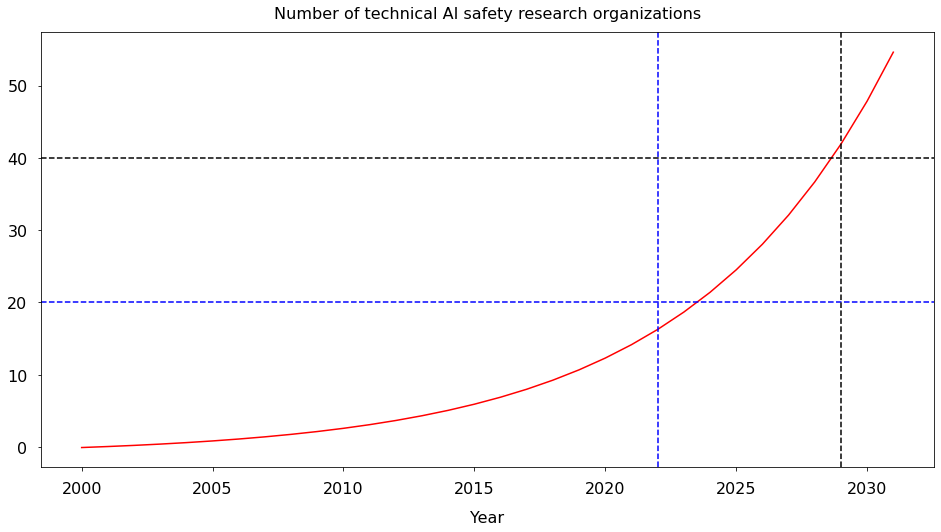

Then I extrapolated the same model into the future to create the following graph:

The table above includes 20 technical AI safety research organizations currently in existence and the model predicts that the number of organizations will double to 40 by 2029.

Technical AI safety researchers

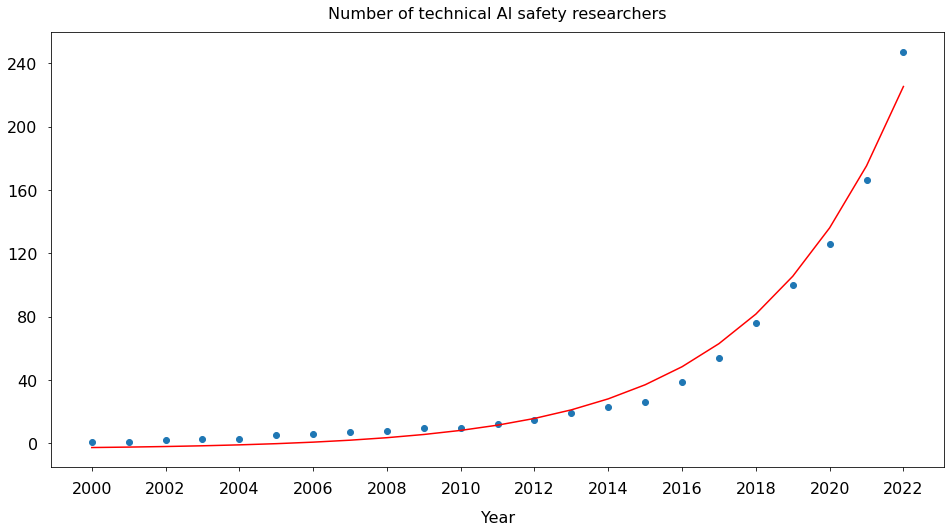

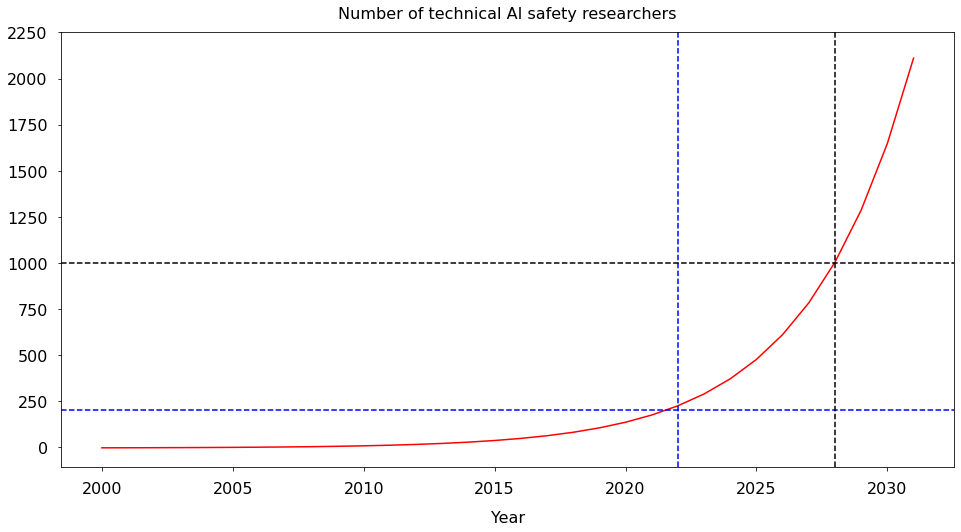

I also created a model to estimate how the total number of AI safety researchers has changed over time. In the model, I assumed that the number of researchers in each organization has increased linearly from zero when each organization was founded up to the current number in 2022. The blue dots are the data points from the model and the red line is an exponential curve fitting the dots.

The model estimates that the number of technical AI safety researchers has been increasing at a rate of about 28% per year since 2000.

The next graph shows the model extrapolated into the future and predicts that the number of technical AI safety researchers will increase from about 200 in 2022 to 1000 by 2028.
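The linear-ramp model described above can be sketched as follows, using the researcher counts and founding years from the organizations table. Two choices here are my assumptions rather than anything stated in the post: organizations founded in 2022 are counted at full size in 2022, and the exponential is fitted by least squares on the logarithm of the yearly totals.

```python
import math

CURRENT_YEAR = 2022

# (researchers in 2022, founding year), copied from the organizations table.
orgs = [
    (10, 2022), (10, 2022), (10, 2022), (4, 2022), (4, 2022), (2, 2022),
    (15, 2021), (12, 2021), (2, 2021), (50, 2018), (8, 2020), (8, 2016),
    (7, 2016), (30, 2016), (20, 2016), (20, 2012), (10, 2005), (15, 2000),
]


def headcount(size, founded, year):
    """Linear ramp from 0 at the founding year to `size` in CURRENT_YEAR."""
    if year < founded:
        return 0.0
    if founded == CURRENT_YEAR:
        return float(size)  # assumption: new organizations count at full size
    return size * (year - founded) / (CURRENT_YEAR - founded)


totals = {
    year: sum(headcount(size, founded, year) for size, founded in orgs)
    for year in range(2000, CURRENT_YEAR + 1)
}

# Fit ln(total) = a + b * year, skipping years where the total is zero.
points = [(year, math.log(t)) for year, t in totals.items() if t > 0]
mean_x = sum(x for x, _ in points) / len(points)
mean_y = sum(y for _, y in points) / len(points)
slope = sum((x - mean_x) * (y - mean_y) for x, y in points) / sum(
    (x - mean_x) ** 2 for x, _ in points
)
rate = math.exp(slope) - 1

print(totals[CURRENT_YEAR], f"{rate:.1%} per year")
```

Under these assumptions the fitted rate lands in the mid-20s percent per year, in the same range as the figure quoted above. The 2022 total is simply the sum of the table's researcher column.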

How could productivity increase in the future?

How the overall productivity of the technical AI safety research community will increase as the number of researchers increases is unclear. A well-known law that describes the research productivity of a field is Lotka's Law [7]. The formula for Lotka's Law is:

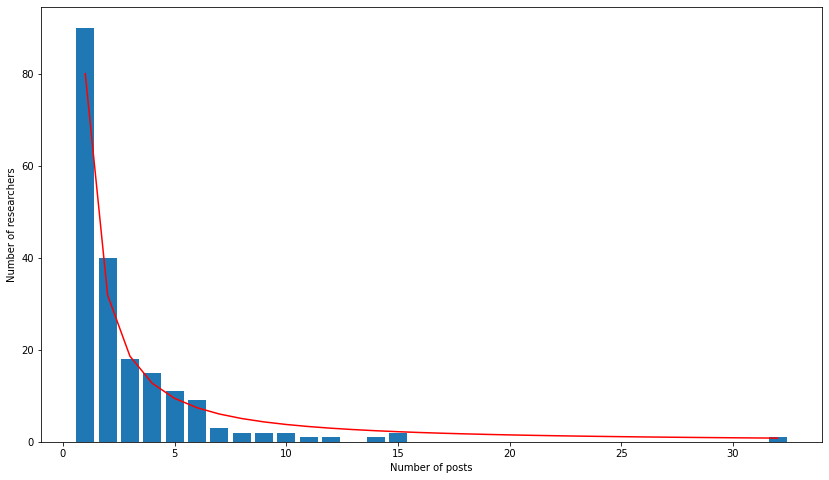

Y is the number of researchers who have published X articles. C is the total number of contributors in the field who have published one article and n is a constant which usually has a value of 2. I found that a value of 2.3 fits data from the Alignment Forum most well [8]:

The graph above shows that about 80 people have published a post on the Alignment Forum in the past six months. In this case, C = 80 and n = 2.3. Then the total number of posts published can be calculated by multiplying Y by X for each value of X and adding all the values together. For example:

80 / 1^2.3 = ~80 researchers have posted 1 post -> 80 * 1 = 80

80 / 2^2.3 = ~16 researchers have posted 2 posts -> 16 * 2 = 32

80 / 3^2.3 = ~6 researchers have posted 3 posts -> 6 * 3 = 18

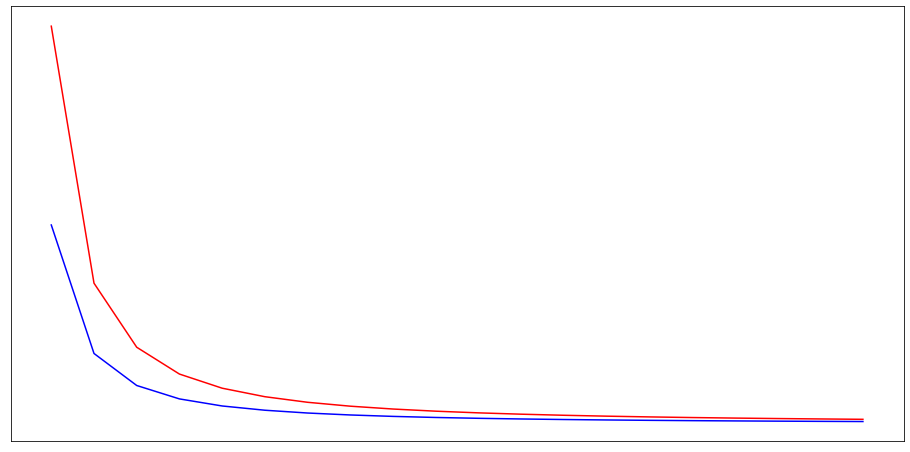

What happens when the number of researchers is increased? In other words, what happens when the value of C is doubled?

I found that when C is doubled, the total number of articles published per year also doubles. In the chart above, the area under the red curve is exactly double the area under the blue curve. In other words, the total productivity of a research field increases linearly with the number of researchers. The reason why is that increasing the number of researchers increases the number of low-productivity and high-productivity researchers equally.
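The doubling result follows directly from the formula: total output is the sum of X * C / X^n over all X, which is C times a factor that does not depend on C. A short sketch with the values used above (C = 80, n = 2.3) is below. The cutoff `max_posts` is a hypothetical parameter I introduce to keep the sum finite.

```python
def lotka_authors(c, n, x):
    """Expected number of authors with exactly x publications: C / x**n."""
    return c / x ** n


def total_posts(c, n, max_posts=50):
    """Sum x * Y(x) for x = 1..max_posts (max_posts is an assumed cutoff)."""
    return sum(x * lotka_authors(c, n, x) for x in range(1, max_posts + 1))


base = total_posts(80, 2.3)
doubled = total_posts(160, 2.3)

# Matches the worked example: ~16 authors with 2 posts, ~6 with 3 posts.
print(round(lotka_authors(80, 2.3, 2)), round(lotka_authors(80, 2.3, 3)))  # 16 6
print(doubled / base)
```

Because C only appears as a multiplier, the ratio is 2 for any choice of n or cutoff.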

It's important to note that simply increasing the number of posts will not necessarily increase the overall rate of progress. More researchers will be helpful if large problems can be broken up and parallelized so that each individual or team can work on a sub-problem. Nevertheless, if research quality matters more than quantity, increasing the size of the field should also increase the number of talented researchers.

Conclusions

I estimated that there are about 300 full-time technical and 100 full-time non-technical AI safety researchers today which is roughly in line with previous estimates though my estimate for the number of technical researchers is significantly higher.

To be conservative, I think the correct order-of-magnitude estimate for the number of full-time AI safety researchers is around 100 today though I expect this to increase to 1000 in a few years.

The number of technical AI safety organizations and researchers has been increasing exponentially by about 10-30% per year. I expect that trend to continue for several reasons:

- Funding: EA funding has increased significantly over the past several years and will probably continue to increase in the future. Also, AI is and will increasingly be advanced enough to be commercially valuable which will enable companies such as OpenAI and DeepMind to continue funding AI safety research.

- Interest: as AI advances and the gap between current systems and AGI narrows, it will become easier and require less imagination to believe that AGI is possible. Consequently, it might become easier to get funding for AI safety research. AI safety research will also seem increasingly urgent which will motivate more people to work on it.

- Tractability: as time goes on, the current AI architectures will probably become increasingly similar to the architecture used in the first AGI system which will make it easier to experiment with AGI-like systems and learn useful properties about them.

By extrapolating past trends, I've estimated that the number of technical AI safety organizations will double from about 20 to 40 by 2030 and the number of technical AI safety researchers will increase from about 300 in 2022 to 1000 by 2030. I find it striking how many well-known organizations working on AI safety were founded very recently. This trend suggests that some of the most influential AI safety organizations will be founded in the future.

I then found that the number of posts published per year will likely increase at the same rate as the number of researchers. If the number of researchers increases by a factor of five by the end of the decade, I expect the number of posts or papers per year to also increase by that amount.

Breaking up problems into subproblems will probably help make the most of that extra productivity. As the volume of articles increases, skills or tools for summarization, curation, or distillation will probably be highly valuable for informing researchers about what is currently happening in their field.

[1] My estimate is far lower as I would only classify researchers as 'AI capabilities' researchers if they push the state of the art forward. Even so, the number of AI safety researchers is almost certainly lower than the number of AI capabilities researchers.

[2] What I did:

- Alignment Forum: scrape posts and count the number of unique authors.

- DeepMind: scrape safety-tagged publications and count the number of unique authors.

- OpenAI: manually classify publications as safety-related. Then count the number of unique authors.

[3] Manually curated list of people on the Alignment Forum who don't work at any of the other organizations. Includes groups such as:

- Independent alignment researchers (e.g. John Wentworth)

- Researchers in programs such as SERI MATS and Refine (e.g. carado [AF · GW])

- Researchers in master's or PhD degrees studying AI safety (e.g. Marius Hobbhahn [AF · GW])

[4] There are about 45 research profiles on Google Scholar with the 'AI governance' tag. I counted about 8 researchers who weren't at the other organizations listed.

[5] Note that the technical estimate is more accurate than the non-technical estimate because technical research is more clearly defined. I also put more research into estimating the number of technical AI safety researchers than non-technical researchers.

Also bear in mind that since I probably failed to include some organizations or groups in the table, the true figures could be higher.

[6] These are rough guesses but the model is fairly robust to them.

[7] Edit: thank you puffymist from the LessWrong comments section for recommending Lotka's Law over Price's Law as it is more accurate.

[8] In case you're wondering, the outlier on the far right of the chart is John Wentworth.

14 comments

Comments sorted by top scores.

comment by RobertM (T3t) · 2022-09-29T07:04:39.419Z · LW(p) · GW(p)

Interesting analysis. Some questions and notes:

How are you looking at "researchers" vs. "engineers"? At some organizations, e.g. Redwood, the boundary is very fuzzy - there isn't a sharp delineation between anyone whose job it is to primarily "think of ideas", vs. "implement ideas that researchers come up with", so it seems reasonable to count most of their technical staff as researchers.

FAR, on the other hand, does have separate job titles for "Research Scientist" (4 people) vs. "Research Engineer" (5 people), though they do also say they "expect everyone on the project to help shape the research direction".

Some of the other numbers seem like overestimates.

CHAI has 2 researchers and 6 research fellows, and only 2 (maybe 3) of the research fellows are doing anything recognizable as alignment research. (Not extremely confident; didn't spend a lot of time digging for details for those that didn't have websites. But generally not optimistic.) One of the researchers is Andrew Critch, who is one of the two people at Encultured. If you throw in Stuart Russell that's maybe 6 people, not 30.

FHI has 2 people in their AI Safety Research Group. There are also a couple people in their macrostrategy research group it wouldn't be crazy to count. Everybody else listed on the page either isn't working on technical Alignment research or is doing so under another org also listed here. So maybe 4 people, rather than 10?

I don't have very up-to-date information but I would be pretty surprised if MIRI had 15 full-time research staff right now.

Also, I think that every single person I counted above has at least a LessWrong account, and most also have Alignment Forum accounts, so a good chunk are probably double-counted.

On the other hand, there are a number of people going through SERI MATS who probably weren't counted; most of them will have LessWrong accounts but probably not Alignment Forum accounts (yet).

I'd be very happy to learn that there were 5 people at Meta doing something recognizable as alignment research; the same for Google Brain. Do you have any more info on those?

Replies from: stephen-mcaleese

↑ comment by Stephen McAleese (stephen-mcaleese) · 2022-09-29T20:49:31.529Z · LW(p) · GW(p)

Thanks for the feedback.

I added a new section in the introduction named 'Definitions' to define some commonly-used terms in the post and decrease ambiguity. To answer your question, research engineers and research scientists would be in the technical AI safety research category.

I re-estimated the number of researchers at the organizations you mentioned and came up with the following numbers:

CHAI

- 2 researchers

- 6 research fellows

- 21 graduate students

- 11 interns

Total: 40

From reading their website, CHAI has a lot of graduate students and interns and I would consider them to be full-time researchers. My previous estimate for CHAI was 10-30-60 (low-estimate-high) and I changed it to 5-25-50 in light of this new information. My estimate is less than 40 to be conservative and also because I doubt all of these researchers are working full-time at CHAI. Also, some of them have probably been counted in the Alignment Forum total.

FHI

Information from their website:

- AI safety research group: 2

- AI governance researchers: 1

- Research Scholars Programme + DPhil Scholars and Affiliates: 9

- Total: 12

Change in estimate: 10-10-40 -> 5-10-30

MIRI

- 1 leader (Nate Soares)

- 9 research staff

Change in estimate: 10-15-30 -> 5-10-20

"Also, I think that every single person I counted above has at least a LessWrong account, and most also have Alignment Forum accounts, so a good chunk are probably double-counted."

I analyzed the people who posted AI safety posts on LessWrong and found that only 15% also had Alignment Forum accounts. I avoided double-counting by subtracting the LessWrong users who also have an Alignment Forum account from the LessWrong total.

For SERI MATS, I'm guessing that some of those people will be counted in the AF count. I also added an 'Other' row for other groups I didn't include in the table.

I decided to delete the Google Brain and Meta entries because I have very little information about them.

comment by Ryan Kidd (ryankidd44) · 2024-04-24T16:42:39.861Z · LW(p) · GW(p)

I found this article useful. Any plans to update this for 2024?

Replies from: stephen-mcaleese

↑ comment by Stephen McAleese (stephen-mcaleese) · 2024-04-26T09:11:58.500Z · LW(p) · GW(p)

I think I might create a new post using information from this post [AF · GW] which covers the new AI alignment landscape.

comment by Stephen McAleese (stephen-mcaleese) · 2022-09-29T22:42:04.959Z · LW(p) · GW(p)

Edits based on feedback from LessWrong and the EA Forum:

EDITS:

- Added new 'Definitions' section to introduction to explain definitions such as 'AI safety', 'researcher' and the difference between technical and non-technical research.

UPDATED ESTIMATES (lower bound, estimate, upper bound):

TECHNICAL

- CHAI: 10-30-60 -> 5-25-50

- FHI: 10-10-40 -> 5-10-30

- MIRI: 10-15-30 -> 5-10-20

NON-TECHNICAL

- CSER: 5-5-10 -> 2-5-15

- Delete BERI from the list of non-technical research organizations

- Delete SERI from the list of non-technical research organizations

- Leverhulme Centre: 5-10-70 (Low confidence) -> 2-5-15 (Medium confidence)

- FLI: 5-5-20 -> 3-5-15

- Add OpenPhil: 2-5-15

- Epoch: 5-10-15 -> 2-4-10

- Add 'Other': 5-10-50

comment by tutor vals · 2022-10-13T17:33:10.162Z · LW(p) · GW(p)

Commenting to signal appreciation despite this post currently having few upvotes after a while. The number of researchers currently working in AI is a datapoint that I often bring up in conversation about the importance of going into AI safety and the potential impact one could have. So far I've been saying there are between a hundred and a thousand AI safety researchers, which seems like I was correct, but it's stronger if I can say the current best estimate I found is around 200.

Thanks.

↑ comment by Stephen McAleese (stephen-mcaleese) · 2022-10-13T17:52:03.603Z · LW(p) · GW(p)

I agree. Having an idea of how many AI safety researchers there are is important for knowing how neglected the problem area is.

Note that the EA Forum version has more upvotes because this is a crosspost from there.

comment by puffymist · 2022-09-30T19:28:28.529Z · LW(p) · GW(p)

Minor comment on one small paragraph:

Price's Law says that half of the contributions in a field come from the square root of the number of contributors. In other words, productivity increases linearly as the number of contributors increases exponentially. Therefore, as the number of AI safety researchers increases exponentially, we might expect the total productivity of the AI safety community to increase linearly.

I think Price's law is false, but I don't know what law it should be instead. I'll look at the literature on the rate of scientific progress (eg. Cowen & Southwood (2019)) to see if I could find any relationship between number of researchers and research productivity.

Price's law is a poor fit; Lotka's law is a better fit

The most prominent citation for Price's law, Nicholls (1988), says that Price's law is a poor fit (section 4: Validity of the Price Law):

Little empirical investigation of the Price law has been carried out to date [4,14]. Glänzel and Schubert [12] have reported some empirical results. They analyzed Lotka’s Chemical Abstracts data and found that the most prolific authors contributed less than 20% of the total number of papers. They also refer, but without details, to the examination of “several dozens” of other empirical data sets and conclude that “in the usually studied populations of scientists, even the most productive authors are not productive enough to fulfill the requirements of Price’s conjecture” [12]. Some incidental results of scientometric studies suggest that about 15% of the authors will be necessary to generate 50% of the papers [16,17].

To further examine the empirical validity of Price’s hypothesis, 50 data sets were collected and analyzed here. ... the contribution of the most prolific group of authors fell considerably short of the [50% of the papers] predicted by Price. ... The actual proportion of all authors necessary to generate at least 50% of the papers was found to be much larger that . Table 2 summarizes these results. In some cases, ..., more than half of the total number of papers is generated by those authors contributing only a single paper each. The absolute and relative size of for various population sizes t is given in Table 3. All the empirical results referred to here are consistent; and, unfortunately, there seems little reason to suppose that further empirical results would offer any support for the Price law.

Nicholls (1988) continues, saying that Lotka's law (number of authors with publications is proportional to ) has good empirical support, and finds to be a good fit for sciences and humanities, and to be a good fit in social sciences.

A different paper, Chung & Cox (1990), also finds that Price's Law is a poor fit while Lotka's law with between 1.95 to 3.26 to be a good fit in finance.

(Allison, Price, Griffith, Moravcsik & Stewart (1976) discusses the mathematical relationship between Price's Law and Lotka's Law: neither implies the other; nor are they contradictory.)

Later edits:

Porby, in his post Why I think strong general AI is coming soon [LW · GW], mentions a tangentially related idea: core researchers contribute much more insight than newer researchers. New researchers need a lot of time to become core researchers.

In Porby's model, the research productivity at year may be proportional to the number of researchers at year .

Replies from: stephen-mcaleese, stephen-mcaleese

↑ comment by Stephen McAleese (stephen-mcaleese) · 2022-10-05T21:08:58.297Z · LW(p) · GW(p)

Edit: rewrote the section on Price's Law to use Lotka's Law instead.

Replies from: puffymist, puffymist

↑ comment by puffymist · 2022-11-03T14:31:46.703Z · LW(p) · GW(p)

I just realized that Scott Alexander (2018) had previously written specifically about scientific progress, while Holden Karnofsky (2021) had written about broader technological progress [? · GW].

(Sorry about the long delay; I hope it's still of interest.)

↑ comment by Stephen McAleese (stephen-mcaleese) · 2022-09-30T21:17:12.631Z · LW(p) · GW(p)

Thanks for the explanation. It seems like Lotka's Law is much more accurate than Price's Law (though Price's Law is simpler and more memorable).

comment by Mitchell Reynolds (mitchell-reynolds) · 2023-04-05T02:53:30.430Z · LW(p) · GW(p)

Solid analysis and an exploratory [LW · GW] comment in thinking about the future of AI safety researchers.

I find it striking how many well-known organizations working on AI safety were founded very recently. This trend suggests that some of the most influential AI safety organizations will be founded in the future.

In various winner take all scenarios, being early at the right time is often more important than being truly "the best." I'm modestly confident this will be true for capabilities but unsure if this will be true for AI safety organizations.

I think this matters in thinking about where the ~1000 future AI safety researchers will be employed and what research agendas are being pursued. My low-confidence guess for the future distribution would be a slightly higher n vs today with a higher average of researchers-per-organization vs today.

comment by Stephen McAleese (stephen-mcaleese) · 2022-10-02T17:10:17.180Z · LW(p) · GW(p)

More edits:

- DeepMind: 5 -> 10.

- OpenAI: 5 -> 10.

- Moved GoodAI from the non-technical to technical table.

- Added technical research organization: Algorithmic Alignment Group (MIT): 4-7.

- Merged 'other' and 'independent researchers' into one group named 'other' with new manually created (accurate) estimate.