What is a Tool?

post by johnswentworth, David Lorell · 2024-06-25T23:40:07.483Z · LW · GW · 4 comments

Contents

- Cognition: What does it mean, cognitively, to view or model something as a tool?

- Convergence: Insofar as different minds (e.g. different humans) tend to convergently model the same things as tools, what are the “real patterns” in the environment which give rise to that convergence?

- Corroboration: From that characterization, what other properties or implications of tool-ness can we derive and check?

  - Modularity

  - Loopiness

Throughout this post, we’re going to follow the Cognition -> Convergence -> Corroboration methodology[1]. That means we’ll tackle tool-ness in three main stages, each building on the previous:

- Cognition: What does it mean, cognitively, to view or model something as a tool?

- Convergence: Insofar as different minds (e.g. different humans) tend to convergently model the same things as tools, what are the “real patterns” in the environment which give rise to that convergence?

- Corroboration: Having characterized the real patterns convergently recognized as tool-ness, what other properties or implications of tool-ness can we derive and check? What further predictions does our characterization make?

We’re not going to do any math in this post, though we will gesture at the spots where proofs or quantitative checks would ideally slot in.

Cognition: What does it mean, cognitively, to view or model something as a tool?

Let’s start with a mental model of (the cognition of) problem solving, then we’ll see how “tools” naturally fit into that mental model.

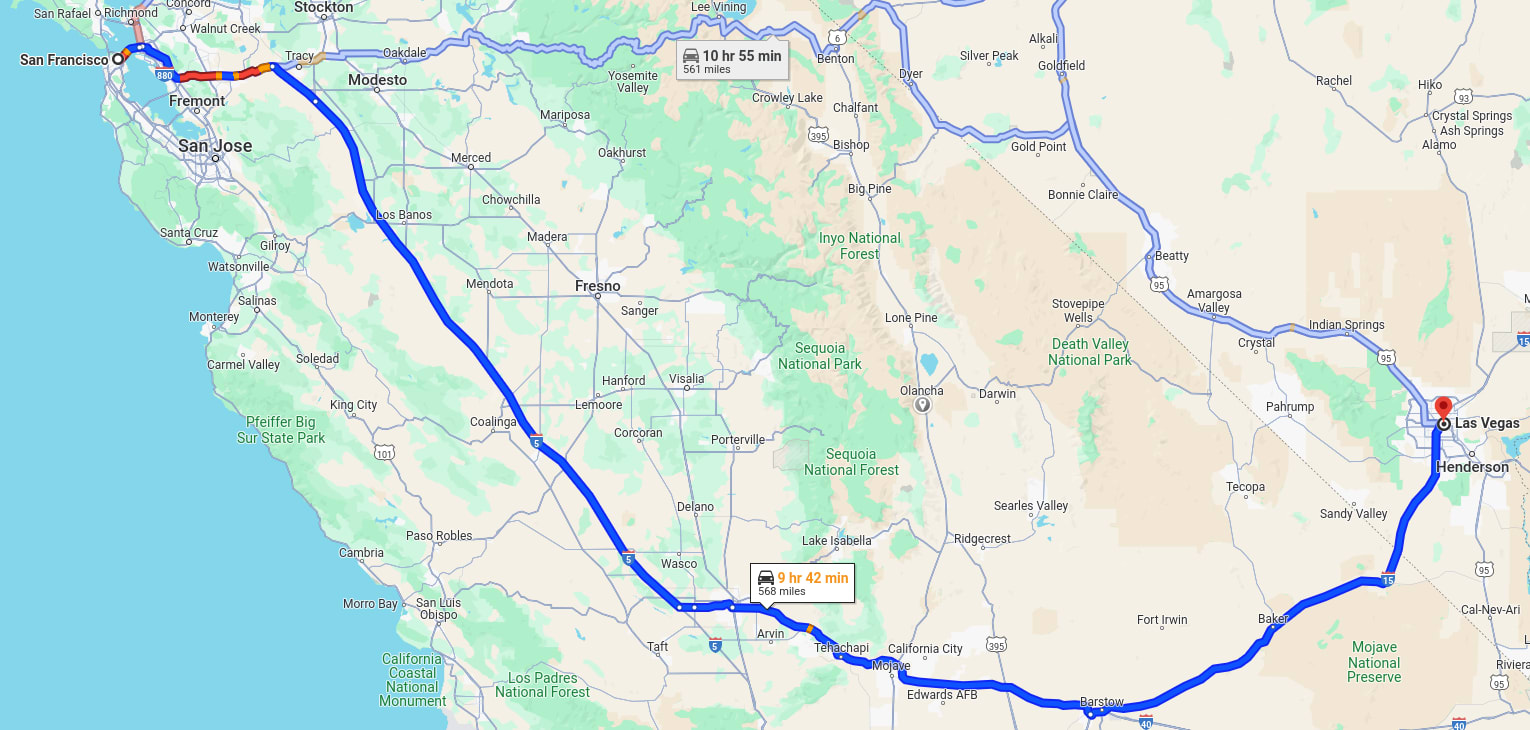

When problem-solving, humans often come up with partial plans - i.e. plans which have “gaps” in them, which the human hasn’t thought through how to solve, but expects to be tractable. For instance, if I’m planning a road trip from San Francisco to Las Vegas, a partial plan might look like “I’ll take I-5 down the Central Valley, split off around Bakersfield through the Mojave, then get on the highway between LA and Vegas”. That plan has a bunch of gaps in it: I’m not sure exactly what route I’ll take out of San Francisco onto I-5 (including whether to go across or around the Bay), I don’t know which specific exits to take in Bakersfield, I don’t know where I’ll stop for gas, I haven’t decided whether I’ll stop at the town museum in Boron, I might try to get pictures of the airplane storage or the solar thermal power plant, etc. But I expect those to be tractable problems which I can solve later, so it’s totally fine for my plan to have such gaps in it.

How do tools fit into that sort of problem-solving cognition?

Well, sometimes similar gaps show up in many different plans (or many times in one plan). And if those gaps are similar enough, then it might be possible to solve them all “in the same way”. Sometimes we can even build a physical object which makes it easy to solve a whole cluster of similar gaps.

Consider a screwdriver, for instance. There’s a whole broad class of problems for which my partial plans involve unscrewing screws. Those partial plans involve a bunch of similar “unscrew the screw” gaps, for which I usually don’t think in advance about how I’ll unscrew the screw, because I expect it to be tractable to solve that subproblem when the time comes. A screwdriver is a tool for that class of gaps/subproblems[2].

So here’s our rough cognitive characterization:

- Humans naturally solve problems using partial plans which contain “gaps”, i.e. subproblems which we put off solving until later

- Sometimes there are clusters of similar gaps

- A tool makes some such cluster relatively easy to solve.
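To make the characterization above concrete, here is a minimal toy sketch in Python. Everything in it is an illustrative assumption rather than part of the argument: the `PartialPlan` class, the example plans, and especially the choice to "cluster" gaps by exact label, which stands in for whatever similarity-based clustering minds actually do.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PartialPlan:
    """A plan whose unsolved steps ("gaps") are left as labels to fill in later."""
    goal: str
    gaps: list[str] = field(default_factory=list)


def gap_clusters(plans):
    """Count how often each kind of gap recurs across plans.

    Grouping by exact label is a crude stand-in for real similarity-based clustering.
    """
    return Counter(gap for plan in plans for gap in plan.gaps)


def gaps_covered(tool_cluster, plans):
    """Number of gaps, across all plans, that a tool for `tool_cluster` would make easy."""
    return gap_clusters(plans)[tool_cluster]


# Hypothetical plans; the gap labels are made up for illustration.
plans = [
    PartialPlan("replace laptop battery", ["unscrew the screw", "find a replacement battery"]),
    PartialPlan("fix cabinet door", ["unscrew the screw", "realign the hinge"]),
    PartialPlan("open toy battery cover", ["unscrew the screw"]),
]

print(gap_clusters(plans).most_common(1))        # [('unscrew the screw', 3)]
print(gaps_covered("unscrew the screw", plans))  # 3
```

In this toy picture, a screwdriver is "for" the gap-cluster that recurs three times across otherwise unrelated plans.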

Convergence: Insofar as different minds (e.g. different humans) tend to convergently model the same things as tools, what are the “real patterns” in the environment which give rise to that convergence?

First things first: there are limits to how much different minds do, in fact, convergently model the same things as tools.

You know that thing where there’s some weird object or class of objects, and you’re not sure what it is or what it’s for, but then one day you see somebody using it for its intended purpose and you’re like “oh, that’s what it’s for”? (👀)

From this, we learn several things about tools:

- Insofar as different humans convergently model the same things as tools at all, the real patterns which give rise to that convergence are (at least in part) patterns of usage, not just properties internal to the tool itself.

- Once we see someone using a tool for something, it usually is pretty obvious that the thing is a tool and I’d expect most people to converge on that model. I also expect people to mostly converge on their model of what-the-tool-is-for, conditional on the people seeing the tool used in the same ways.

- Note that we often only need to see a tool used a handful of times, maybe even just once, in order for this convergence to kick in. So, an analogue of the word-learning argument [LW · GW] applies: we can’t be learning the convergent tool-model by brute-force observation of lots of usage examples; most of the relevant learning must happen in an unsupervised way, not routing through usage examples.[3]

To my mind, these facts suggest a model analogous to the cluster model of word-meaning [LW · GW]. The model:

- There’s (some degree of) convergence across minds in the “gaps” which naturally show up in plans.

- Sometimes those gaps form clusters. We already have a story [LW · GW] (including some math and quantitative empirics) for when clusters are convergent across minds.

- … so when someone sees another person using a certain object to solve a subproblem from a particular cluster, that one example might be enough to connect that object to that subproblem-cluster - i.e. model the object as a tool whose purpose is to solve subproblems in that cluster.

- … so insofar as the clusters are convergent across minds, different minds should also need only a few usage-examples to agree on which subproblem-cluster(s) a given tool “is for”.
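The few-examples step in the model above can be sketched as a toy calculation. This is only an illustration under strong assumptions: we pretend each subproblem-cluster is summarized by a centroid in a made-up 2-D feature space, that two observers ("alice" and "bob", both hypothetical) have similar but not identical centroids, and that connecting an object to a cluster just means picking the nearest centroid to one observed use.

```python
import math


def nearest_cluster(centroids, point):
    """Return the name of the cluster whose centroid is closest to `point`."""
    return min(centroids, key=lambda name: math.dist(centroids[name], point))


# Hypothetical features: (how much twisting is needed, how much impact is needed).
# Each observer already has clusters, learned without any tool-usage examples.
alice = {"turn-a-fastener": (0.9, 0.1), "drive-a-nail": (0.1, 0.9)}
bob = {"turn-a-fastener": (0.8, 0.2), "drive-a-nail": (0.2, 0.8)}

# One observed use of an unfamiliar object, described in the same feature space.
observed_use = (0.85, 0.15)

print(nearest_cluster(alice, observed_use))  # turn-a-fastener
print(nearest_cluster(bob, observed_use))    # turn-a-fastener
```

Because the clusters were already there and roughly shared, a single usage example is enough for both observers to agree on what the object is for. The hard part, which this sketch simply assumes away, is the convergence of the gaps and clusters themselves.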

Ideally, we’d like to mathematically prove the convergence steps above, or at least come up with some realistic and simple/empirically-checkable sufficient conditions under which convergence occurs. We have at least part of such an argument for cluster convergence, but convergence in the “gaps” which show up in plans is a wide-open problem.

Corroboration: From that characterization, what other properties or implications of tool-ness can we derive and check?

We’ll give a couple example implications here; perhaps you can come up with some more!

Modularity

Let’s go back to the road trip example. My (partial) road trip plan has a bunch of gaps in it[4]:

- I’m not sure exactly what route I’ll take out of San Francisco onto I-5 (including whether to go across or around the Bay)

- I don’t know which specific exits to take in Bakersfield

- I don’t know where I’ll stop for gas

- I haven’t decided whether I’ll stop at the town museum in Boron

- I might try to get pictures of the airplane storage or the solar thermal power plant

Notice that these gaps are modular: my choice about what route to take out of San Francisco is mostly-independent of which exits I take in Bakersfield, the choice about where to stop for gas is mostly-independent of both of those (unless it’s along the route from San Francisco to I-5, or in Bakersfield), the choice about whether to stop at the town museum in Boron is mostly-independent of all of those, etc. Insofar as the choices interact at all, it’s either via a few low-dimensional variables (e.g. how much time various routes or stops will take) or with only a few other choices.

From a cognitive perspective, modularity is an important prerequisite for the whole partial-plan-with-gaps strategy to be useful. If all the subproblems were tightly coupled to each other, then we couldn't solve them one-by-one as they came up.
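Here is a rough sketch of why modularity matters, under the simplifying assumption that the gaps interact only through one additive variable (total time). The option names and the minute counts are made up for illustration. When costs simply add, solving each gap on its own gives the same answer as searching over every combination, while looking at far fewer possibilities.

```python
import math
from itertools import product

# Hypothetical options for three gaps, with made-up time costs in minutes.
options = {
    "route out of SF": {"across the Bay": 50, "around the Bay": 65},
    "Bakersfield exit": {"exit A": 10, "exit B": 12},
    "gas stop": {"stop 1": 15, "stop 2": 20, "stop 3": 18},
}


def solve_separately(options):
    """Pick the cheapest option for each gap on its own (valid only if costs just add)."""
    return {gap: min(choices, key=choices.get) for gap, choices in options.items()}


def solve_jointly(options):
    """Brute-force search over every combination of choices."""
    gaps = list(options)
    best = min(
        product(*(options[g] for g in gaps)),
        key=lambda combo: sum(options[g][c] for g, c in zip(gaps, combo)),
    )
    return dict(zip(gaps, best))


# Number of possibilities each approach has to look at.
checked_separately = sum(len(c) for c in options.values())    # 2 + 2 + 3
checked_jointly = math.prod(len(c) for c in options.values())  # 2 * 2 * 3

print(solve_separately(options) == solve_jointly(options))  # True
print(checked_separately, checked_jointly)                  # 7 12
```

With only three gaps the difference is small, but the separate approach grows like a sum while the joint one grows like a product. If the gaps were tightly coupled, the separate approach would no longer be guaranteed to find a good plan.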

Modularity of subproblems, in turn, implies some corresponding modularity in tools.

Consider a screwdriver again. Screws show up in lots of different objects, in lots of different places. In order for a screwdriver to make those subproblems easy to solve, it has to be able to screw/unscrew screws in all those different contexts (or at least a lot of them).

Furthermore, the screwdriver has to not have lots of side effects which could mess up other subproblems in some of those contexts - for instance, if the screwdriver is to be used for tight corners when building wooden houses, it better not spit flames out the back. To put it differently: part of what it means for a tool to solve a subproblem-cluster is that the tool roughly preserves the modularity of that subproblem-cluster.

Loopiness

Having a screwdriver changes what partial plans I make: with a screwdriver on hand, I will make a lot more plans which involve screwing or unscrewing things. Hammers are notorious for making an awful lot of things look like nails. When I have a tool which makes it easy to solve subproblems in a certain cluster, I’m much more willing to generate partial plans whose gaps are in that cluster.

… and if I’m generating partial plans with a different distribution of gaps, then that might change which clusters naturally show up in the distribution of gaps.

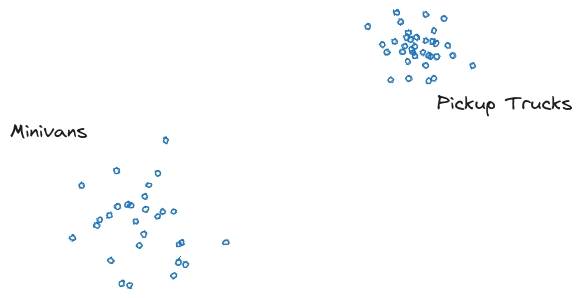

We’ve talked about a phenomenon like this before in the context of social constructs [LW · GW]. A toy example from that context uses a model in which cars and trucks each naturally cluster:

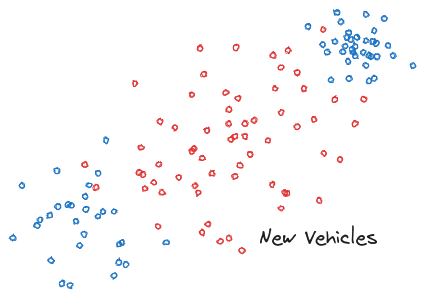

… but of course people could just choose to build different kinds of vehicles which fall in other parts of the space:

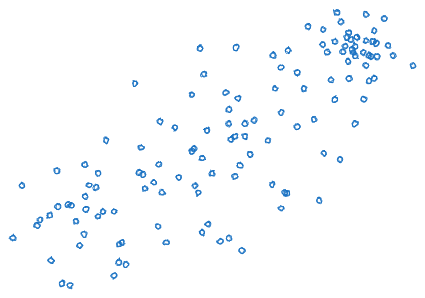

… and that changes which clusters are natural.

The key point here is that we can change which clusters are natural/convergent for modeling the world by changing what stuff is in the world.
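The car/truck toy example can be sketched numerically. This is an assumption-laden illustration, not the model from the linked post: vehicles are reduced to a single made-up "size" score, and clusters are found by the simple rule of splitting a sorted list wherever neighbors are more than `max_gap` apart.

```python
def cluster_1d(values, max_gap):
    """Group values into clusters, splitting wherever sorted neighbors differ by more than `max_gap`."""
    clusters = []
    for v in sorted(values):
        if clusters and v - clusters[-1][-1] <= max_gap:
            clusters[-1].append(v)  # close enough to the previous value: same cluster
        else:
            clusters.append([v])    # too far away: start a new cluster
    return clusters


# Hypothetical size scores: three "cars" and three "trucks".
vehicles = [10, 12, 14, 30, 32, 34]
print(cluster_1d(vehicles, max_gap=5))
# [[10, 12, 14], [30, 32, 34]]

# People build vehicles which fall in between the two groups.
vehicles_after = vehicles + [18, 22, 26]
print(len(cluster_1d(vehicles_after, max_gap=5)))  # 1
```

Nothing about the clustering rule changed between the two calls; only the stuff in the world did, and that was enough to change which clusters are natural.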

That sort of phenomenon is also possible with tools. In particular: in a given environment, we hypothesized in the previous section that there’s (at least some) convergence across minds in the gaps which show up in partial plans, and convergence in clustering of those gaps. But the “given environment” includes whatever tools are in the environment. So sometimes, by creating a new tool, someone might change what kinds of partial plans people form, and thereby create a whole new subproblem-cluster, or change the shapes of existing subproblem-clusters.

Furthermore, insofar as people are aware of different tools available in the environment, they might form different kinds of partial plans with different kinds of gaps, and thereby have different subproblem-clusters. I expect that’s a big factor in the cases where it takes tens of examples to learn what a new tool is for (as opposed to 1-3 examples): there’s an iterative process of refining one’s model of the tool and its cluster, then generating different kinds of plans to leverage the new tool/cluster, then re-clustering based on the new kinds of plans generated. Eventually, that process settles down to a new steady state.

[1] … a name which we just made up for a methodology we’ve found quite fruitful lately and should probably write an independent post on at some point. Consider this post an example/case study of how the Cognition -> Convergence -> Corroboration methodology works.

[2] Probably some of you are thinking “doesn’t the screwdriver also change which plans I make, and therefore which gaps show up in my plans? Y’know, because I’m more likely to consider plans involving unscrewing stuff if I have a screwdriver.” Yes; hold that thought.

[3] Even when we do need more than a handful of examples, it’s more like tens of different examples than the vastly larger number that brute-force learning would need, so the argument still applies. More on that later.

[4] Note that the subproblems in the road trip example in particular are not necessarily solved by tools. We do not expect that every subproblem or even cluster of subproblems has an existing tool which fits it.

4 comments


comment by p.b. · 2024-06-26T18:18:48.411Z · LW(p) · GW(p)

I don't get what role the "gaps" are playing in this.

Where is it important for what a tool is, that it is for a gap and not just any subproblem? Isn't a subproblem for which we have a tool never a gap?

Or maybe the other way around: Aren't subproblem classes that we are not willing to leave as gaps those we create tools for?

If I didn't know about screwdrivers I probably wouldn't say "well, I'll just figure out how to remove this very securely fastened metal thing from the other metal thing when I come to it".

comment by jmh · 2024-06-26T01:08:34.554Z · LW(p) · GW(p)

Perhaps it's just the terminology of the story but are tools modeled as physical objects or are they more general than that? For example, I often think of things like government and markets (and other social institutions) as tools which exist in a social-level problem space.

With footnote 4, is the point that there is no tight problem-tool mapping, in the sense that we will have problems/subproblems that are solved without the use of a tool (as you have them modeled/defined)? Or is it better understood as saying we will have gaps we have not yet solved, or not yet solved in some way that has converged to some widely shared conception of a tool that solves the problem?

reply by johnswentworth · 2024-06-26T01:28:02.380Z · LW(p) · GW(p)

Perhaps it's just the terminology of the story but are tools modeled as physical objects or are they more general than that?

I was trying to be agnostic about that. I think the cognitive characterization works well for a fairly broad notion of tool, including thought-tools, mathematical proof techniques, or your examples of governments and markets.

With footnote 4, is the point that...

There may be subproblems which don't fit any cluster well, there may be clusters which don't have any standard tool, some of those subproblem-clusters may be "solved" by a tool later but maybe some never will be... there's a lot of possibilities.

comment by quetzal_rainbow · 2024-06-27T08:38:06.618Z · LW(p) · GW(p)

I think the important part of toolness is knowledge of area of applicability of said tools. Within area of applicability you can safely optimize state of tool in arbitrary directions.