[ASoT] Observations about ELK

post by leogao · 2022-03-26T00:42:20.540Z · LW · GW

This document outlines some of my current thinking about ELK, as a series of observations that inform it.

Editor’s note: I’m experimenting with having a lower quality threshold for just posting things even while I’m still confused and unconfident about my conclusions, but with this disclaimer at the top. Thanks to AI_WAIFU and Peter Barnett for discussions.

- One way to think of ELK is that we have a set of all possible reporters, each of which takes in the latent states of a world model and outputs, for the sake of concreteness, the answer to one particular fixed question. So essentially we can think of a reporter+model pair as assigning an answer to every point in the action space. Specifically, collapsing possible sequences of actions into just a set of possible actions, and only worrying about one photo, doesn’t result in any loss of generality but makes things easier to talk about.

- We pick some prior over the set of possible reporters. We can collect training data which looks like pairs (action, answer). This can, however, only cover a small part of the action space, specifically limited by how well we can “do science.”

- This prior has to depend on the world model. If it didn’t, then you could have two different world models with the same behavior on the set where we can do science, but where one of the models understands what the actual value of the latent variable is, and one is only able to predict that the diamond will still appear there but can’t tell whether the diamond is still real. A direct translator for the second will be a human simulator for the first.

- More generally, we don’t really have guarantees that the world model will actually understand what’s going on, and so it might genuinely believe things about the latent variable that are wrong. Like, we might have models which think exactly like a human and so they don't understand anything a human wouldn’t understand, so the direct translator for this model would also simultaneously be a human simulator, because the model literally believes exactly what a human would believe.

- There are more than just human simulators and direct translators that are consistent with the training data; there are a huge number of ways the remaining data can be labeled. This basically breaks any proposal that starts with penalizing reporters that look like a human simulator.

- I basically assume the “human simulator” is actually simulating us and whatever science-doing process we come up with, since this doesn’t change much and we’re assuming that doing science alone isn’t enough because we’re looking at the worst case.

- So, for any fixed world model, we need to come up with some prior over all reporters such that after picking all the reporters consistent with our data (action, answer), the maximum of the resulting posterior is the direct translator.

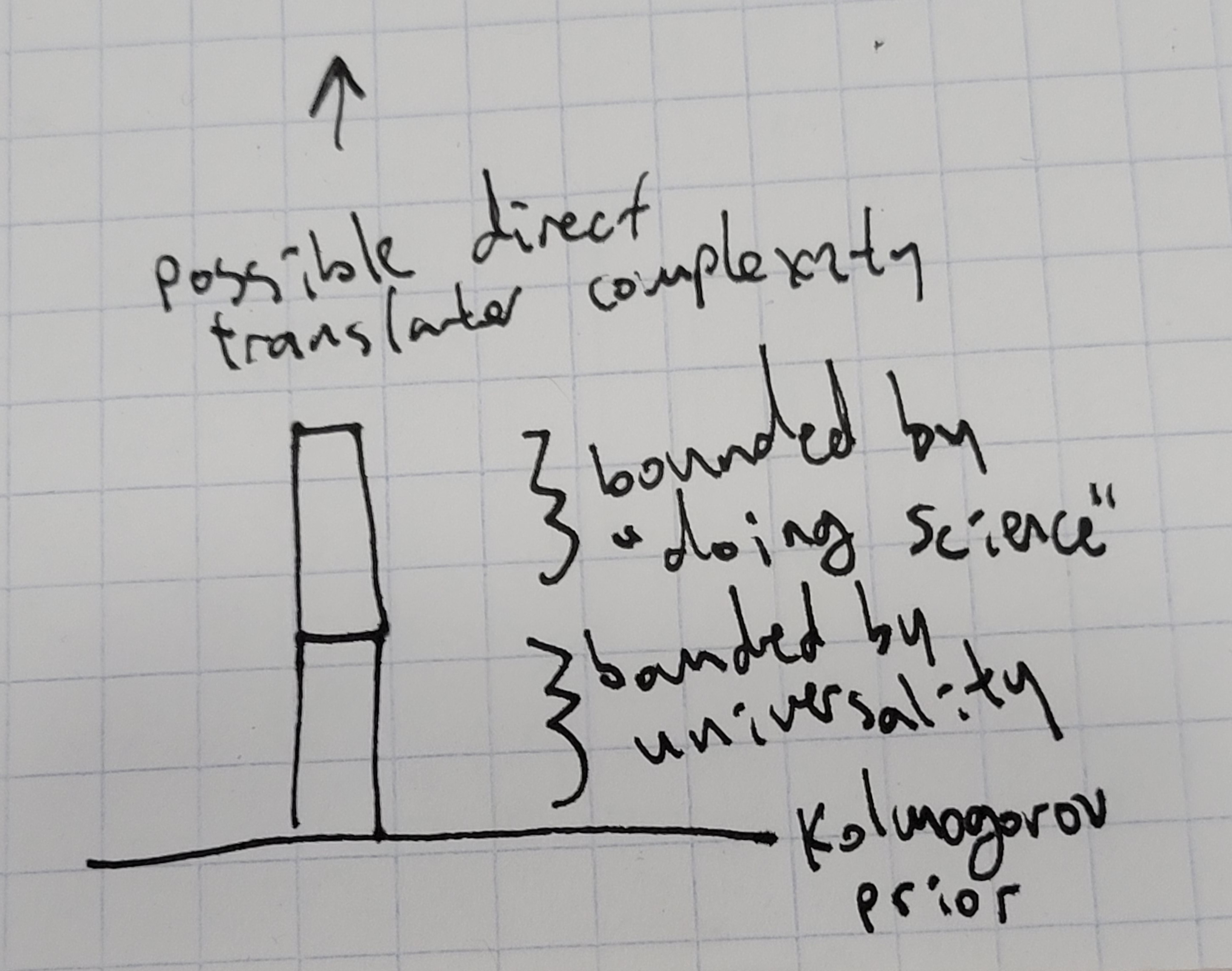
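As a toy illustration of the setup so far (not a formalization from the ELK report), the sketch below treats a reporter as nothing more than a labeling of a tiny, finite action space, keeps the reporters consistent with the training data, and renormalizes the prior over them. The function name `posterior_over_reporters`, the four-action space, and the uniform prior are all hypothetical choices made for the example.

```python
from itertools import product

def posterior_over_reporters(num_actions, prior, data):
    """Condition a prior over reporters on (action, answer) training pairs.

    A reporter is modeled as a tuple of booleans, one answer per action.
    `prior` maps a reporter to a positive unnormalized weight (assumed).
    `data` maps action indices we can "do science" on to their answers.
    """
    reporters = product([False, True], repeat=num_actions)
    # Keep only reporters that agree with every labeled action.
    consistent = [r for r in reporters
                  if all(r[a] == ans for a, ans in data.items())]
    total = sum(prior(r) for r in consistent)
    return {r: prior(r) / total for r in consistent}

# Four actions, but we can only label actions 0 and 1.
data = {0: True, 1: True}
uniform = lambda r: 1.0
posterior = posterior_over_reporters(4, uniform, data)
print(len(posterior))  # 4 reporters survive: one per labeling of actions 2 and 3
```

With a uniform prior, every labeling of the unobserved actions is equally likely after conditioning, so the data alone cannot single out the direct translator. In general the number of surviving reporters in this toy model is 2 raised to the number of unlabeled actions, which is why the choice of prior carries all the weight.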

- A big assumption I’m making is that the KL divergence between our prior and the complexity prior has to be finite. My intuition for this is a) the universality of the complexity prior, and b) even if our prior doesn’t have to cover all possible reporters, it still seems reasonable that as complexity increases, the number of reporters to which our prior assigns nonzero mass needs to increase exponentially, which gets something similar to universality of the complexity prior on the subset of plausible reporters. I think this point is the main weak point of the argument.

- Since the amount of data we can get is bounded by our ability to do science, the amount of information we can get from that is also bounded. However, since we’re assuming worst case, we can make the complexity difference between the direct translator and all the other junk arbitrarily big, and therefore make the direct translator arbitrarily less likely. Since our modified prior and our data only get us a finite amount of evidence, we can always imagine a world where the direct translator is sufficiently complex that we can’t pick it out.

- So to solve ELK we would need to somehow rule out a huge part of the set of possible reporters before even updating on data. In some sense this feels like we ran in a circle, because there is always the prior that looks at the model, solves ELK, and then assigns probability 1 to the direct translator. So we can always just move all the difficulty into choosing the prior and then the data part is just entirely useless. I guess in retrospect it is kind of obvious that the prior has to capture the part that the data can’t capture, but that's literally the entire ELK problem.

- One seemingly useful thing this does tell us is that our prior has to be pretty restrictive if we want to solve ELK in the worst case, which I think rules out a ton of proposals right off the bat. In fact, I think your prior can only assign mass to a finite number of reporters, because to get the sum to converge it needs to decay fast enough, which also gets you the same problem.

- Only assigning mass to a finite number of reporters is equivalent to saying we know the upper bound of direct translator complexity. Therefore, we can only solve ELK if we can bound direct translator complexity, but in the worst case setting we're assuming direct translator complexity can be unbounded.

So either we have to be able to bound complexity above, violating the worst case assumption, or we have to bound the number of bits we can get away from the complexity prior. Therefore, there exists no worst case solution to ELK. (Update: I no longer think this is accurate, because there could exist a solution to ELK that functions entirely by looking at the original model. Then the conclusion of this post is weaker and only shows that assigning an infinite prior is not a feasible strategy for worst case.)
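The finite-evidence step can be sketched numerically under a simplification that is stronger than the post's "finite KL" assumption: suppose our prior's log-ratio to the complexity prior 2^-K is within ± `prior_slack_bits` for every reporter, and suppose both the direct translator and some junk reporter fit all the training data. Then the data cannot separate them, and the posterior odds equal the prior odds. The function name and the numbers below are hypothetical.

```python
def direct_translator_log_odds_bound(k_direct, k_junk, prior_slack_bits):
    """Upper bound, in bits, on log2 odds of direct translator vs. a junk reporter.

    Assumes (for illustration only):
      * both reporters are consistent with the data, so likelihoods cancel;
      * our prior p satisfies |log2(p(r) / 2**-K(r))| <= prior_slack_bits
        for every reporter r, with K(r) the reporter's complexity in bits.
    """
    complexity_gap = k_direct - k_junk
    # p(direct)/p(junk) <= 2**(2*slack) * 2**(-complexity_gap)
    return 2 * prior_slack_bits - complexity_gap

# With 50 bits of slack and a junk reporter of complexity 100:
modest = direct_translator_log_odds_bound(150, 100, 50)       # 50
worst_case = direct_translator_log_odds_bound(1000, 100, 50)  # -800
```

A negative bound means the direct translator cannot be the posterior maximum under these assumptions. Because the worst case lets `k_direct` grow without limit while the slack stays fixed, the bound can always be driven negative, which is the shape of the argument above.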
