Comments

if asked about recommendation algorithms, I think it might be much higher - given a basic understanding of what they are, their addictiveness, etc

imo, our philosophical progress has stagnated because of media (in the classic sense of the word) - recording ideas makes it harder to forget them. akin to training at very low dropout

In a parallel universe with a saner civilization, there must be tons of philosophy professors working with tons of AI researchers to try to improve AI's philosophical reasoning.

Sanskrit scholars worked for generations to make Sanskrit better for philosophy

But maybe we are just bad at politics and coalition-building.

Mostly due to a feeling of looking down on people imo

Thank you. We just had some writers join, who're, among other things, going to make an up-to-date About Us section. Some out-of-date stuff is available on https://aiplans.substack.com

Something that we use internally is: https://docs.google.com/document/d/1wcVlWRTKJqiXOvKNl6PMHCBF3pQItCCcnYwlWvGgFpc/edit?usp=sharing

We're primarily focused on making a site rebuild atm, which has a lot of new and improved features users have been asking for. Preview (lots of form factor stuff broken atm) at: https://ai-plans-site.pages.dev/

I want to know.

Ok, so are these not clickbait then?

"Stop This Train, Win a Lamborghini"

"$1 vs $250,000,000 Private Island!"

"$1 vs $100,000,000 House!"

"I Hunted 100 People!"

"Press This Button To Win $100,000!"

My Clients, The Liars

And All The Shoggoths Merely Players

Acting Wholesomely

These are the most obvious examples. By 'clickbait', here I mean a title that's more for drawing in readers than for accurately communicating what the post is about. Doesn't mean it can't be accurate too - after all, MrBeast rarely lies in his video titles - but it means that instead of choosing the title that is most accurate, they chose the most eye-catching and baiting title out of the pool of accurate/semi-accurate titles.

update on my beliefs - among humans of above average intelligence, the primary factor for success is willpower - stamina, intensity and consistency

You might be interested in the Broad List of Vulnerabilities

Thank you, this is useful. Planning to use this for AI-Plans.

Hasn't that happened?

Yet, the top posts on LessWrong are pretty much always clickbaited, just in the LessWrong lingo.

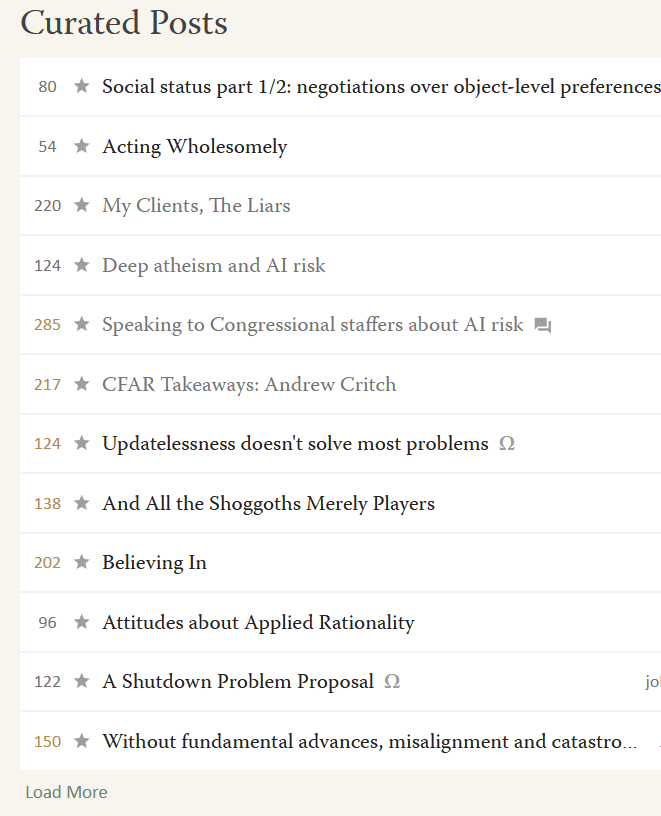

The Curated Posts seem to be some of the worst cases of this:

I find that LessWrong folk in general are really, really susceptible to deception and manipulation when it's done in their language.

Week 3: How hard is AI alignment?

Seems like something important to be aware of, even if they may disagree.

for sure. right now it's just a google form and google sheets. would you be interested in taking charge of this?

Thank you, I've labelled that as the form link now and added the DB link.

Thank you! I'll add those as well!

Ah, sorry, here's the link! https://docs.google.com/spreadsheets/d/1uXzWavy1mS0X-uQ21UPWHlAHjXFJoWWlN62EyKAoUmA/edit?usp=sharing

Thank you for pointing that out, also added it to the post!

Updated to 115.

Perhaps a note on Pre-Requisites would be useful.

E.g. the level of math & comp sci that's assumed.

Suggestion: try going through the topics with 50+ random strangers. Wildly useful for improving written work.

Yes, that's what I'm referring to. As in, getting that enacted as a policy.

This is an absurdly low bar, but yes, this should be done.

How can I help?

We're doing this at AI-Plans.com!! With the Critique-a-Thon and the Law-a-Thon!

Extremely important

there is an issue with surface level insights being unfairly weighted, but this is solvable, imo. especially with YouTube, which can see which commenters have watched the full video.

You can compete with someone more intelligent but less hard working by being more organized, disciplined, focused and hard working. And being open to improvement.