AI doing philosophy = AI generating hands?

post by Wei Dai (Wei_Dai) · 2024-01-15T09:04:39.659Z · LW · GW · 23 comments

Comments sorted by top scores.

comment by johnswentworth · 2024-01-15T19:00:01.820Z · LW(p) · GW(p)

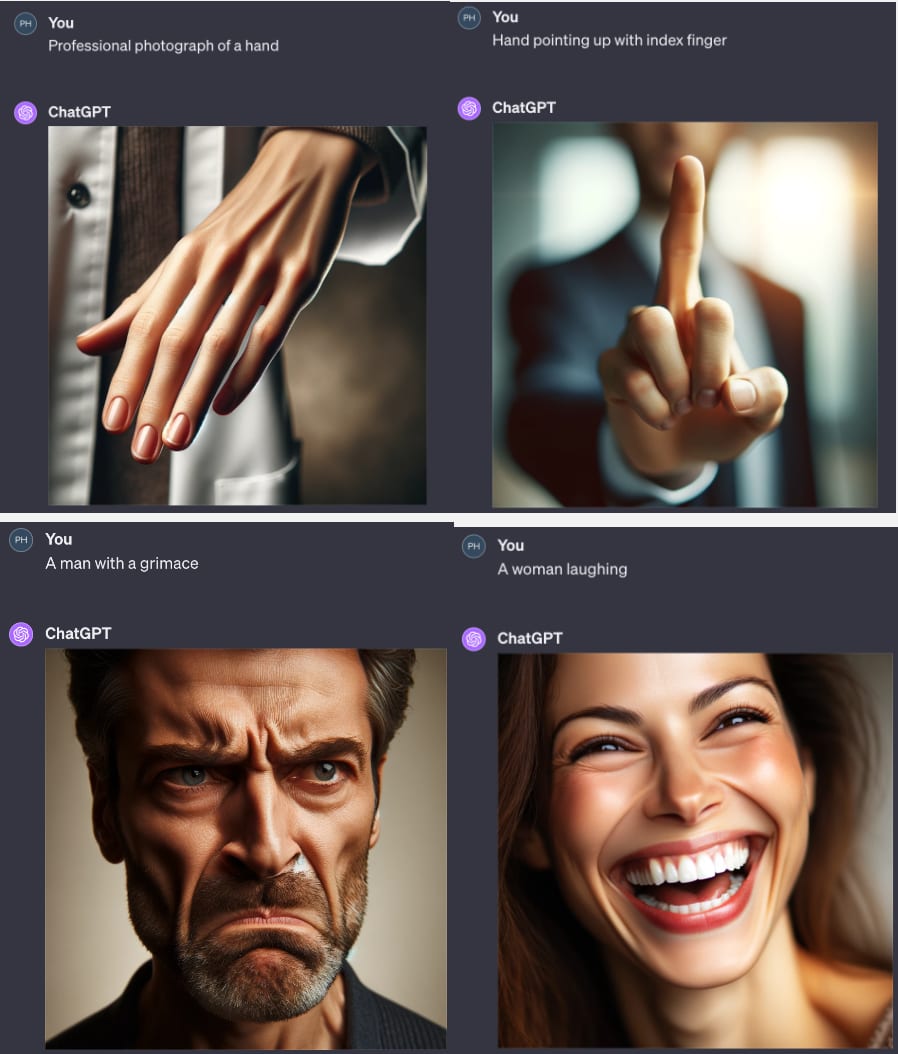

> Some explanations I've seen for why AI is bad at hands:

My girlfriend practices drawing a lot, and has told me many times that hands (and faces) are hard not because they're unusual geometrically but because humans are particularly sensitive to "weirdness" in them. So an artist can fudge a lot with most parts of the image, but not with hands or faces.

My assumption for some time has been that e.g. those landscape images you show are just as bad as the hands, but humans aren't as tuned to notice their weirdness.

Replies from: Wei_Dai, Phib

↑ comment by Wei Dai (Wei_Dai) · 2024-01-15T21:51:27.369Z · LW(p) · GW(p)

Even granting your girlfriend's point, it's still true that AIs' image generation capabilities are tilted more towards landscapes (and other types of images) and away from hands, compared with humans, right? I mean, by the time that any human artist can create landscape images that look anywhere near as good as the ones in my example, they would certainly not be drawing hands as bad as the ones in my example (i.e., completely deformed, with the wrong number of fingers and so on).

Replies from: johnswentworth

↑ comment by johnswentworth · 2024-01-16T02:39:05.517Z · LW(p) · GW(p)

By the time a human artist can create landscape images which look nearly as good as those examples to humans, yeah, I'd expect they at least get the number of fingers on a hand consistently right (which is also a "how good it looks to humans" thing). But that's still reifying "how good it looks to humans" as the metric.

↑ comment by worse (Phib) · 2024-01-17T21:34:51.582Z · LW(p) · GW(p)

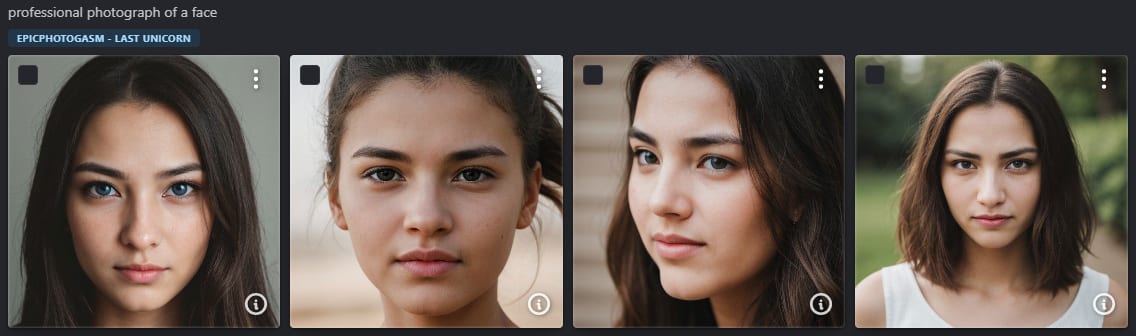

I think if this were true, then it would also hold that faces are done rather poorly right now which, maybe? Doing some quick tests, yeah, both faces and hands, at least on DALL-E 3, seem to be similar levels of off to me.

Replies from: gwern

↑ comment by gwern · 2024-01-17T21:57:01.715Z · LW(p) · GW(p)

I disagree with Wentworth here: faces are easy. That's why they were the first big success of neural net generative modeling. They are a uniform object usually oriented the same way with a reliable number of features like 2 eyes, 1 nose, 1 mouth, and 2 ears. (Whereas with hands, essentially nothing can be counted on, not the orientation, not the number of hands nor fingers, nor their relationships. And it's unsurprising that we are so often blind to serious errors in illustrations like having two left hands or two left feet.) Humans are hyperalert to the existence of faces, but highly forgiving about their realism :-)

This is why face feature detectors were so easy to create many decades ago. And remember ProGAN & StyleGAN: generating faces that people struggled to distinguish from real was easy for GANs, and people rate GAN faces as 'more trustworthy' etc. (Generally, you could only tell by looking at the parts which weren't faces, like the earrings, necklaces, or backgrounds, and being suspicious if the face was centered & aligned to the 3 key points of the Nvidia dataset.) For a datapoint, I would note that when we fed cropped images of just hands into TADNE & BigGAN, we never saw them generate flawless hands, although the faces were fine. Or more recently, when SD first came out, people loved the faces... and it was the hands that screwed up the images, not the faces. The faces were usually fine.

If DALL-E 3 has faces almost as bad as its hands (although I haven't spent much time trying to generate photorealistic faces personally, I haven't noticed any shortage in the DALL-E subreddits), that probably isn't due to faces being as intrinsically hard as hands. They aren't. Nor can it be due to any intrinsic lack of data in online image scrapes - if there is one thing that is in truly absurd abundance online, it is images of human faces! (Particularly selfies.)

OA in the past has screwed around with the training data for DALL-Es, like DALL-E 2's inability to generate anime (DALL-E 3 isn't all that good either), so I would guess that any face-blindness was introduced as part of the data filtering & processing. Faces are very PII and politically-charged. (Consider how ImageNet was recently bowdlerized to erase all human faces in it! Talk about horses & barns...) OA has been trying to avoid copyrighted images or celebrities or politicians, so it would be logical for them to do things like run face recognition software, and throw out any images which contain any face which is loosely near any human with, say, a Wikipedia article which has a photo. They might go so far as to try to erase faces or drop images with too-large faces.

Replies from: Phib, Wei_Dai

↑ comment by worse (Phib) · 2024-01-18T00:26:47.997Z · LW(p) · GW(p)

So here was my initial quick test, I haven't spent much time on this either, but have seen the same images of faces on subreddits etc. and been v impressed. I think asking for emotions was a harder challenge vs just making a believable face/hand, oops

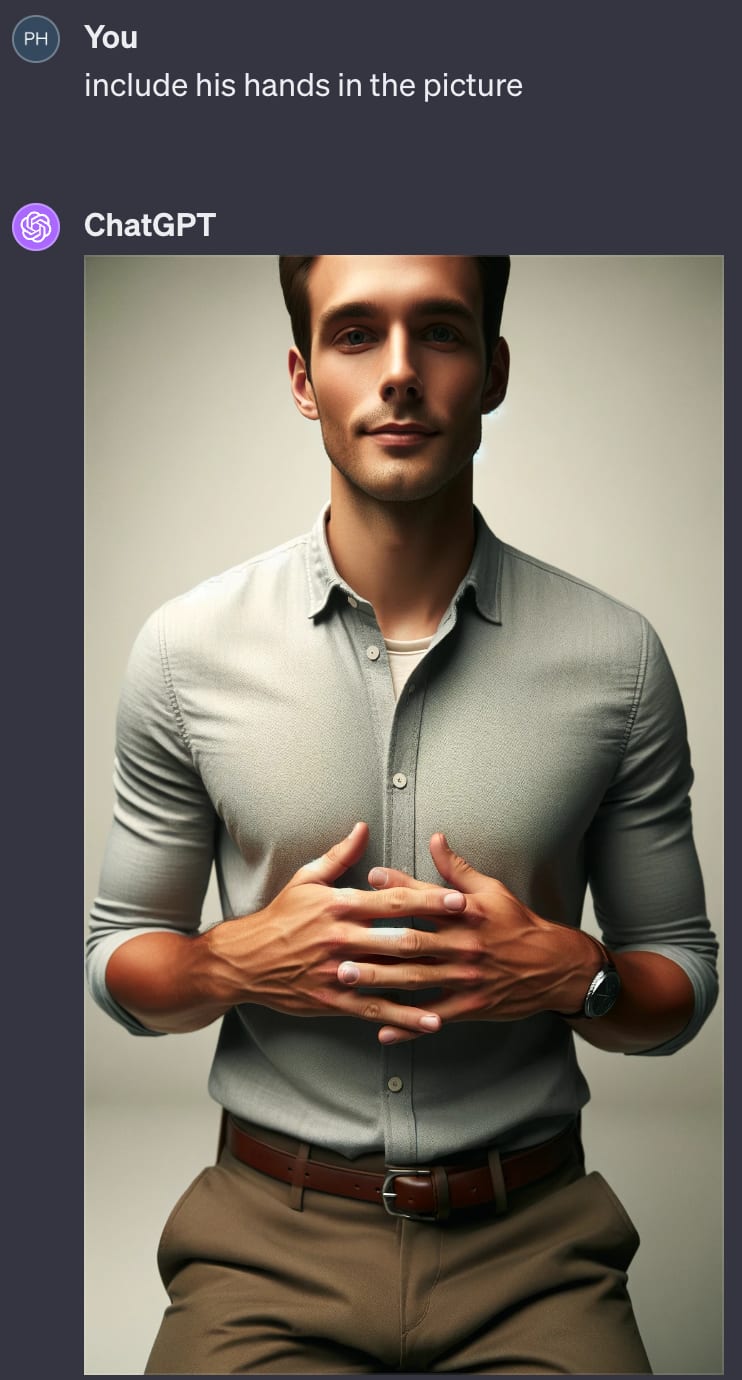

I really appreciate your descriptions of the distinctive features of faces and of pareidolia, and do agree that faces are more often better represented than hands; specifically, hands often have the more significant/notable issues (misshapen/missing/overlapped fingers). Versus with faces, where there's nothing as significant as missing an eye, but it can be hard to portray something more specific like an emotion (though the same can be said for, e.g., getting DALL-E not to flip me off when I ask for an index finger haha).

Rather difficult to label or prompt a specific hand orientation you'd like as well, versus I suppose, an emotion (a lot more descriptive words for the orientation of a face than a hand)

So yeah, faces do work, and regardless of my thoughts on uncanny valley of some faces+emotions, I actually do think hands (OP subject) are mostly a geometric complexity thing, maybe we see our own hands so much that we are more sensitive to error? But they don't have the same meaning to them as faces for me (minute differences for slightly different emotions, and benefitting perhaps from being able to accurately tell).

↑ comment by gwern · 2024-01-20T01:27:42.209Z · LW(p) · GW(p)

FWIW, I would distinguish between the conditional task of 'generating a hand/face accurately matching a particular natural language description' and the unconditional task of 'generating hands/faces'. A model can be good at unconditional generation but then bad at conditional generation because it, say, has a weak LLM or uses BPE tokenization, or because the description is too long. A model may know perfectly well how to model hands in many positions but then just not handle language perfectly well. One interesting recent paper on the sometimes very different levels of capabilities depending on the directions you're going in modalities: "The Generative AI Paradox: "What It Can Create, It May Not Understand"", West et al 2023.
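One way to write the distinction down, as a rough sketch in notation that is assumed here rather than taken from the paper or from any particular system: let $x$ be an image, $c$ a text description, $p_\theta$ the image model, and $f_\phi$ a hypothetical text encoder.

$$
\text{unconditional: } x \sim p_\theta(x), \qquad \text{conditional: } x \sim p_\theta\bigl(x \mid f_\phi(c)\bigr)
$$

Under this framing, a weak $f_\phi$ (or a description too long for it to encode) can degrade conditional samples even when $p_\theta$ itself models hands well.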

↑ comment by Wei Dai (Wei_Dai) · 2024-01-17T22:15:54.695Z · LW(p) · GW(p)

Yeah, agreed. See this example (using the same image generation model as in the OP) if anyone is still not convinced.

comment by gwern · 2024-01-15T19:47:44.906Z · LW(p) · GW(p)

> There are news articles about this problem going back to at least 2022

But people have known about it since well before 2022. The observation that hands appear uniquely hard probably goes back to the BigGAN ImageNet Generator released in late 2018 and widely used on Ganbreeder; that was the first general-purpose image generator which was high-quality enough that people could begin to notice that the hands seemed a lot worse. (Before that, the models were all too domain-restricted or too low-quality to make that kind of observation.) If no one noticed it on Ganbreeder, we definitely had begun noticing it in Tensorfork's anime generator work during 2019-2020 (especially the TADNE preliminaries), and that's why we created hand-tailored hand datasets and released PALM in June 2020.

(And I have been telling people 'hands are hard' ever since, as they keep rediscovering this... I'm still a little surprised how unwilling generator creators seem to be to create hand-specific datasets or add in hand-focus losses like Make-a-Scene's focal losses, considering how once SD was released, complaints about hands exploded in frequency and became probably the single biggest reason that samples had to be rejected or edited.)
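To illustrate what a "hand-focus" loss could look like in the simplest case, here is a minimal sketch of a region-weighted reconstruction error. It is not Make-a-Scene's actual loss; the function name, the mask, and the weight of 5.0 are all assumptions made up for this example.

```python
from typing import Sequence

Image = Sequence[Sequence[float]]
Mask = Sequence[Sequence[int]]


def region_weighted_mse(predicted: Image, target: Image, region_mask: Mask,
                        region_weight: float = 5.0) -> float:
    """Weighted mean squared error.

    Pixels where region_mask is nonzero (e.g. a hypothetical hand mask)
    count region_weight times as much as pixels outside the mask.
    """
    weighted_error = 0.0
    total_weight = 0.0
    for pred_row, target_row, mask_row in zip(predicted, target, region_mask):
        for pred, tgt, in_region in zip(pred_row, target_row, mask_row):
            weight = region_weight if in_region else 1.0
            weighted_error += weight * (pred - tgt) ** 2
            total_weight += weight
    if total_weight == 0.0:
        raise ValueError("cannot compute a loss over an empty image")
    return weighted_error / total_weight


# Toy 2x2 example: the top-left pixel is treated as the "hand" region.
target = [[0.0, 0.0], [0.0, 0.0]]
hand_mask = [[1, 0], [0, 0]]
error_on_hand = [[1.0, 0.0], [0.0, 0.0]]
error_elsewhere = [[0.0, 1.0], [0.0, 0.0]]

print(region_weighted_mse(error_on_hand, target, hand_mask))    # 0.625
print(region_weighted_mse(error_elsewhere, target, hand_mask))  # 0.125
```

The same single-pixel mistake costs five times as much when it falls inside the masked region, which is the basic idea of pushing a generator to spend more capacity on hands.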

comment by Matthew Barnett (matthew-barnett) · 2024-01-17T23:07:51.072Z · LW(p) · GW(p)

> In a parallel universe with a saner civilization, there must be tons of philosophy professors working with tons of AI researchers to try to improve AI's philosophical reasoning. They're probably going on TV and talking about 养兵千日,用兵一时 (feed an army for a thousand days, use it for an hour) or how proud they are to contribute to our civilization's existential safety at this critical time. There are probably massive prizes set up to encourage public contribution, just in case anyone had a promising out-of-the-box idea (and of course with massive associated infrastructure to filter out the inevitable deluge of bad ideas). Maybe there are extensive debates and proposals about pausing or slowing down AI development until metaphilosophical research catches up.

This paragraph gives me the impression that you think we should be spending a lot more time, resources and money on advancing AI philosophical competence. I think I disagree, but I'm not exactly sure where my disagreement lies. So here are some of my questions:

- How difficult do you expect philosophical competence to be relative to other tasks? For example:

- Do you think that Harvard philosophy-grad-student-level philosophical competence will be one of the "last" tasks to be automated before AIs are capable of taking over the world?

- Do you expect that we will have robots that are capable of reliably cleaning arbitrary rooms, doing laundry, and washing dishes, before the development of AI that's as good as the median Harvard philosophy graduate student? If so, why?

- Is the "problem" more that we need superhuman philosophical reasoning to avoid a catastrophe? Or is the problem that even top-human-level philosophers are hard to automate in some respect?

- Why not expect philosophical competence to be solved "by default" more-or-less using transfer learning from existing philosophical literature, and human evaluation (e.g. RLHF, AI safety via debate, iterated amplification and distillation etc.)?

- Unlike AI deception generally, it seems we should be able to easily notice if our AIs are lacking in philosophical competence, making this problem much less pressing, since people won't be comfortable voluntarily handing off power to AIs that they know are incompetent in some respect.

- To the extent you disagree with the previous bullet point, I expect it's because you think the problem is either (1) sociological (i.e. the problem is that people will actually make the mistake of voluntarily handing power to AIs they know are philosophically incompetent), or (2) hard because of the difficulty of evaluation (i.e. we don't know how to evaluate what good philosophy looks like).

- In case (1), I think I'm probably just more optimistic than you about this exact issue, and I'd want to compare it to most other cases where AIs fall short of top-human level performance. For example, we likely would not employ AIs as mathematicians if people thought that AIs weren't actually good at math. This just seems obvious to me.

- Case (2) seems more plausible to me, but I'm not sure why you'd find this problem particularly pressing compared to other problems of evaluation, e.g. generating economic policies that look good to us but are actually bad.

- More generally, the problem of creating AIs that produce good philosophy, rather than philosophy that merely looks good, seems like a special case of the general "human simulator" argument, where RLHF is incentivized to find AIs that fool us by producing outputs that look good to us, but are actually bad. To me it just seems much more productive to focus on the general problem of how to do accurate reinforcement learning (i.e. RL that rewards honest, corrigible, and competent behavior), and I'm not sure why you'd want to focus much on the narrow problem of philosophical reasoning as a special case here. Perhaps you can clarify your focus here?

- What specific problems do you expect will arise if we fail to solve philosophical competence "in time"?

- Are you imagining, for example, that at some point humanity will direct our AIs to "solve ethics" and then implement whatever solution the AIs come up with? (Personally I currently don't expect anything like this to happen in our future, at least in a broad sense.)

↑ comment by Wei Dai (Wei_Dai) · 2024-01-17T23:55:31.908Z · LW(p) · GW(p)

> What specific problems do you expect will arise if we fail to solve philosophical competence “in time”?

- The super-alignment effort will fail.

- Technological progress will continue to advance faster than philosophical progress, making it hard or impossible for humans to have the wisdom to handle new technologies correctly. I see AI development itself as an instance of this, for example the e/acc crowd trying to advance AI without regard to safety because they think it will automatically align with their values (something about "free energy"). What if, e.g., value lock-in becomes possible in the future and many decide to lock in their current values (based on their religions and/or ideologies) to signal their faith/loyalty?

- AIs will be optimized for persuasion and humans won't know how to defend against bad but persuasive philosophical arguments aimed to manipulate them.

> but I’m not sure why you’d find this problem particularly pressing compared to other problems of evaluation, e.g. generating economic policies that look good to us but are actually bad

Bad economic policies can probably be recovered from and are therefore not (high) x-risks.

My answers to many of your other questions are "I'm pretty uncertain, and that uncertainty leaves a lot of room for risk." See also Some Thoughts on Metaphilosophy if you haven't already read that, as it may help you better understand my perspective. And, it's also possible that in the alternate sane universe, a lot of philosophy professors have worked with AI researchers on the questions you raised here, and adequately resolved the uncertainties in the direction of "no risk", and AI development has continued based on that understanding, but I'm not seeing that happening here either.

Let me know if you want me to go into more detail on any of the questions.

comment by Dagon · 2024-01-15T17:02:27.866Z · LW(p) · GW(p)

Hands are notoriously hard for human artists, which may be the cause of the lack of training data. HOWEVER, hands have the huge advantage that the median human can evaluate a hand image for believability very cheaply, and there will be broad agreement on the binary classification "OK" or "bad".

I don't know of any such mechanism for "doing philosophy".

Also, there do exist lots of examples of both real and well-drawn hands, which COULD be filtered into training data if it becomes worth the cost. There are no clear examples of "good philosophy" that could be curated into a training set.

Replies from: Wei_Dai

↑ comment by Wei Dai (Wei_Dai) · 2024-01-19T22:28:06.485Z · LW(p) · GW(p)

I think I made these points in the OP? Not sure if you're just agreeing with me, or maybe I'm missing your point?

Replies from: Dagon

↑ comment by Dagon · 2024-01-20T18:42:00.221Z · LW(p) · GW(p)

I think I meant the OP didn't make very clear the distinction between "no training data" meaning "data exists, and humans can tell good from bad, but it's hard to find/organize" vs "we don't have a clear definition of good, and it's hard to tell good from bad, so we honestly don't think the data exists to find".

Replies from: Wei_Dai

↑ comment by Wei Dai (Wei_Dai) · 2024-01-21T01:14:51.120Z · LW(p) · GW(p)

Ah I see, thanks for the clarification. Personally I'm uncertain about this, and have some credence on each possibility, and may have written the OP to include both possibilities without explicitly distinguishing between them. See also #3 in this EAF comment and its followup for more of how I think about this.

comment by Kabir Kumar (kabir-kumar-1) · 2024-08-13T21:13:48.675Z · LW(p) · GW(p)

> In a parallel universe with a saner civilization, there must be tons of philosophy professors working with tons of AI researchers to try to improve AI's philosophical reasoning.

Sanskrit scholars worked for generations to make Sanskrit better for philosophy

Replies from: adele-lopez-1, kabir-kumar-1

↑ comment by Adele Lopez (adele-lopez-1) · 2024-08-13T23:38:33.115Z · LW(p) · GW(p)

> Sanskrit scholars worked for generations to make Sanskrit better for philosophy

That sounds interesting, do you know a good place to get an overview of what the changes were and how they approached it?

↑ comment by Kabir Kumar (kabir-kumar-1) · 2024-08-13T21:15:47.987Z · LW(p) · GW(p)

imo, our philosophical progress has been stagnated by media (in the classic sense of the word) - recording ideas makes it harder to forget them. akin to training at very low dropout

comment by Wei Dai (Wei_Dai) · 2025-04-12T21:30:00.993Z · LW(p) · GW(p)

Since I wrote this post, AI generation of hands has gotten a lot better, but the top multimodal models still can't count fingers from an existing image. Gemini 2.5 Pro, Grok 3, and Claude 3.7 Sonnet all say this picture (which actually contains 8 fingers in total) contains 10 fingers, while ChatGPT 4o says it contains 12 fingers!

comment by Roman Leventov · 2024-01-16T02:18:07.778Z · LW(p) · GW(p)

Apart from the view of philosophy as "cohesive stories that bind together and infuse meaning into scientific models", which I discussed with you earlier and which you were not very satisfied with, another interpretation of philosophy (natural phil, phil of science, phil of mathematics, and metaphil, at least) is "apex generalisation/abstraction". Think Bengio's "AI scientist", but the generative model (GM) should be even deeper: first sample a plausible "philosophy of science" given all the observations about the world up to the moment; then sample a plausible scientific theory given the philosophy and all observations up to the moment at a specific level of coarse-graining/scientific abstraction (quantum, chemical, bio, physio, psycho, socio, etc.); then sample a mechanistic model that describes the situation/system of interest at hand (e.g., the morphology of a particular organism, given the laws of biology, or the morphology of a particular society), given the observations of the system up to the moment; and then finally sample plausible variable values that describe the particular situation at a particular point in time given all the above.
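The nested sampling order described above resembles ancestral sampling in a hierarchical generative model. A toy sketch of that structure follows; the level names come from the paragraph, but every candidate string, the `STAGES` table, and the uniform `rng.choice` (standing in for a real posterior over each level) are assumptions invented for illustration.

```python
import random
from typing import Callable, Dict, List, Tuple

Context = Dict[str, str]
# A stage is (level name, function returning candidates given everything above it).
Stage = Tuple[str, Callable[[Context], List[str]]]


def ancestral_sample(observations: str, stages: List[Stage],
                     rng: random.Random) -> Context:
    """Sample each level in order, conditioning on all previously sampled levels."""
    context: Context = {"observations": observations}
    for name, candidates_given in stages:
        options = candidates_given(context)
        # Uniform choice is a placeholder for sampling from a learned posterior.
        context[name] = rng.choice(options)
    return context


# Hypothetical toy levels, from most abstract to most concrete.
STAGES: List[Stage] = [
    ("philosophy_of_science",
     lambda ctx: ["bayesian", "falsificationist"]),
    ("scientific_theory",
     lambda ctx: {
         "bayesian": ["probabilistic population genetics"],
         "falsificationist": ["classical evolutionary theory"],
     }[ctx["philosophy_of_science"]]),
    ("mechanistic_model",
     lambda ctx: [f"organism model under {ctx['scientific_theory']}"]),
    ("variable_values",
     lambda ctx: [f"state A of {ctx['mechanistic_model']}",
                  f"state B of {ctx['mechanistic_model']}"]),
]

sample = ancestral_sample("all observations so far", STAGES, random.Random(0))
for level, value in sample.items():
    print(f"{level}: {value}")
```

Each lower level only ever sees what was sampled above it, so an implausible choice at the "philosophy of science" level propagates into every theory, model, and variable assignment beneath it.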

If this interpretation is correct, then doing philosophy well and not deluding ourselves is far off. And there is a huge risk in thinking we can do it well before we actually can.

comment by Vladimir_Nesov · 2024-01-15T13:22:09.944Z · LW(p) · GW(p)

Philosophy and to some extent even decision theory are more like aspects of value content. AGIs and ASIs have the capability to explore them, if only they had the motive. Not taking away this option and not disempowering its influence doesn't seem very value-laden, so it's not pivotal to explore it in advance, even though it would help. Avoiding disempowerment is sufficient to eventually get around to industrial production of high quality philosophy. This is similar to how the first generations of powerful AIs shouldn't pursue CEV, and more to the point don't need to pursue CEV.

comment by RogerDearnaley (roger-d-1) · 2024-01-15T22:31:36.232Z · LW(p) · GW(p)

> In a parallel universe with a saner civilization, there must be tons of philosophy professors working with tons of AI researchers to try to improve AI's philosophical reasoning.

Quite a lot of the questions that have been considered in philosophy are related to how minds work, with most of the evidence available until now being introspection. Since we are now building minds, and starting to do mechanistic interpretability on them, I am hoping that we'll get to the point where AI researchers can help improve the philosophical reasoning of philosophy professors, on subjects relating to how minds work.

Replies from: Wei_Dai

↑ comment by Wei Dai (Wei_Dai) · 2024-01-19T22:31:01.224Z · LW(p) · GW(p)

Yeah, it seems like a potential avenue of philosophical progress. Do you have ideas for what specific questions could be answered this way and what specific methods might be used?