Clickbait Soapboxing

post by DaystarEld · 2024-03-13T14:09:29.890Z · 15 comments

This is a link post for https://daystareld.com/clickbait-soapboxing/



Someone on Twitter said:

> I am guilty of deliberately stating things in a bold & provocative form on here in order to stimulate discussion. Leaving hedges & caveats for the comments section. On net, I think this is better than alternatives, but I’m open to being convinced otherwise.

And I finally felt the urge to write up some thoughts I’ve had for the past year or so about what I’ll call “clickbait soapboxing.” A disclaimer: I feel like I could write a whole book on this sort of thing, and I will inevitably have more complex thoughts about the things I say here that come off as simple.

Also, I’m not super confident I am right to feel so strongly about how bad it seems, and also also, I personally like many people (like the above poster) who regularly do this.

But I don’t feel at all confident that people doing it are tracking all the effects it has, and they certainly don’t seem to acknowledge them. So it seems like it may be useful to say explicitly.

First off, some of these are clearly a “me” thing. For example, I have trouble trusting people to be as capable of “actual” vulnerability or sincerity when they don’t put effort into representing their thoughts accurately. It feels, at best, like a shield against criticism: “I was wrong on purpose!”

But I know others struggle with inhibition/social anxiety: “I’d rather speak boldly, knowing I’m wrong in some way, than not speak at all!” Which, yeah, makes sense! But are you planning to ever address the root cause? Is it healing, or cope/crutch? (Not judging, I really don’t know!)

In any case, there are still externalities. Illusion of transparency is real! Typical mind fallacy is real!

Should you care? *shrug* What makes us care about anything we say in the first place? Just don’t motte-and-bailey between “communicating for self-expression” or “processing out loud” and “sharing ideas and learning” or “talking about True Things.”

As for me (and maybe others out there like me), the effects include things like thinking:

“Did this person actually change their mind? Do they actually believe the more nuanced thing? Or are they just backpedaling due to getting stronger pushback than expected?”

As well as:

“Are they actually interested in learning and sharing interesting ideas? Or are they optimizing for being interesting and getting followers?”

And:

“If they misinform someone, would they care? Would they do it on purpose, if it got them likes and subscribes?”

I don’t make judgements like these lightly. These are just thoughts that I have about people, possibilities that seem ever so slightly more likely, the more I see them engage in sloppy or misleading communication practices.

Val writes well about a sense of “stillness” that is important to being able to think and see and feel clearly. I think the default for news media, social media, and various egregores in general is to hijack our attention and thought patterns and channel them into well-worn grooves.

And I have a hard time feeling trust that people who (absent forewarning/consent) try to trigger people in any way in order to have a “better” conversation… are actually prioritizing having a better conversation? It seems like the same generators are at work as when an organization or ideology does it.

And all this is, in my view, very clearly eroding the epistemic commons.

Humans are social monkeys. Loud emotive takes drown out nuanced thoughtful ones. People update off massively shared and highly upvoted headlines. Far fewer read the nuanced comments.

And very few, vanishingly few, seem to reliably be able to give themselves space to feel when they’re thinking, or give themselves trust to think when they’re feeling. I certainly don’t always react gracefully to being triggered.

So why shrink that space? Why erode that trust? Are you driven more by worry you won’t be able to speak, or fear you won’t feel heard? And then, fear you won’t feel heard, or anxiety your views won’t be validated?

I dislike psychoanalysis, and I definitely don’t assert these things as sure bets of why people do what they do. But it’s what bubbles up in my thoughts, and it’s what inhibits trust in my heart.

And all this also serves as a bit of an explanation to those who’ve asked me why I don’t use Twitter much. By design, it feels antagonistic to giving people space to think and feel: to writers unless they pay money, and to readers unless they fight an endless war of attrition against things trying to eat their attention and turn them into balls of rage and fear.

I’ve no reason to make such a system work, and I’m uninterested in making it work “for me.” In my heart, that feels like surrender to the same generators that are destroying public discourse and leading otherwise thoughtful and caring people to be a bit less so, for the sake of an audience.

15 comments


comment by Seth Herd · 2024-03-14T06:11:01.302Z

I think you're preaching to the choir. I think the majority opinion among LW users is that it's a sin against rationality to overstate one's case or one's beliefs, and that "generating discussion" is not a sufficient reason to do so.

It works to generate more discussion, but it really doesn't seem to generate good discussion. I think it creates animosity through arguments, and that creates polarization. Which is a major mind-killer.

↑ comment by Viliam · 2024-03-14T15:59:51.526Z

Yeah, I think it is okay to simplify things when someone puts an explicit disclaimer like "this is a simplification" or "this is not literally true, but it is an attempt to point in a certain direction".

But without such a disclaimer, I will assume "once clickbait, always clickbait", especially when the priors on people being stupid on the internet are so high.

↑ comment by Kabir Kumar (kabir-kumar-1) · 2024-03-17T14:26:48.344Z

Yet, the top posts on LessWrong are pretty much always clickbaited, just in the LessWrong lingo.

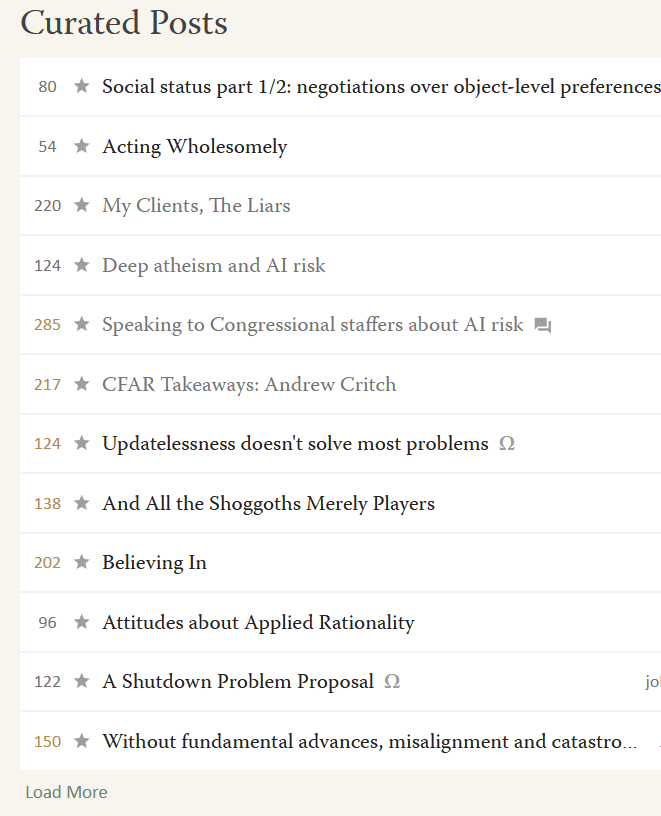

The Curated Posts seem to be some of the worst cases of this:

I find that LessWrong folk in general are really, really, susceptible to deception and manipulation when it's done in their language.

↑ comment by habryka (habryka4) · 2024-03-17T20:50:33.543Z

I... really don't see any clickbait here. If anything these titles feel bland to me (and indeed I think LW users could do much better at making titles that are more exciting, or more clearly highlight a good value proposition for the reader, though karma makes up for a lot).

Like, for god's sake, the top title here is "Social status part 1/2: negotiations over object-level preferences". I feel like that title is at the very bottom of potential clickbaitiness, given the subject matter.

↑ comment by ryan_greenblatt · 2024-03-17T17:39:34.094Z

Which of these titles are clickbait?

I disagree with the thesis of some, but none seem like clickbait titles to me.

↑ comment by Kabir Kumar (kabir-kumar-1) · 2024-04-04T10:59:30.873Z

My Clients, The Liars

And All The Shoggoths Merely Players

Acting Wholesomely

These are the most obvious examples. By 'clickbait', here I mean a title that's more for drawing in readers than for accurately communicating what the post is about. That doesn't mean it can't be accurate too (after all, MrBeast rarely lies in his video titles), but it means that instead of choosing the most accurate title, they chose the most eye-catching, baiting title out of the pool of accurate or semi-accurate titles.

↑ comment by MondSemmel · 2024-04-04T13:51:47.943Z

I don't really agree with this definition of clickbait. A title that merely accurately communicates what the post is about is usually a boring one, and thus communicates that the post is boring and not worth reading. Also see my comment here. Excerpt:

> Similarly, a bunch of things have to line up for an article to go viral: someone has to click on your content (A), then like it (B), and then finally follow a call to action like sharing it or donating (C). From this perspective, it's important to put a significant fraction of one's efforts on quality (B) into efforts on presentation / clickability (A).
>
> (Side note: If this sounds like advocacy for clickbait, I think it isn't. The de facto problem with a clickbaity title like "9 Easy Tips to Win At Life" is not the title per se, but that the corresponding content never delivers.)

↑ comment by Kabir Kumar (kabir-kumar-1) · 2024-04-06T21:09:44.335Z

Ok, so are these not clickbait then?

"Stop This Train, Win a Lamborghini"

"$1 vs $250,000,000 Private Island!"

"$1 vs $100,000,000 House!"

"I Hunted 100 People!"

"Press This Button To Win $100,000!"

↑ comment by MondSemmel · 2024-04-06T22:17:12.705Z

No, those are clickbait. 4 is straightforwardly misleading in its use of the word "hunt". 2 and 3 grab attention via big dollar numbers without explaining any context. And 1 and 5 are clickbait, but wouldn't be if an arbitrary viewer could actually do the things described in the titles at any time, rather than these videos being about some competition that's already happened.

Whereas a title saying "Click on this blog post to win $1000" wouldn't be clickbait if anyone could click on the blog post and immediately receive $1000. It would become clickbait if it was e.g. a limited-time offer and expired, but would not be clickbait if the title was changed at that point.

↑ comment by Jiro · 2024-03-15T05:53:35.519Z

> I think the majority opinion among LW users is that it’s a sin against rationality to overstate ones’ case or ones beliefs, and that “generating discussion” is not a sufficient reason to do so.

I've seen it claimed otherwise in the wild.

↑ comment by DaystarEld · 2024-03-14T09:45:11.781Z

>I think you're preaching to the choir.

Definitely, but if anyone's going to disagree in a way that might change my mind or add points I haven't thought of, I figured it would be people here.

comment by Shankar Sivarajan (shankar-sivarajan) · 2024-03-15T14:46:06.740Z

There is a difference between conveying facts/beliefs with high fidelity, and saying things that are technically correct. The latter, when deliberately misleading, is lying; the former, even when imprecise/"wrong", is honesty.

Depending on the format, the nuance can be implicit, such as when talking to intelligent and reasonable men, or provided via footnotes, hyperlinks, etc. in a place where anyone reading the brief unnuanced "bold & provocative" statement can easily find it.

On Twitter, since I can only find the top-level post with none of the replies and comments, this person (whom you haven't anonymized) would in fact be engaging in lying, and since he appears to care, ought to stop.

comment by TeaTieAndHat (Augustin Portier) · 2024-03-14T12:20:03.646Z

I agree, as most people here probably do. But it always seems weird to me to see that sort of thing framed as "X is disinforming people by optimizing for clicks", or more generally, "X is doing a bad thing" (which you kinda did, though not too much). Some people, disproportionately the ones who think deeply enough to notice that sort of thing, are quite aware that this is what they’re doing. But most are just not thinking enough about it? Thinking hard about what one does is pretty uncommon, after all. But then, the point I just made is obvious: it’s exactly because we can be irrational without being at all malicious that LW exists. Still, I prefer to file it in my brain as "this person is betraying the rules!" only when the person really should know better (or is actually acting maliciously).