Comments

This is true, and it strongly influences the ways Americans think about how to provide public goods to the rest of the world. But note that they're thinking about how to provide public goods *to the rest of the world*[1]. "America First" is controversial in American intellectual circles, whereas in my (limited) conversations in China people are usually confused about what other sort of policy you would have.

- ^

Disclosure: I'm American, I came of age in this era

(Counterpoint: for big groups like bureaucracies, intra-country variances can average out. I do think we can predict that a group of 100 random Americans writing an AI constitution would place more value on political self-determination and less on political unity than a similar group of Chinese.)

There's more variance within countries than between countries. Where did the disruptive upstart that cares about Free Software[1] come from? China. Is that because China's more libertarian than the US? No, it's because there's a wide variance in both the US and China and by chance the most software-libertarian company was Chinese. Don't treat countries like point estimates.

- ^

Free as in freedom, not as in beer

Of course, I agree: it fits the pattern so well that it doesn't look like a joke. It looks like a very compelling true anecdote. And if someone repeats this "very compelling true anecdote" (edit: and other people recognize that, no, it's actually a meme), they'll make AI alignment worriers look like fools who believe Onion headlines.

This is a joke, not something that happened, right? Could you wrap this in quote marks, add a footnote, or otherwise indicate that this is riffing on a meme and not a real anecdote from someone in the industry? I read a similar comment on LessWrong a few months ago and it was only luck that kept me from repeating it as truth to people on the fence about whether to take AI risks seriously.

LessWrong is uncensored in China.

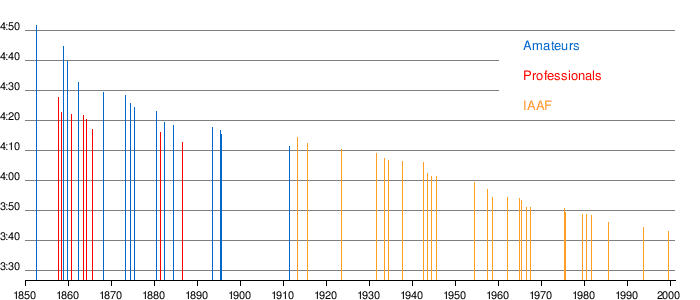

I know it's not your main point, but for the actual 4-minute-mile I'm on the side of the null hypothesis. In a steady progression, once any one arbitrary threshold is crossed (4:10 minutes, 4:00 minutes, 3:50 minutes), many others are soon to follow.

Trolling a bit, perhaps we could talk about a "4-Minute-Mile Gell-Mann Effect". Events that to outsiders look like discontinuous revolutions look to insiders like minor ticks with surprising publicity.

A standard trick is to add noise to the signal to (stochastically) let parts get over the hump.

BTW, feed this post into ChatGPT and it will tell you the answer

Somewhat mean caveat emptor for other readers: I just spent an hour trying to understand this post, and wish that I hadn't. It's still possible I'm missing the thing, but inside view is I've found the thing and the thing just isn't that interesting.[1]

- ^

Feeding a program its Gödel numbering isn't relevant (and doesn't work?!), and the puzzle is over perhaps missing out on an unfathomably small amount of money[2].

- ^

By "unfathomably small" I mean ≈ dollars. And, sure, there could be a deep puzzle there, but I feel that when a puzzle has its accidental complexity removed you usually can produce a more compelling use case.

Could you spell this out? I don't see how AI has much to do with trade. Is the idea that AI development is bounded on the cost of GPUs, and this will raise the cost of outside-China GPUs compared to inside-China GPUs? Or is it that there will be less VC money e.g. because interest rates go up to combat inflation?

Yes, it means figure out how the notation works.

That's a good Coasian point. Talking out of my butt, but I think the airlines don't carry the risk. The sales channels (airlines, Expedia, etc.) take commissions for distributing an insurance product designed by another company (Travel Insured International, Seven Corners), which handles product design and compliance. The actual claims are handled by another company, and the insurance capital comes from yet another company (AIG, Berkshire Hathaway).

LLMs tell me the distributors get 30–50% commission, which tells you that it's not a very good product for consumers.

But fear of death does seem like a kind of value systematization

I don't think it's system 1 doing the systematization. Evolution beat fear of death into us in lots of independent forms (fear of heights, snakes, thirst, suffocation, etc.), but for the same underlying reason. Fear of death is not just an abstraction humans invented or acquired in childhood; it is a "natural idea" pointed at by our brain's innate circuitry from many directions. Utilitarianism doesn't come with that scaffolding. We don't learn to systematize Euclidean and Minkowskian spaces the same way either.

Quick takes are presented inline, posts are not. Perhaps posts could be presented as title + <80 (140?) character summary.

You may live in a place where arguments about the color of the sky are really arguments about tax policy. I don't think I live there? I'm reading your article saying "If Blue-Sky-ism is to stand a chance against the gravitational pull of Green-Sky-ism, it must offer more than talk of a redistributionist tax system" and thinking "...what on earth...?". This might be perceptive cultural insight about somewhere, but I do not understand the context. [This is my guess as to why you are being voted down]

You might be[1] overestimating the popularity of "they are playing god" in the same way you might overestimate the popularity of woke messaging. Loud moralizers aren't normal people either. Messages that appeal to them won't have the support you'd expect given their volume.

Compare, "It's going to take your job, personally". Could happen, maybe soon, for technophile programmers! Don't count them out yet.

- ^

Not rhetorical -- I really don't know

Eliezer Yudkowsky wrote a story, Kindness to Kin, about aliens who love(?) their family members in proportion to Hamilton's "I'd lay down my life for two brothers or eight cousins" rule. It gives an idea of how alien that is.
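Hamilton's rule says to help a relative when rB > C. Here r is relatedness, B is the benefit to the relative, and C is the cost to you. In those terms, the quip is a break-even calculation. Full siblings have relatedness r = 1/2 and first cousins have r = 1/8:

$$
2 \times \tfrac{1}{2} \;=\; 8 \times \tfrac{1}{8} \;=\; 1
$$

So two brothers or eight cousins carry, on average, as many copies of your genes as you do.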

Then again, Proto-Indo-European had detailed family words that correspond rather well to confidence of genetic kinship, so maybe it's a cultural thing.

Sure, I think that's a fair objection! Maybe for a business it's worth paying the marginal security cost of giving 20 new people admin accounts, but for the federal government that security cost is too high. Is that what people are objecting to? I'm reading comments like this:

Yeah, that's beyond unusual. It's not even slightly normal. And it is in fact very coup-like behavior if you look at coups in other countries.

And, I just don't think that's the case. I think this is pretty-darn-usual and very normal in the management consulting / private equity world.

I don't think foreign coups are a very good model for this? Coups don't tend to start by bringing in data scientists.

What I'm finding weird is...this was the action people thought worrying enough to make it to the LessWrong discussion. Cutting red tape to unblock data scientists in cost-cutting shakeups -- that sometimes works well! Assembling lists of all CIA officers and sending them emails, or trying to own the Gaza Strip, or <take your pick>. I'm in far mode on these and have less direct experience, but they seem much more worrying. Why did this make the threshold?

Huh, I came at this with the background of doing data analysis in large organizations and had a very different take.

You're a data scientist. You want to analyze what this huge organization (US government) is spending its money on in concrete terms. That information is spread across 400 mutually incompatible ancient payment systems. I'm not sure if you've viscerally felt the frustration of being blocked, spending all your time trying to get permission to read from 5 incompatible systems, let alone 400. But it would take months or years.

Fortunately, your boss is exceptionally good at Getting Things Done. You tell him that there's one system (BFS) that has all the data you need in one place. But BFS is protected by an army of bureaucrats, most of whom are named Florence, who are Very Particular, are Very Good at their job, Will Not let this system go down, Will Not let you potentially expose personally identifiable information by violating Section 3 subparagraph 2 of code 5, Will Not let you sweet talk her into bypassing the safety systems she has spent the past 30 years setting up to protect oh-just-$6.13 trillion from fraud, embezzlement, and abuse, and if you somehow manage to get around these barriers she will Stop You.

Your boss Gets Things Done and threatens Florence's boss Mervin that if he does not give you absolutely all the permissions you ask for, Mervin will become the particular object of attention of two people named Elon Musk and Donald Trump.

You get absolutely all the permissions you want and go on with your day.

Ah, to have a boss like that!

EDIT TL;DR: I think this looks weirder in Far mode? In Near mode (near to data science, not near government), giving outside consultant data scientists admin permissions for important databases does not seem weird or nefarious. It's the sort of thing that happens when the data scientist's boss is intimidatingly high in an organization, like the President/CEO hiring a management consultant.

Checking my understanding: for the case of training a neural network, would S be the parameters of the model (along with perhaps buffers/state like moment estimates in Adam)? And would the evolution of the state space be local in S space? In other words, for neural network training, would S be a good choice for H?

In a recurrent neural network doing in-context learning, would S be something like the residual stream at a particular token?

I'll conjecture the following in VERY SPECULATIVE, inflammatory, riff-on-vibes statements:

- Gradient descent solves problems in the complexity class P[1]. It is P-complete.

- Learning theory (and complexity theory) have for decades been pushing two analogous bad narratives about the weakness of gradient descent (and P).

- These narratives dominate because it is easy to prove impossibility results like "Problem X can't be solved by gradient descent" (or "Problem Y is NP-Hard"). It's academically fecund -- it's a subject aspiring academics can write a lot of papers about. Results about what gradient descent (and polynomial time) can't do make up a fair portion of the academic canon.

- In practice, these impossibility results are corner cases that don't actually come up. The "vibes" of these impossibility results run counter to the "vibes" of reality.

- For example, gradient descent solves most problems, even though in theory it can get trapped in local minima. (SAT is fast to solve in practice, even though in theory it's theoretical computer science's canonical Hard-Problem-You-Say-Is-Impossible-To-Solve-Quickly.)

- The vibe of reality is "local (greedy) algorithms usually work"

- ^

Stoner-vibes based reason: I'm guessing you can reduce a problem like Horn Satisfiability[2] to gradient descent. Horn Satisfiability is a P-complete problem -- you can transform any polynomial-time decision problem into a Horn Satisfiability problem using a log-space transformation. Therefore, gradient descent is "at least as big as P" (P-hard). And I'm guessing you can put your formalization of gradient descent in P as well (hence "P-complete"). That would mean gradient descent could not solve harder problems, e.g. in NP, unless P=NP.

- ^

Horn Satisfiability is about finding true/false values that satisfy a bunch of logic clauses of the form x ∧ y ⇒ z, or ¬x ∨ ¬y (that second clause means "don't set both x and y to true -- at least one of them has to be false"). In the algorithm for solving it, you figure out a variable that must be set to true or false, then propagate that information forward to other clauses. I bet you can do this with a loss function turning into a greedy search on a hypercube.
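The propagation procedure for Horn Satisfiability is the classic forward-chaining ("marking") algorithm. Below is a minimal Python sketch of it. The `(body, head)` clause encoding and the name `horn_sat` are hypothetical choices for illustration. The loop is deliberately naive and quadratic (linear-time versions exist), and it says nothing about the gradient-descent conjecture itself.

```python
from typing import FrozenSet, Hashable, List, Optional, Set, Tuple

# Hypothetical encoding: (body, head) means "if every variable in body is true, head is true".
# head=None encodes an all-negative clause such as ¬x ∨ ¬y, i.e. "not all of body".
Clause = Tuple[FrozenSet[Hashable], Optional[Hashable]]


def horn_sat(clauses: List[Clause]) -> Optional[Set[Hashable]]:
    """Return the minimal set of variables forced true, or None if unsatisfiable.

    Every variable not in the returned set can safely be set to false.
    """
    true_vars: Set[Hashable] = set()
    changed = True
    while changed:  # repeat until no clause forces anything new (a fixpoint)
        changed = False
        for body, head in clauses:
            if body <= true_vars:  # every premise is already forced true
                if head is None:
                    return None  # an all-negative clause is violated: UNSAT
                if head not in true_vars:
                    true_vars.add(head)  # propagate the forced assignment
                    changed = True
    return true_vars


clauses = [
    (frozenset(), "a"),             # fact: a
    (frozenset({"a"}), "b"),        # a ⇒ b
    (frozenset({"a", "b"}), "c"),   # a ∧ b ⇒ c
    (frozenset({"c", "d"}), None),  # ¬c ∨ ¬d
]
print(horn_sat(clauses))                         # a, b, c forced true (set order varies); d stays false
print(horn_sat(clauses + [(frozenset(), "d")]))  # None: d is forced, violating ¬c ∨ ¬d
```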

Thanks! I'm not a GPU expert either. The reason I want to spread the toll units inside the GPU itself isn't to turn the GPU off -- it's to stop replay attacks. If the toll thing is in a separate chip, then the toll unit must have some way to tell the GPU "GPU, you are cleared to run". To hack the GPU, you just copy that "cleared to run" signal and send it to the GPU. The same "cleared to run" signal must always make the GPU work, unless there is something inside the GPU to make sure it won't accept the same "cleared to run" signal twice. That's the point of the mechanism I outline -- a way to make it so the same "cleared to run" signal for the GPU won't work twice.

Bonus: Instead of writing the entire logic (challenge response and so on) in advance, I think it would be better to run actual code, but only if it's signed (for example, by Nvidia), in which case they can send software updates with new creative limitations, and we don't need to consider all our ideas (limit bandwidth? limit gps location?) in advance.

Hmm okay, but why do I let Nvidia send me new restrictive software updates? Why don't I run my GPUs in an underground bunker, using the oldest, most broken firmware?

I used to assume disabling a GPU in my physical possession would be impossible, but now I'm not so sure. There might be ways to make bypassing GPU lockouts on the order of difficulty of manufacturing the GPU (requiring nanoscale silicon surgery). Here's an example scheme:

Nvidia changes their business model from selling GPUs to renting them. The GPU is free, but to use your GPU you must buy Nvidia Dollars from Nvidia. Your GPU will periodically call Nvidia headquarters and get an authorization code to do 10^15 more floating point operations. This rental model is actually kinda nice for the AI companies, who are much more capital constrained than Nvidia. (Lots of industries have moved from buying to renting, e.g. airplane engines.)

Question: "But I'm an engineer. How (the hell) could Nvidia keep me from hacking a GPU in my physical possession to bypass that Nvidia dollar rental bullshit?"

Answer: through public key cryptography and the fact that semiconductor parts are very small and modifying them is hard.

In dozens to hundreds or thousands of places on the GPU, Nvidia places toll units that block signal lines (like ones that pipe floating point numbers around) unless the toll units believe they have been paid with enough Nvidia dollars.

The toll units have within them a random number generator, a public key ROM unique to that toll unit, a 128 bit register for a secret challenge word, elliptic curve cryptography circuitry, and a $$$ counter which decrements every time the clock or signal line changes.

If the $$$ counter is positive, the toll unit is happy and will let signals through unabated. But if the $$$ counter reaches zero,[1] the toll unit is unhappy and will block those signals.

To add to the $$$ counter, the toll unit (1) generates a random secret <challenge word>, (2) encrypts the secret using that toll unit's public key, (3) sends <encrypted secret challenge word> to a non-secure part of the GPU,[2] which (4) through driver software and the internet, phones Nvidia saying "toll unit <id> challenges you with <encrypted secret challenge word>", (5) Nvidia looks up the private key for toll unit <id> and replies to the GPU "toll unit <id>, as proof that I, Nvidia, know your private key, I decrypted your challenge word: <challenge word>", (6) after getting this challenge word back, the toll unit adds 10^15 or whatever to the $$$ counter.
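The toll-unit protocol can be simulated in a few lines of Python to show why a recorded reply cannot be replayed. This is a minimal sketch with loudly stated assumptions:

- Textbook RSA with publicly known Mersenne primes stands in for the elliptic-curve circuitry, because Python's standard library has neither. This version is completely insecure.
- `TollUnit`, `Vendor`, and the unit id are hypothetical names.

It models only the message flow, not hardware.

```python
import secrets

# ASSUMPTION: textbook RSA replaces the elliptic-curve circuitry described above.
# M127 and M89 are publicly known Mersenne primes, so this key is trivially breakable;
# it only illustrates the challenge-response flow.
P, Q = 2**127 - 1, 2**89 - 1
N, E = P * Q, 65537                # public key, burned into the toll unit's ROM
D = pow(E, -1, (P - 1) * (Q - 1))  # private key, known only to the vendor (Python 3.8+)

FLOPS_PER_PAYMENT = 10**15         # "adds 10^15 or whatever"


class TollUnit:
    """On-chip side: knows only its own public key."""

    def __init__(self, unit_id: str, n: int, e: int):
        self.unit_id, self.n, self.e = unit_id, n, e
        self.counter = 0        # the $$$ counter
        self._challenge = None  # the secret 128-bit challenge register

    def lets_signals_through(self) -> bool:
        return self.counter > 0

    def tick(self, transitions: int = 1) -> None:
        # Decrement on clock/signal-line changes, never below zero.
        self.counter = max(0, self.counter - transitions)

    def issue_challenge(self) -> int:
        # Steps (1)-(2): fresh random secret, encrypted to this unit's public key.
        self._challenge = secrets.randbelow(2**128)
        return pow(self._challenge, self.e, self.n)

    def redeem(self, answer: int) -> bool:
        # Step (6): only the decryption of the *outstanding* challenge is accepted,
        # and only once, so a recorded "cleared to run" reply cannot be replayed.
        if self._challenge is not None and answer == self._challenge:
            self._challenge = None
            self.counter += FLOPS_PER_PAYMENT
            return True
        return False


class Vendor:
    """Off-chip side, steps (4)-(5): maps toll unit ids to private keys."""

    def __init__(self):
        self.private_keys = {}

    def answer(self, unit_id: str, encrypted_challenge: int) -> int:
        n, d = self.private_keys[unit_id]
        return pow(encrypted_challenge, d, n)


unit = TollUnit("toll-017", N, E)  # hypothetical unit id
vendor = Vendor()
vendor.private_keys[unit.unit_id] = (N, D)

reply = vendor.answer(unit.unit_id, unit.issue_challenge())
print(unit.redeem(reply), unit.lets_signals_through())  # True True
print(unit.redeem(reply))                               # False: replayed reply is rejected
```

Each payment requires a fresh random challenge that only the holder of the private key can decrypt. Copying an old reply therefore buys nothing, which is the replay protection the comment is after.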

There are a lot of ways to bypass this kind of toll unit (fix the random number generator or $$$ counter to a constant, just connect wires to route around it). But the point is to make it so you can't break a toll unit without doing surgery to delicate silicon parts which are distributed in dozens to hundreds of places around the GPU chip.

- ^

Implementation note: it's best if disabling the toll unit takes nanoscale precision, rather than micrometer scale precision. The way I've written things here, you might be able to smudge a bit of solder over the whole $$$ counter and permanently tie the whole thing to high voltage, so the counter never goes down. I think you can get around these issues (make it so any "blob" of high or low voltage spanning multiple parts of the toll circuit will block the GPU) but it takes care.

- ^

This can be done slowly, serially with a single line

Not a billion billion times. You need ≈2^100 presses to get any signal, and on the order of 2^200 presses to figure out which way the signal goes. 2^200 ≈ 10^60. The Planck time is about 5×10^-44 seconds. If you try to press the button more than about 10^43 times per second, the radiation the electrons in the button emit will be of such high frequency and small wavelength that it will collapse into a black hole.
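Here is the arithmetic carried one step further, as a quick Python check. It uses the CODATA Planck time of about 5.391×10^-44 s and assumes, as a best case, one press per Planck time.

```python
import math

PLANCK_TIME_S = 5.391e-44            # seconds (CODATA value ≈ 5.391247e-44 s)
SECONDS_PER_YEAR = 365.25 * 24 * 3600

presses = 2**200                     # presses needed to learn which way the signal goes
print(f"2^200 ≈ 10^{math.log10(presses):.1f}")   # 2^200 ≈ 10^60.2

# Best case: one press per Planck time, the fastest rate the argument allows.
seconds = presses * PLANCK_TIME_S
years = seconds / SECONDS_PER_YEAR
print(f"{seconds:.1e} s ≈ {years:.1e} years")   # 8.7e+16 s ≈ 2.7e+09 years
```

Even pressing as fast as physics allows, the presses alone take billions of years.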

Incentives for NIMBYism is an objection I've seldom seen stated. "Of course I don't want to up-zone my neighborhood to allow more productive buildings -- that would triple my taxes!".

You're being downvoted and nobody's telling you why :-(, so I thought I'd give some notes.

- You're not talking to the right audience. Few groups are more emotionally in favor of a glorious transhumanist future than people on LessWrong. These are not technophobes who are afraid of change. They're technophiles who have realized, in harsh contrast to the conclusion they emotionally want, that making a powerful AI would likely be bad for humanity.

- Yes, it's important not to overly anthropomorphize AIs, and you are doing that all over the place in your argument.

- These arguments have been rehashed a lot. It's fine to argue that the LessWrong consensus opinion is wrong, but you should indicate you're familiar with why the LessWrong consensus opinion is what it is.

(To think about why it might not settle on a cooperative post-enlightenment philosophy, read, I don't know, Sorting Pebbles Into Correct Heaps?)

Conjunction Fallacy. Adding detail makes ideas feel more realistic, and never makes them more likely to be true.
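The probability fact behind the conjunction fallacy is short. For any claim A and added detail B:

$$
P(A \wedge B) \;=\; P(A)\,P(B \mid A) \;\le\; P(A)
$$

Equality holds only when the detail is certain given the claim, i.e. P(B | A) = 1.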

Virtues for communication and thought can be diametrically opposed.

In a world where AI progress has wildly accelerated chip manufacture

This world?

What distinction are you making between "visualising" and "seeing"?

Good question! By "seeing" I meant having qualia, an apparent subjective experience. By "visualizing" I meant...something like using the geometric intuitions you get by looking at stuff, but perhaps in a philosophical zombie sort of way? You could use non-visual intuitions to count the vertices on a polyhedron, like algebraic intuitions or 3D tactile intuitions (and I bet blind mathematicians do). I'm not using those. I'm thinking about a wireframe image, drawn flat.

I'm visualizing a rhombicosidodecahedron right now. If I ask myself "The pentagon on the right and the one hiding from view on the left -- are they the same orientation?", I'll think "ahh, let's see... The pentagon on the right connects through the squares to those three pentagons there, which interlock with those 2/4 pentagons there, which connect through squares to the one on the left, which, no, that left one is upside-down compared to the one on the right -- the middle interlocking pentagons rotated the left assembly 36° compared to the right". Or if I ask "that square between the right pentagon and the pentagon at 10:20 above it <mental point>: does perspective mean the square's drawn as a diamond, a skewed rectangle, or some weird quadrilateral?", I think "Nah, not diamond shaped -- it's a pretty rectangular trapezoid. The base is maybe 1.8x the height? Though I'm not too good at guessing aspect ratios? Seems like if I rotate the trapezoid I can fit 2 into the base but go over by a bit?"

I'm putting into words a thought process which is very visual, BUT there is almost no inner cinema going along with those visualizations. At most ghostly, wispy images, if that. A bit like the fleeting oscillating visual feeling you get when your left and right eyes are shown different colors?

...I do not believe this test. I'd be very good at counting vertices on a polyhedron through visualization and very bad at experiencing the sensation of seeing it. I do "visualize" the polyhedra, but I don't "see" them. (Frankly I suspect people who say they experience "seeing" images are just fooling themselves based on e.g. asking them to visualize a bicycle and having them draw it)

Thanks for crossposting! I've highly appreciated your contributions and am glad I'll continue to be able to see them.

Quick summary of a reason why constituent parts of super-organisms, like the ants of ant colonies, the cells of multicellular organisms, and endosymbiotic organelles within cells[1], are evolutionarily incentivized to work together as a unit:

Question: why do ants seem to care more about the colony than themselves? Answer: reproduction in an ant colony is funneled through the queen. If the worker ant wants to reproduce its genes, it can't do that by being selfish. It has to help the queen reproduce. Genes in ant workers have nothing to gain by making their ant more selfish and have much to gain by making their worker protect the queen.

This is similar to why cells in your pancreas cooperate with cells in your ear. Reproduction of genes in the body is funneled through gametes. Somatic evolution does pressure the cells in your pancreas to reproduce selfishly at the expense of cells in your ear (this is pancreatic cancer). But that doesn't help the pancreas genes long term. The pancreas genes and the ear genes are forced to cooperate with each other because they can only reproduce when bound together in a gamete.

This sort of binding together of genes, which makes disparate things cooperate and act like a "super organism", is absent among members of a species. My genes do not reproduce in concert with your genes. If my genes figure out a way to reproduce at your expense, so much the better for them.

- ^

Like mitochondria and chloroplasts, which were separate organisms but evolved to work so closely with their hosts that they are now considered part of the same organism.

EDIT Completely rewritten to be hopefully less condescending.

There are lessons from group selection and the extended phenotype which vaguely reduce to "beware thinking about species as organisms". It is not clear from this essay whether you've encountered those ideas. It would be helpful for me reading this essay to know if you have.

Hijacking this thread, has anybody worked through Ape in the coat's anthropic posts and understood / gotten stuff out of them? It's something I might want to do sometime in my copious free time but haven't worked up to it yet.

Sorry, that was an off-the-cuff example I meant to help gesture towards the main idea. I didn't mean to imply it's a working instance (it's not). The idea I'm going for is:

- I'm expecting future AIs to be less single LLMs (like Llama) and more loops and search and scaffolding (like o1)

- Those AIs will be composed of individual pieces

- Maybe we can try making the AI pieces mutually dependent in such a way that it's a pain to get the AI working at peak performance unless you include the safety pieces

This might be a reason to try to design AIs to fail safe and break without controlling units. E.g. before fine-tuning language models to be useful, fine-tune them to not generate useful content without approval tokens generated by a supervisory model.

I suspect experiments with nearly genetically identical twins might advance our understanding of almost all genes except those on the sex chromosomes.

Sex chromosomes are independent coin flips with huge effect sizes. That's amazing! Nature provided us with experiments everywhere! Most alleles are confounded (e.g., correlated with socioeconomic status for no causal reason) and have very small effect sizes.

Example: Imagine an allele which is common in East Asians, uncommon in Europeans, and makes people 1.1 mm taller. Even though the allele causally makes people taller, the average height of the people with the allele (mostly Asian) would be less than the average height of the people without the allele (mostly European). The +1.1 mm causal height gain would be drowned out by the ≈-50 mm from Simpson's paradox. Your almost-twin experiment gives signal where observational regression gives error.

That's not needed for sex differences. Poor people tend to have poor children. Caucasian people tend to have Caucasian children. Male people do not tend to have male children. It's pretty easy to extract signal about sex differences.

(far from my area of expertise)

The player can force a strategy where they win 1/3 of the time (guess a door and never switch). The player never needs to accept worse.

The host can force a strategy where the player loses 2/3 of the time (never let the player switch). The host never needs to accept worse.

Therefore, the equilibrium has a 1/3 win for the player. The player can block this number from going lower and the host can block this number from going higher.
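The game's value can be checked by exact enumeration in Python. The sketch assumes the adversarial variant, where the host chooses whether to open a goat door and offer a switch. It restricts both sides to deterministic (pure) policies and uses exact fractions. The maximin and minimax coincide, so there is a saddle point in pure strategies and mixing doesn't change the value.

```python
from fractions import Fraction

# Host policies: given whether the player's first pick is the car, does the host
# open a goat door and offer a switch? (All four deterministic policies.)
HOSTS = {
    "never offer": lambda picked_car: False,
    "always offer": lambda picked_car: True,
    "offer only if first pick is the car": lambda picked_car: picked_car,
    "offer only if first pick is a goat": lambda picked_car: not picked_car,
}
# Player policies: switch whenever offered, or never switch.
PLAYERS = {"switch if offered": True, "never switch": False}


def win_probability(offers, switches: bool) -> Fraction:
    """Exact win probability when the car is behind a uniformly random door."""
    total = Fraction(0)
    for picked_car, prob in ((True, Fraction(1, 3)), (False, Fraction(2, 3))):
        if offers(picked_car) and switches:
            wins = not picked_car  # host revealed a goat, so switching flips the outcome
        else:
            wins = picked_car      # player keeps the first pick
        total += prob * wins
    return total


# Best win rate the player can guarantee, and best cap the host can enforce.
maximin = max(min(win_probability(h, s) for h in HOSTS.values()) for s in PLAYERS.values())
minimax = min(max(win_probability(h, s) for s in PLAYERS.values()) for h in HOSTS.values())
print(maximin, minimax)  # 1/3 1/3
```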

I want to love this metaphor but don't get it at all. Religious freedom isn't a narrow valley; it's an enormous Schelling hyperplane. 85% of people are religious, but no majority is Christian or Hindu or Kuvah'magh or Kraẞël or Ŧ̈ř̈ȧ̈ӎ͛ṽ̥ŧ̊ħ or Sisters of the Screaming Nightshroud of Ɀ̈ӊ͢Ṩ͎̈Ⱦ̸Ḥ̛͑.. These religions don't agree on many things, but they all pull for freedom of religion over the crazy *#%! the other religions want.

Suppose there were some gears in physics we weren't smart enough to understand at all. What would that look like to us?

It would look like phenomena that appear intrinsically random, wouldn't it? Like, imagine there were a simple rule about the spin of electrons that we just. don't. get. Instead of noticing the simple pattern ("Electrons are up if the number of Planck timesteps since the beginning of the universe is a multiple of 3"), we'd only be able to figure out statistical rules of thumb for our measurements ("we measure electrons as up 1/3 of the time").

My intuitions conflict here. On the one hand, I totally expect there to be phenomena in physics we just don't get. On the other hand, the research programs you might undertake under those conditions (collect phenomena which appear intrinsically random and search for patterns) feel like crackpottery.

Maybe I should put more weight on superdeterminism.

Humans are computationally bounded, Bayes is not. In an ideal Bayesian perspective:

- Your prior must include all possible theories a priori. Before you opened your eyes as a baby, you put some probability on being in a universe with Quantum Field Theory with gauge symmetry and updated from there.

- You update with unbounded computation. There's no such thing as proofs, since all proofs are tautological.

Humans are computationally bounded and can't think this way.

(riffing)

"Ideas" find paradigms for modeling the universe that may be profitable to track under limited computation. Maybe you could understand fluid behavior better if you kept track of temperature, or understand biology better if you kept track of vital force. With a Bayesian-lite perspective, they kinda give you a prior and places to look where your beliefs are "malleable".

"Proofs" (and evidence) are the justifications for answers. With a Bayesian-lite perspective, they kinda give you conditional probabilities.

"Answers" are useful because they can become precomputed, reified, cached beliefs with high credence and inertia that you can treat as approximately atomic. In a tabletop physics experiment, you can ignore how your apparatus will gravitationally move the earth (and the details of the composition of the earth). Similarly, you can ignore how the tabletop physics experiment will move your belief about the conservation of energy (and the details of why your credences about the conservation of energy are what they are).

Statements made to the media pass through an extremely lossy compression channel, then are coarse-grained, and then turned into speech acts.

That lossy channel has maybe one bit of capacity on the EA thing. You can turn on a bit that says "your opinions about AI risk should cluster with your opinions about Effective Altruists", or not. You don't get more nuance than that.[1]

If you have to choose between outputting the more informative speech act[2] and saying something literally true, it's more cooperative to get the output speech act correct.

(This is different from the Supreme Court case, where I would agree with you)

- ^

I'm not sure you could make the other side of the channel say "Dan Hendrycks is EA adjacent but that's not particularly necessary for his argument" even if you spent your whole bandwidth budget trying to explain that one message.

- ^

See Grice's Maxims

If someone wants to distance themselves from a group, I don't think you should make a fuss about it. Guilt by association is the rule in PR and that's terrible. If someone doesn't want to be publicly coupled, don't couple them.

I think the classic answer to the "Ozma Problem" (how to communicate to far-away aliens what earthlings mean by right and left) is the Wu experiment. Electromagnetism and the strong nuclear force aren't handed, but the weak nuclear force is handed. Left-handed electrons participate in weak nuclear force interactions but right-handed electrons are invisible to weak interactions[1].

(amateur, others can correct me)

- ^

Like right-handed electrons, right-handed neutrinos are invisible to weak interactions. Unlike electrons, neutrinos are also invisible to the other forces[2]. So the standard model basically predicts there should be invisible particles whizzing around everywhere that we have no way to detect or confirm exist at all.

- ^

Besides gravity

Can you symmetrically put the atoms into that entangled state? You both agree on the charge of electrons (you aren't antimatter annihilating), so you can get a pair of atoms into |↑,↑⟩, but can you get the entangled pair to point in opposite directions along the plane of the mirror?

Edit: Wait, I did that wrong, didn't I? You don't make a spin up atom by putting it next to a particle accelerator sending electrons up. You make a spin up atom by putting it next to electrons you accelerate in circles, moving the electrons in the direction your fingers point when a (real) right thumb is pointing up. So one of you will make a spin-up atom and the other will make a spin-down atom.

No, that's a very different problem. The matrix overlords are Laplace's demon, with god-like omniscience about the present and past. The matrix overlords know the position and momentum of every molecule in my cup of tea. They can look up the microstate of any time in the past, for free.

The future AI is not Laplace's demon. The AI is informationally bounded. It knows the temperature of my tea, but not the position and momentum of every molecule. Any uncertainties it has about the state of my tea will increase exponentially when trying to predict into the future or retrodict into the past. Figuring out which water molecules in my tea came from the kettle and which came from the milk is very hard, harder than figuring out which key encrypted a ciphertext.
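Exponential growth of uncertainty in both time directions can be seen in a toy system. This minimal Python sketch assumes Arnold's cat map as a stand-in for the tea. The cat map is a standard reversible, area-preserving chaotic map on the unit square with wrap-around; it is not a model of fluids or heat. Two states start 10^-12 apart. Under the map (prediction) or its exact inverse (retrodiction), the gap grows by a factor of about 2.6 per step until it is as large as the system itself.

```python
import math

# ASSUMPTION: Arnold's cat map, a toy reversible chaotic system on the unit torus,
# stands in for the tea. It illustrates error growth only.
def forward(x, y):
    """Advance one time step (prediction)."""
    return (2 * x + y) % 1.0, (x + y) % 1.0


def backward(x, y):
    """Exact inverse of forward(): go back one time step (retrodiction)."""
    return (x - y) % 1.0, (-x + 2 * y) % 1.0


def torus_distance(a, b):
    """Distance on the unit torus, where coordinates wrap around at 1.0."""
    dx = abs(a[0] - b[0]) % 1.0
    dy = abs(a[1] - b[1]) % 1.0
    return math.hypot(min(dx, 1 - dx), min(dy, 1 - dy))


def divergence(step, start, error=1e-12, steps=40):
    """Distances between two trajectories that start `error` apart, after each step."""
    a, b = start, (start[0] + error, start[1])
    history = []
    for _ in range(steps):
        a, b = step(*a), step(*b)
        history.append(torus_distance(a, b))
    return history


start = (0.3, 0.7)
for name, step in (("predict", forward), ("retrodict", backward)):
    gaps = divergence(step, start)
    print(name, [f"{d:.0e}" for d in gaps[::10]])  # gap after steps 1, 11, 21, 31
```

The growth rate is the map's largest eigenvalue, (3 + √5)/2 ≈ 2.618, in both directions. So each extra step of prediction or retrodiction costs a constant factor in measurement precision.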

Oh, wait, is this "How does a simulation keep secrets from the (computationally bounded) matrix overlords?"

I don't think I understand your hypothetical. Is your hypothetical about a future AI which has:

- Very accurate measurements of the state of the universe in the future

- A large amount of compute, but not exponentially large

- Very good algorithms for retrodicting* the past

I think it's exponentially hard to retrodict the past. It's hard in a similar way as encryption is hard. If an AI isn't powerful enough to break encryption, it also isn't powerful enough to retrodict the past accurately enough to break secrets.

If you really want to keep something secret from a future AI, I'd look at ways of ensuring the information needed to theoretically reconstruct your secret is carried away from the earth at the speed of light in infrared radiation. Write the secret in a sealed room, atomize the room to plasma, then cool the plasma by exposing it to the night sky.

*Predicting is using your knowledge of the present to infer the state of the future. Retrodicting is using your knowledge of the present to infer the state of the past.