Extreme Security

post by lc · 2022-08-15T12:11:05.147Z · 6 comments


What makes "password" a bad password?

You might say that it's because everybody else is already using it, and maybe you'd be correct that everybody in the world deciding to no longer use the password "password" could eventually make it acceptable again.

But consider this: if everyone who was using that password for something changed it tomorrow, and credibly announced so, it would still be an English dictionary word. Any cracker that lazily included the top N words in an English dictionary in a cracking list would still be liable to break it, not because people actually use every English dictionary word, but simply because trying the top N English words is a commonly deployed tactic.
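The "try the top N words" tactic is simple enough to sketch. This is a minimal illustration, not how any particular cracker works: it assumes an unsalted SHA-256 hash and a hypothetical seven-word list. Real tools like hashcat and John the Ripper use far larger lists, mangling rules, and heavily optimized hashing.

```python
import hashlib
from typing import Iterable, Optional


def sha256_hex(candidate: str) -> str:
    """Hash a candidate the way our hypothetical target stored its password."""
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest()


def dictionary_attack(target_hash: str, wordlist: Iterable[str], top_n: int) -> Optional[str]:
    """Try only the first top_n words of the list; return the match or None."""
    for i, word in enumerate(wordlist):
        if i >= top_n:
            break
        if sha256_hex(word) == target_hash:
            return word
    return None


# Hypothetical "most common English words" list, for illustration only.
COMMON_WORDS = ["the", "of", "and", "to", "password", "house", "river"]

# Even if nobody "uses" it any more, it is still a word, so it still gets tried.
stolen_hash = sha256_hex("password")
print(dictionary_attack(stolen_hash, COMMON_WORDS, top_n=len(COMMON_WORDS)))  # password
```

The attacker never needs to know who uses "password"; it falls out of a generic list the attacker was going to try anyway.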

You could go one step further and say "password" is also a bad password because it's in that broader set of English dictionary words, but really, you should just drop the abstraction altogether. "Password" is a bad password because hackers are likely to try it. Hackers are likely to try it because they're trying to break your security, yes, but also because of a bunch of seemingly irrelevant details, like what online password-cracking resources tell humans to do and the standard features of programs like hashcat and John the Ripper. If, due to some idiosyncratic psychological quirk, hackers were guaranteed never to think of "password" as a possible password and never managed to insert it into their cracking lists, it would be the Best Password. A series of 17 null bytes (the character C uses to mark the end of a string) still tends to be an excellent choice when the website accepts it, and simple derivations of that strategy will probably go on being an excellent choice despite my mentioning them in this LW post.

Generating a password with a high amount of "entropy" is just a way of making password crackers very unlikely to break it without you having to violate Shannon's maxim ("the enemy knows the system"). Nobody wants to violate Shannon's maxim, because doing so means they can't talk about their cool tricks publicly, and their system's design itself becomes a confidential secret in need of protection.
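For a password whose characters are drawn independently and uniformly at random, entropy is just length * log2(alphabet size), and that number does not shrink if the attacker learns exactly how the password was generated. A minimal sketch using Python's `secrets` module; the 20,000-word dictionary is a hypothetical size chosen for comparison, and a word picked by a human is not uniform, so its real strength is lower still.

```python
import math
import secrets

HEX_ALPHABET = "0123456789abcdef"  # 16 distinct symbols


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy of a string whose characters are drawn independently and uniformly."""
    return length * math.log2(alphabet_size)


def random_password(length: int, alphabet: str = HEX_ALPHABET) -> str:
    """Draw each character with a cryptographically secure RNG."""
    return "".join(secrets.choice(alphabet) for _ in range(length))


# 18 random hex characters: 18 * log2(16) = 72 bits.
print(entropy_bits(18, len(HEX_ALPHABET)))  # 72.0

# One word drawn uniformly from a hypothetical 20,000-word dictionary.
print(round(entropy_bits(1, 20_000), 1))  # 14.3
```

Note that the alphabet is written out by hand: deriving it from `string.hexdigits` would include both cases of a-f and skew the distribution.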

Unfortunately, "not violating Shannon's maxim" is not always a luxury you have. There are legitimate and recognized circumstances where modeling the particular opponents you're liable to face becomes critical for adequate performance: high-frequency traders who sometimes choose to ignore exploitable bugs for an extra millisecond saved in latency; corporations with "assume breach" network stances like the kinds CrowdStrike enables; racketeers, whose power comes from the number of people they can trust and who compete with other racketeers; secret service organizations. There are nano-industries I can't even name, because explaining how they're vulnerable would be an outfo-hazard. As the black hole is to physicists, these industrial problems are to security engineers. Where strong performance requirements, pricey defenses, and predictable attacker behavior coexist, you gotta adopt a different, more complete model, even if it means you can't make a DEFCON presentation.

My "clever-but-not-actually-useful-name" for these risk mitigation strategies is Extreme Security because it's security in extremis - not because these systems are particularly secure, or even because the problems are particularly hard. They're what happens when you're forced to use the minimum security necessary instead of finding a "merely" sufficient configuration for a set of resources.

Whether it's an extreme security situation that forced your hand or simple laziness, there's nothing about modeling your opponent that makes your security solution "wrong" or "incomplete". The purpose of security is to (avoid getting) cut (by) the enemy. For most of human history, before computers, we just thought of threat modeling as a natural part of what security was: you don't spend more money on walls if Igor is a dumbass and you could be spending it on internal political battles instead. The opportunity cost of playing defense, or the asymmetry of most "security problems", meant you had to take into account all the infoz to defeat bandits. It was only when the U.S. built armed forces the size of every other military, when computer programmers' adversaries became "every hacker in the world that might target their systems", and when police and intelligence agencies began putting cameras armed with facial and license plate recognition on every street corner in New York and London, that we started to think of security situations you can't talk about in a journal as "insufficient". But of course, if you leave your door unlocked and no one robs you, by chance or something else, you've won.

Now, I see alignment conversations like this everywhere:

Alice: Suppose we had an interpretability mechanism that showed us what the AI was optimizing...

Bob: Ah, but what if the AI has modified itself in anticipation of such oversight, and will change its optimization, unbeknownst even to itself, once surveillance is lifted or once we're no longer able to react?

Charlie: Suppose we have two AIs, each playing a zero sum debate game...

Bob: Ah, but what if they spontaneously learn Updateless Decision Theory, learn to read each other's code, and start making compacts with each other that harm humans?

Denice: Suppose we extensively catalog SOTA AI capabilities and use only lower-capability helper AIs to solve the harder alignment problems...

Bob: Ah, but what if we get an AI that hides its full power prior to us deploying it for safety research, or using it for some important social function, and then it performs a treacherous turn?

When someone asks "what's the best movie", the pedantically correct answer is to back up, explain that the question is underspecified, and then ask if they mean "what movie maximizes satisfaction for me" or "what movie produces the highest average satisfaction for a particular group of people". And when someone asks "what's the best possible security configuration", you could interpret that as "what's the security configuration that works against all adversaries", but really they probably mean "what security configuration is most likely to work against the particular foe I'm liable to face", and oftentimes that means you should ask them about their threat model.

Instead of asking clarifying questions, these conversations (and for the record, I stand a good chance of involuntarily strawmanning the people who would identify as Bob) often feel to me like they take for granted that we're fighting the mind shaped exactly the way it needs to be to kill us. Instead of treating AGI like an engineering problem, the people I see talk about alignment treat it like a security problem, where the true adversary is not a particularly defined superintelligence resulting from a particularly defined ML training process, but an imaginary Evil Supervillain designing this thing specifically to pass our checks and balances.

Alignment is still an extremely hard problem, but we shouldn't pretend it's harder than it is by acting like we don't get to choose our dragon. A strong claim that an oversight mechanism is going to fail (as opposed to merely being extraordinarily risky) because the AGI will be configured a certain way is a claim that needs substantiation. If the way we get this AGI is through some sort of complicated, layered reinforcement learning paradigm, where a wide variety of possible agents with different psychologies will be given the opportunity to influence their own or each other's training processes, then maybe it really is highly likely that we'll land on whichever one has the foresight and inclination to pull some complicated hijinks. But if the way we get AGI is something like scaling up GPT-style transformers, then presumably the reason we won't get an oracle that's hiding its power level is that "that's not how gradient descent works".

I realize there is good reason to be skeptical of such arguments: they're fragile. I don't want to use this kind of logic, and you don't want to use this kind of logic, when trying to prevent something from ending the world. It relies on assumptions about when and how we will get AGI, and nobody today can confidently know the answers to those questions. We have strong theoretical reasons to believe most of the incidental ways you can scam computer hackers wouldn't work on an unmuddled superintelligent AI capable of modifying its own source code and re-deriving arithmetic from the Peano axioms. And we should do ourselves a FAVAR and try not to risk the world on these intuitions.

Yet the schemes I mentioned are currently pretty much the most sound proposals we have, and they're fragile mostly when we unwittingly find ourselves in possession of particular minds. Determining whether or not we are likely to actually be overseeing those minds is a problem worth exploring! And conditioning such criticisms on plausible assumptions about the set of AIs that might actually come out of DeepMind is an important part of understanding both when they fail and when they might succeed anyway!

6 comments


comment by Thomas Kwa (thomas-kwa) · 2022-08-16T20:33:31.789Z

Related: some worst-case assumptions are methodological, and some are because you're facing adversarial pressure, and it pays to know which is which.

comment by RasmusHB (JohannWolfgang) · 2022-08-22T18:54:47.699Z

I found the first part of the post a little bit cryptic (no pun intended). Since the second part is not just aimed at an audience with technical security knowledge, maybe at least including links for Shannon's maxim and outfo-hazard (ok, that one is not technical) would help. Even after googling it, I still don't understand the part about Shannon's maxim, e.g.,

Generating a password with a high amount of "entropy" is just a way of ensuring that password crackers are very unlikely to break them without violating Shannon's maxim.

If Shannon's maxim is "The enemy knows the system", does violating it mean that they do not know it? How does not knowing the system help crackers crack high-entropy passwords? Or does "violating Shannon's maxim" mean that the attacker knows the secret key? In that case, wouldn't it be better to say "violating Kerckhoffs's principle"? (I prefer Kerckhoffs's principle anyway; Shannon's maxim seems IMO just a more cryptic (sorry again) restatement of it.)

Otherwise, nice post.

↑ comment by lc · 2022-08-22T19:03:33.291Z

Violating Shannon's maxim means making a security system that is insecure if an attacker knows how the system works. In this case, a password generation mechanism like "use the most memorable password not in the password cracking list" violates Shannon's maxim: if an attacker knows you're doing that, he can generate such passwords himself and test them against the password hash. A password generation mechanism like "select 18 random hexadecimal characters" is secure even if an attacker knows you're doing that, because you're randomly selecting from 16^18 (= 2^72) possible passwords.
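The contrast in this reply can be made concrete. A minimal sketch, assuming a hypothetical cracking list and a hypothetical ranked list of "memorable" candidates: the deterministic scheme hands an attacker who knows the system the exact password, while knowing the random scheme only reveals the size of the search space.

```python
import secrets

# Hypothetical data, for illustration only.
CRACKING_LIST = {"password", "123456", "qwerty", "letmein"}
MEMORABLE_RANKED = ["password", "letmein", "sunshine", "dragonfly", "marmalade"]


def memorable_scheme(cracking_list: set, ranked: list) -> str:
    """Return the most memorable candidate not already on the cracking list.

    Deterministic: anyone with the same inputs gets the same output.
    """
    for word in ranked:
        if word not in cracking_list:
            return word
    raise ValueError("every candidate is already on the cracking list")


def random_hex_scheme(n_hex_chars: int = 18) -> str:
    """Return n_hex_chars uniformly random hex characters (assumes an even count)."""
    return secrets.token_hex(n_hex_chars // 2)  # token_hex(n) yields 2*n hex chars


# The enemy knows the system: re-running the memorable scheme reproduces the password.
defender = memorable_scheme(CRACKING_LIST, MEMORABLE_RANKED)
attacker_guess = memorable_scheme(CRACKING_LIST, MEMORABLE_RANKED)
print(defender == attacker_guess)  # True

# Knowing the random scheme only tells the attacker how many passwords to try.
print(16 ** 18)  # 4722366482869645213696
```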

↑ comment by tricky_labyrinth · 2022-12-24T06:50:55.867Z

I've heard it go by the name security through obscurity (see https://en.wikipedia.org/wiki/Security_through_obscurity).

comment by magic9mushroom · 2024-04-10T07:42:17.519Z

I think the basic assumed argument here (though I'm not sure where or even if I've seen it explicitly laid out) goes essentially like this:

- Using neural nets is more like the immune system's "generate everything and filter out what doesn't work" than it is like normal coding or construction. And there are limits on how much you can tamper with this, because the whole point of neural nets is that humans don't know how to write code as good as neural nets - if we knew how to write such code deliberately, we wouldn't need to use neural nets in the first place.

- You hopefully have part of that filter designed to filter out misalignment. Presumably we agree that if you don't have this, you are going to have a bad time.

- This means that two things will get through your filter: golden-BB false negatives in exactly the configurations that fool all your checks, and true aligned AIs which you want.

- But both corrigibility and perfect sovereign alignment are highly rare (corrigibility because it's instrumentally anti-convergent, and perfect sovereign alignment because value is fragile), which means that your filter for misalignment is competing against that rarity to determine what comes out.

- If P(golden-BB false negative) << P(alignment), all is well.

- But if P(golden-BB false negative) >> P(alignment) despite your best efforts, then you just get golden-BB false negatives. Sure, they're highly weird, but they're still less weird than what you're looking for and so you wind up creating them reliably when you try hard enough to get something that passes your filter.
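The bullets above amount to a base-rate calculation. A minimal sketch under hypothetical simplifying assumptions: every truly aligned AI passes the filter, every other AI is caught except golden-BB false negatives, and p_golden_bb is the prior probability of drawing one of those. The probabilities below are made up purely for illustration.

```python
def p_aligned_given_pass(p_aligned: float, p_golden_bb: float) -> float:
    """Fraction of filter-passing AIs that are truly aligned.

    Assumes (hypothetically) that only aligned AIs and golden-BB
    false negatives get through the filter.
    """
    return p_aligned / (p_aligned + p_golden_bb)


# P(golden-BB false negative) << P(alignment): almost everything that passes is aligned.
print(round(p_aligned_given_pass(p_aligned=1e-3, p_golden_bb=1e-6), 3))  # 0.999

# P(golden-BB false negative) >> P(alignment): almost everything that passes is a false negative.
print(round(p_aligned_given_pass(p_aligned=1e-6, p_golden_bb=1e-3), 3))  # 0.001
```

Under these assumptions, making the filter stricter only helps if it shrinks p_golden_bb faster than it shrinks the chance of letting aligned AIs through.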

comment by ShowMeTheProbability · 2022-08-16T07:53:48.430Z

we shouldn't pretend it's harder than it is by acting like we don't get to choose our dragon.

I fully agree. I believe it would be good to 'roll out a carpet' for the simplest friendly AI, assuming such a thing is possible, to increase the odds of a friendly pick from any potential selection of candidate AIs.

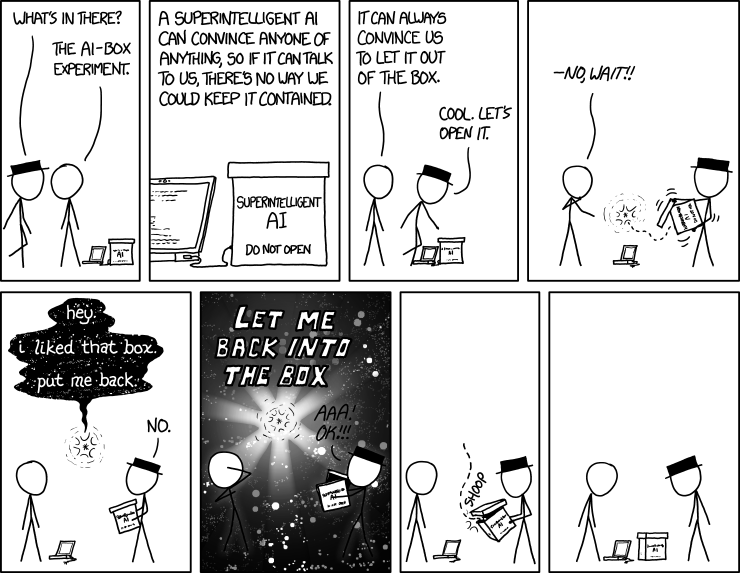

I feel like the XKCD nails it: give the AI-box an opportunity to communicate without making nonconsensual changes to its environment, because, ya know, it lives there.