Closed Limelike Curves's Shortform

post by Closed Limelike Curves · 2019-08-16T03:53:12.158Z · LW · GW · 43 comments

comment by Closed Limelike Curves · 2024-07-18T16:06:15.275Z · LW(p) · GW(p)

The Wikipedia articles on the VNM theorem, Dutch Book arguments, money pump, Decision Theory, Rational Choice Theory, etc. are all a horrific mess. They're also completely disjoint, without any kind of Wikiproject or wikiboxes for tying together all the articles on rational choice.

It's worth noting that Wikipedia is the place where you—yes, you!—can actually have some kind of impact on public discourse, education, or policy. There is just no other place you can get so many views with so little barrier to entry. A typical Wikipedia article will get more hits in a day than all of your LessWrong blog posts have gotten across your entire life, unless you're @Eliezer Yudkowsky.

I'm not sure if we actually "failed" to raise the sanity waterline, like people sometimes say, or if we just didn't even try. Given even some very basic low-hanging fruit interventions like "write a couple good Wikipedia articles" still haven't been done 15 years later, I'm leaning towards the latter. edit me senpai

EDIT: Discord to discuss editing here.

↑ comment by SCP (sami-petersen) · 2024-07-23T10:41:43.199Z · LW(p) · GW(p)

I appreciate the intention here but I think it would need to be done with considerable care, as I fear it may have already led to accidental vandalism of the epistemic commons. Just skimming a few of these Wikipedia pages, I’ve noticed several new errors. These can be easily spotted by domain experts but might not be obvious to casual readers.[1] I can’t know exactly which of these are due to edits from this community, but some very clearly jump out.[2]

I’ll list some examples below, but I want to stress that this list is not exhaustive. I didn’t read most parts of most related pages, and I omitted many small scattered issues. In any case, I’d like to ask whoever made any of these edits to please reverse them, and to triple check any I didn’t mention below.[3] Please feel free to respond to this if any of my points are unclear![4]

False statements

The page on Independence of Irrelevant Alternatives (IIA) claims that IIA is one of the vNM axioms, and that one of the vNM axioms “generalizes IIA to random events.”

Both are false. The similar-sounding Independence axiom of vNM is neither equivalent to, nor does it entail, IIA (and so it can’t be a generalisation). You can satisfy Independence while violating IIA. This is not a technicality; it’s a conflation of distinct and important concepts. This is repeated in several places.

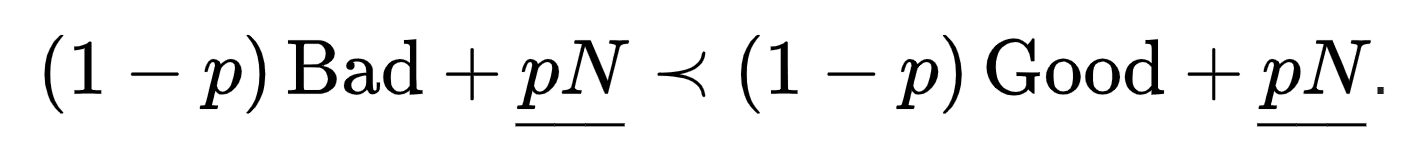

- The mathematical statement of Independence there is wrong. In the section conflating IIA and Independence, it’s defined as the requirement that

$$(1-p)\,\text{Bad} + p\,N \prec (1-p)\,\text{Good} + p\,N$$

for any p ∈ [0, 1] and any outcomes Bad, Good, and N satisfying Bad≺Good. This mistakes weak preference for strict preference. To see this, set p=1 and observe that the line now reads N≺N. (The rest of the explanation in this section is also problematic but the reasons for this are less easy to briefly spell out.)

- The Dutch book page states that the argument demonstrates that “rationality requires assigning probabilities to events [...] and having preferences that can be modeled using the von Neumann–Morgenstern axioms.” This is false. It is an argument for probabilistic beliefs; it implies nothing at all about preferences. And in fact, the standard proof of the Dutch book theorem assumes something like expected utility (Ramsey’s thesis).

This is a substantial error, making a very strong claim about an important topic. And it's repeated elsewhere, e.g. when stating that the vNM axioms “apart from continuity, are often justified using the Dutch book theorems.”

- The section ‘The theorem’ on the vNM page states the result using strict preference/inequality. This is a corollary of the theorem but does not entail it.
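To make the p=1 observation in the Independence bullet concrete, here is a minimal sketch, assuming an agent whose preferences over lotteries have an expected-utility representation; the utility numbers are hypothetical. At p=1 both mixtures collapse to the sure lottery N, so the agent is indifferent between them: the weak-preference version of the condition still holds, but the strict version fails.

```python
from fractions import Fraction

# Hypothetical utilities for an expected-utility agent with Bad strictly worse than Good.
u = {"Bad": Fraction(0), "Good": Fraction(1), "N": Fraction(1, 2)}

# Degenerate lotteries: each outcome for sure (outcome -> probability).
N = {"N": Fraction(1)}
Bad = {"Bad": Fraction(1)}
Good = {"Good": Fraction(1)}


def mix(p, first, second):
    """Compound lottery giving `first` with probability p and `second` otherwise."""
    out = {}
    for outcome, q in first.items():
        out[outcome] = out.get(outcome, 0) + p * q
    for outcome, q in second.items():
        out[outcome] = out.get(outcome, 0) + (1 - p) * q
    return out


def expected_utility(lottery, utility):
    return sum(prob * utility[outcome] for outcome, prob in lottery.items())


def strict_mixture_holds(p):
    """Is (1-p)Bad + pN strictly worse than (1-p)Good + pN for this agent?"""
    worse = expected_utility(mix(p, N, Bad), u)
    better = expected_utility(mix(p, N, Good), u)
    return worse < better


for p in (Fraction(0), Fraction(1, 2), Fraction(1)):
    print(p, strict_mixture_holds(p))  # prints True, True, then False at p = 1
```

Any expected-utility agent behaves this way, which is why Independence is usually stated with weak preference (or with p restricted to p < 1 in a strict version).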

Misleading statements

- The decision theory page states that it’s “a branch of applied probability theory and analytic philosophy concerned with the theory of making decisions based on assigning probabilities to various factors and assigning numerical consequences to the outcome.” This is a poor description. Decision theorists don’t simply assume this, nor do they always conclude it—e.g. see work on ambiguity or lexicographic preferences. And besides this, decision theory is arguably more central in economics than the fields mentioned.

- The IIA article’s first sentence states that IIA is an “axiom of decision theory and economics” whereas it’s classically one of social choice theory, in particular voting. This is at least a strange omission for the context-setting sentence of the article.

- It’s stated that IIA describes “a necessary condition for rational behavior.” Maybe the individual-choice version of IIA is, but the intention here was presumably to refer to Independence. This would be a highly contentious claim though, and definitely not a formal result. It’s misleading to describe Independence as necessary for rationality.

- The vNM article states that obeying the vNM axioms implies that agents “behave as if they are maximizing the expected value of some function defined over the potential outcomes at some specified point in the future.” I’m not sure what ‘specified point in the future’ is doing there; that’s not within the framework.

- The vNM article states that “the theorem assumes nothing about the nature of the possible outcomes of the gambles.” That’s at least misleading. It assumes all possible outcomes are known, that they come with associated probabilities, and that these probabilities are fixed (e.g., ruling out the Newcomb paradox).
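Since IIA is classically a social-choice condition, a standard voting illustration may help separate it from vNM Independence. The sketch below uses the Borda count with a hypothetical five-voter electorate: deleting candidate C flips the winner from B to A even though no voter changes how they rank A against B, which is exactly what IIA forbids. Independence, by contrast, is a condition on one agent's preferences over lotteries, so this example says nothing about it.

```python
# Hypothetical electorate: (number of voters, ranking from best to worst).
BALLOTS = [
    (3, ["A", "B", "C"]),  # 3 voters rank A > B > C
    (2, ["B", "C", "A"]),  # 2 voters rank B > C > A
]


def borda_winner(ballots, candidates):
    """Borda count restricted to `candidates`; ties broken alphabetically.

    With n candidates, a voter's top remaining choice gets n - 1 points,
    the next gets n - 2, and so on down to 0.
    """
    scores = {c: 0 for c in candidates}
    n = len(candidates)
    for count, ranking in ballots:
        # Drop withdrawn candidates but keep each voter's relative order.
        restricted = [c for c in ranking if c in candidates]
        for position, c in enumerate(restricted):
            scores[c] += count * (n - 1 - position)
    winner = max(sorted(scores), key=lambda c: scores[c])
    return winner, scores


print(borda_winner(BALLOTS, {"A", "B", "C"})[0])  # B (scores A=6, B=7, C=2)
print(borda_winner(BALLOTS, {"A", "B"})[0])       # A (scores A=3, B=2)
```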

Besides these problems, various passages in these articles and others are unclear, lack crucial context, contain minor issues, or just look prone to leave readers with a confused impression of the topic. (This would take a while to unpack, so my many omissions should absolutely not be interpreted as green lights.) As OP wrote: these pages are a mess. But I fear the recent edits have contributed to some of this.

So, as of now, I’d strongly recommend against reading Wikipedia for these sorts of topics—even for a casual glance. A great alternative is the Stanford Encyclopedia of Philosophy, which covers most of these topics.

- ^

I checked this with others in economics and in philosophy.

- ^

E.g., the term ‘coherence theorems’ is unheard of outside of LessWrong, as is the frequency of italicisation present in some of these articles.

- ^

I would do it myself but I don’t know what the original articles said and I’d rather not have to learn the Wikipedia guidelines and re-write the various sections from scratch.

- ^

Or to let me know that some of the issues I mention were already on Wikipedia beforehand. I’d be happy to try to edit those.

↑ comment by habryka (habryka4) · 2024-07-23T18:26:34.036Z · LW(p) · GW(p)

Or to let me know that some of the issues I mention were already on Wikipedia beforehand. I’d be happy to try to edit those.

None of these changes are new as far as I can tell (I checked the first three), so I think your basic critique falls through. You can check the edit history yourself by just clicking on the "View History" button and then pressing the "cur" button next to the revision entry you want to see the diff for.

Like, indeed, the issues you point out are issues, but it is not the case that people reading this have made the articles worse. The articles were already bad, and "acting with considerable care" in a way that implies inaction would mean leaving inaccuracies uncorrected.

I think people should edit these pages, and I expect them to get better if people give it a real try. I also think you could give it a try and likely make things better.

Edit: Actually, I think my deeper objection is that most of the critiques here (made by Sammy) are just wrong. For example, of course Dutch books/money pumps frequently get invoked to justify VNM axioms. See for example this.

↑ comment by SCP (sami-petersen) · 2024-07-23T19:06:11.344Z · LW(p) · GW(p)

check the edit history yourself by just clicking on the "View History" button and then pressing the "cur" button

Great, thanks!

I hate to single out OP but those three points were added by someone with the same username (see first and second points here; third here). Those might not be entirely new but I think my original note of caution stands.

↑ comment by habryka (habryka4) · 2024-07-23T20:56:02.970Z · LW(p) · GW(p)

Well, thinking harder about this, I do think your critiques on some of these is wrong. For example, it is the case that the VNM axioms frequently get justified by invoking dutch books (the most obvious case is the argument for transitivity, where the standard response is "well, if you have circular preferences I can charge you a dollar to have you end up where you started").

Of course, justifying axioms is messy, and there isn't any particularly objective way of choosing axioms here, but in as much as informal argumentation happens, it tends to use a Dutch-book-like structure. I've had many conversations with people who have formal experience in academia and economics here, and this is definitely a normal way for Dutch books to be used.

For a concrete example of this, see this recent book/paper: https://www.iffs.se/media/23568/money-pump-arguments.pdf
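The circular-preferences argument for transitivity in this comment can be sketched in a few lines. This is a minimal illustration, not taken from the linked paper: it assumes a hypothetical agent that pays a fixed fee of one unit every time it is offered an item it strictly prefers to the one it holds.

```python
def run_money_pump(prefers, start, offers, fee=1):
    """Offer the agent each item in `offers` in turn; it pays `fee` to swap
    whenever it strictly prefers the offered item to what it holds.
    Returns (final holding, total fees paid)."""
    holding, paid = start, 0
    for offered in offers:
        if prefers(offered, holding):
            holding = offered
            paid += fee
    return holding, paid


# Hypothetical cyclic preferences: A over B, B over C, and C over A.
CYCLIC = {("A", "B"), ("B", "C"), ("C", "A")}
# Hypothetical transitive preferences: A over B over C.
TRANSITIVE = {("A", "B"), ("B", "C"), ("A", "C")}


def strict_pref(pairs):
    """Turn a set of (better, worse) pairs into a strict-preference test."""
    return lambda x, y: (x, y) in pairs


print(run_money_pump(strict_pref(CYCLIC), "A", ["C", "B", "A"]))      # ('A', 3)
print(run_money_pump(strict_pref(TRANSITIVE), "A", ["C", "B", "A"]))  # ('A', 0)
```

The cyclic agent ends up holding exactly what it started with and is three units poorer; the transitive agent declines every offer.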

↑ comment by SMK (Sylvester Kollin) · 2024-07-23T21:27:47.025Z · LW(p) · GW(p)

You are conflating the Dutch book arguments for probabilism (Pettigrew, 2020) with the money-pump arguments for the vNM axioms (Gustafsson, 2022).

↑ comment by habryka (habryka4) · 2024-07-23T22:33:12.453Z · LW(p) · GW(p)

I've pretty consistently seen "Dutch Book arguments" used interchangeably with money pumps, by many different people. My understanding (which is also the SEP's) is that "what is a money pump vs. a dutch book argument" is not particularly well-defined and the structure of the money pump arguments is basically the same as the structure of the dutch book arguments.

This is evident from just the basic definitions:

"A Dutch book is a set of bets that ensures a guaranteed loss, i.e. the gambler will lose money no matter what happens."

Which is of course exactly what a money pump is (where you are the person offering the gambles and therefore make guaranteed money).

The money pump Wikipedia article also links to the Dutch book article, and the book/paper I linked describes dutch books as a kind of money pump argument. I have never heard anyone make a principled distinction between a money pump argument and a dutch book argument (and I don't see how you could get one without the other).

Indeed, the Oxford Reference says explicitly:

money pump

A pattern of intransitive or cyclic preferences causing a decision maker to be willing to pay repeated amounts of money to have these preferences satisfied without gaining any benefit. [...] Also called a Dutch book [...]

(Edit: It's plausible that for weird historical reasons the exact same argument, when applied to probabilism would be called a "dutch book" and when applied to anything else would be called a "money pump", but I at least haven't seen anyone defend that distinction, and it doesn't seem to follow from any of the definitions)

↑ comment by SCP (sami-petersen) · 2024-07-23T23:32:18.875Z · LW(p) · GW(p)

I think it'll be helpful to look at the object level. One argument says: if your beliefs aren't probabilistic but you bet in a way that resembles expected utility, then you're susceptible to sure loss. This forms an argument for probabilism.[1]

Another argument says: if your preferences don't satisfy certain axioms but satisfy some other conditions, then there's a sequence of choices that will leave you worse off than you started. This forms an argument for norms on preferences.

These are distinct.

These two different kinds of arguments have things in common. But they are not the same argument applied in different settings. They have different assumptions, and different conclusions. One is typically called a Dutch book argument; the other a money pump argument. The former is sometimes referred to as a special case of the latter.[2] But whatever our naming conventions, it's a special case that doesn't support the vNM axioms.

Here's why this matters. You might read the assumptions of the Dutch book theorem, and find them compelling. Then you read an article telling you that this implies the vNM axioms (or constitutes an argument for them). If you believe it, you've been duped.
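A minimal sketch of the first argument (the one for probabilism), assuming, as described above, an agent that treats credence × stake as the fair price of a bet paying `stake` if an event occurs, and whose credences in A and not-A fail to sum to 1. The credence values are hypothetical. Note that the sketch constrains only credences; nothing in it says anything about preferences over outcomes, which is the distinction being drawn here.

```python
def dutch_book_payoffs(credence_a, credence_not_a, stake=1.0):
    """Agent's net payoff in each possible world after a bookie trades with it
    at the agent's own "fair" prices (price = credence * stake).

    If the credences in A and not-A sum to more than 1, the bookie sells the
    agent a bet on each; if they sum to less than 1, the bookie buys both.
    """
    total = credence_a + credence_not_a
    if total > 1:
        side = 1   # agent buys both bets
    elif total < 1:
        side = -1  # agent sells both bets
    else:
        side = 0   # coherent on this pair: no sure-loss book of this form
    bets = {"A": credence_a, "not A": credence_not_a}
    cost = sum(price * stake for price in bets.values())
    payoffs = {}
    for world in bets:  # exactly one of A, not-A is true
        # Only the bet on the event that actually happens pays out.
        winnings = sum(stake for event in bets if event == world)
        payoffs[world] = side * (winnings - cost)
    return payoffs


print(dutch_book_payoffs(0.6, 0.6))  # about -0.2 in both worlds
print(dutch_book_payoffs(0.3, 0.3))  # about -0.4 in both worlds
print(dutch_book_payoffs(0.5, 0.5))  # 0.0 in both worlds
```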

- ^

(More generally, Dutch books exist to support other Bayesian norms like conditionalisation.)

- ^

This distinction is standard and blurring the lines leads to confusions. It's unfortunate when dictionaries, references, or people make mistakes. More reliable would be a key book on money pumps (Gustafsson 2022) referring to a key book on Dutch books (Pettigrew 2020):

"There are also money-pump arguments for other requirements of rationality. Notably, there are money-pump arguments that rational credences satisfy the laws of probability. (See Ramsey 1931, p. 182.) These arguments are known as Dutch-book arguments. (See Lehman 1955, p. 251.) For an overview, see Pettigrew 2020." [Footnote 9.]

↑ comment by habryka (habryka4) · 2024-07-24T02:14:32.471Z · LW(p) · GW(p)

I mean, I think it would be totally reasonable for someone who is doing some decision theory or some epistemology work, to come up with new "dutch book arguments" supporting whatever axioms or assumptions they would come up with.

I am more persuaded that there is a history here of calling money pump arguments that happen to relate to probabilism "dutch books", but I don't think there is really any clear definition that supports this. I agree that there exists the dutch book theorem, and that that one importantly relates to probabilism, but I've just had dozens of conversations with academics, philosophers, and decision theorists where, in the context of both decision-theory and epistemology questions, people brought up dutch books and money pumps interchangeably.

↑ comment by SCP (sami-petersen) · 2024-07-24T09:34:55.878Z · LW(p) · GW(p)

I agree that there exists the dutch book theorem, and that that one importantly relates to probabilism

I'm glad we could converge on this, because that's what I really wanted to convey.[1] I hope it's clearer now why I included these as important errors:

- The statement that the vNM axioms “apart from continuity, are often justified using the Dutch book theorems” is false since these theorems only relate to belief norms like probabilism. Changing this to 'money pump arguments' would fix it.

- There's a claim on the main Dutch book page that the arguments demonstrate that “rationality requires assigning probabilities to events [...] and having preferences that can be modeled using the von Neumann–Morgenstern axioms.” I wouldn't have said it was false if this was about money pumps.[2] I would've said there was a terminological issue if the page equated Dutch books and money pumps. But it didn't.[3] It defined a Dutch book as "a set of bets that ensures a guaranteed loss." And the theorems and arguments relating to that do not support the vNM axioms.

Would you agree?

- ^

The issue of which terms to use isn't that important to me in this case, but let me speculate about something. If you hear domain experts go back and forth between 'Dutch books' and 'money pumps', I think that is likely either because they are thinking of the former as a special case of the latter without saying so explicitly, or because they're listing off various related ideas. If that's not why, then they may just be mistaken. After all, a Dutch book is named that way because a bookie is involved!

- ^

Setting aside that "demonstrates" is too strong even then.

- ^

It looks like OP edited the page just today and added 'or money pump'. But the text that follows still describes a Dutch book, i.e. a set of bets. (Other things were added too that I find problematic but this footnote isn't the place to explain it.)

↑ comment by Closed Limelike Curves · 2024-07-26T03:08:17.262Z · LW(p) · GW(p)

We certainly are, which isn't unique to either of us; Savage discusses them all in a single common framework on decision theory, where he develops both sets of ideas jointly. A money pump is just a Dutch book where all the bets happen to be deterministic. I chose to describe things this way because it lets me do a lot more cross-linking within Wikipedia articles on decision theory, which encourages people reading about one to check out the other.

↑ comment by gwern · 2024-07-23T19:37:28.448Z · LW(p) · GW(p)

You can check the edit history yourself by just clicking on the "View History" button and then pressing the "cur" button next to the revision entry you want to see the diff for.

Note that if the edit history is long or you are doing a lot of checks, there are tools to bisect WP edit histories: at the top of the diff page, "External tools: Find addition/removal (Alternate)"

so eg https://wikipedia.ramselehof.de/wikiblame.php?user_lang=en&lang=en&project=wikipedia&tld=org&article=Von+Neumann–Morgenstern+utility+theorem&needle=behave+as+if+they+are+maximizing+the+expected+value+of+some+function&skipversions=0&ignorefirst=0&limit=500&offmon=7&offtag=23&offjahr=2024&searchmethod=int&order=desc&start=Start&user= identifies in 10s the edit https://en.wikipedia.org/w/index.php?title=Von_Neumann–Morgenstern_utility_theorem&diff=prev&oldid=1165485303 which turns out to be spurious but then we can restart with the older text.
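The idea behind such tools can be sketched as a binary search over a page's revision history. This is a generic sketch, not WikiBlame's actual implementation; the function name is hypothetical, and it assumes the revision texts are already available oldest-first (fetching them, for instance through the MediaWiki API, is left out) and that once the phrase appears it stays in every later revision.

```python
def first_revision_with(revisions, needle):
    """Index of the oldest revision containing `needle`, or None.

    `revisions` is a list of page texts ordered oldest-first. Assumes the
    property is monotone: once `needle` appears, it is in every later text.
    """
    lo, hi = 0, len(revisions)
    while lo < hi:
        mid = (lo + hi) // 2
        if needle in revisions[mid]:
            hi = mid      # first occurrence is at mid or earlier
        else:
            lo = mid + 1  # first occurrence is after mid
    return lo if lo < len(revisions) else None


history = ["intro", "intro phrase", "intro phrase more", "intro phrase more end"]
print(first_revision_with(history, "phrase"))   # 1
print(first_revision_with(history, "missing"))  # None
```

This needs only about log2(n) text checks for n revisions. When the monotonicity assumption fails, for example because text was removed and later re-added, the search can land on a spurious revision like the one above, and one restarts with the older text.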

↑ comment by SMK (Sylvester Kollin) · 2024-07-23T22:13:09.684Z · LW(p) · GW(p)

Edit: Actually, I think my deeper objection is that most of the critiques here (made by Sammy) are just wrong. For example, of course Dutch books/money pumps frequently get invoked to justify VNM axioms. See for example this.

Sami never mentioned money pumps. And "the Dutch books arguments" are arguments for probabilism and other credal norms[1], not the vNM axioms.

- ^

Again, see Pettigrew (2020) (here is a PDF from Richard's webpage).

↑ comment by Gustav Alexandrie (gustav-alexandrie) · 2024-08-07T12:16:22.559Z · LW(p) · GW(p)

I broadly agree with all of Sami's points. However, on this terminological issue I think it is a bit less clear cut. It is true that many decision theorists distinguish between "dutch books" and "money pumps" in the way you are suggesting, and it seems like this is becoming the standard terminology in philosophy. That said, there are definitely some decision theorists that use "Dutch book arguments" to refer to money pump arguments for VNM axioms. For example, Yaari writes that "an agent that violates Expected Utility Theory is vulnerable to a so-called Dutch book".

Now, given that the entry is called "dutch book theorems" and mostly focuses on probabilism, Sami is still right to point out that it is confusing to say that these arguments support EUT. Maybe I would have put this under "misleading" rather than under "false" though.

↑ comment by Closed Limelike Curves · 2024-07-26T02:41:33.424Z · LW(p) · GW(p)

Yes, these Wikipedia articles do have lots of mistakes. Stop writing about them here and go fix them!

↑ comment by SCP (sami-petersen) · 2024-07-26T10:19:59.379Z · LW(p) · GW(p)

I don't appreciate the hostility. I aimed to be helpful in spending time documenting and explaining these errors. This is something a healthy epistemic community is appreciative of, not annoyed by. If I had added mistaken passages to Wikipedia, I'd want to be told, and I'd react by reversing them myself. If any points I mentioned weren't added by you, then as I wrote in my first comment:

...let me know that some of the issues I mention were already on Wikipedia beforehand. I’d be happy to try to edit those.

The point of writing about the mistakes here is to make clear why they indeed are mistakes, so that they aren't repeated. That has value. And although I don't think we should encourage a norm that those who observe and report a problem are responsible for fixing it, I will try to find and fix at least the pre-existing errors.

↑ comment by Closed Limelike Curves · 2024-07-26T17:33:44.274Z · LW(p) · GW(p)

I'm not annoyed by these, and I'm sorry if it came across that way. I'm grateful for your comments. I just meant to say these are exactly the sort of mistakes I was talking about in my post as needing fixing! However, talking about them here isn't going to do much good, because people read Wikipedia, not LessWrong shortform comments, and I'm busy as hell working on social choice articles already.

From what I can tell, there's one substantial error I introduced, which was accidentally conflating IIA with VNM-independence. (Although I haven't double-checked, so I'm not sure they're actually unrelated.) Along with that there's some minor errors involving strict vs. non-strict inequality which I'd be happy to see corrected.

↑ comment by SCP (sami-petersen) · 2024-07-28T23:19:07.212Z · LW(p) · GW(p)

Thanks. Let me end with three comments. First, I wrote a few brief notes here that I hope clarify how Independence and IIA differ. Second, I want to stress that the problem with the use of Dutch books in the articles is a substantial one, not just a verbal one, as I explained here and here. Finally, I’m happy to hash out any remaining issues via direct message if you’d like—whether it’s about these points, others I raised in my initial comment, or any related edits.

↑ comment by niplav · 2024-07-18T18:41:43.871Z · LW(p) · GW(p)

This seems true, thanks for your editing on the related pages.

Trying to collect & link the relevant pages:

- Dutch book theorems

- Von Neumann-Morgenstern utility theorem

- Rational Choice Theory

- Decision Theory

- Cox's Theorem

- Discounting

↑ comment by ProgramCrafter (programcrafter) · 2024-07-20T09:49:54.611Z · LW(p) · GW(p)

There is also article Decision-making.

Importance arguments:

- Five WikiProjects cover this article, but it is only C-class on Wikipedia's quality scale;

- The topic seems quite important for people. If someone who doesn't know how to make decisions stumbles upon the article, the first image they see... is a flowchart, which can scare non-programmers away.

↑ comment by Seth Herd · 2024-07-23T19:12:55.556Z · LW(p) · GW(p)

This seems like a much better target for spreading rationalism. The other listed articles all seem quite detailed and far from the central rationalist project. Decision-making seems like a more likely on-ramp.

↑ comment by Closed Limelike Curves · 2024-07-27T02:25:10.554Z · LW(p) · GW(p)

I'm less interested in spreading rationalism per se and more in teaching people about rationality. The other articles are very strongly+closely related to rationality; I chose them since they're articles describing key concepts in rational choice.

↑ comment by Olli Järviniemi (jarviniemi) · 2024-07-19T19:09:11.255Z · LW(p) · GW(p)

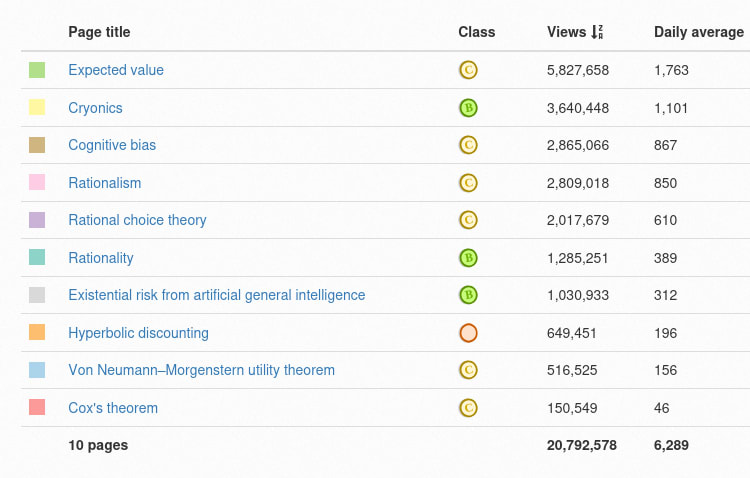

A typical Wikipedia article will get more hits in a day than all of your LessWrong blog posts have gotten across your entire life, unless you're @Eliezer Yudkowsky.

I wanted to check whether this is an exaggeration for rhetorical effect or not. Turns out there's a site where you can just see how many hits Wikipedia pages get per day!

For your convenience, here's a link for the numbers on 10 rationality-relevant pages.

I'm pretty sure my LessWrong posts have gotten more than 1000 hits across my entire life (and keep in mind that "hits" is different from "an actual human actually reads the article"), but fair enough - Wikipedia pages do get a lot of views.

Thanks to the parent for flagging this and doing editing. What I'd now want to see is more people actually coordinating to do something about it - set up a Telegram or Discord group or something, and start actually working on improving the pages - rather than this just being one of those complaints on how Rationalists Never Actually Tried To Win, which a lot of people upvote and nod along with, and which is quickly forgotten without any actual action.

(Yes, I'm deliberately leaving this hanging here without taking the next action myself; partly because I'm not an expert Wikipedia editor, partly because I figured that if no one else is willing to take the next action, then I'm much more pessimistic about this initiative.)

↑ comment by gwern · 2024-07-19T22:03:53.635Z · LW(p) · GW(p)

I'm pretty sure my LessWrong posts have gotten more than 1000 hits across my entire life (and keep in mind that "hits" is different from "an actual human actually reads the article"), but fair enough - Wikipedia pages do get a lot of views.

Wikipedia pageviews punch above their weight. First, your pageviews probably do drop off rapidly enough that it is possible that a WP day = lifetime. People just don't go back and reread most old LW links. I mean, look at the submission rate - there's like a dozen a day or something. (I don't even read most LW submissions these days.) While WP traffic is extremely durable: 'Expected value' will be pulling in 1.7k hits/day (or more) likely practically forever.
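For scale, here is the rough arithmetic behind that claim, using the 1.7k hits/day figure quoted above and assuming the rate simply holds steady:

$$1{,}700\ \tfrac{\text{hits}}{\text{day}} \times 365\ \tfrac{\text{days}}{\text{year}} = 620{,}500\ \tfrac{\text{hits}}{\text{year}}$$

A single day of that one page (1,700 hits) already exceeds the 1,000-hit lifetime figure mentioned in the parent comment.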

Second, the quality is distinct. A Wikipedia article is an authoritative reference which is universally consulted and trusted. That 1.7k excludes all access via the APIs AFAIK, and things like readers who read the snippets in Google Search. If you Google the phrase 'expected value', you may not even click through to WP because you just read the searchbox snippet:

About

In probability theory, the expected value is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of the possible values a random variable can take, weighted by the probability of those outcomes.

This includes machine learning. Every LLM is trained very heavily on Wikipedia; any given LW page, on the other hand, may well not make the cut, either because it's too recent to show up in the old datasets everyone starts with like The Pile, or because it gets filtered out for bad reasons, or they just don't train on enough tokens. And there is life beyond LLMs in ML (hard to believe these days, but I am told ML researchers still exist who do other things), and WP articles will be in those, as part of the network or WikiData etc. A LW post will not.

Then you have the impact of WP. As anyone who's edited niche topics for years can tell you, WP articles are where everyone starts, and you can see the traces for decades afterwards. Hallgren mentions David Gerard, and Roko's Basilisk is a good example of that - it is the one thing "everyone knows" about LessWrong, and it is due almost solely to Wikipedia. The hit count on the 'LessWrong' WP article will never, ever reflect that.

But editing WP is difficult even without a Gerard, because of the ambient deletionists. An example: you may have seen recently going around (even on MR) a Wikipedia link about the interesting topic of 'disappearing polymorphs'. It is a fascinating chemistry topic, but on Gwern.net, I did not link to it, but to a particular revision of another article. Why? Because an editor, Smokefoot, butchered it after I drew attention to it on social media prior to the current burst of attention. (Far from the first time - this is one of the hazards of highlighting any Wikipedia article.) We can thank Yitzilitt & Cosmia Nebula for since writing a new 'Disappearing polymorph' article which can stand up to Smokefoot's butchering; it is almost certainly the case that it took them 100x, if not 1000x, more time & effort to write that than it took Smokefoot to delete the original material. (On WP, when dealing with a deletionist, it is worse than "Brandolini's law" - we should be so lucky that it only took 10x the effort...)

↑ comment by Cosmia_Nebula · 2024-07-22T00:53:59.426Z · LW(p) · GW(p)

Finally somepony noticed my efforts!

Concurring with the sentiment, I have realized that nothing I write is going to be as well-read as Wikipedia, so I have stopped trying to keep a personal blog and devoted myself to writing Wikipedia instead.

I will comment on a few things:

- I really want to get the neural scaling law page working with some synthesis and updated data, but currently there is no good theoretical synthesis. Wikipedia isn't good for just a giant spreadsheet.

- I wrote most of the GAN page, the Diffusion Model page, Mixture of Experts, etc. I also wrote a few sections of LLM and keep the giant table updated for each frontier model. I am somewhat puzzled by the fact that it seems I am the only pony who thought of this. There are thousands of ML personal blogs, all in the Celestia-forsaken wasteland of not getting read, and then there is Wikipedia... but nopony is writing there? Well, I guess my cutie mark is in Wikipedia editing.

- The GAN page and the Diffusion Model page were Tirek-level bad. They read like somepony paraphrased about 10 news reports. There was barely a single equation, and that was years after GAN and DM had proved their worth! So I fired the Orbital ~~Friendship~~ Mathematical Cannon. I thought that if I'm not going to write another blog, then Wikipedia has to be on the same level as a good blog, so I set my goal at the level of Lilian Weng's blog, and a lack of mathematics is definitely bad.

- I fought a bitter edit war on Artificial intelligence in mathematics with an agent of Discord [deletionist] and seem to have lost, but a brief moment is captured in the Internet Archive... like tears in the rain. I can only say like Galois... "On jugera [Posterity will judge]".

- My headcanon is that Smokefoot is a member of BloodClan.

↑ comment by gwern · 2024-07-22T20:33:28.158Z · LW(p) · GW(p)

The GAN page and the Diffusion Model page were Tirek-level bad. They read like somepony paraphrased about 10 news reports. There was barely a single equation, and that was years after GAN and DM had proved their worth

Yes, but WP deletionists only permit news reports, because those are secondary sources. You have to write these articles with primary sources, but they hate those; see one of their favorite jargons, WP:PRIMARY. (Weng's blog, ironically, might make the cut as a secondary source, despite containing pretty much just paraphrases or quotes from primary sources, but only because she's an OA exec.) Which is a big part of why the DL articles all suck because there just aren't many good secondary or tertiary sources like encyclopedias. (Well, there's the Schmidhuber Scholarpedia articles in some cases, but aside from being outdated, it's, well, Schmidhuber.) There is no GAN textbook I know of which is worthwhile, and I doubt ever will be.

↑ comment by Cosmia_Nebula · 2024-07-23T15:04:50.039Z · LW(p) · GW(p)

there's the Schmidhuber Scholarpedia articles in some cases, but aside from being outdated, it's, well, Schmidhuber.

I hate Schmidhuber with a passion because I can smell everything he touches on Wikipedia and they are always terrible.

Sometimes when I read pages about AI, I see things that almost certainly came from him, or one of his fans. I struggle to describe exactly what Schmidhuber's kind of writing conveys, but perhaps this will suffice: "People never give the right credit to anything. Everything of importance is either published by my research group first but miscredited to someone later, or something like that. Deep Learning? It's done not by Hinton, but Amari, but not Amari, but by Ivakhnenko. The more obscure the originator, the better, because it reveals how bad people are at credit assignment -- if they were better at it, the real originators would not have been so obscure."

For example, LSTM really did originate with Schmidhuber... and, actually, it is also credited to Schmidhuber (...or maybe Hochreiter?). But then, according to him, GANs should also be credited to Schmidhuber, and so should Transformers. He (or his fans) keeps trying to put the phrase "internal spotlights of attention" into the Transformer page, and I keep removing it. He wanted the credit so much that he resorted to argument-by-punning: renaming "fast weight programmers" to "linear transformers", and quoting "internal spotlights of attention" out of context, just to fortify the argument with a pun! I can do puns too! Rosenblatt (1962) even wrote about "back-propagating errors" in an MLP with a hidden layer. So what?

I actually took Schmidhuber's claim seriously and carefully rewrote the article on Ivakhnenko's Group method of data handling, giving all the mathematical details, so that readers could evaluate the method for themselves instead of relying on Schmidhuber's claim. A few months later, someone manually reverted everything I wrote! What does it read like now, according to a partisan of Ivakhnenko?

The development of GMDH consists of a synthesis of ideas from different areas of science: the cybernetic concept of "black box" and the principle of successive genetic selection of pairwise features, Godel's incompleteness theorems and the Gabor's principle of "freedom of decisions choice", and the Beer's principle of external additions. GMDH is the original method for solving problems for structural-parametric identification of models for experimental data under uncertainty... Since 1989 the new algorithms (AC, OCC, PF) for non-parametric modeling of fuzzy objects and SLP for expert systems were developed and investigated. Present stage of GMDH development can be described as blossom out of deep learning neuronets and parallel inductive algorithms for multiprocessor computers.

Well, excuse me: "Godel's incompleteness theorems"? "the original method"? Also, I thought "fuzzy" had stopped being fashionable in the 1980s. I actually once tried to learn fuzzy logic and gave up after failing to see what the big deal was. It is filled with such pompous and self-important terminology, as if the lack of substance must be made up for by the heights of spiritual exhortation. Why say "combined" when they could say "consists of a synthesis of ideas from different areas of science"?

As a side note, such turgid prose, filled with long noun phrases, is pretty common in Soviet writing. I once read that this kind of massive noun phrase had a political purpose, but I don't remember what it was.

↑ comment by Cosmia_Nebula · 2024-11-05T01:37:15.179Z

Edit: I found it. It's from Yurchak, Alexei. "Soviet hegemony of form: Everything was forever, until it was no more." Comparative Studies in Society and History 45.3 (2003): 480-510.

The following examples are taken from a 1977 leading article, "The Ideological Conviction of the Soviet Person" (Ideinost' sovetskogo cheloveka, Pravda, July 1, 1977). For considerations of space, I will limit this analysis to two generative principles of block-writing: the principle of complex modification and that of complex nominalization. The first sentence in the Pravda text reads: "The high level of social consciousness of the toilers of our country, their richest collective experience and political reason, manifest themselves with an exceptional completeness in the days of the all-people discussion of the draft of the Constitution of the USSR." I have italicized phrases that are nouns with complex modifiers that function as "building blocks" of ideological discourse.

"the high level of consciousness of the toilers," the double-modifier "high level" conveys not only the claim that the Soviet toilers' consciousness exists (to be high it must exist), but also that it can be measured comparatively, by different "levels." The latter claim masks the former one, thereby making it harder to question directly...

during the 1960s and 1970s... nominal structures increased and new long nominal chains were created. This increased the circularity of ideological discourse. In the excerpt from the same 1977 leading article, the italicized phrase (which in English translation is broken into two parts) is a block of multiple nominals: "The spiritual image of the fighter and creator of the citizen of the developed socialist society reveals itself to the world in all its greatness and beauty both in the chiseled lines of the outstanding document of the contemporary times, and in the living existence, in the everyday reality of the communist construction (I v chekannykh strokakh vydaiushchegosia dokumenta sovremennosti, i v zhivoi deistvitel'nosti, v povsednevnykh budniakh kommunisticheskogo stroitel'stva raskryvaetsia pered mirom vo vsem velichii i krasote dukhovnyi obraz bortsa i sozidatelia, grazhdanina razvitogo sotsialisticheskogo obshchestva)."

nominals allow one to render ideological claims implicit, masking them behind other ideas, and therefore rendering them less subject to scrutiny or multiple interpretations. This nominal chain can be deconstructed into several corresponding verbal phrases, each containing one idea: "the citizen of the developed socialist society is a fighter and creator," "the fighter and creator possesses a spiritual image," "the spiritual image is great and beautiful," etcetera. Converting these verbal phrases into one nominal phrase converts claims into presuppositions, presenting ideas as pre-established facts.

the 1970s discourse was special: its sentences contained particularly long nominal chains and only one verb, often simply a copula, with the sole purpose of turning these long chains of nominals into a sentence. This style created a notoriously "wooden" sound, giving ideological discourse its popular slang name, "oak language" (dubovyi iazyk).

↑ comment by Closed Limelike Curves · 2024-07-26T03:34:15.211Z

Yes, but WP deletionists only permit news reports, because those are secondary sources. You have to write these articles with primary sources, but they hate those; see one of their favorite jargons, WP:PRIMARY.

Aren't most of the sources going to be journal articles? Academic papers are definitely fair game for citations (and generally make up most citations on Wikipedia).

↑ comment by ProgramCrafter (programcrafter) · 2024-07-20T17:17:21.870Z

What I'd now want to see is more people actually coordinating to do something about it - set up a Telegram or Discord group or something, and start actually working on improving the pages - rather than this just being one of those complaints on how Rationalists Never Actually Tried To Win, which a lot of people upvote and nod along with, and which is quickly forgotten without any actual action.

So mote it be. I can start the group/server and do moderation (though not 24/7, of course). Whoever is reading this: please choose between Telegram and Discord with an inline react.

The moderation style I currently use is "reign of terror": off-topic messages are deleted immediately, and after large discussions, messages that carry little information are deleted (even if someone has replied to them).

I've created a couple of prediction markets:

Will I manage group for improvement of Wikipedia-related articles

Will LessWrong have book review on some newly-added source to Wikipedia rationality-related article

↑ comment by ProgramCrafter (programcrafter) · 2024-07-23T08:29:30.628Z

I have created a Discord server, "Decision Articles Treatment": https://discord.gg/P7m63mAP.

@the gears to ascension @Olli Järviniemi @DusanDNesic: I'm not sure whether your reacts create notifications, so I'm pinging you manually.

↑ comment by Closed Limelike Curves · 2024-07-26T03:15:54.479Z

@ProgramCrafter The link is broken; it has probably expired.

↑ comment by Closed Limelike Curves · 2024-07-26T18:08:09.897Z

Permanent link that won't expire here. @the gears to ascension @Olli Järviniemi

↑ comment by Jonas Hallgren · 2024-07-19T07:46:44.657Z

Well, it seems like this story might have something to do with it? https://www.lesswrong.com/posts/3XNinGkqrHn93dwhY/reliable-sources-the-story-of-david-gerard

I don't know to what extent, though; otherwise, I agree with you.

↑ comment by Closed Limelike Curves · 2024-07-26T03:17:55.497Z

I think it's unrelated; David Gerard is mean to rationalists and spends lots of time editing articles about LW/ACX, but doesn't torch articles about math. These articles are bad because people haven't put much effort into them.

↑ comment by Viliam · 2024-07-20T18:28:03.653Z

Ah, in a parallel universe without David Gerard, the obvious next step would be to create a WikiProject Rationality. In this universe, this probably wouldn't end well? Coordination outside Wikipedia also risks accusations of brigading or something.

↑ comment by Closed Limelike Curves · 2024-07-26T03:22:16.150Z

In this universe it would end just fine! Go ahead and start one. Looks like someone else is creating a Discord.

Brigading would be if you called attention to one particular article's talk page and told people "Hey, go make this particular edit to this article."

↑ comment by MondSemmel · 2024-07-21T18:04:31.199Z

Eh, wasn't Arbital meant to be that, or something like it? Anyway, due to network effects I don't see how any new wiki-like project could ever reasonably compete with Wikipedia.

↑ comment by Closed Limelike Curves · 2024-07-26T03:18:37.021Z

I think Arbital was supposed to do that, but basically what you said.

↑ comment by Closed Limelike Curves · 2024-07-26T18:07:58.577Z

https://discord.gg/skNZzaAjsC

↑ comment by metachirality · 2024-07-19T07:04:06.764Z

I think the thing that actually makes people more rational is thinking of these ideas as principles you can apply to your own life rather than as abstract notions, which is hard to communicate in a Wikipedia page about Dutch books.

↑ comment by Closed Limelike Curves · 2024-07-26T03:16:11.928Z

Sure, but you gotta start somewhere, and a Wikipedia article would help.