2022 AI Alignment Course: 5→37% working on AI safety

post by Dewi (dewi) · 2024-06-21T17:45:33.881Z · LW · GW · 3 comments

Introduction

In 2022, we ran the second iteration of the AI Safety Fundamentals: Alignment course. The curriculum was designed by Richard Ngo, and the course was run by Jamie and me. The course’s objectives were to teach participants about AI alignment, motivate them to work in the field, and help them find opportunities to contribute. We received 450 applications and made 342 offers to join the course, at a cost of £440 per student.

This blog post analyses the career trajectories of the course participants, comparing how many were working on AI safety when they started the course vs how many are working on AI safety today.

Impact

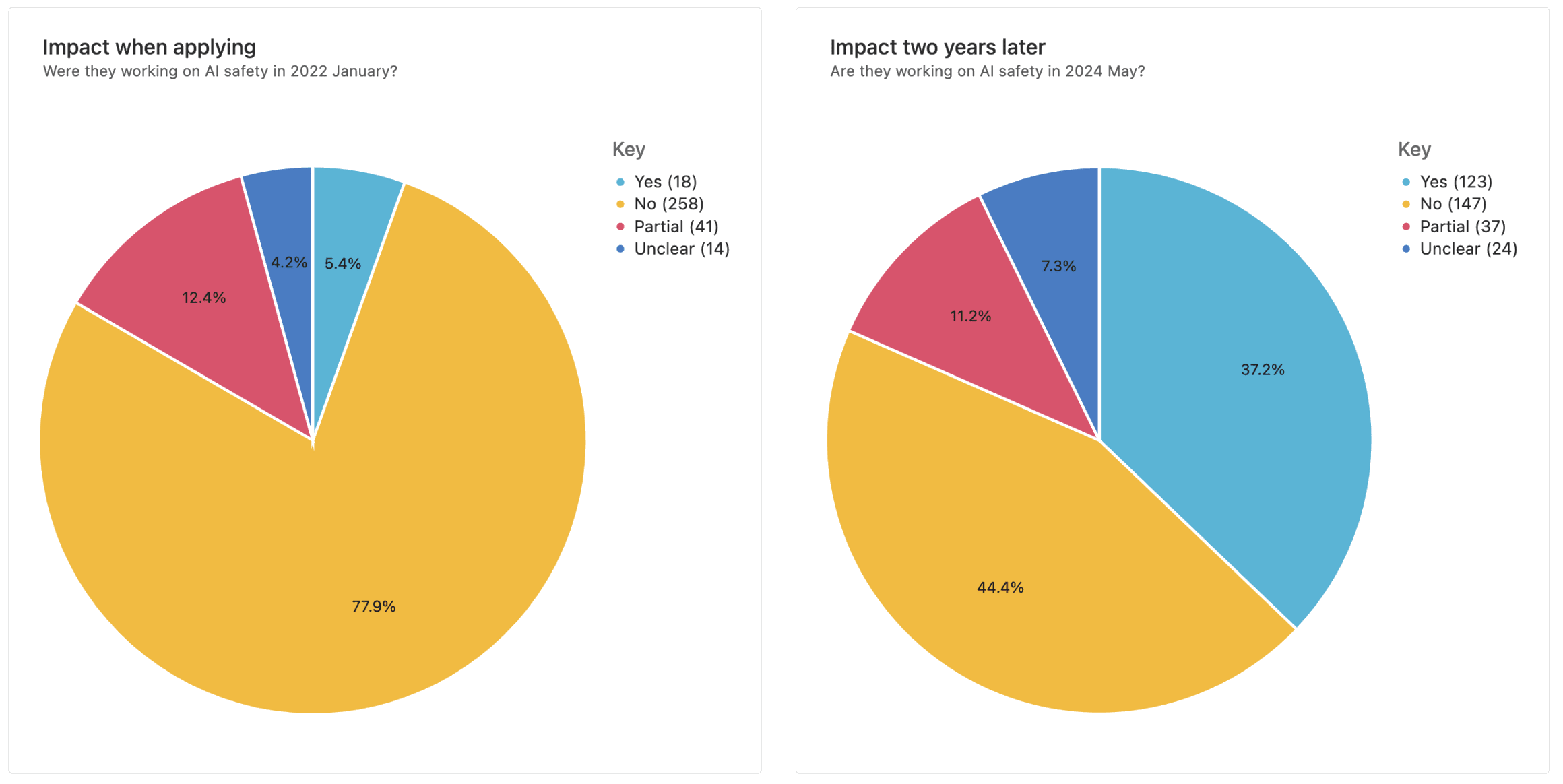

In the course’s application form, we asked participants for their CV / LinkedIn profile, their experience in AI safety, and their career plans. By evaluating each individual using this information, we estimate only 18 participants (5% of 342) were working on AI safety when we accepted them onto the course.

To determine the number of course alumni working on AI safety today, we looked into each individual’s online presence (LinkedIn, personal website, blog posts, etc.) for signs of AI safety-motivated work, and we sent out an alumni survey in January 2024 to learn more about their post-course actions. Our research suggests 123 alumni (37% of 342) work ~full-time on AI safety today, an increase of 105 from the initial 18.

Of the 105, 20 expressed no interest in pursuing a career in AI safety in their initial application. They either intended to pursue a different career or had not done any career planning. We compared their plans from January 2022 with the actions they’ve taken since then, and we evaluated their responses to the alumni survey. Based on this, we believe most of the 20 would not be working in AI safety today had they not participated in the course.

The other 85 expressed a desire to work in AI safety and had tentative plans for how they’d enter the field, but weren’t yet active contributors. Their responses to our alumni survey describe how the course accelerated their impact by helping them find impactful roles sooner and by giving them the tools to have a greater impact in those roles.
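The headcounts in this section can be sanity-checked with a few lines of arithmetic. The sketch below uses only the figures quoted above; the variable and function names are illustrative, and the denominator of 342 is taken from the post's "of 342" phrasing. Note that straight division gives roughly 5.3% and 36.0%. The post reports 37% for the latter, which may reflect a rounding or denominator choice that is not stated here.

```python
# Figures quoted in the post; the names are illustrative, not from the post.
OFFERS = 342                # offers made to join the course
WORKING_AT_START = 18       # estimated to be working on AI safety when accepted
WORKING_TODAY = 123         # estimated to work ~full-time on AI safety today
NO_PRIOR_INTEREST = 20      # new entrants with no stated AI safety career interest
PRIOR_INTEREST = 85         # new entrants who already intended to enter the field


def share_of_offers(count: int, total: int = OFFERS) -> float:
    """Return count as a percentage of total."""
    return 100 * count / total


# Net increase in people working on AI safety.
new_entrants = WORKING_TODAY - WORKING_AT_START

# The two subgroups described in the post should account for the whole increase.
subgroups_match = (NO_PRIOR_INTEREST + PRIOR_INTEREST) == new_entrants

print(f"At start: {share_of_offers(WORKING_AT_START):.1f}% of offers")
print(f"Today:    {share_of_offers(WORKING_TODAY):.1f}% of offers")
print(f"Increase: {new_entrants} people; subgroups add up: {subgroups_match}")
```

The check confirms that the 20 and 85 subgroups sum to the reported increase of 105.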

Now vs then

Our courses today are a major improvement over the course in 2022.

In 2022, the curriculum was a static list of resources and discussion plans were just a few open-ended prompts.

We now provide much higher-quality learning experiences via compelling and coherent course narratives, challenging pre-session exercises, and engaging session activities that drive towards our learning objectives.

In 2022, we had no idea if people were doing the readings or who attended each session. Some facilitators would let us know who attended via a post-session form, but it was a clunky process and was ignored most of the time.

Now, participants tick off and give feedback on each reading in our course platform, and we track session attendance via an easy two-click login process. We adapt the curriculum on an ongoing basis based on feedback, and we disband low-attendance cohorts so participants are always in cohorts with engaged peers. For the 2022 course we can only guess at attendance and engagement; for current courses we can measure both exactly.

In 2022, we struggled to recruit enough facilitators to match the demand and quality of our participants. We sent last-minute messages to people in our network asking if they were interested, or had any recommendations. This was a significant barrier to growth.

We now recruit Teaching Fellows who facilitate 5-10 courses each and work 0.5-1 FTE. This has enabled us to scale the courses while improving discussion quality. Teaching Fellows are evaluated via a mock facilitated discussion before they’re selected, and their facilitation skills improve further through the specialised training we give them and consistent practice while in the role.

In 2022, evaluating applications was 2 weeks of work for two team members, and running the course logistics was a further 3 months full-time for one team member. We had limited time to run events, improve the learning experience, or provide tailored support for the most engaged participants.

Today, most operational tasks are automated, including evaluating applicants against a set of criteria, sending onboarding emails, collecting people’s time availability, creating cohorts, and enabling participants to switch cohorts if their availability changes. Running a course’s logistics takes ~0.2 FTE, and we can now prioritise creating excellent experiences.

Conclusion

The 2022 AI alignment course has 123 graduates who are now working ~full-time on AI safety (37% of the cohort), up from 18 participants at the start of the course. Based on their 2024 survey responses and their 2022 career plans, we believe most of the 20 who initially expressed no interest in an AI safety career would not be working on AI safety had they not joined the course. We also accelerated the impact of the other 85 participants by helping them find impactful opportunities and perform better in those roles.

Today, we're delivering better learning experiences at a greater scale, and there are far more opportunities for graduates to have an impact in the AI safety space. Therefore, we expect our current work to be even more impactful, and we’re excited to continue supporting ambitious, driven, altruistic individuals towards impactful careers.

3 comments


comment by habryka (habryka4) · 2024-06-21T17:53:46.591Z · LW(p) · GW(p)

The title seems... confused? There is no way that "5% of people had no impact" and "37% of people now have a substantial impact". The post seems to suggest that what you actually mean is "X% of people now self-identify as working on AI safety". IMO the title should reflect that.

Also, equating "works on AI safety" with "has an impact" seems pretty misguided, since there are tons of ways to have an impact without doing the kind of work I assume you would classify as working on AI safety.

(I overall like the course, but the language in this post doesn't really provide much evidence given its markety bent, which makes it hard for me to trust.)

↑ comment by Alex_Altair · 2024-06-21T21:20:23.641Z · LW(p) · GW(p)

Agreed the title is confusing. I assumed it meant that some metric was 5% for last year's course, and 37% for this year's course. I think I would just nix numbers from the title altogether.

↑ comment by Dewi (dewi) · 2024-06-22T16:45:07.913Z · LW(p) · GW(p)

Thanks for this feedback! I've updated the title wording from "___ having an impact" to "___ working on AI safety".