Charitable Reads of Anti-AGI-X-Risk Arguments, Part 1

post by sstich · 2022-10-05T05:03:43.262Z


Prompted by the AI Worldview Prize, I want to try to understand the arguments against existential misalignment risk with as open a mind as possible. I decided the best way to start would be to summarize anti-x-risk arguments, and evaluate them charitably. I’m starting with what I think is the most accessible "folk argument," which is simply that:

The Future is Unpredictable

The short form of this argument is:

- Humans are really bad at predicting the future 10+ years out.

- We’re particularly bad at predicting specific future scenarios; we’re better at making general comments. (Specific scenario: “A humanoid robot will do your laundry.” General comment: “Robots will do more tasks in the future.”)

- Therefore we should assign very low probability to any specific scenario that we can imagine about the future, including AGI x-risk scenarios like paperclip maximization. The unlikelihood of these scenarios makes misalignment x-risk unlikely.

I find the premises of this argument more convincing than the conclusion. I think it’s true that humans are bad at predicting the long-range future, and that we’re especially bad at predicting specific scenarios. For overviews, see Luke Muehlhauser’s explanation of why it’s hard to score historical attempts at long-range prediction, Holden Karnofsky’s summary of a study of the long-range forecasts of the “Big Three” science fiction authors, and Dan Luu’s informal evaluation of futurist predictions. Luu is pessimistic about our ability to predict the long-range future and grades previous forecasts as very inaccurate; Karnofsky is optimistic and grades prior forecasts as reasonably accurate. I recommend that interested readers look at the raw files of predictions and judge their accuracy for themselves; both Luu and Karnofsky provide sources.

I think it’s useful to distinguish between “general comments” about the future and “specific scenarios.” Many of the predictions considered by Karnofsky and Luu are general comments about the future, such as the idea that computers will foster human creativity or that we’ll think more about waste and pollution in 2019 than we did in 1983 (both from Asimov, in the Karnofsky study). I think these are akin to present-day comments like “artificial intelligence will have important economic consequences” or “we’ll care more about AGI alignment in 2058 than we do in 2022,” which may be true but are far removed from specific x-risk concerns. When considering present-day concerns like paperclip maximization or power-seeking AI, which lay out specific scenarios as future possibilities, I think the right reference class consists of similarly specific scenario predictions from the past.

And unfortunately, I think our record here is quite poor. Asimov predicted that by 2020 or earlier we’d see “road building factories,” settlements on the moon, nuclear fusion reactors as a source of practically unlimited energy, radioisotope-powered home appliances, etc. Clarke imagined flying cars, brains connected to remote limbs by radio, the ability to control your dreams like a “Hollywood director,” etc. It’s boring and unproductive to mock these predictions, and I don’t like criticizing people from the past who were brave enough to make falsifiable forecasts about the future. But I do think that “specific scenario” predictions are the right reference class for many AGI misalignment predictions, and I do think it’s relevant that most specific scenario predictions from the past were badly wrong. It should make us very skeptical about our ability to forecast specific scenarios in the future.

So much for the argument’s premises. What about its conclusion? Does knowing that humans are bad at specific scenario prediction mean that we should think AGI misalignment x-risk is low? Not necessarily. I do think that knowing this should make us skeptical that something isomorphic to paperclip maximization will occur (and similarly for any other specific misalignment x-risk scenario). But that doesn’t necessarily mean that the broad class of existential AGI risks has small probability in aggregate. There are lots of potential misalignment risks, and we shouldn’t take our inability to forecast exactly the kind of disaster we’ll face as evidence that there’s no risk of disaster. I think an apt analogy is natural resource shortages. Famously, many of the specific scenarios that people predicted in the past were wrong: Ehrlich was wrong about food shortages, Hubbert was wrong about peak oil, etc. However, this doesn’t mean that natural resource shortages are a non-issue; Nauru really did run out of phosphate, and Britain really did have severe shipbuilding timber shortages. Furthermore, the main reason major natural resource shortages didn’t occur is that clever humans predicted them in advance and invented solutions (e.g. the Haber-Bosch process and the Green Revolution for food shortages). In this sense, failed predictions about natural resource shortages aren’t evidence that the shortages were non-issues; if anything, they’re evidence that we can sometimes solve these very real problems when clever humans foresee them and act in advance.
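The aggregation point can be made concrete with a toy calculation. This is only an illustrative sketch: the numbers are hypothetical, not estimates of any real risk, and the helper `prob_any` (a name introduced here for illustration) assumes the scenarios are independent. If they were instead mutually exclusive, their probabilities would simply add, which gives an even larger total.

```python
import math


def prob_any(probs):
    """Probability that at least one of several scenarios occurs.

    Assumes independence purely for illustration; real risk scenarios
    may be correlated or mutually exclusive.
    """
    # P(no scenario occurs) is the product of each scenario's complement.
    p_none = math.prod(1.0 - p for p in probs)
    return 1.0 - p_none


# Hypothetical numbers: 20 distinct failure scenarios, each judged only 1% likely.
scenarios = [0.01] * 20
print(f"most likely single scenario: {max(scenarios):.2f}")  # 0.01
print(f"at least one scenario:       {prob_any(scenarios):.3f}")  # 0.182
```

Even though no single scenario clears 1%, the chance that at least one of them happens is roughly 18%, which is the sense in which skepticism about each specific story need not imply a small aggregate risk.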

4 comments


comment by Viliam · 2022-10-08T20:01:33.795Z

Not sure if anyone made a review of this, but it seems to me that if you compare the past visions of the future, such as science fiction stories, we have less of everything except for computers. We do not have teleports or cheap transport to other planets, we have not colonized the other planets, we do not have flying cars, and we cannot magically cure all diseases and make people younger.

But we have smartphones = internet and phone and camera in your pocket. Even better than the communicators in Star Trek. Especially the internet part; imagine how different some stories could be if everything that a hero sees could be photographed and immediately uploaded to social networks.

Artificial intelligence is probably the only computer-related sci-fi thing that we do not have yet.

So it is not obvious in my opinion in which direction to update. I am tempted to say that computers evolve faster than everything else, so we should expect AI soon. On the other hand, maybe the fact that AI is currently an exception in this trend means that it will continue to be the exception. No strong opinion on this; just saying that "we are behind the predictions" does not in general apply to computer-related things.

reply by sstich · 2022-10-08T23:05:18.219Z

> Not sure if anyone made a review of this, but it seems to me that if you compare the past visions of the future, such as science fiction stories, we have less of everything except for computers.

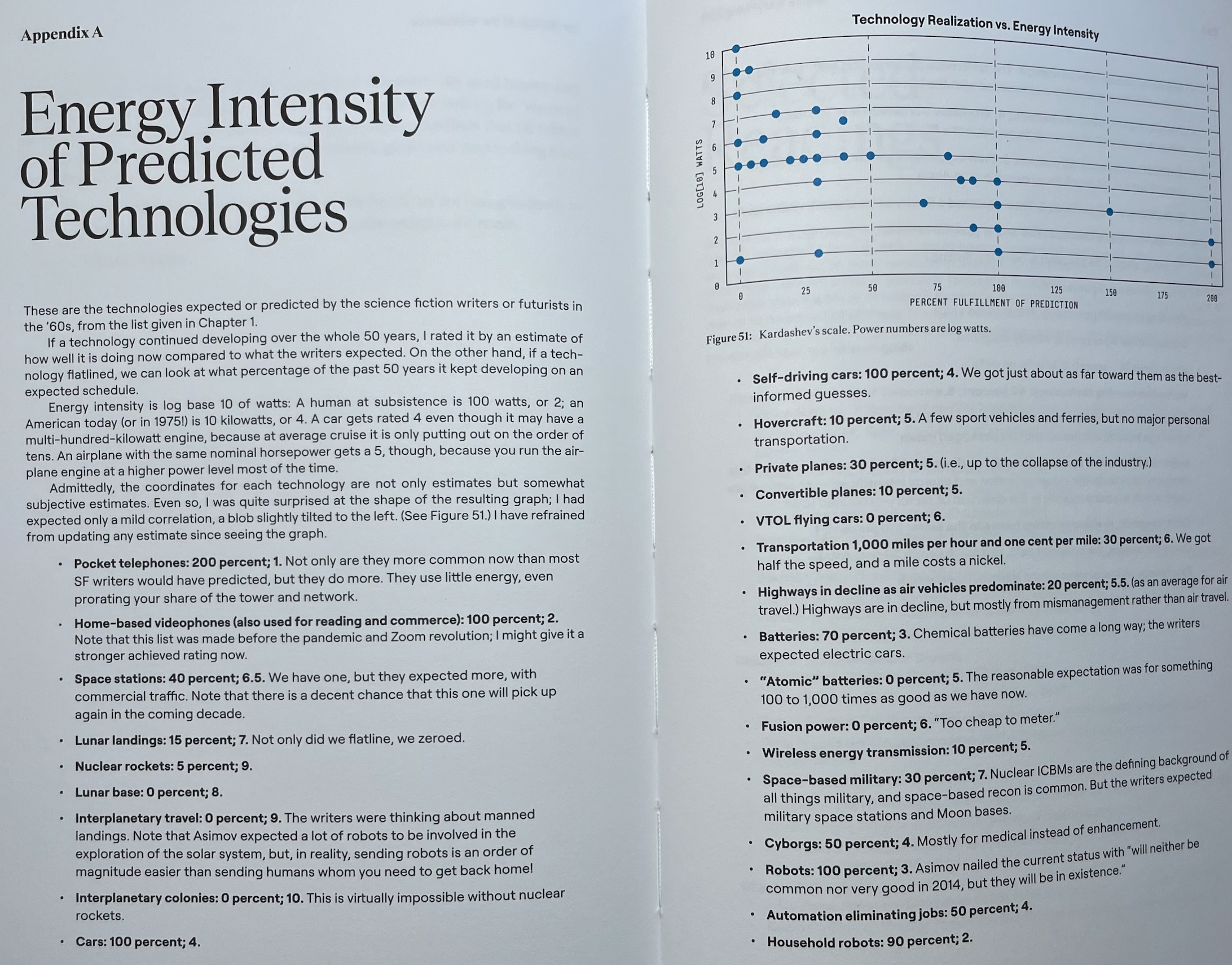

Yes! J. Storrs Hall did an (admittedly subjective) study of this, and argues that the probability of a futurist prediction from the 1960s being right decreases logarithmically with the energy intensity required to achieve it. This is from Appendix A in "Where Is My Flying Car?"

> So it is not obvious in my opinion in which direction to update. I am tempted to say that computers evolve faster than everything else, so we should expect AI soon. On the other hand, maybe the fact that AI is currently an exception in this trend means that it will continue to be the exception. No strong opinion on this; just saying that "we are behind the predictions" does not in general apply to computer-related things.

I think this is a good point. At some point, I should put together a reference class of computer-specific futurist predictions from the past for comparison to the overall corpus.

comment by zeshen · 2022-10-07T14:14:10.643Z

I thought this was a reasonable view and I'm puzzled by the downvotes. But I'm also confused by the conclusion: are you arguing about whether the x-risk from AGI is predictable or not? Or is the post just meant to give examples of the merits of both arguments?

reply by sstich · 2022-10-08T01:14:00.062Z

Thanks, but I'm not arguing for this position; I just want to understand the anti-AGI-x-risk arguments as well as I can. I think success would look like me being able to state all the arguments as strongly and coherently as their proponents would.