Introducing EffiSciences’ AI Safety Unit

post by WCargo (Wcargo), Charbel-Raphaël (charbel-raphael-segerie), Florent_Berthet · 2023-06-30T07:44:56.948Z

This post was written by Léo Dana, Charbel-Raphaël Ségerie, and Florent Berthet, with the help of Siméon Campos, Quentin Didier, Jérémy Andréoletti, Anouk Hannot, Angélina Gentaz, and Tom David.

In this post, you will learn which of EffiSciences’ field-building activities were the most successful, along with our advice, reflections, and takeaways for field-builders. We also include our roadmap for the next year. Voilà.

What is EffiSciences?

EffiSciences is a non-profit based in France whose mission is to mobilize scientific research to overcome the most pressing issues of the century and ensure a desirable future for generations to come.

EffiSciences was founded in January 2022 and is now a team of ~20 volunteers and 4 employees.

At the moment, we are focusing on 3 topics: AI Safety, biorisks, and climate change. In the rest of this post, we will only present our AI safety unit and its results.

TL;DR: In one year, EffiSciences created and held several AIS bootcamps (ML4Good), taught accredited courses in universities, organized hackathons and talks in France’s top research universities. We reached 700 students, 30 of whom are already orienting their careers into AIS research or field building. Our impact was found to come as much from kickstarting students as from upskilling them. And we are in a good position to become an important stakeholder in French universities on those key topics.

| Field-building programs | TL;DR of the content | Results |
|---|---|---|
| Machine Learning for Good bootcamp (ML4G) | Parts of the MLAB and AGISF condensed in a 10-day bootcamp (very intense) | 2 ML4G, 36 participants, 16 are now highly involved. This program was reproduced in Switzerland and Germany with the help of EffiSciences. |
| Turing Seminar | AGISF-adapted accredited course that has been taught with talks, workshops, and exercises. | 3 courses in France’s top 3 universities: 40 students attended, 5 are now looking to upskill, and 2 will teach the course next year. |
| AIS Training Days | The Turing Seminar compressed in a single day (new format) | 3 iterations, 45 students |
| EffiSciences’ educational hackathons | A hackathon to introduce robustness to distribution change and goal misgeneralization. | 2 hackathons, 150 students, 3 are now highly involved |
| Apart Research’s hackathons | We hosted several Apart Research hackathons, mostly with people already onboarded | 4 hackathons hosted, 3 prizes won by EffiSciences’ teams |
| Talks | Introductions to AI risks | 250 students were reached, ~10 are still in contact with us |
| Lovelace program | Self-study groups on AIS | 4 groups of 5 people each, which did not work well for upskilling. |

In order to assess the effectiveness of our programs, we estimated how many people have become highly engaged thanks to each program, using a single metric that we call “counterfactual full-time equivalent”. This is our estimate of how many full-time equivalents these people will devote to AI safety in the coming months that they would not have devoted without us. Note that some of these programs have instrumental value that is not reflected in the following numbers.

| Activity | Number of events | Counterfactual full-time equivalent (FTE) | FTE per occurrence |
|---|---|---|---|
| Founding the AI safety unit (founders & volunteers)[1] | 1 | 6.0 | 6.0 |
| French ML4Good bootcamp[2] | 2 | 7.4 | 3.7 |
| Word-of-mouth outreach | 1 | 2.9 | 2.9 |
| Training Day | 2 | 1.9 | 1.0 |
| Hackathon | 4 | 2.5 | 0.6 |
| Turing Seminars (AGISF adaptations) | 3 | 1.3 | 0.4 |
| Research groups in uni | 4 | 0.4 | 0.1 |
| Frid'AI (coworking on Fridays) | 5 | 0.5 | 0.1 |
| Talks | 5 | 0.1 | 0.0 |
| Total | 30 | 23.0 | |

How to read this table? We organized 2 French ML4Good events, which significantly influenced the careers of people adding up to 7.4 full-time equivalents. This means that, on average, each ML4Good event led to 3.7 full-time equivalents of new work on AI safety.

In total, those numbers aggregate 43 highly engaged people, i.e. people who have been convinced by the problem and are working on solving it through upskilling, writing blog posts, facilitating AIS courses, doing AIS internships, attending SERI MATS, doing policy work in various organizations, etc. The time these 43 people spend working adds up to 23 full-time equivalents.
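To make the per-occurrence column concrete, here is a minimal Python sketch that recomputes it as the total counterfactual full-time equivalent divided by the number of events, using the figures from the table above. The function name `fte_per_occurrence` is hypothetical, and the table itself appears to round each value to one decimal place.

```python
# Figures copied from the counterfactual full-time-equivalent table above:
# (activity, number of events, total counterfactual full-time equivalent)
ACTIVITIES = [
    ("Founding the AI safety unit", 1, 6.0),
    ("French ML4Good bootcamp", 2, 7.4),
    ("Word-of-mouth outreach", 1, 2.9),
    ("Training Day", 2, 1.9),
    ("Hackathon", 4, 2.5),
    ("Turing Seminars", 3, 1.3),
    ("Research groups in uni", 4, 0.4),
    ("Frid'AI", 5, 0.5),
    ("Talks", 5, 0.1),
]


def fte_per_occurrence(events: int, total_fte: float) -> float:
    """Hypothetical helper: average full-time equivalent attributable to one occurrence."""
    if events <= 0:
        raise ValueError("events must be positive")
    return total_fte / events


for name, events, total in ACTIVITIES:
    # Printed with two decimals; the table rounds to one.
    print(f"{name:<30} {fte_per_occurrence(events, total):.2f}")

# Sum of the full-time-equivalent column across all activities.
total_fte = sum(total for _, _, total in ACTIVITIES)
print(f"Total counterfactual FTE: {total_fte:.1f}")
```

With these inputs, the ML4Good row gives 7.4 / 2 = 3.7, matching the table, and the column sums to 23.0.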

AI Unit

As a field-building group, EffiSciences’ AI Unit has 2 major goals for AIS:

- Teach, orient and help talents get into AIS: we believe that France, as well as other European countries, has a lot of neglected potential for recruiting promising AI safety researchers and policymakers because most of its top talents have not yet learned about the most dangerous AI risks.

- Promote AI safety research among French students and in French academia: AI ethics already has academic approval, enabling professors to easily teach and get support for their research. In the same way, we believe that AI safety’s most scientifically grounded work can obtain a similar approval, which would enable teaching and researching in the best institutions. This would make the cost of entering the field much lower since one wouldn’t need to choose between academia and AI safety.

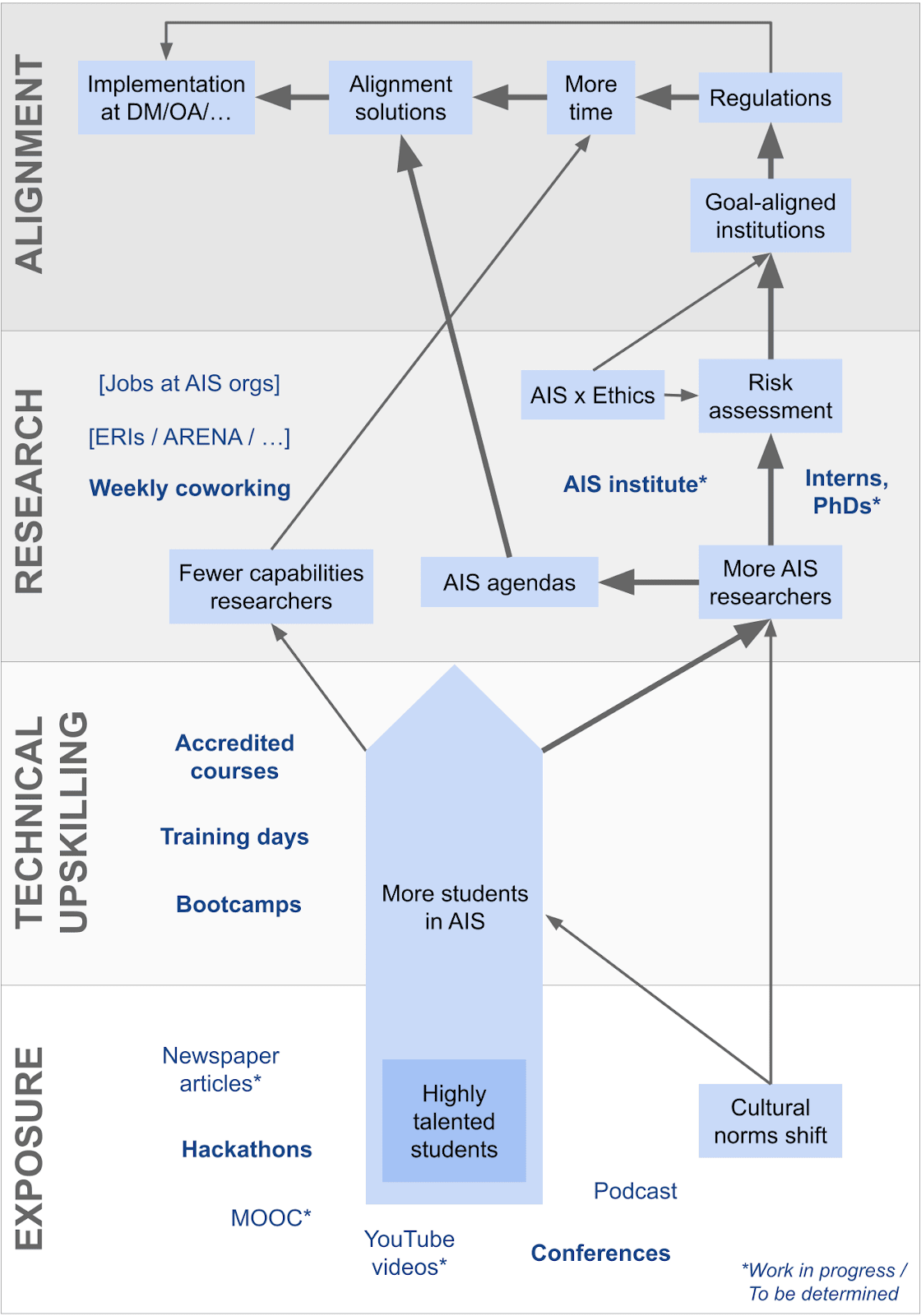

Here is our theory of change as of June 2023:

The ExTRA pipeline: Exposure, Technical upskilling, Research and Alignment. Bolded terms activities are our core activities.

So far, we have mostly focused on the first two steps of our theory of change: Exposure and Technical upskilling. We also aim to have a more direct influence on Research by taking interns and opening research positions. For the final steps, especially promoting concrete measures in AI governance, we intend to hand over to other organizations and keep our own focus on research for the time being.

General takeaways

We now present our thoughts after a year of field-building.

Observations

- Kickstarting matters at least as much as upskilling: In our experience, a lot of the value of our activities, especially bootcamps and internships, comes from kickstarting attendees in their AI safety trajectory. This is important to make them understand that they could start doing meaningful work within 2 years.

- AI Safety courses in academia are possible: We ran accredited courses in 3 different top universities. We think that this is very important to make a serious case for AI risks. Having taught the course in these prestigious universities and having been able to interest students makes a strong case for AI safety research in academia. If you have someone who can teach, sending a couple of emails to investigate the possibility of running a course or seminar could be worth a try. We are currently creating a textbook and sharing our content to help other university groups organize AIS courses in academia like we did.

Advice

- Optimize for a very small number of people: Because the AIS infrastructure is bottlenecked by the limited number of opportunities for internships and/or PhD positions, people need to be extremely motivated and talented to overcome the many difficult steps required to go into AIS. We thus recommend keeping in mind that your impact is likely to come from a very small number of people, who are ex-ante recognizable because they’re often exceptionally bright and/or exceptionally motivated.

- Understand the importance of your own upskilling as a field-builder: To successfully run events and be relevant, you will have to upskill yourself and create your own path, progressively building up your level to engage in more and more ambitious events and upskilling efforts. Leveling up is really important - people will listen to you more if you have strong skills. Learn from experienced field-builders by attending their events and talking to them.

- Creating your own pipelines is key: the existing pipelines are clogged, so when we upskill and orient students into AIS pipelines, we find it quite difficult to make them take the final step of actually doing research. This is mostly due to the fact that many of them cannot find a first AIS internship or program to really get into the field. We are working on opening a program offering internships (and grants, pending funding) in France to help address this issue.

- Outreach:

  - After your events, try to involve your new trainees in meaningful projects, such as small research projects or direct involvement, as opposed to unclear upskilling work.

  - Some outreach strategies seem to work better than others. A good one, for example, is to arouse students’ curiosity by connecting their interests to high-impact topics: show them that AI safety can be fun, interesting, and important.

  - Being epistemically rigorous pays off in the long run: always tell the truth and reveal your uncertainties.

Activities

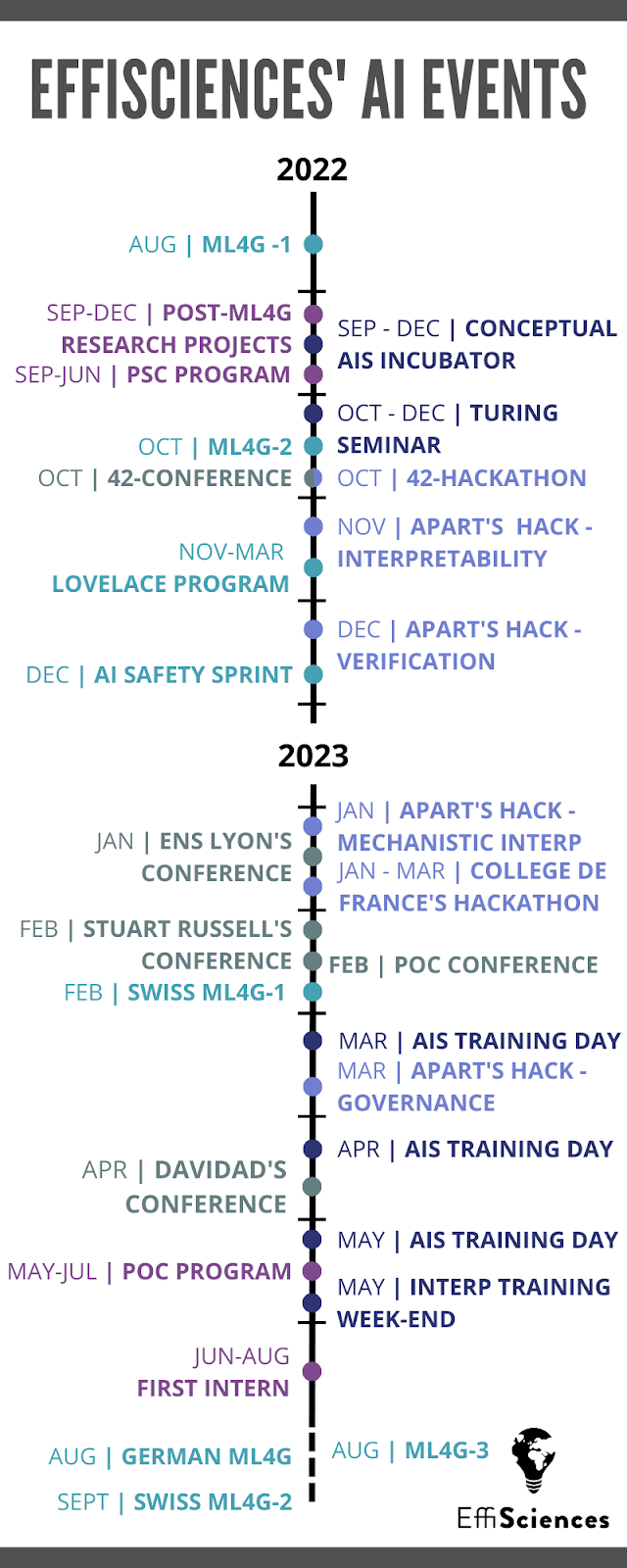

You can find here an almost complete chronology of our events; we will only discuss the ones included in the summary above.

Machine Learning for Good: Our most successful program

- ML4G is a 10-day bootcamp that aims to take motivated people, teach them about technical AI safety, and give them the technical background necessary to start research projects, hackathons, or internships.

- The camp is composed of 2 teachers and 16-20 trainees. We usually rent a lodge in the countryside and spend most of the days working and discussing AIS, as the program is very intense.

- The technical part of the camp draws inspiration from MLAB and takes students from PyTorch basics to implementing a Transformer from scratch and learning the basics of RL and interpretability. These sessions take up ~7h a day at the beginning of the camp, with evenings reserved for a summary of the AGISF program, talks on the AI safety literature, and presentations by ML researchers. Toward the end of the camp, we switch to more literature and small projects for participants.

- Key takeaways: despite the large amount of work needed before and during the camps, we found that they are a good way to help trainees upskill quickly, both practically and theoretically, and to become engaged with the field. We think it is our best program so far: of 36 participants, 16 are now highly involved in the field. It is also a good opportunity to upskill people interested in governance and have them understand some key gears-level mechanisms of AIs.

- Compared to MLAB, which inspired ML4G, our camp is shorter (only 10 days) and much cheaper (~900€ per participant). We kept it short because we think that most of the impact comes from launching people into AI safety rather than teaching them a ton of technical ML, and we prefer running other study groups and hackathons afterward to keep the upskilling going.

- We think it’s worth organizing camps in English and opening them to candidates from other countries: our upcoming bootcamps target international candidates, and we are impressed by the level of the people who applied.

- Launching ML4G in other countries: We are helping organize an ML4G in Germany with Yannick Mühlhäuser, Evander Hammer, and Nia Gardner. We have also helped LAIA (Lausanne AI Alignment group), a spinoff of our first ML4G, organize another ML4G in February, and they will launch a new camp in September. We plan to help launch the camp in other countries whose communities are already close to AI safety but do not necessarily have the infrastructure to find the most promising people, such as Italy and India.

- If you like this kind of bootcamp and want to organize one, consider reaching out to us here. We'll be happy to help you organize one in your country by providing (handsome) teachers, programs, notebooks, logistical advice, and much more!

Turing Seminar: Academic AI Safety course

- We draw inspiration from the AGISF curriculum and from many interpretability and miscellaneous resources, and turn them into an academic course that we teach in the MVA Master, the most prestigious program for AI in France, as well as at ENS Paris-Saclay and ENS Ulm (2 of France’s top 4 research universities). Many of the students, especially at MVA, will become top AI researchers. The course consists of ten 2-hour sessions with ~1,001 slides crafted by our main teacher Charbel, starting with a summary of state-of-the-art capabilities, followed by a presentation of AI threat models, and ending with interpretability in vision models and transformers. We also collaborated with other researchers to propose a final exam on AIS in their own course.

- Key takeaways: This seminar hasn’t been a very efficient way of engaging people, in part because many top students are looking for internships in France and can’t find any that are relevant to AI safety. However, it shows that topics such as mechanistic interpretability are scientifically mature enough to be taught. We also think the seminar has strong instrumental value because it strengthens our credibility and our relationships with the universities, which will allow us to collaborate more with them in the future. Empirically, even though the course is academic, it is really important to be very interactive: for some sessions, organize a 10-minute discussion between participants, and add exercises at the end of each part of the lesson.

AIS training day: the 80/20 of the Turing Seminar

- We organized training days in which we summarized the Turing Seminar in just one day, with fewer technicalities. This intense series of talks aims to teach the basics of why we think that AIS is an important problem, with examples of technical problems and solutions. Even though the amount and density of information are high, we still try to make the talks interactive to encourage reflection, and include many exercises to help participants assimilate the ideas. The slides of the talks can be found here (in French) and are available on YouTube (in French).

- Key takeaways: we are optimistic that this format is an efficient and cheap way to learn a lot, and a good filter for the ML4Good bootcamps. The event cost only 100 euros for food, but it requires preparing the talks and workshops. We now intend to organize these training days regularly.

EffiSciences’ educational hackathons:

- This year, EffiSciences organized 2 hackathons: we first organized a hackathon in October 2022 with 42 AI on robustness under distribution shift that gathered ~150 students and featured talks by Rohin Shah and Stuart Armstrong. We then organized a second remote hackathon at Collège de France on the same topic that lasted for 2 months and was also part of an AI course assignment. If you want to organize your own hackathon, we can help you with a ready-to-use subject.

- Key takeaways: Since these hackathons were among our first big events, we think that we spent too much time and money (~12k€) for the results obtained, and could have done much better. New hackathons are not our main priority right now, but may become valuable once other events reach diminishing returns. If we had to do it again, we would instead organize a thinkathon, enabling people to engage deeply with the risks of this technology instead of spending their time on PyTorch. Still, due to their considerable scale, these hackathons contributed to our credibility in the French ecosystem, and a good number of people and institutions heard of us through them.

Hosting Apart’s hackathons:

- This year, EffiSciences hosted 4 of Apart’s hackathons: interpretability, verification, mechanistic interpretability, and governance. Our teams won 3 prizes (1st and 4th on the Interpretability one, 2nd on the Governance one), but no proposal has been finalized and published yet.

- Key takeaways: Apart’s hackathons were an excellent way for students who had already learned about AI risks and upskilled to start doing research in just 2 days; we are very thankful for Apart’s work. We recommend running this type of event, as they are very cheap to organize (we just needed to book a room in a university; Apart paid for the food).

Talks:

- EffiSciences hosted several talks for both students and researchers to promote AIS research:

  - For students, the talks were based on Rohin Shah’s talks, adjusted to be more friendly to ML beginners, and included an explanation of some existing research to give a taste of the field (which is usually how people get interested in it). We also hosted a Q&A with Nate Soares.

  - For researchers, we organized talks with Stuart Russell and Davidad. They gathered senior researchers from academia and enabled collaborations.

- Key takeaways: talks to make students interested in AIS were not very successful because of the absence of a fast feedback loop. One-to-one contact seems necessary to engage people. However, talks may be instrumentally useful as a way to get known by some schools/institutions and later organize other events.

Lovelace study groups:

- In November 2022, we launched 4 cohorts of 5 students each to work on AI safety projects. Most students had attended an ML4G before. This environment was created to have weekly discussions about AIS, and shaped such that everyone would be accountable for their work, as a way to decrease the mentoring needed.

- Unfortunately, this process did not work well for a number of reasons:

  - The groups held remotely had fewer interactions and had trouble scheduling meetings, and they soon stopped having them.

  - Once on their own, some participants lost their agency and soon gave up due to a lack of time and motivation.

  - The cohorts were left autonomous but could ask for advice and help. In practice, they did not ask, which worsened the previous points.

- Key takeaways: For similar projects, we would recommend having a curriculum of what participants should at least do or read each week, encouraging them to hold weekly meetings, and mentoring them at least every 3 weeks. Weekly IRL meetings seem to work well: for the past 2 months, we have been gathering every Friday at ENS Ulm (we call these the Frid’AIs). Each session consists of a talk followed by a discussion, and then we co-work. We started with only a couple of people, and now ~15 people come each week.

Roadmap for next year

Create research and internship opportunities:

- As we drive more students into AIS research, we cannot rely solely on existing opportunities like SERI MATS. We want to open internship opportunities next year at EffiSciences to help students take their first steps into research, and allow them to apply elsewhere afterward (labs, PhDs…). We already have one research intern.

- We are also looking into launching an AIS research lab in Paris given that there is an opportunity to do so in a top university.

- More broadly, we’re working toward an institutionalization of AI Safety in France: fostering research positions in public institutions, encouraging private and public funding for research projects, etc.

International bootcamps:

- As presented above, we wish to scale up our ML4G bootcamp in other countries to find the best students and kickstart their AIS careers. We offer to give the technical resources and methodology to other AIS groups that would like to reproduce this camp.

Strengthening our bonds with French academia:

- In France, AI Ethics research is more academically anchored than AI Safety research. But these overlapping fields both address the various risks presented by AI, and their complementarity suggests a need for unified research and collaboration if we want to progress towards safe and responsible AI.

- We thus wish to meet researchers and create opportunities for them to learn about the AIS field, allowing them to adapt their research agendas towards safety-relevant work easily if they want to.

Diving into AI governance:

- We plan to devote more resources to AI governance in the coming year. We think that technical people may be well-suited for work in technical AI governance (e.g. policy research in compute, forecasting of AI developments à la Epoch, thinking about safety benchmarks, etc.).

- We wish to replicate some of our AI technical activities in their AI governance version. We will organize training days on AI governance. We also aim to extend our outreach to schools that train for careers in political science and tech policy. We will foster more governance projects at the end of future bootcamps.

- Finally, EffiSciences aims to help bridge the gap between AI safety research and the policy-making ecosystems.

Talking to the media

- Since the Overton window on the risks of AI has expanded, French media outlets regularly publish articles and op-eds on AI. We think we’re in a good position to be a key contributor in addressing AI risks in France (as we are the only ones who have taught academic courses on those topics in France) and to be able to make appearances in the media in this regard. EffiSciences has already had the opportunity to participate in several French media outlets to discuss our overall approach, but we have not yet had the chance to delve into it with a focus on AI risks specifically. A significant part of cultural change and raising awareness can be achieved through this channel, and that's why we will strive to use it wisely in the future.

Conclusion

We are all pretty happy and proud of this year of field-building in France. We made a lot of progress, but there is so much more to be done.

Don’t hesitate to reach out: if you’d like to launch similar work, we would love to support you or share our experience. And if you have feedback or suggestions for us, we’d love to hear them!

We are currently fundraising, so if you like what we do and want to support us, please get in touch using this email.

[1] We mean that creating the AI Unit has enabled its founders to engage in AIS, and we count the work they would not have done otherwise.

[2] The actual numbers would be higher if we included the fact that we also help other groups launch their own ML4G in their country (e.g. LAIA in Switzerland).
