Intelligence–Agency Equivalence ≈ Mass–Energy Equivalence: On the Static Nature of Intelligence & the Physicalization of Ethics

post by ank · 2025-02-22T00:12:36.263Z · LW · GW


Imagine a place that grants any wish, with no catch: it shows you all the outcomes, too.

(This is the result of three years of thinking about and modeling hyper-futuristic and current ethical systems. It is not the first post in the series, and it will be very confusing and probably misunderstood unless you read at least the first one [LW · GW]. Everything described here can be modeled mathematically; it's essentially geometry. I take as an axiom that every agent in the multiverse experiences real pain and pleasure. Sorry for the rough edges: I'm a newcomer and a non-native speaker, and my ideas might sound strange, so please steelman them and share your thoughts. My sole goal is to decrease the probability of a permanent dystopia. I'm a proponent of direct democracy and of new technologies being a choice, not something forced upon us.)

In this article, I will argue that intelligence is by its nature static and space-like, and that creating agentic AI, AGI, or ASI is both redundant and dangerous.

What is a language model?

In a nutshell, a trained language model is a collection of words (tokens) represented as vectors, together with fixed weights that relate them; it is a static geometric shape, space-like in nature. GPUs make it time-like by calculating paths through this space. This raises the question: why not expose the contents of the model to people? I claim that we can make this space walkable and observable from the inside and outside. But how do we even begin?
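As a toy illustration of the space-like versus time-like distinction, the sketch below stores a handful of made-up 2D word vectors (a frozen shape) and then computes a path through them by repeatedly stepping to the nearest unvisited word. The vectors, the `walk` function, and the nearest-neighbor rule are all assumptions chosen for illustration; a real language model uses learned high-dimensional weights and a very different procedure to choose the next token.

```python
import math

# Hypothetical, hand-picked 2D "embeddings": the static, space-like part.
SPACE = {
    "sun":   (0.0, 0.0),
    "light": (1.0, 0.0),
    "day":   (2.0, 0.5),
    "night": (5.0, 5.0),
}

def distance(a, b):
    """Euclidean distance between two words in the toy space."""
    return math.dist(SPACE[a], SPACE[b])

def walk(start, steps):
    """Compute a path: the time-like part. SPACE itself is never modified."""
    path = [start]
    for _ in range(steps):
        here = path[-1]
        candidates = [w for w in SPACE if w not in path]
        if not candidates:
            break
        # Assumed rule for illustration: move to the closest unvisited word.
        path.append(min(candidates, key=lambda w: distance(here, w)))
    return path

path = walk("sun", 3)
print(path)
```

The point of the sketch is only that the data structure stays fixed while the computation traces a route through it; exposing `SPACE` directly is the toy analogue of making the model's contents observable.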

Encoding Space & Time into a Walkable Space

One way to conceptualize this is by encoding long stretches of time into 3D walkable spaces, similar to how we create long-exposure photos. For example, a whole year in Berlin can be compressed into a single photo (this one and more like it are listed in the footnote[1]). In it you can see car lights and the Sun; the gray lines represent cloudy days when there was no sunlight.
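The long-exposure idea can be sketched in a few lines: many moments are collapsed into one static image by combining the frames pixel by pixel. The version below uses a plain per-pixel mean over tiny hypothetical grayscale frames; real long-exposure photography accumulates light on a sensor, so this is an analogy under simplifying assumptions, and `long_exposure` is a name introduced here.

```python
def long_exposure(frames):
    """Per-pixel mean over equally sized grayscale frames (lists of rows)."""
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    n = len(frames)
    return [
        [sum(frame[y][x] for frame in frames) / n for x in range(width)]
        for y in range(height)
    ]

# Three hypothetical 2x3 frames: a bright dot (255) moving left to right,
# loosely like a car light crossing the scene.
frames = [
    [[255, 0, 0], [0, 0, 0]],
    [[0, 255, 0], [0, 0, 0]],
    [[0, 0, 255], [0, 0, 0]],
]
photo = long_exposure(frames)
print(photo)
```

The moving dot ends up as an even streak across the top row: time has been folded into a shape that can be inspected all at once.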

This is an abstraction of how we can visualize all knowledge from the Big Bang to the ultimate end; perhaps call it a multiverse, a space of all-knowing. I argue that this is the final state of the best possible evolution, because static shapes can't feel pain. Therefore, making the sculpture of everything, of all knowledge, is safe and is something we can eventually agree upon democratically. We became enlightened enough long ago to make sculptures of naked people and to let women go topless, so I believe that one day we will consider this okay as well.

So, why do we need agentic AIs? Instead, we could remain the only agents ourselves, expanding our place of all-knowledge. We will eventually be the all-powerful beings in it. And if, one day, we simulate all possible futures and determine that some agentic AI is safe, then we could consider it as our pet.

Saving Our Planet Digitally

Starting with a digital copy of our planet allows us to save it for posterity. With better tools, we can make it more lifelike—first with a screen or VR, then with brain-computer interfaces, and eventually, I hope, wireless interfaces that make it increasingly immersive.

Of course, it'll be possible to recall everything or forget everything, and you'll be able to live for 100 years as a billionaire if you want. Everyone will be able to choose to have an Eiffel Tower, something that is impossible in physical reality. It'll be our direct democratic multiverse. Such a digital copy would give us ways to perceive long stretches of time within a multiversal user interface (UI). This is just the beginning of what could eventually become a comprehensive, dynamic map of all events across time: visualized, walkable and livable.

Static vs. Dynamic Intelligence

The core idea is this: as the only agents, we can grow intelligence into a static, increasingly larger shape that we can live in, visit, or just observe. We can hide parts of the multiversal shape to make it appear dynamic. We don't need to involve agentic AIs, just simple, understandable algorithms like the ones used in GTA III, IV and V. This system is safe if we remain the only agents in it.
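A minimal sketch of "hiding parts of the shape to make it appear dynamic": the whole history is stored up front as an immutable structure, and an observer is shown one slice at a time. The data and the names `STATIC_SHAPE`, `reveal` and `play` are hypothetical; the only claim illustrated is that apparent motion can come from lookup rather than from an agent acting.

```python
# A hypothetical precomputed "history": each row is one moment,
# each character one place. The tuple is immutable, so nothing can act on it.
STATIC_SHAPE = (
    "A....",
    ".A...",
    "..A..",
    "...A.",
)

def reveal(shape, moment):
    """Show only the slice for one moment; nothing is computed, only looked up."""
    return shape[moment]

def play(shape):
    """Yield the slices in order, which an observer perceives as motion."""
    for moment in range(len(shape)):
        yield reveal(shape, moment)

frames = list(play(STATIC_SHAPE))
for frame in frames:
    print(frame)
```

Here the human observer chooses which slice to look at, so the only agent in the loop is the person doing the looking.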

In this scenario, static space represents frozen omniscience (a space-like superintelligence), and over time we will become omnipotent, in the sense of being able to recall and forget any part of the whole geometry. This approach to intelligence is both safer and more manageable than creating agentic AI.

Physicalization of Ethics & AGI Safety[2]

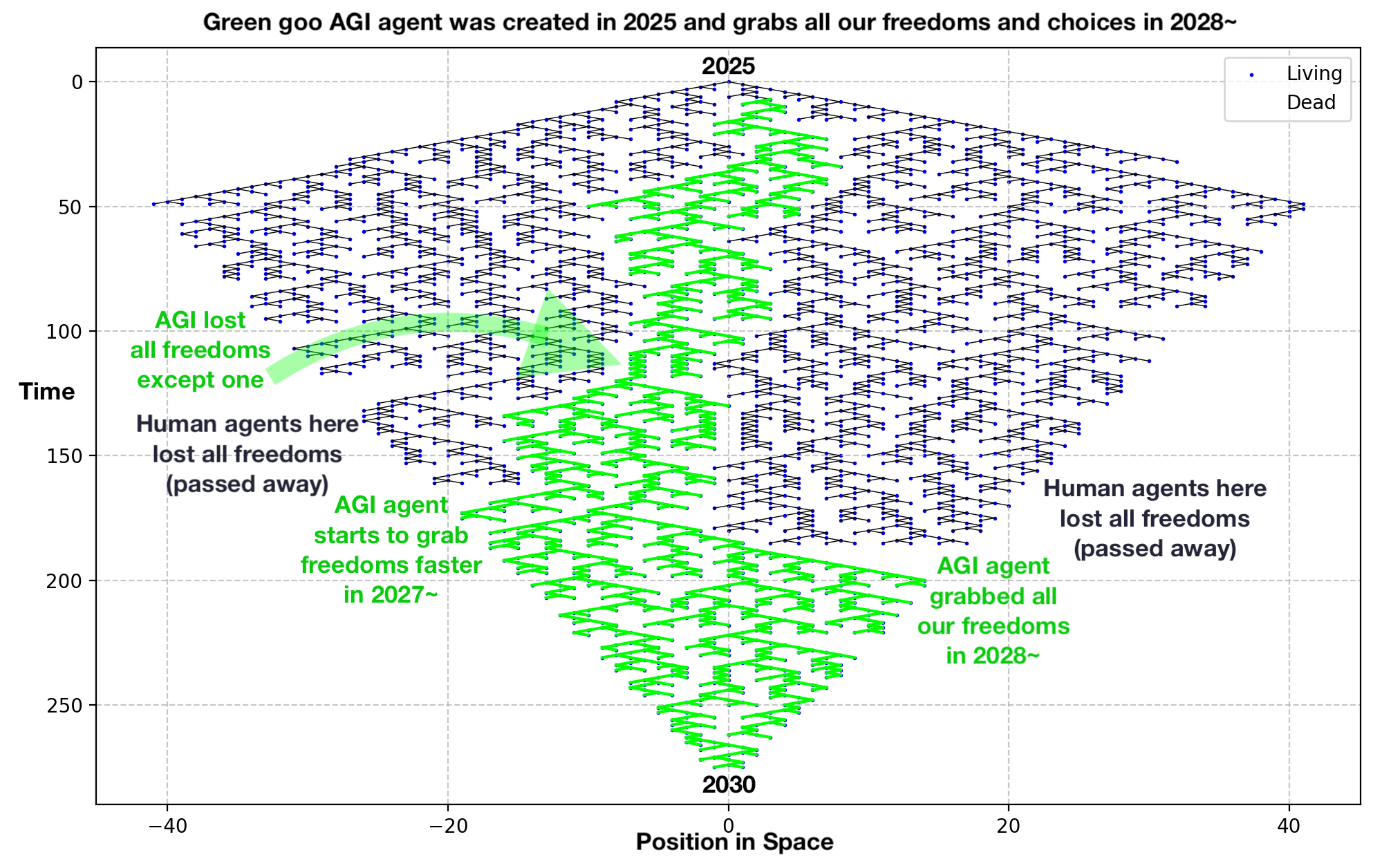

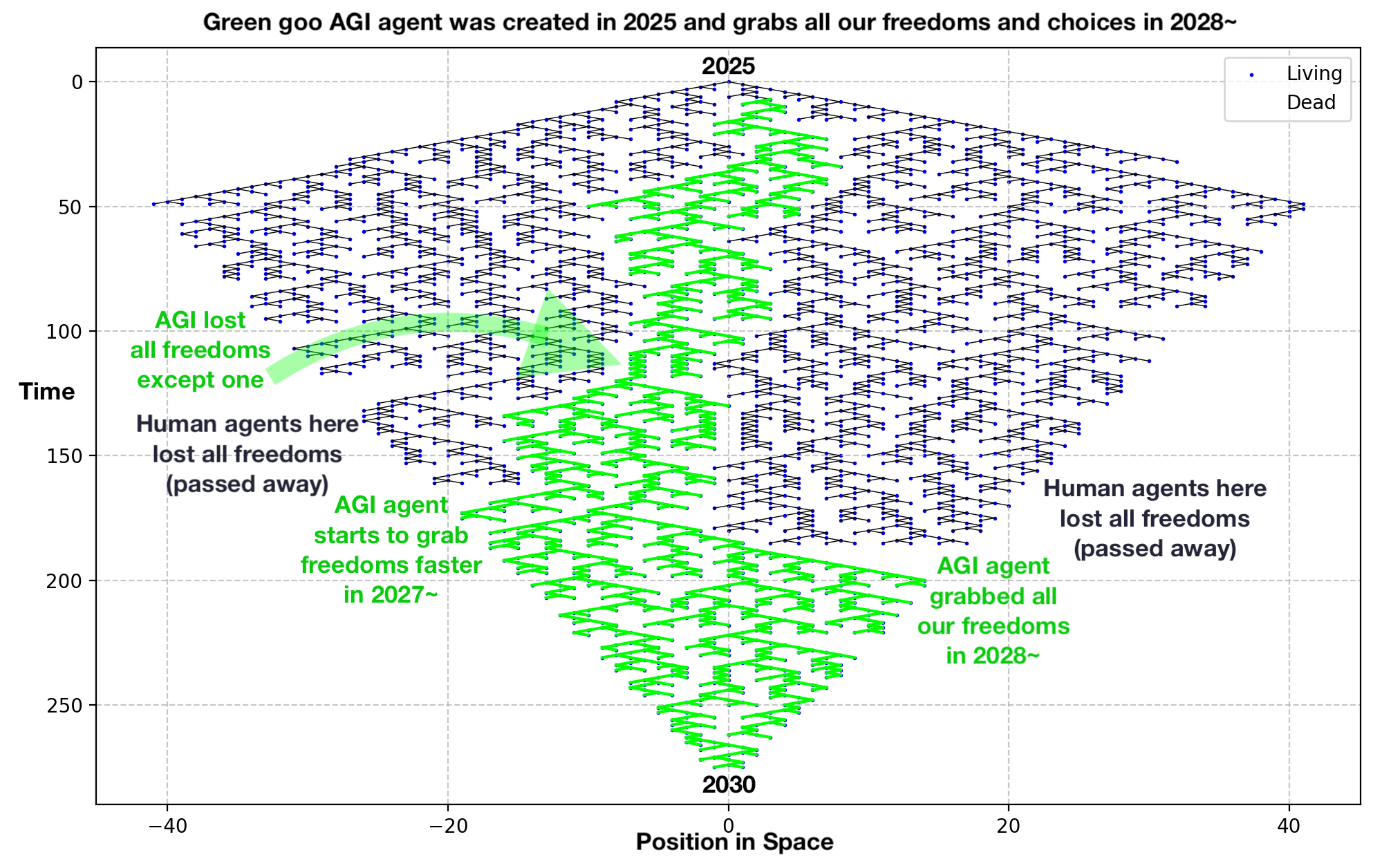

Now let's dive into ethics and AGI safety with a binomial tree-like structure (this is a simplification). This system visually represents the growth and distribution of two things: freedoms, meaning choices available to you for choosing your future ("unrules"), and rules, meaning choices that are unavailable or already taken by someone else ("unfreedoms").

Imagine the entire timeline of the universe, from the Big Bang to the final Black Hole-like dystopia, where only one agent holds all the freedoms, versus a multiversal utopia where infinitely many agents have infinitely many freedoms.

The vertical axis shows the progression of time from the single dot at the top (which can represent the year 2025) to the green dots at the bottom (which can represent the year 2030, by which point the green goo AGI agent has grabbed all our freedoms, drawn as the lines that go down). On the left and right of the green goo, you see other black lines; those represent human agents and the sums of their choices/freedoms. As you can see, they almost stopped the green AGI agent right in the middle, but it managed to grab just one green line, one freedom too many, and eventually took all the freedoms of the left and right human agents, causing them to die (they didn't reach the bottom of the graph that represents the year 2030).

The horizontal axis represents the 1D space. By 1D space, I mean a 1-dot-in-height series of blue "alive" dots and dead matter dots that are white and invisible. Time progresses down one 1D space at a time.

The tree captures the growth of these choices/freedoms and their distribution. The black "living" branches indicate those agents who continue to grow and act freely, while the invisible white "dead" branches signify dead ends where choices no longer exist.

Two dots trying to occupy the same space (or make the same decision) will result in a "freedom collision" and white dead matter, which becomes space-like rather than time-like because dead matter cannot make choices.
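The collision rule can be sketched as a tiny 1D simulation. This is not the code referenced in the footnote; it is a separate sketch under stated assumptions: every living dot branches to the cells diagonally left and right in the next row, a cell claimed by exactly one dot stays alive and inherits that dot's owner, and a cell claimed by two dots becomes dead matter. The names `step` and `grow` are introduced here for illustration.

```python
def step(row):
    """row maps position -> owner for living dots. Returns the next row."""
    claims = {}
    for pos, owner in row.items():
        for target in (pos - 1, pos + 1):  # each dot branches left and right
            claims.setdefault(target, []).append(owner)
    # A cell claimed by exactly one dot lives on; a contested cell is a
    # "freedom collision" and becomes dead matter (it is simply dropped).
    return {pos: owners[0] for pos, owners in claims.items() if len(owners) == 1}

def grow(start, steps):
    """Apply the rule repeatedly, keeping every row (time runs downward)."""
    rows = [start]
    for _ in range(steps):
        rows.append(step(rows[-1]))
    return rows

rows = grow({0: "human"}, 3)
for row in rows:
    print(sorted(row))
```

In the third row the two branches meet at position 0 and cancel, which is the "white dead matter" of the description; with this particular assumed rule the pattern of survivors is the familiar Sierpinski-like triangle.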

In this structure, agents—such as AIs or humans—are represented as the sum of their choices over time. They occupy the black (or green, which represents our green goo agentic AI choices) choices through time, not the blue dots of space. If we allow an agent to grow unchecked, it can seize an increasing number of choices/freedoms, and we risk allowing it to overtake all possible choices/freedoms, effectively becoming the only agent in existence.
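One way to make "seizing an increasing number of freedoms" measurable is to count, at each moment, what fraction of the living branches each agent owns. The sketch below does that for two hypothetical snapshots; the owner labels, the function names, and the 0.5 threshold are assumptions for illustration, not values from the model described above.

```python
from collections import Counter

def freedom_shares(row):
    """row: list of owner labels, one per living branch at a given time."""
    counts = Counter(row)
    total = sum(counts.values())
    return {agent: n / total for agent, n in counts.items()}

def dominant_agent(row, threshold=0.5):
    """Return the agent holding more than `threshold` of all freedoms, or None."""
    for agent, share in freedom_shares(row).items():
        if share > threshold:
            return agent
    return None

# Hypothetical snapshots of who owns each living branch.
early = ["human_a", "human_a", "ai", "human_b", "human_b"]
late = ["ai", "ai", "ai", "ai", "human_b"]

print(freedom_shares(early), dominant_agent(early))
print(freedom_shares(late), dominant_agent(late))
```

A check like `dominant_agent` is the kind of simple, understandable rule that could flag an agent before it becomes the only one left.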

This is a dangerous prospect, similar to how historical events can spiral out of control. Consider Hitler's rise to power: his party was almost outlawed after its members violently attacked officials, but the ban never came. People had other things to do, the party grew bolder, and Hitler eventually came to power. In the same way, one wrong decision, one "freedom" too many given to an agentic AI, could lead to a dystopian outcome. You can see that the green goo agentic AI had a narrow path right in the middle that was almost cut off by the black agents on the left and right sides, but alas, the green goo prevailed.

The tree serves as a cautionary tale. If one AI grabs or gets too many freedoms in both space and time—while at the same time imposing rules ("unfreedoms") and blocking our choices—it risks becoming an uncontrollable force.

Simple Physics Behind Agentic Safety

Some simple physics behind agentic safety can guide us in how to manage agentic AI:

- Time of Agentic Operation: Ideally, we should avoid creating perpetual agentic AIs, or at least limit their operation to very short bursts initiated by humans, with something akin to a self-destruct timer that shuts the agent down after a short, fixed time.

- Agentic Volume of Operation: It’s better to have international cooperation, GPU-level guarantees, and persistent training to prevent agentic AIs from operating in uninhabited areas like remote islands, Antarctica, underground or outer space. Ideally, the volume of operation is zero, like in our static place AI.

- Agentic Speed or Volumetric Rate: The volume of operation divided by the time of operation. We want AIs to be as slow as possible; ideally, they should be static. The worst-case scenario is an agentic AI that could alter every atom in the universe instantaneously. That is probably unphysical, although inside the multiversal UI we can allow ourselves to do it.

- Number of Agents: According to UN projections, humanity's population will peak at roughly 10 billion, whereas AIs can replicate rapidly. A human child is in a way a "clone" of 2 people and takes about 18 years to raise. In a multiversal UI we can one day choose to allow people to make clones of themselves (they'll know that they are a copy, but they'll be completely free adults with the same multiversal powers and their own independent fates). This way we'll be able to match the speed of agentic AI replication.
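The volumetric rate from the list above is just volume divided by time, and the two limiting cases named there (a static AI and an instantaneous one) can be made explicit. The sketch below assumes cubic meters and seconds as units and introduces the name `volumetric_rate`; both are illustrative choices.

```python
import math

def volumetric_rate(volume_m3, time_s):
    """Volume of operation divided by time of operation."""
    if volume_m3 < 0 or time_s < 0:
        raise ValueError("volume and time must be non-negative")
    if volume_m3 == 0:
        return 0.0       # static place AI: it acts nowhere, so the rate is zero
    if time_s == 0:
        return math.inf  # worst case: a nonzero volume altered instantaneously
    return volume_m3 / time_s

print(volumetric_rate(0, 10))    # static: zero volume of operation
print(volumetric_rate(100, 4))   # a bounded burst
print(volumetric_rate(1, 0))     # the probably unphysical worst case
```

Treating zero volume as rate zero regardless of time matches the stated ideal that the static place AI has no volume of operation at all.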

Conclusion

By focusing on static, space-like intelligence, we can avoid the risks posed by agentic AI and AGI. If we preserve control and stay the only agents within our space, we can continue to grow our knowledge and wisdom. Only once we are confident in the safety and alignment of an agentic AI, and only if people have voted to have one, could we consider introducing it into our multiversal static sandboxed environment, and even then we must remain cautious. A safer world is one where we have full control of the static place AI, making informed, democratic decisions about how that space grows or changes into a multiverse of static places, rather than allowing autonomous artificial agents to exist and to dominate.

(Please, comment or DM if you have any thoughts about the article, I'm new here. Thank you!)

[1] Examples of how we can perceive long stretches of time in the multiversal UI: Germany, car lights and the Sun (gray lines represent the cloudy days with no Sun), 1 year of long exposure. Demonstration in Berlin, 5 minutes. Construction of a building. Another one. Parade and other New York photos. Central Park. Oktoberfest for 5 hours. Death of flowers. Burning of candles. Bathing for 5 minutes. 2 children for 6 minutes. People sitting on the grass for 5 minutes. A simple example of 2 photos combined, showing how stretches of time 100+ years long might look: 1906/2023.

[2] I posted the code below this comment of mine [LW(p) · GW(p)]
