Reconciling Left and Right, from the Bottom-Up

post by jesseduffield · 2018-11-11T08:36:05.554Z · LW · GW · 2 commentsContents

2 comments

This is a repost of my blog post from my medium account, . I got the idea to write it based on this comment [LW(p) · GW(p)] I left on Vaniver's post 'Public Positions and Private Guts'. This is the first thing I've posted on LessWrong so hopefully it's fitting the guidelines.

I think right now a lot of things are converging in terms of how we conceptualise the processes of the brain. Conscious vs Subconscious, Science vs Art, Abstract vs Concrete, Left vs Right, what if these dichotomies were all about the same thing? I think the real dichotomy happening with all these things is actually a dichotomy between top-down and bottom-up processing.

Top-down processing is how you get from abstract concepts like goals and aspirations to concrete-world implementations, like deciding you want to bake a cake, and then reading a recipe and performing each step of the recipe until you eventually have a cake. Bottom-down processing is how you see a messy complex data set like an image of billions of tiny yellow rocks meeting an oscillating body of blue liquid and instinctively think ‘beach’. Top-down processing is generative: you start from something simple and end up with something complex. Bottom-up is reductive, you start with something complex and you reduce it down to something simple.

To give a word to a pattern you’ve identified is to grant it ascension into the realm of top-down processing, where you can now apply things like logic and deductive reasoning to achieve goals with that abstracted pattern. For example, you might be playing several 1v1 games of age of empires against the same opponent and realise ‘this guy keeps attacking me early in the game’ and then you might come up with a word for that, for example you could say that you’re being ‘rushed’ and now you’ve brought a fairly complicated and messy concept into the clean, manageable world of abstract thought. Now you can use predicate logic, whose utility extends to any set of abstract concepts you want to make statements about, by saying ‘if I get rushed, I need a strategy to counter that’. So you’ve now set a goal, and you can use top-down reasoning to bring that goal closer to the concrete world by thinking ‘well I can make sure I put some watchtowers up early so that I have some defensive units without too much cost to my economy’. Now you can go back up the abstraction ladder and call that defence the ‘tower defence’. So now you know ‘if my opponent wants to rush me, I should use a tower defence’.

If you were actually doing a 2v2 and your teammate didn’t have much experience, you could tell them ‘see how we keep getting attacked early’ (drawing their attention to the messy, complex data of 5 archers, two 2 cavalry and 2 infantry attacking their farms), if we get rushed like that (saying how any data you can ‘liken’ to that data should be denoted as a ‘rush’) just do a tower defence like I’ve done in my base (now drawing their attention to the data of 5 towers you’ve built around your farms, calling the concept a tower defence, and telling them to use that term on anything ‘like’ the tower arrangement they’re looking at). Now it’s all well and good for them to identify a rush, even if there’s no hard rule about what constitutes a rush (is it still a rush if the first attack happens 15 minutes into the game? 20 minutes?), but they’ll probably ask for more info about how to actually generate a tower defence setup, given that it requires them to know what resources they need to put aside etc to afford the towers, and so you tell them that you need to put an extra 2 villagers on wood from the beginning and to build a tower whenever there is enough resources to build one, until you have 5. That recipe can be compressed into just a few bytes of data, because rules for generation live in the abstract world where things are far more likely to be expressible in simple ways than in complex ways.

So the pattern is that data comes in low bandwidth and high bandwidth forms. Humans have some tools like logic and reasoning, but they only work on low-bandwidth data because they themselves are low bandwidth (the statement ‘if X then Y’ only has room for two symbols; X and Y. So to make use of it, we needed to compress our age of empires concepts into X = ‘rush’ and Y = ‘tower defence’). To help us with the need to compress high-bandwidth information into the low-bandwidth realm of abstract reasoning, we use inference to say ‘There is some kind of pattern in all these games we’re playing and the word I’m going to give to that pattern is ‘rush’). This is a very good system for two reasons: firstly because it doesn’t require you to have a generative model for how a rush might happen. You don’t care, you only need to recognise it, and our brains are optimised for recognising when two things share traits, even if we don’t consciously know what those traits are. Secondly, spoken language is severely limited in bandwidth because it’s just hard to rack up kilobytes with plain words (nobody begins a cleanup of their nearly full harddrive by going ‘I should delete some plaintext documents?’, they say ‘I should delete some game of thrones episodes’). So the only verbal communication we are capable of doing is in the abstract. It’s possible that for this reason, language was the main reason why abstract reasoning evolved, rather than the other way around. Language had the constraint of being low-bandwidth, so humans needed more resources devoted to converting between low and high bandwidth information, and given that most of our internal information is high-bandwidth, that meant we needed more resources dedicated to dealing with the compressed, low-bandwidth, abstract stuff.

It’s interesting to note that abstract thought is what we would consider to be ‘conscious thought’ whereas the millions of inferences being made and patterns being identified from our sense data at each moment is deemed ‘subconscious thought’. What is it about abstract, low-bandwidth reasoning that we identify with it more closely than the high-bandwidth reasoning of our subconscious minds. Is it that language’s low-bandwidth constraint permits only abstract things into an inner dialogue, and we infer ‘human-ness’ from dialogue itself, which makes us only capable of inferring human-ness from the low-bandwidth processing going on in our heads rather than the high-bandwidth processing? Is it that our hopes and aspirations are expressible in low-bandwidth terms (e.g. ‘I want to be a rockstar’) so we identify with those aspirations and build our identity around it, rather than the high-bandwidth data we have about our own day to day behaviour which might contradict the low-bandwidth data (e.g. lots of recent memories doing things that were definitely not practicing an instrument).

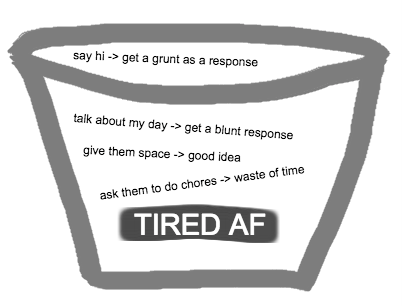

I made a couple of posts recently about identity and in them I modelled identity as a bucket with a label on it (e.g. sleepy) where if you signal to somebody that you’re sleepy (by moping around and sighing a lot) they can access their internal representation of that bucket and pick out some prediction mappings about how you might respond to some of their actions. E.g. one prediction mapping might say ‘If you ask me do any chores, I’m going to say no’. So the person saves face by waiting until a time when you’re not ‘sleepy’ to ask you to do chores. I’m now seeing this model in a new light: You go and signal some traits, which will appear to another person all mixed in at once in a high-bandwidth image of you moping around the house and sighing a lot, and they use bottom-up processing to convert that to the low-bandwidth label of ‘sleepy’, and associated with that low-bandwidth label are some other low-bandwidth rules about which of their actions will provoke positive or negative responses from you. They don’t need to know how to generate a sleepy identity, they only need to know how to recognise it. They don’t even need to know the exact expected outcome of a potential interaction with you e.g. asking you to do chores, all they need to do is extract out from previous experiences the outcome will be good or bad (i.e. convert those messy high-bandwidth experiences to low-bandwidth representations).

People then communicate these identities to eachother because if everybody is on the same page, they can save themselves a lot of grief by picking the right actions in the right circumstances depending on other people’s identities. But the reason why people get so upset if e.g. somebody has an extramarital affair is because they know that an individual obtains the concept of the identity of ‘marriage’ not through knowing how to generate the traits of married-ness, but by inferring what married-ness is like based on extracting those traits out of a heap of examples of people they know. If that individual now has an example of a married person having an affair, the prediction mapping of ‘if married then monogamous’ gets weakened, because the ‘monogamous’ part is not a rule, but the result of an operation acting on day to day exposure to married people. Interestingly, the institution of marriage itself is the result of our culture abstracting the patterns in monogamous mating in humans and then constructing generative rules in the form of social norms that enables the reliable replication of those patterns. When gay marriage debates were going on (and still are in some places) the left was saying ‘I don’t see why you can’t extract marriedness out of a picture of a gay couple, given that it matches on all the traits that I associate with marriedness’ and the right was saying ‘we explicitly made rules about this because the point of marriage is about the union of two souls under god to create new life’. The left was thinking bottom-up, the right was thinking top-down. I’ll touch more on that later.

All these issues over identity are at their core, a result of the interplay between top-down and bottom-up processing, and the computational shortcomings of each. Bottom-up processing can tell you how to recognise things and extract information, but says nothing about what to do with that information. Top-down processing can tell you how to create something, but you can’t create something without knowing what you actually like and what you would like to create, and that requires inference from experiences.

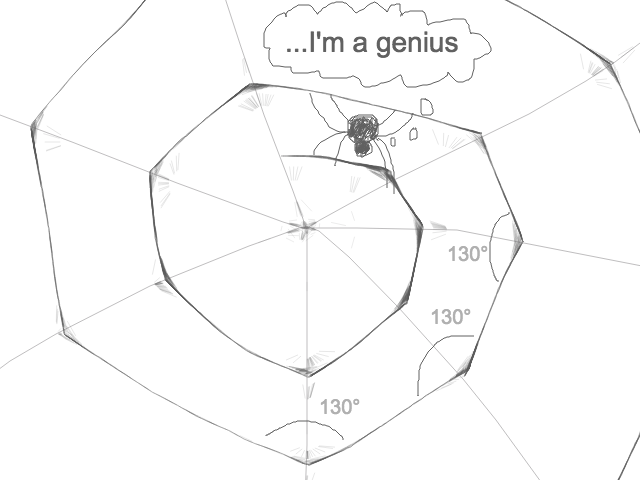

Continuing on the point about bandwidth limitations, it should be no surprise that most structures created by animals are very geometric in nature. If you think about a spider’s web, it’s just a spiral. You might think ‘how can a spider’s brain contain the blueprint for creating a complex web’ and the answer is that it doesn’t contain the blueprint. All you need to create a spiral web is ‘spin a bit of web, pivot a little bit, repeat’. I would bet that the smaller the spider, the more likely that their webs are spirals, and the bigger the spider, the more likely that they are less cleanly geometric, because at that point it takes more generative rules to be stored in the spider’s DNA to produce the desired result. That’s the top-down side of the coin, on the bottom-up side of the coin, it always confused me about how it’s possible for young adolescent me to all of a sudden be really attracted to the image of a naked woman, and for certain features to be more attractive than others. How come if they’re fit they’re more attractive than if they’re overweight? How can I instinctively know these things without being told by anybody else (you can claim that it’s all cultural influence but it’s not like you’re going to pin sexual preferences in spiders on spider culture). I made the conclusion back in highschool that there must be an image of the perfect female body (and perfect male body as well) encoded into human DNA, and that our intuitions about what is more or less attractive is the result of a subconscious comparison to this ideal form.

Is that possible? Lets say that by freak accident a bitmap image of a naked man and woman (I’d say jpeg but that requires a compression algorithm) got stored in the human genome. A 640x480 image of a naked woman is (*switches to incognito mode*) a bit under a megabyte. The human genome has 6 billion base pairs, and it takes four base pairs to make a byte because each base pair has 4 possible values and 4⁴ = 2⁸ = 8 bits = 1 byte, so 6*10⁹/4 = 1.5 gigabytes of data. I’m sure there’s room there for a couple of bitmap images representing the ideal human form, so maybe my younger self was correct. But I bet you could make an algorithm that could take an image as input and, using some basic heuristics, could tell you whether it was an attractive naked female body, and it could actually take up less space and be far higher in fidelity than a 640x480 pixel bitmap image of an ideal.

Cloning such a program, a repo called nude.py (https://github.com/hhatto/nude.py) off github, I checked its size and it was just over 400 kilobytes, making it less than our bitmap image. Now this program is only for detecting nudity in general, and can’t tell you HOW attractive a naked body is, only the fact that it is naked. But I think there is a trend here which is that it’s actually easier to encode heuristics for recognising things in complex data than to store snapshots of examples of those things. You could imagine that in our DNA is a machine learning algorithm for identifying an attractive member of the opposite sex, and our training data came in the form of millions of years of evolution, where with each new generation the algorithm’s heuristics would be weighted a little differently thanks to random mutation, and the people whose heuristics selected for more survival-capable mates passed down those heuristics onto the next generation. It should then be no surprise that what men typically find attractive in women are full red lips and rosy cheeks, which signal healthy blood flow, a good hip to waste ratio to which says the woman is unlikely to die in childbirth, and a big ass/thighs which show efficient storage of fat. Likewise the stereotypical attractive man is tall, with broad shoulders and a square jaw. I’ll leave it as an exercise to the reader to think about how those features might make one more likely to survive in the days of cavemen.

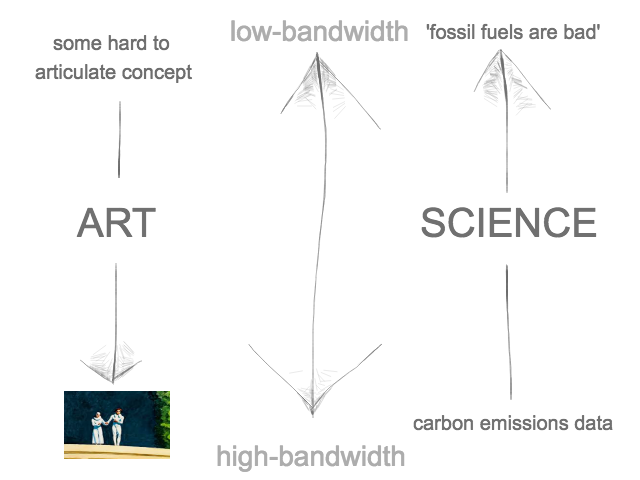

While on the topic of the science of evolutionary psychology, I never understood why there was supposedly a dichotomy between art and science. I could understand science vs religion because they were both mutually incompatible attempts at explaining the world, but I never saw the connection between art and science. Now I think I understand the tension: both art and science are means of humans communicating to one another to better understand the world, but art communicates things that can only be inferred from a complex dataset, and science communicates things that can be deduced. Obviously both top-down and bottom-up processes take place within both, but the e.g. bottom-up statistical inference used in science is just a means to an abstract end i.e. ‘should we reduce carbon emissions?’. Likewise the top-down planning that an artist puts into a painting may very well be irrelevant to the consumer, who looks at the resultant painting’s high-bandwidth information and performs a bottom-up extraction of meaning from it. The artist is contending with very hard to articulate concepts, that are best communicated in a high-bandwidth medium.

You can look at a painting, and be struck by how it articulates something that you’ve had an inkling about in the past, but you haven’t put your finger on, and now this artist has gone and presented a distilled version of that concept, without any of the noise that might obfuscate the concept when you come across it in your day to day life, and you now have an archetypal representation of that concept, which is like a really good training image for your neural network of a brain to go and base its inference rules on. And now that you have clearly identified this thing, though you may not even have a name for it, you can now more readily identify it in other places in the world.

With science, the kinds of facts that are communicated are exactly the kinds of facts that your top-down processing is capable of obtaining. It begins abstract (the hypothesis) and then it guides you down into the concrete (analysing evidence) through deductive reasoning, to reach a conclusion. Art begins concrete (the brushstrokes of the painting) and guides you up into the abstract (the ‘gist’ of the painting).

Why are the truths of science so much better adapted to progressing our civilisation? If we had to pick one of science and art to ban, I doubt we’d be getting to mars anytime soon if we chose to ban science. I think it’s because top-down processing is the processing we use for goal attainment. A goal is an abstract thing, so we can’t begin our attempts to reach that goal with bottom-up reasoning, so we need to use top-down processing, and top-down processing enables us to break the thing down into smaller, more concrete things, and then work our way through each one. So obviously science is what’s pushing society forwards technologically, because to become technologically advanced is a goal in the first place. An individual artist might have a goal to make an artwork that expresses some hard to articulate concept, but it’s not like the artist community is saying ‘we’re here but we want to be over there, let’s use our art to get there’. Because it’s through creating the art that you learn more about the thing it is you’re trying to achieve. The exception would be art that tries to send a political message, but I think it’s fair to say that kind of art isn’t as memorable as art that tries to communicate concepts nested deeper in the human experience.

Compared to science, art seems better suited for communicating to us about how to live our lives well. Through art we can identify patterns in human behaviour, and use those patterns to better guide us through our lives. Mythology is packed with stories that over thousands of years have been distilled and condensed to contain extremely clear archetypes that arise every day of our lives. The story of Icarus flying too close to the sun sends a message about the consequences of unbridled arrogance. Any child can be read that story and take away the moral, without even having heard the word ‘arrogance’ or ‘carelessness’. The essence of the story, the information abstracted out with bottom-up processing, gets stored in memory for later use, so that next time that child sees a friend carelessly climb onto a roof without a ladder to get a ball, they get a feeling that something could very possibly go wrong. Likening this to a machine learning algorithm, the ancient myths are just very well composed sets of training data, enabling a person to identify patterns and update their heuristics without needing to understand what makes something like arrogance come about or why specifically it precipitates bad outcomes.

I read somewhere that it’s the job of the historian to say what happened, and the job of the poet to say what happens. ‘What happens’ is another way of saying ‘what patterns can be found across various events’ which is another way of saying ‘what inference rules can be extracted out of different data sets’. I also think that the top-down/bottom-up dichotomy is the same dichotomy as knowledge vs wisdom. Knowledge is about facts and their relations to eachother. Facts can be communicated easily because they’re low-bandwidth expressions of abstract concepts. Wisdom is about being able to recognise how to act in certain situations. The fact that it’s so hard for one person to impart wisdom onto another person is telling of how wisdom exists in the realm of high-bandwidth information, and can only be obtained through updating your heuristics via exposure to a heap of data sets.

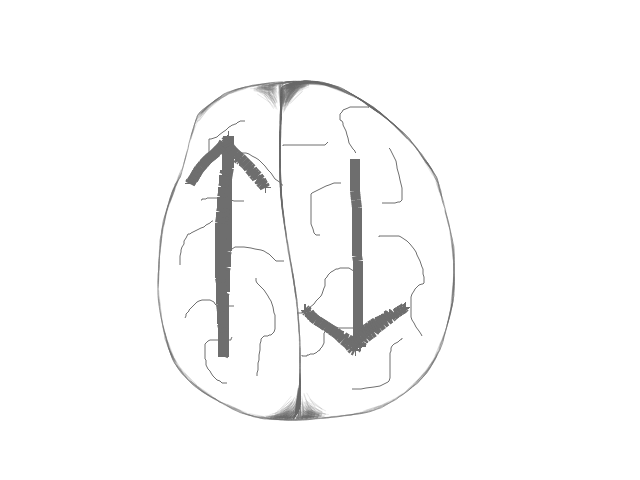

Returning to my earlier digression about left vs right, the dichotomy between art and science also feels like the dichotomy between the left and right brain.

Here’s a summary of the difference between the hemispheres (http://www.theorderoftime.com/politics/cemetery/stout/h/brain-la.htm):

The left hemisphere specializes in analytical thought. The left hemisphere deals with hard facts: abstractions, structure, discipline and rules, time sequences, mathematics, categorizing, logic and rationality and deductive reasoning, knowledge, details, definitions, planning and goals, words (written and spoken and heard), productivity and efficiency, science and technology, stability, extraversion, physical activity, and the right side of the body.

The right hemisphere specializes in the “softer” aspects of life. This includes intuition, feelings and sensitivity, emotions, daydreaming and visualizing, creativity (including art and music), color, spatial awareness, first impressions, rhythm, spontaneity and impulsiveness, the physical senses, risk-taking, flexibility and variety, learning by experience, relationships, mysticism, play and sports, introversion, humor, motor skills, the left side of the body, and a holistic way of perception that recognizes patterns and similarities and then synthesizes those elements into new forms.

The right hemisphere deals with highly complex datasets that are difficult to communicate even to one’s self, and extracts patterns from the data. The left takes abstract things and uses deductive reasoning to manipulate them and communicate them to others (note the left hemisphere is related to extraversion). Introverted people on the other hand spend a lot of time looking inwards over their thoughts and memories, trying to extract out anything meaningful.

A lot of people’s issues originate from the inability to properly develop both hemispheres. Somebody who is fairly creative might be good at getting halfway into a song that they’re writing but when the time comes that they need to impose structure onto that song and make it coherent, they get flustered and give up. Their right brain did a good job of listening to them play a heap of notes and then picking out which were the right ones to actually play, but their left brain wasn’t able to say ‘okay I want to have a completed song (abstract goal) so I need to start putting these little snippets in distinct parts of the song (concrete implementation) and they need to mix together properly etc’.

On the other side of the coin, somebody may be very good at organising towards their goal: they can decide that they’re going to make a song, download Ableton, buy the instruments, etc, but when it comes to making a riff, they just can’t pick out the right notes when they’re playing around on the guitar; nothing jumps out at them. Their left brain had all the organisation down-pat, but their right brain wasn’t able to successfully mine for nuggets of melody in the random notes the person was playing.

Political divisions seem to also be a result of this mismatch: people on the left wing exhibit right-hemisphere traits (confusingly): they care more about people’s emotions, art, are bigger risk-takers (calls for revolution?), and place a higher value on the power of story and individual experiences. Conservatives on the right wing, true to their name, seek to conserve the good parts of society, and are more industrious, care more about efficiency, and value structure and rules. Their traits originate from the left hemisphere.

Just like the right and left brain need to cooperate in making a song, the right and left wing need to cooperate in making a society, because they both value separate and important things that the other side often ignores.

No doubt this post has made some generalisations about how distinct the top-down processes are from the bottom-up ones, and there’s weird stuff that happens when e.g. a person comes across a heap of abstract things (the domain of top-down processes) like maths equations and starts to find patterns in those e.g. (these equations all represent parabolic curves) which become datasets for bottom-up intuitions when trying to solve new problems. So there’s always an interplay between the two processes. But I think it’s fairly clear that the wiring of the human brain is heavily coupled to the distinction between these two things, and that’s why they’ve manifested in so many dichotomies like science vs art, conscious vs unconscious, left vs right, etc. I’m convinced at its core the issue is one of bandwidth, and that the bandwidth constraint of language played a large part in the evolution of abstract thought. And finally, I think there is something to be said about bottom-up processes that can find patterns in a dataset without needing to understanding on a deep level what brought about those patterns, or even what heuristics the person was using to identify the patterns in the first place. The fact that a person can recognise the ‘likeness’ in two things and use that to guide their actions when they come across another thing sharing the likeness, without knowing what the underlying thing is, is a very powerful mental ability. Combine that with the ability to then extract out the commonality and represent it to ourselves abstractly (e.g. by giving it a name), bringing it into the realm of abstract reasoning where we can manipulate the concept in the pursuit of goals, and we end up with a superpower that is the best candidate for ‘the thing that separates humans from animals’.

But that superpower requires the cooperation of the two sides, and people differ in their ability to reconcile the two. If a completed song can spring forth from an individual’s success in reconciling the two sides in the context of musical pursuits, imagine what would spring forth from an entire society that manages to reconcile the two sides as they manifest in the battle between art and science, and the battle between left and right. If we can pull it off, getting both sides to cooperate may be the biggest step we can take towards a better future.

PS: credit to Robin Hanson and Scott Alexander for introducing me to some of these ideas.

2 comments

Comments sorted by top scores.

comment by habryka (habryka4) · 2018-11-12T23:57:51.253Z · LW(p) · GW(p)

Writing feedback: I overall enjoyed the post, but by far the biggest obstacle to me were the extremely long paragraphs, with relatively little to break them up (the images did help, but probably not enough). Scott has a bunch of good advice on this, in his writing advice post:

Nobody likes walls of text. By this point most people know that you should have short, sweet paragraphs with line breaks between them. The shorter, the better. If you’re ever debating whether or not to end the paragraph and add a line break, err on the side of “yes”.

Once you understand this principle, you can generalize it to other aspects of your writing. For example, I stole the Last Psychiatrist’s style of section breaks – bold headers saying I., II., III., etc. Now instead of just paragraph breaks, you have two forms of break – paragraph break and section break. On some of my longest posts, including the Anti-Reactionary FAQ and Meditations on Moloch, I add a third level of break – in the first case, a supersection level in large fonts, in the latter, a subsection level with an underlined First, Second, etc. Again, if you’re ever debating more versus fewer breaks, err on the side of “more”.

Finishing a paragraph or section gives people a micro-burst of accomplishment and reward. It helps them chunk the basic insight together and remember it for later. You want people to be going – “okay, insight, good, another insight, good, another insight, good” and then eventually you can tie all of the insights together into a high-level insight. Then you can start over, until eventually at the end you tie all of the high-level insights together. It’s nice and structured and easy to work with. If they’re just following a winding stream of thought wherever it’s going, it’ll take a lot more mental work and they’ll get bored and wander off.

Remember that clickbait comes from big media corporations optimizing for easy readability, and that the epitome of clickbait is the listicle. But the insight of the listicle applies even to much more sophisticated intellectual pieces – people are much happier to read a long thing if they can be tricked into thinking it’s a series of small things.Replies from: jesseduffield

↑ comment by jesseduffield · 2018-11-24T02:33:05.485Z · LW(p) · GW(p)

Thanks, that's very useful advice. I've usually done the stream-of-conciousness approach because my blogwriting began as a recreational thing, but I'll be sure to try and work harder on brevity and structure as I keep writing.