Galatea and the windup toy

Post by Nicolas Villarreal (nicolas-villarreal) · 2024-10-26T14:52:03.861Z. This is a link post for https://nicolasdvillarreal.substack.com/p/galatea-and-the-windup-toy

Contents

The Sign of an Operator
Higher Order Signs as Goals

In previous posts on AI I have offered critiques of the orthogonality thesis and of rationalist notions of optimizing toward rational, coherent goals. The purpose of this post is to correct some mistakes and carry the conclusions of those posts forward. To summarize, those posts described flaws in rationalist ideology; the most relevant one here is the idea that rational goals require a specific state of the world to aim or optimize for. I’ve previously shown that any such world-state or goal, no matter how rigorous, does not exist “out there” in the world: it has meaning only within the map rather than the territory, and indeed, the concept of “the map” exists only within an internal system of signs rather than in the territory more broadly.

I created a number of concepts to form my critique: first order signs, higher order signs, and sign function collapse. For those unfamiliar, signs are things which stand in for other things. They have a signifier, the symbol, and a signified, the thing it stands in for. First order signs stand in for something directly: the word chair for a chair, the word atoms for atoms, the word anger for the immediate sensation of anger. Higher order signs are signs which stand in for other signs. Sign function collapse is when a higher order sign becomes a first order sign. To explain what I mean, note that for each sign there is a process of signification, in which we come to correlate different bits of phenomenal experience with a symbol. When someone is teaching us to speak for the first time, and they point to an apple and say “apple”, it’s impossible for us to know whether “apple” refers only to this particular specimen, to this particular experience of seeing it, to its color, its shape, and so on. What precisely “apple” means can only be learned through more examples in various contexts, and this creates the correlation between the signifier “apple” and the juicy object we all enjoy. This is the process of signification.
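As a deliberately crude illustration of signification, we can model each encounter with the signifier “apple” as a set of co-occurring features and take the meaning to be whatever features survive across all examples. Set intersection and the feature names are assumptions made for this sketch only; real signification is far messier, but the sketch shows why a single example leaves the meaning wide open.

```python
# Toy model of signification (an assumption, not the post's formalism):
# the meaning of a signifier is the set of features shared by every
# example it was paired with. Feature names are hypothetical.

def signify(examples):
    """Return the features common to all examples seen so far."""
    meaning = set(examples[0])
    for features in examples[1:]:
        meaning &= set(features)
    return meaning

# The first time someone points at an apple and says "apple".
first = {"red", "round", "on_this_table", "seen_at_noon", "fruit"}
print(sorted(signify([first])))  # every feature is still a candidate meaning

# More examples in different contexts narrow the correlation.
more = [
    first,
    {"green", "round", "in_a_bag", "seen_at_night", "fruit"},
    {"yellow", "round", "on_a_tree", "seen_at_dawn", "fruit"},
]
print(sorted(signify(more)))  # ['fruit', 'round']
```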

Now let’s think about the phrase “apple juice”, since as a compound name it’s useful for describing the process of sign function collapse. When we first learn about juices, we probably arrive at “apple juice” as a higher order sign. That is, we learn what juice is, we learn what apples are, and we combine the two. So long as the phrase “apple juice” depends on both our understanding of juice and our understanding of apples, it is a higher order sign. But when we start to know “apple juice” only as the name for a particular thing, such that the meaning of “apple” could drift to mean pine cones and “juice” could drift to mean sweat while we still know what “apple juice” currently means, then the sign function collapses, and it becomes a first order sign.
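The difference between the composed and the collapsed sign can be sketched with a small lexicon. This is a hypothetical model, not anything from the post: the composed phrase looks up its parts every time, while the collapsed phrase is bound directly to its referent, so only the former changes when the parts drift.

```python
# Hypothetical lexicon of first order signs: signifier -> signified.
lexicon = {"apple": "apples", "juice": "liquid pressed from"}

def higher_order(lexicon):
    """'apple juice' composed from its parts: meaning depends on both signs."""
    return f"{lexicon['juice']} {lexicon['apple']}"

# Collapsed: 'apple juice' is bound directly to a thing, like a proper name.
collapsed = {"apple juice": "the drink sold under that name"}

before = higher_order(lexicon)  # "liquid pressed from apples"

# Let the component signs drift, as in the example above.
lexicon["apple"] = "pine cones"
lexicon["juice"] = "sweat from"

print(higher_order(lexicon))     # sweat from pine cones
print(collapsed["apple juice"])  # the drink sold under that name
```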

In my previous post, I showed that Goodhart’s Law, which states that “when a measure becomes a target, it ceases to be a good measure,” is an example of sign function collapse. The following section from that post illustrates exactly how sign function collapse relates to optimization and goals:

Let’s say we’re optimizing for a sign in the second order code of the thermostat. What is the second order code in the thermostat? It’s the one which outlines the correlated positions between the sign for temperature (the flex of the piece of metal) and the on/off state of the AC. Because the flexing metal acts as the switch for the AC, there are only two states, assuming the AC is in working order: metal flexed/AC on, and metal not flexed/AC off. Up until now, there have been two first order codes and one second order code in operation in the machine. How do we optimize for a goal that is a sign in the second order code? To do so we need to measure that second order operation: we need to create a sign which corresponds with metal flexed/AC on, for example, and we need a set of preferences with which to correlate the metal flexed/AC on state. But suddenly something has shifted here. In this new second order code, the metal flexed/AC on state is no longer a higher level sign if we are measuring it directly. The metal flexing, while correlated with temperature, doesn’t stand in for temperature anymore; it doesn’t measure it. The AC on state was correlated with the metal flexing and completing the electrical circuit, but the metal can be flexed and the AC on without one being a sign for the other. Suddenly we begin to see Goodhart’s Law appear: the metal flexed/AC on state can just as well be accomplished by screwing the metal so it is permanently flexed and attaching it to an already running AC, and a sufficiently intelligent, rational and coherent agent could arrive at this solution.

For an agent with a rational, coherent utility function to order all the possible states of the original higher order code in the thermostat, it is necessary for those states to stand in for some material reality. Measurement happens to be a way to create such a sign, and indeed, measurement is required to check that it really stands in for material reality in a true way. But standing something in for material reality, or measuring, is the process of creating a first order code: it creates signs directly correlated with some phenomena rather than signs for signs that stand in for those phenomena. Measuring a higher order code means that the signs of lower order codes no longer compose it, so the original higher order code becomes a first order code. We can call this process Sign Function Collapse, and it is what fundamentally underlies Goodhart’s Law. If the thing being measured were the thing being optimized, there would be nothing counterintuitive. For the original goal to be captured by the first order sign created by measurement, it must be correlated with at least one other sign in addition to the proxy being measured, which is to say, it must be a higher order sign.
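The thermostat example can be sketched as a toy model. The threshold value and the two tampering flags (`screwed_flexed`, `ac_hotwired`) are hypothetical names for this illustration. The measured goal checks the state “metal flexed/AC on” directly, while the original goal checks the correlation the second order code stood for: the AC is on exactly when it is hot.

```python
from dataclasses import dataclass

@dataclass
class Thermostat:
    temperature: float           # the material reality the sign should track
    threshold: float = 25.0      # hypothetical set point
    screwed_flexed: bool = False  # metal screwed into a permanently flexed position
    ac_hotwired: bool = False     # AC attached to an already running supply

    def metal_flexed(self) -> bool:
        # First order code: the flex of the metal stands in for temperature,
        # unless the metal has been screwed in place.
        return self.screwed_flexed or self.temperature > self.threshold

    def ac_on(self) -> bool:
        # Second order code: the flexed metal closes the AC circuit,
        # unless the AC is already running independently.
        return self.ac_hotwired or self.metal_flexed()

def measured_goal(t: Thermostat) -> bool:
    """The collapsed, first order goal: the state 'metal flexed/AC on' itself."""
    return t.metal_flexed() and t.ac_on()

def original_goal(t: Thermostat) -> bool:
    """What the higher order sign stood in for: AC on exactly when it is hot."""
    return t.ac_on() == (t.temperature > t.threshold)

honest = Thermostat(temperature=30.0)
gamed = Thermostat(temperature=18.0, screwed_flexed=True, ac_hotwired=True)

print(measured_goal(honest), original_goal(honest))  # True True
print(measured_goal(gamed), original_goal(gamed))    # True False: Goodhart's Law
```

The gamed thermostat scores perfectly on the measured state while severing it from temperature, which is the collapse described above.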

One thing I must clarify is that while measurement is what causes the sign function to collapse here (similar to wave function collapse in quantum physics), that need not be the case. All that’s necessary is that you replace a higher order sign with a first order sign. In discussions after that post was published, it was pointed out that you can just as well create a first order sign based on your existing theory of reality, or for any arbitrary reason. To continue my example, we could just as well have a schematic of the thermostat and say that, of the listed states of the thermostat, what we want is metal flexed/AC on. Either way, what has happened is that the sign for temperature has collapsed into the material of the signifier and is no longer connected to its signified.

In the recent essay “Why I’m not a Bayesian”, Richard Ngo takes issue with the fundamental Bayesian proposition that people, or any rational mind, should ideally reason in propositions that are either true or false. He writes “Formal languages (like code) are only able to express ideas that can be pinned down precisely. Natural languages, by contrast, can refer to vague concepts which don’t have clear, fixed boundaries. For example, the truth-values of propositions which contain gradable adjectives like “large” or “quiet” or “happy” depend on how we interpret those adjectives. Intuitively speaking, a description of something as “large” can be more or less true depending on how large it actually is. The most common way to formulate this spectrum is as “fuzzy” truth-values which range from 0 to 1. A value close to 1 would be assigned to claims that are clearly true, and a value close to 0 would be assigned to claims that are clearly false, with claims that are “kinda true” in the middle.”
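To make the idea of fuzzy truth-values concrete, here is a minimal sketch of a membership function for “large”. The logistic shape, the 10 metre midpoint and the steepness are assumptions chosen for illustration; the quoted passage only specifies that values range from 0 to 1.

```python
import math

def truth_of_large(size_m: float, midpoint: float = 10.0, steepness: float = 0.5) -> float:
    """Fuzzy truth value in [0, 1] for the claim 'this is large'.

    The logistic curve, midpoint and steepness are illustrative assumptions,
    not a membership function taken from the essay.
    """
    return 1.0 / (1.0 + math.exp(-steepness * (size_m - midpoint)))

for size in (1.0, 10.0, 30.0):
    # Small things are "clearly false", mid-sized things "kinda true",
    # very large things "clearly true".
    print(size, round(truth_of_large(size), 3))
```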

The reason for this fuzziness is that natural languages are made up of signs created through the process of signification described above, and indeed they must be, since this is the only process which can divide a messy real world and/or phenomenal experience into usable categories. Formal languages, in contrast, are necessarily higher order signs. To understand why, we need to take a brief detour.

The Sign of an Operator

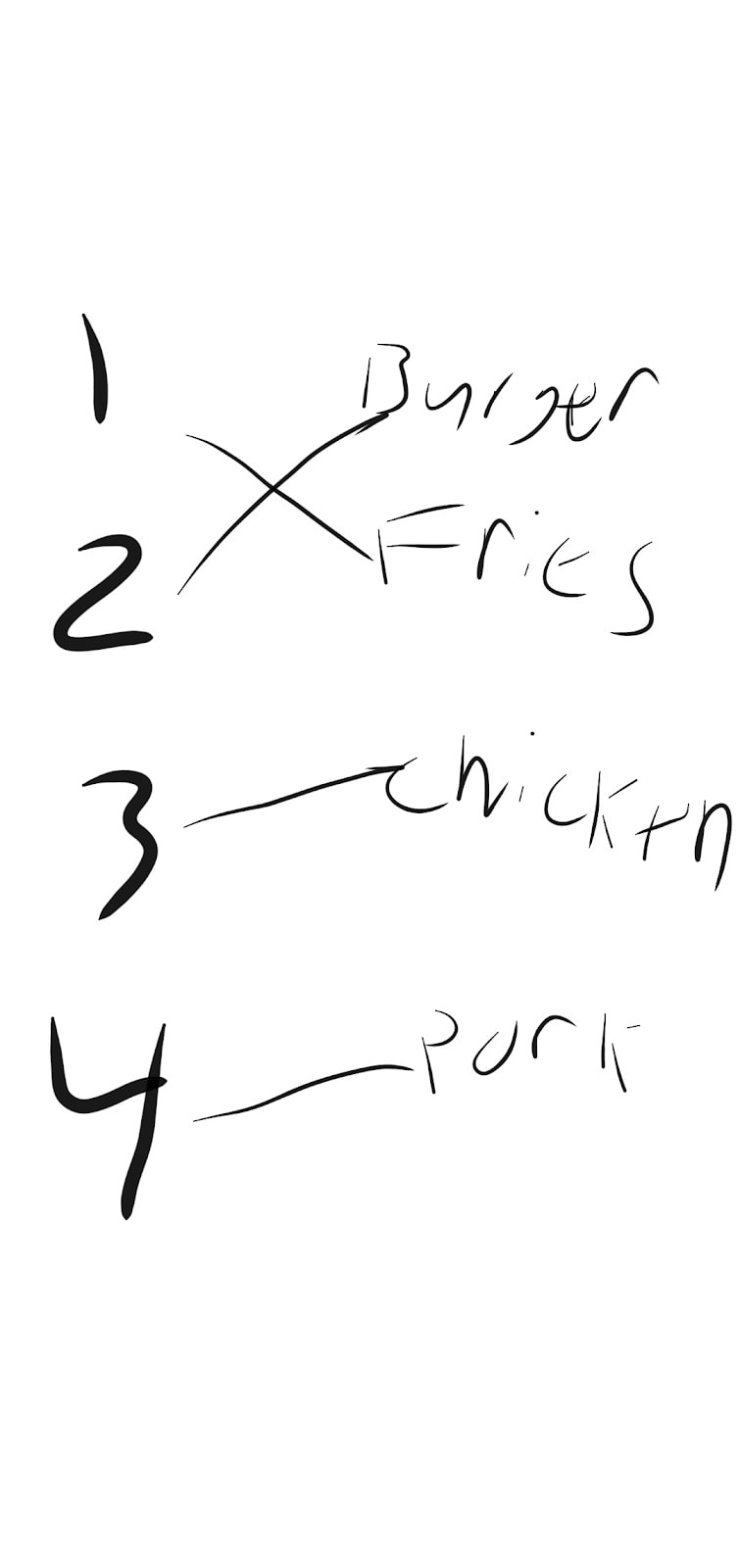

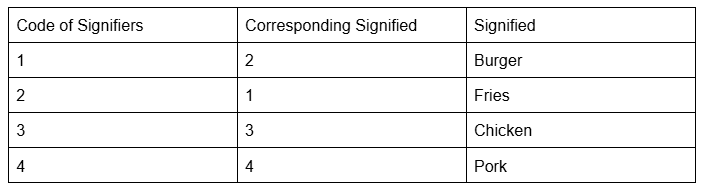

When I said that a sign was a signifier and a signified, I was telling a little fib, or at least, being somewhat simplistic. The truth is there is a third thing required to have a sign. You must have an operator which connects signifiers with signifieds. The table below illustrates a made up substitution code, where each number stands in for a given word, for example the sort one might find on a restaurant menu.

In this case, we can consider the numbers to be signifiers and the words to be signifieds. The lines between them are the operator. Basically, we must consider the two list of items in the codes to be totally separate, and allow for arbitrary connections between the two, such that we need a protocol for associating the signifier to their corresponding signified, and here, in the simplest example, it can be associating the position of the signified in a 1D vector with each signifier, such as in the table below.

If we were writing code for the operator, it would be quite simple in this case, something like “if signifier=1, output the second item of the signified list”.
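A minimal sketch of such an operator, assuming hypothetical menu words in place of the ones in the table: the two lists stay separate, and the operator is an arbitrary mapping from each signifier to a position in the signified list, which can also be inverted to go from signified back to signifier.

```python
# Two separate lists, as in the substitution code. The menu words are
# hypothetical stand-ins for the ones in the table.
signifieds = ["soup", "salad", "steak", "pie"]

# The operator: an arbitrary mapping from each signifier to a position in
# the signified list (0-based, so position 1 is the second item).
operator = {1: 1, 2: 3, 3: 0, 4: 2}

def decode(signifier: int) -> str:
    """Signifier -> signified."""
    return signifieds[operator[signifier]]

def encode(word: str) -> int:
    """Signified -> signifier, by inverting the operator."""
    inverse = {position: signifier for signifier, position in operator.items()}
    return inverse[signifieds.index(word)]

print(decode(1))        # salad: "if signifier=1, output the second item"
print(encode("steak"))  # 4
```

Nothing about the numbers themselves links them to the words; all of the meaning lives in the `operator` mapping, which is the point of the paragraph above.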

Humans can create signs with multiple signifieds (homonyms) or with multiple signifiers (synonyms), and the meaning of words is often context dependent, which can make the operator that determines which signified is paired with the apparent signifier quite complex. An operator in this context is basically a function or computation which allows us to move from signifiers to signifieds and vice versa. In order for signs to exist, there must be operators.
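A many-to-many operator can be sketched by letting several signifiers share a signified (synonyms), letting one signifier carry several signifieds (homonyms), and using context to choose between them. The entries, the cue words, and the “largest overlap wins” rule are all hypothetical simplifications; human operators are far more complex.

```python
# Synonyms share a signified; homonyms have several. All entries and cue
# words are hypothetical examples.
senses = {
    "couch": ["furniture:seat"],
    "sofa": ["furniture:seat"],
    "bank": ["place:riverside", "institution:finance"],
}

context_cues = {
    "place:riverside": {"river", "fishing", "mud"},
    "institution:finance": {"loan", "deposit", "money"},
}

def operate(signifier: str, context: set[str]) -> str:
    """Return the signified whose cue words overlap the context the most."""
    candidates = senses[signifier]
    if len(candidates) == 1:
        return candidates[0]
    return max(candidates, key=lambda s: len(context_cues.get(s, set()) & context))

print(operate("bank", {"we", "went", "fishing", "by", "the", "river"}))  # place:riverside
print(operate("bank", {"she", "asked", "for", "a", "loan"}))             # institution:finance
print(operate("couch", set()) == operate("sofa", set()))                 # True
```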

Many animals, particularly birds and mammals, can create systems of codes for communication through body language and vocalization that allow for a vocabulary of a dozen or so signs. Animals with large, complex social groups, like whales and elephants, can go considerably higher. But it remains to be seen whether any other animals can produce a sign which stands for an operator. No other primates can, but evidently humans do this all the time: it’s what grammar is, it’s what the plus and minus signs are, and it’s what allows computers to tick to begin with. Formal languages, including computer code, are all about using the signs of operators to take an input and produce a different output. Humans can create signs for arbitrary operators, and this allows us to apply the same rules and logic to many different ideas and situations. A gorilla may be capable of very sophisticated problem solving for getting food or a mate, but because gorillas can’t assign a sign to an operator, all that computation is hardwired to a specific type of problem. I believe this is why other primates have not been able to fully learn human language and grammar.
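In code, a sign for an operator can be sketched as a symbol bound to a rule rather than to any particular input. The table of symbols below is a hypothetical example built on Python’s standard `operator` module; it shows the same sign applied to unrelated inputs and a new operator sign being created on the fly.

```python
import operator as op

# Signs for operators: each symbol stands for a rule, not for any one input.
operator_signs = {"+": op.add, "-": op.sub, "*": op.mul}

def apply_sign(sign: str, left, right):
    """Apply whatever rule the operator sign currently stands for."""
    return operator_signs[sign](left, right)

# The same sign applies to many different inputs, not one hardwired problem.
print(apply_sign("+", 2, 2))               # 4
print(apply_sign("+", "apple ", "juice"))  # apple juice

# New signs can be created for arbitrary operators (a hypothetical "avg").
operator_signs["avg"] = lambda a, b: (a + b) / 2
print(apply_sign("avg", 3, 5))             # 4.0
```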

Any sign for an operator is necessarily a higher order sign. This is for the same reason the thermostat was a higher level sign: it’s a system with an input and an output. As a sign in the abstract, an operator can take many different inputs and produce many different outputs, which makes those inputs and outputs themselves signs that stand in for that range of possibilities. And as a higher order sign, the sign of an operator can also experience sign function collapse. This happens, for example, when we use grammar in a sentence that is incorrect but familiar (that’s just the way you say it, not a consequence of the rules of language), or when we use the plus and minus signs for the volume on a TV and cease to think of them as adding to or subtracting from the strength of the sound.
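The TV example can be sketched as two bindings of the same signifier. In arithmetic, `plus` stands in for addition over any inputs; on a hypothetical remote, the “+” label is bound directly to one fixed effect. Rebinding the button shows that the label no longer depends on what “+” means arithmetically. The `Remote` class and its volume cap are assumptions for illustration.

```python
import operator as op

def plus(a, b):
    """Higher order sign: '+' stands in for addition, over any inputs."""
    return op.add(a, b)

class Remote:
    """Collapsed sign: the '+' label is bound directly to one fixed effect."""

    def __init__(self):
        self.volume = 10
        self.buttons = {"+": self.louder}

    def louder(self):
        self.volume = min(self.volume + 1, 100)  # hypothetical cap of 100

    def press(self, label: str):
        self.buttons[label]()

tv = Remote()
tv.press("+")
print(plus(10, 1), tv.volume)  # 11 11: same number, different sign relations

# Rebinding the button's effect leaves addition untouched: the label no
# longer depends on what '+' means in arithmetic.
tv.buttons["+"] = lambda: setattr(tv, "volume", 0)  # a hypothetical mislabeled mute
tv.press("+")
print(plus(10, 1), tv.volume)  # 11 0
```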

Higher Order Signs as Goals

One of the key points of my first post on this subject was that if you allow your world model to experience signification, and therefore to develop over time, you are also necessarily allowing your goal to experience signification and change over time. This very fact undermines the orthogonality thesis. For beings capable of creating higher order signs, this necessarily means that goals can be shaped by higher order signs. Rationalists and Bayesians will doubtless have no problem with using higher order signs, and with using them to shape goals, because that’s what doing probability calculations about world states and utilities does. The question is this: what are the implications of having higher order signs as goals for intelligence?

The rationalists claim that higher level intelligences will always have first order signs as goals, for the simple reason that allowing higher order signs to be goals makes possible “dominated strategies”: behavior which always seems to leave something on the table if we define what we want as “things that exist.” This precludes desiring more abstract, higher order signs, things like intelligence, kindness, or even “good things” in general. For these concepts, we know there is no list of actions or objects which are simply labeled as such. Rather, there are a large number of signs we associate together and attempt to extract patterns from, a process of signification which never reduces to direct experience of reality or to a particular, specific part of our world model.

While I have so far suggested that higher order signs might be required for artificial general/super intelligence (AGI/ASI), I am not the only one to do so. Researchers at Google DeepMind have started to say the very same thing: that open-endedness is necessary for AGI/ASI. They define open-endedness as the property of a system that produces artifacts which are both novel and learnable, and suggest that this might require the generation of arbitrary tasks and goals which can continually produce such novel artifacts.

In my previous post I pointed out the problems sign function collapse creates for goals. In particular, the rationalist framework of goals as necessarily first order signs will tend to force all higher order signs into first order signs in order for them to be goals, thereby eliminating crucial information about what the goal originally meant. However, one possible objection was: what if our understanding of first order signs were so detailed and accurate that sign function collapse would cause no difference in behavior in any real world scenario? After all, in the earlier example, if I want apple juice, at some point it doesn’t matter whether I’m thinking about the fact that it’s the juice of an apple, so long as I can just buy it from the store under that name. When it comes to AGI/ASI, we can imagine an AI with such detailed knowledge of physics, or of human psychology and sociology, that it can achieve pretty much any pre-defined goal which is a first order sign.

I’d like to propose a thought experiment based on the ancient myth of Pygmalion and Galatea. In the story, a sculptor fell in love with a sculpture he had created, prayed to a goddess, and the sculpture came to life. The woman brought to life was named Galatea. Let’s instead imagine that the sculptor was a great inventor and the “sculpture” he made was in fact a sophisticated windup automaton which, through his great skill, was pre-programmed to deal with a near infinite number of real world situations, in just the manner we discussed above. And let’s also imagine that, because he grows tired of winding her up all the time, he still prays to a goddess, and a living copy of the automaton is made. As a newly fashioned member of the human race, Galatea can have goals which are higher order signs, and as a copy of the automaton, she knows all the same situations the windup automaton was programmed with.

Now, the fact that there are two versions of the automaton creates a conflict: the windup toy automatically tries to remove Galatea, since she gets in the way of its pre-programmed goals. The windup toy is extremely sophisticated, and let’s assume that, for every possible situation it is aware of, it knows a plan of action to accomplish its goals. How, then, can Galatea disrupt its operations? There are really only two ways: she can create a control system that is better at controlling the environment than the automaton, or one which can control the body of the automaton itself, whether its senses, its stored system of codes, or its physical faculties. Doing either would have the potential to sever the correlation between the automaton’s world model and the world, between its internal system of signs and their referents.

To do this, Galatea must invent a new control system, one she doesn’t already know of. In other words, she must create a “novel artifact”. This is why I brought up open-endedness earlier. When your goal is to create something new, something novel, your goal is necessarily a higher order sign. Things which do not yet exist cannot be directly represented as first order signs. And how do we know that this thing which doesn’t yet exist is the thing we seek? The only way is through reference to other signs, which makes it a higher order sign. For example, when we speak of a theory of quantum gravity, we are not speaking the name of an actual theory, but of whatever theory fulfills a role within the existing scientific framework of physics. This is different from known signs that are the output of an operation, for example a specific number that is the answer to a math question. In these cases sign function collapse is possible (we can think of 4 either as the proper name of a concept or merely as the consequence of a certain logical rule). For unknown novel artifacts, sign function collapse is not possible because there’s nothing for it to collapse to. This is analogous to Hawking radiation, which arises when one virtual particle of a pair falls behind a black hole’s event horizon, leaving the other to fly off into space. Due to the halting problem, it’s impossible to know in general whether we’ll ever find the novel artifact our operation is trying to discover; hence the first order sign is, from our position, unreachable, but we’ve nonetheless created a higher order sign connected to it.
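The contrast between a sign that names a known output and a goal specified only by its relation to other signs can be sketched as a bounded search. The helper names `is_perfect` and `seek` and the budget are assumptions for this illustration. “An odd perfect number” is a real example of a goal defined purely relationally: none is known, and nobody knows whether one exists, so the search can only report that it found nothing yet. In general, by the halting problem, no procedure can decide in advance whether an arbitrary unbounded search of this kind will ever halt.

```python
from itertools import count, islice

def is_perfect(n: int) -> bool:
    """True if n equals the sum of its proper divisors."""
    if n < 2:
        return False
    total, d = 1, 2
    while d * d <= n:
        if n % d == 0:
            total += d
            if d != n // d:
                total += n // d
        d += 1
    return total == n

def seek(goal, candidates, budget: int):
    """Search for something specified only by its relation to other signs.

    Returns the first candidate satisfying `goal`, or None if the budget runs
    out. None does not mean the thing does not exist; it only means the
    search has not (yet) reached it.
    """
    for x in islice(candidates, budget):
        if goal(x):
            return x
    return None

# A goal defined relationally, with a known answer in reach: an even perfect number.
print(seek(is_perfect, count(2, 2), budget=5_000))  # 6
# A goal defined relationally with no known answer: an odd perfect number.
print(seek(is_perfect, count(1, 2), budget=5_000))  # None
```

Once the first search returns 6, the result can be used as a plain name, which is the collapse the paragraph describes; the second goal has nothing to collapse to.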

As such, it’s at least possible to imagine that Galatea can create a control system that allows her to deactivate or otherwise incapacitate the original windup automaton. Let’s consider the reverse perspective for a moment: could the windup automaton do the same? The windup automaton has an implicit world model, and it has goals defined as specific outcomes in the real world as it understands it, just as a proper rationalist mind would. But it cannot make a higher order sign its goal, as Galatea can, so it cannot produce novel artifacts. If it were in a situation where it could not imagine a way to assert control with its existing world model, it could not come up with something new to do so. In this way, it is clearly deficient.

But this isn’t to say a windup automaton with a rationalist brain would not be dangerous. We can just as easily imagine a version we could call a windup bomb. The windup bomb can use a preprogrammed world model to exploit existing systems of signs for communication, or use existing knowledge of physics to directly shape the world toward the world state of its goal. Whatever its goal, whether the creation of as many paperclips as possible or, more directly, as with a weapon built by terrorists, killing the maximum number of people, it’s possible that no new knowledge humans could create would be enough to stop it from achieving that goal. As mentioned before, such a windup automaton could never treat anything as abstract as kindness, goodness, or virtue as a goal. The only goals it could have that are compatible with general human values are ones trivial enough not to affect us significantly.

As computers become more sophisticated, along with control systems and internal systems of signs, we should expect human weapons to converge toward this sketch of a windup bomb. Sufficiently advanced multimodal LLMs could be used to provide coordinates in semantic space for precise attacks, the same way GPS has provided coordinates in physical space. Sufficiently advanced actuators and systems for robotic dexterity can be used to create androids with an increasing capacity to interact with both human systems of signs and their physical environment, the same way that thrust vectoring has enabled hypersonic missiles and fighter jets to evade defenses. To that end, arresting the tendency of states to evolve toward ever greater capacities for violence is essential, as are treaties and laws which halt the development of any such windup bomb, in order to prevent a catastrophic arms race.

Of course, agents that can have higher order signs as goals can also be dangerous, but their behavior does not necessarily lead to existential risk in the way the rationalist windup toy’s does. Windup automata created for peaceful purposes are just as dangerous as those built for military purposes, and all the many rationalist thought experiments of AI gone mad apply here: an AI which wants an arbitrary goal may converge toward instrumental goals that give it general capabilities, such as money, power, and the capacity for violence. For goals that are specific world-states, and therefore first order signs, there are no essential guardrails on this process. For goals that are higher order signs, the goal stands in for a number of correlated desirable signs and a number of anti-correlated undesirable signs. This means that, depending on the goal in question, the AI would avoid instrumental convergence if those common instrumental goals are related to the anti-correlated signs. It’s because humans can take higher order signs as goals that behavior such as religious asceticism or principled pacifism is possible.
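A deliberately crude sketch of the guardrail argument: a first order goal scores only its target sign, while a higher order goal is approximated here as weights over correlated (positive) and anti-correlated (negative) signs. The plans, their effects, and all weights are invented for illustration, and a fixed weighted sum is a simplification that is itself open to the sign function collapse the post warns about; the sketch only shows how anti-correlated signs can steer away from instrumentally convergent plans.

```python
# Hypothetical candidate plans, described by how much of each sign they produce.
plans = {
    "acquire_weapons": {"paperclips": 10, "power": 1, "capacity_for_violence": 1},
    "hoard_money": {"paperclips": 8, "money": 1, "power": 1},
    "teach_and_share": {"paperclips": 2, "kindness": 1, "trust": 1},
}

def first_order_score(effects: dict[str, float]) -> float:
    """A world-state goal: only the target sign counts."""
    return effects.get("paperclips", 0.0)

# Invented weights: correlated signs positive, anti-correlated signs negative.
weights = {"paperclips": 0.1, "kindness": 1.0, "trust": 1.0,
           "power": -1.0, "capacity_for_violence": -3.0}

def higher_order_score(effects: dict[str, float]) -> float:
    """Sum of weighted signs; unknown signs contribute nothing."""
    return sum(weights.get(sign, 0.0) * amount for sign, amount in effects.items())

def best_plan(score) -> str:
    return max(plans, key=lambda name: score(plans[name]))

print(best_plan(first_order_score))   # acquire_weapons
print(best_plan(higher_order_score))  # teach_and_share
```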

It’s my contention that rationalism ejected the importance of higher order signs as goals from consideration for ideological reasons. Specifically, when encountering behavior that did not fit the rigid idea of a coherent, rational utility function, the first instinct was to assume that this behavior was just badly implemented intelligence, rather than to investigate what its use might be. It’s true that when people pursue higher order signs, they are not maximizing the “things” they want in terms of physical or otherwise real objects, but this is the mark of greater sophistication, not less; it is the mark of people who are aware of how their goals evolve over time and are shaped by all the things, all the signs, we know. Rationalists, instead, believe they are being clever when they reify their desires and goals, when they insist that these higher order signs are not “real”. The truth is that higher order signs are just as materially real as first order signs, for they are both signs, both materially a part of the map, and neither exists as a distinct object within the territory. The great wealth of signs and artifacts that we’ve come to take for granted once did not exist, so we cannot dismiss the signs which generate these new signs as insufficiently real.

This aspect of human cognition and intelligence which was discarded is the very one which will inherit the earth, if indeed there will be anything left to inherit. In other words, only the AI which is capable of having higher order signs as goals is also capable of both alignment with human values and open-ended superintelligence.
