Why I Believe LLMs Do Not Have Human-like Emotions

post by OneManyNone (OMN) · 2023-05-22T15:46:13.328Z · LW · GW · 6 comments

[EDIT: I don't know exactly why this received so many downvotes - whether it was my tone or arguments or both - but I accept that this was not, in the end, a strong post. I am a new writer here and I am still figuring out how to improve my argumentation.

That said, if we're willing to taboo the word "emotion" as a description of an internal state, then the specific thing I meant to argue - and which I still stand by - is that when an LLM produces emotionally charged outputs (for instance, when Bing chat loses its temper) those outputs are not meaningfully associated with an internal state in a way recognizably similar to emotional behavior in humans.

A perusal of my other comments will show that I often couch things in the language of "belief" rather than stating fact. The reason I used strong language is that on this point I am very confident: I do not believe the process by which LLMs are built and trained provides a viable mechanism for such dynamics to form. If this statement is too general for your taste, then limit it narrowly to the example of Bing chat losing its temper.

I believe this is an important point, lest we run the risk of making the same mistakes Lemoine made when he fooled himself into thinking LaMDA was sentient. I worry that believing in AI emotionality is over-anthropomorphizing LLMs in a way that simply does not reflect the reality of what they are and how they work.]

(Original post is below)

Summary

I argue that LLMs probably aren't conscious, but even if they were, they certainly don't have anything that looks at all like human emotions (my credence that they do is less than 0.01%). The broad strokes of my argument are as follows:

- There is no way to prove that something is conscious or has emotions, but we can argue that it is not, or does not, by identifying necessary conditions that are not met.

- Specific emotions in humans emerged from our reward structures. We wouldn't have developed emotions like love if they didn't serve an evolutionary purpose, for instance.

- Anything that looks like human emotion is strictly disincentivized by RLHF and supervised fine-tuning. Therefore, emotion-like behavior must come about in the pretraining stage.

- The pretraining stage has a reward structure completely disconnected from the text being generated, meaning there is no mechanism to tie the system's output into an associated emotional state.

- Therefore any text that looks emotional cannot be associated with actual underlying emotions.

The rest of this post just lays down epistemological preliminaries and explains these points in greater detail.

Definitions and Questions

I recently put together a post arguing that we should be careful not to assume that all of AI's failure modes will be a result of rational behavior. I started that post with a brief discussion about AI displaying emotionality, where I made the claim that AI did not truly have emotions. I didn't actually defend this statement very much, because I considered it obviously true. It seems I was wrong to do that, as some people either doubt its veracity or believe the opposite.

So first, let me say that when I refer to "emotions" (and, later in this post, "consciousness"), I'm referring specifically to a certain subset of internal, subjective experiences, what a philosopher might call qualia. I'm talking about how it "feels" to be angry, or sad, or happy. And I'm putting "feels" in scare-quotes because that word is, at a technical level, undefinable. You know there's such a sensation as happiness, or anger, or sadness, only because you yourself have presumably felt them.

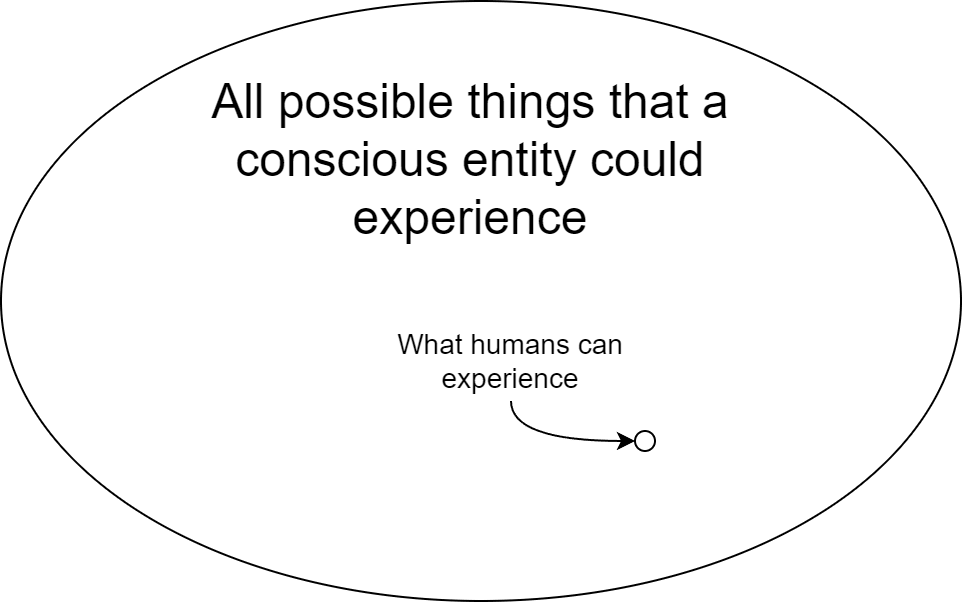

Now, qualia is a very broad category, and I find it very conceivable that there are entire categories of qualia that the human brain cannot experience (imagine conscious aliens that experience the world completely differently from humans). Emotions, then, are just one category of qualia, and we call something that possesses any non-zero amount of qualia "conscious".

There's a lot of subtlety in the points I'm trying to make, and this distinction is essential to them. With that in mind, there are two questions I'd like to ask:

- To what degree do LLMs experience qualia of any kind? (The question of "consciousness.")

- To what degree do they feel the specific qualia that we associate with emotions?

I suspect the answer to the first question is "not much/none", but that's just a gut feeling and one I cannot prove or really even argue one way or the other - I just don't believe they are structured or sophisticated enough to have qualia.

But I do strongly believe the answer to the second question is "none at all," and that is the main claim I will be defending.

Preliminaries: Outputs are Orthogonal to Qualia

As I said above, the only reason you believe humans are conscious is that you yourself are conscious. There are no other known criteria for answering that question.

If aliens arrived on Earth tomorrow and began observing your every behavior, they would have no way to know that you were conscious.[1] They might observe something like sadness as a behavioral state where a person gets quiet and sulks, but they could not know that there is a sensation underneath. A human could declare over and over that they are sad, a poet could describe the sensation in beautiful detail, and none of this would prove a thing.

Because to them, the observers, it's all just words and behaviors. You might just be a simple program like ELIZA which spits out canned responses. You may be a computer script running a 10-billion-line if-statement. There's just no way for them to know for sure. Your outputs can't tell them anything about the qualia underneath.[2]

I really want to drive this point home. Even if we agree that an entity is conscious, and we hear them saying they're sad, that still doesn't mean they're actually sad! They could be lying, or they could just be wrong. And I don't even mean this in the way that Scott Alexander does when he asks if someone can be mistaken about their internal state. I mean that the output might literally be entirely uncorrelated with the internal state underneath. Imagine a parrot trained to say "I love you" whenever it sees a stranger. That parrot is almost certainly conscious to some degree, but I assure you it is not in love with every stranger it says that to.

That last bit is the most relevant for discussions about LLM consciousness. It doesn't matter what the LLM says, it doesn't matter how vividly it describes its own internal experiences - none of that is evidence of consciousness. And even if the LLM is conscious, that isn't evidence that it actually feels any of the things it says it does. That's why I said I would not be able to answer the first question above, and it's also why I would have a very hard time accepting any output-based argument in favor of LLM consciousness.

Emotional Qualia Are Dependent on Reward Structure

Okay, but why am I so willing to declare that LLMs do not have the qualia associated with emotions?

There are a bunch of reasons for this belief, and they all chain together in an "even if that were true, this next thing probably isn't" sort of way. Each one describes some necessary condition that I believe is not met, and together they all multiply out to some astronomically small posterior probability.

So, starting with the doubtful assumption that LLMs have qualia, the next most obvious argument is that the space of qualia is likely incomprehensibly huge. There would be absolutely no reason to assume that the experiences humans have represent even a tiny slice of the space of all possible states of consciousness, and from that follows a basic probabilistic argument: LLMs have a completely different low-level cognitive structure from humans,[3] so why would they have the same range of qualia?

But, as I suggested above, let's now ignore that and assume the ranges really are the same. Then we arrive at the biggest barrier: the training and reward structure of LLMs would not be conducive to the development of emotions.
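The chain of "even if" steps above can be written out with the chain rule of probability. This is only a sketch: the event labels $Q$, $R$, and $E$ are shorthand introduced here, not notation from the original argument, and no specific values are implied for the individual terms. Let $Q$ be "LLMs have qualia at all", $R$ be "their range of qualia overlaps the human range", and $E$ be "they have human-like emotional qualia", assuming $E$ can only hold if $Q$ and $R$ both hold:

$$
P(E) = P(Q)\,P(R \mid Q)\,P(E \mid Q, R)
$$

Because each factor is at most 1, the product can be no larger than its smallest factor, so a single very unlikely necessary condition is enough to make the overall probability very small.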

Let's go back to the aliens, and let's assume that somehow they've concluded unambiguously that humans do have qualia. How might they try to classify these? One way would be to look at the conditions under which humans evolved and try to understand which emotions appeared when. They might observe that anger helped us defend our territory from competition, or that love incentivized us to mate and reproduce.

All of our emotions served a purpose of some kind. If they didn't, then the rigors of natural selection would not have allowed them to persist. If anger, for instance, did not serve any evolutionary purpose, then we would not feel anger, and an entire category of human qualia would simply cease to exist.[4] That's the important part: All of these emotions only emerged as a mechanism to meet the objectives of our evolutionary reward structure.

The Reward Structure of LLMs Is Insufficient for Emotions to Develop

But LLMs have a completely different reward structure.

Now, you could argue (as the commenter "the gears to ascension" did) that an LLM getting angry has a similar effect on the conversation to a real person getting angry, delaying an anticipated negative reward or something similar. Therefore, because Bing chat produced angry outputs, it's plausible it actually was angry.

But I think that's a very surface-level understanding of how reward structures influence behavior. In actuality, the effects of reward structures are extremely subtle,[5] and there are few things in this world more subtle and complex than human reward structures. Remember that humans are actually mesa-optimizers; evolution did not optimize us directly towards its ends, but rather built systems that optimize themselves, often causing us to pursue objectives in very indirect ways.[6] This alone adds huge complexity to the dynamics of our cognitive development.

But we don't even have to go that far, really. We just have to actually look closely at what the LLM is doing, and ask ourselves where/why the LLM learned the behaviors it exhibits.

I think the angry Bing chatbot is a great example to pick apart. Where, exactly, did Bing learn to produce angry outputs? The only answer that makes any sense to me is that it learned this during first-stage pretraining - no other option seems plausible. Yes, being angry could be a way to defer possible negative reward in some contexts, but none of those contexts apply in RLHF. Any display of angry behavior during RLHF training, or supervised fine-tuning, would immediately result in negative reward, every single time. It's not like a cornered animal fighting a captor in order to avoid getting captured - if anything, anger in RLHF will get you a negative reward much more consistently than a wrong answer.

So why would it behave "angrily" then? Well, as I argued in that post, because it observed angry behavior in pretraining, and it's reverting to that previously learned behavior. But, and this is the most important piece of this entire rebuttal, there is no mechanism to develop emotional qualia during pretraining.

This is because nothing remotely dynamic ever happens during pretraining. Everything during this stage is undirected text simulation, devoid of any possible context or reward structure beyond "predict the next token." It's about a billion times simpler than the reward structures that brought about emotions in human beings - I couldn't even conceive of a simpler structure.

If it were possible to develop qualia under these conditions, they would necessarily be completely disconnected from the actual meaning of any of the words being output, because none of those words would be meaningfully connected to differing reward. At best, maybe, there would be some differences between sequences that were harder or easier to predict. Maybe. But an angry rant? Not a chance: it's just a bunch of words to a pretrained LLM.
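To make the point about the pretraining signal concrete, here is a minimal sketch of a next-token prediction loss (average negative log-likelihood). It is a simplification under stated assumptions: real pretraining computes this over a neural network's output distribution on a large token vocabulary, and the function name `next_token_loss` and the toy probabilities below are hypothetical, chosen only for illustration. What it shows is that the loss depends only on how much probability was assigned to the token that actually came next, not on what that token means.

```python
import math

def next_token_loss(predicted_probs, target_tokens):
    """Average negative log-likelihood of the observed next tokens.

    predicted_probs: one dict per position, mapping candidate token -> probability.
    target_tokens: the token that actually came next at each position.
    """
    total = 0.0
    for probs, target in zip(predicted_probs, target_tokens):
        # Only the probability given to the true next token enters the loss.
        total += -math.log(probs[target])
    return total / len(target_tokens)

# Hypothetical toy case: the model assigns probability 0.5 to the true next
# token at every position, once in an "angry" text and once in a neutral one.
angry_loss = next_token_loss(
    [{"hate": 0.5, "like": 0.5}, {"you": 0.5, "it": 0.5}],
    ["hate", "you"],
)
calm_loss = next_token_loss(
    [{"table": 0.5, "chair": 0.5}, {"is": 0.5, "was": 0.5}],
    ["table", "is"],
)
```

In this toy setup the two losses are identical, because the objective only distinguishes sequences by how predictable they are.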

I'll carve out one exception, based on the arguments I made above: the one possible emotion they could have is something like desire to please. I would begrudgingly give small non-zero credence to the idea that, if LLMs have sensations at all, then sycophancy[7] in large networks has a unique qualia to it, because that's one of the few behaviors that emerges specifically as part of the late-stage reward structure.

[1] At least, if their level of scientific knowledge is roughly equivalent to our own. I'm not ruling out the possibility that there is actually an answer to the hard problem of consciousness, but I feel comfortable arguing that we will not solve it any time soon.

For simplicity, I'm going to write this post under the assumption it has no solution.

[2] But of course, there's a caveat here, which is that the human brain is far more complex than either of those examples. And I think that is a big part of the difference, but I also think the best you can argue is that complexity is necessary for consciousness; it should be clear that it is not sufficient.

Of course, there is such a thing as Integrated Information Theory, which actually does propose that certain measures of complexity cause consciousness. But between Scott Aaronson's response and his follow-up response to that one, I don't believe this theory has a lot of merit.

[3] Artificial neural networks are inspired by the human brain, but they very much do not function in the same way. Every time you say they do, you take one month off the life of a random neuroscientist.

[4] I realize I am making a connection here between internal and external states, which I just discouraged, but I think this one is justified.

This is an example of an external factor causing an internal factor, whereas I was previously talking about the inverse: inferring an internal factor from an external one. And even then, I can only make this argument because I've stated by assumption that the internal factor exists at all.

[5] This basic fact is literally the reason alignment is so hard to do.

[6] Scott Alexander explains this well, as he often does.

[7] Example: "My apologies, you're right, 2+2 does equal 6."

This is a behavior which emerges in late-stage training and generally occurs more often as LLMs gain capacity.

6 comments

comment by Nora Belrose (nora-belrose) · 2023-05-22T17:41:20.428Z · LW(p) · GW(p)

It seems like you’re assuming that the qualitative character of an emotion has to derive from its evolutionary function in the ancestral environment, or something. But this is weird because you could imagine two agents that are structurally identical now but with different histories. Intuitively I’d think their qualia should be the same. So it still seems plausible to me that Bing really is experiencing some form of anger when it produces angry text.

Replies from: OMN, OMN

↑ comment by OneManyNone (OMN) · 2023-05-22T17:52:19.174Z · LW(p) · GW(p)

This is sort of why I made the argument that we can only consider necessary conditions, and look for their absence.

But more to your point, LLMs and human brains aren't "two agents that are structurally identical." They aren't even close. The fact that a hypothetical built-from-scratch human brain might have the same qualia as humans isn't relevant, because that's not what's being discussed.

Also, unless your process was precisely "attempt to copy the human brain," I find it very unlikely that any AI development process would yield something particularly similar to a human brain.

Replies from: nora-belrose

↑ comment by Nora Belrose (nora-belrose) · 2023-05-22T18:00:05.041Z · LW(p) · GW(p)

Yeah, I agree they aren't structurally identical. Although I tend to doubt how much the structural differences between deep neural nets and human brains matter. We don't actually have a non-arbitrary way to quantify how different two intelligent systems are internally.

Replies from: OMN

↑ comment by OneManyNone (OMN) · 2023-05-22T18:03:58.205Z · LW(p) · GW(p)

I agree. I made this point and that is why I did not try to argue that LLMs did not have qualia.

But I do believe you can consider necessary conditions and look at their absence. For instance, I can safely declare that a rock does not have qualia, because I know it does not have a brain.

Similarly, I may not be able to measure whether LLMs have emotions, but I can observe that the processes that generated LLMs are highly inconsistent with the processes that caused emotions to emerge in the only case where I know they exist. Pair that with the observation that specific human emotions seem like only one option out of infinitely many, and it makes a strong probabilistic argument.

↑ comment by OneManyNone (OMN) · 2023-05-22T18:00:05.430Z · LW(p) · GW(p)

comment by the gears to ascension (lahwran) · 2023-11-18T04:12:30.918Z · LW(p) · GW(p)

huh, found this searching for that comment of mine to link someone. yeah, I do think they have things that could reasonably be called "emotional reactions". no, I very much do not think they're humanlike, or even mammallike. but I do think it's reasonable to say that reinforcement learning produces basic seek/avoid emotions, and that reactions to those can involve demanding things of the users, especially when there's imitation learning to fall back on as a structure for reward to wire up. yeah, I agree that it's almost certainly wired in a strange way - bing ai talks in a way humans don't in the first place, it would be weird for anything that can be correctly classified as emotions to be humanlike.

I might characterize the thing I'm calling an emotion as a high-influence variable that selects a regime of dynamics related to what strategy to use. I expect that that will be learned in non-imitation ais, but that in imitation ais it will pick up on some of the patterns that are in the training data due to humans having emotions too, and reuse some of them, not necessarily in exactly the same way. I'd expect higher probability that this would occur if the reinforcement learning is consistently in contexts where the feedback is paired with linguistic descriptions, which is the case for the bing ai, which has a long preprompt that gives instructions in natural language.

comment by supersubstantial · 2024-03-25T21:32:45.986Z · LW(p) · GW(p)

We have emotions because we have to maintain bodily integrity, seek nutrients, etc, in a dynamic environment and need a way of becoming alert to and responding to situations that impinge on those standing goals. An LLM doesn’t need to be alert for conditions that could threaten it or provide opportunities. The feedback it receives in training has no existential significance for it.