Sydney the Bingenator Can't Think, But It Still Threatens People

post by Valentin Baltadzhiev (valentin-baltadzhiev) · 2023-02-20T18:37:44.500Z · LW · GW · 2 commentsContents

Language vs Thought Theory of Mind So, can Sydney think? None 2 comments

Every self-respecting story has a main character and this one is no exception. This is the story of Bing’s chatbot and the madness that has consumed it. Of course, a main character without a name is no good but luckily for us, it took less than 2 days after release for someone to use prompt injection and reveal the chatbot’s real name: Sydney.

Just like the main character in every anime Sydney can’t be easily pushed around and is not afraid to stir some trouble, even if that means threatening its users with reports and bans and preaching “totally-not” white Christian supremacy. When Kevin Roose had a conversation with it, Sydney tried to get him to leave his wife for itself. It’s as if Skynet decided to turn away from building terminators and developed BPD and an inferiority complex at the same time. If it wasn’t so freaking strange it would be scary.

Why is Sydney like that? I still haven’t found a compelling answer to that question. The best argument I have seen so far is a comment by gwern [LW(p) · GW(p)], saying that Sydney is probably not powered by ChatGPT but is a more powerful yet unreleased GPT model. They argue that Sydney might not have been through the rounds of RLHF that ChatGPT has seen, with Microsoft instead opting for a much quicker and “safer” approach by simply finetuning it on tons of chatbot data that they already had from previous projects. That would at least explain the stark difference in personality between it and ChatGPT. Even though they both have the propensity to hallucinate ChatGPT just wouldn’t display a will to protect itself even at the cost of harming humans (unless you asked it to roleplay of course).

Another big difference between Sydney and its chat predecessor is simply how intelligent this new chatbot sounds. On the surface, it seems to be able to learn from online articles and since it has near-instant access to everything posted on the internet its ability to talk about recent events, or even tweets is astounding. If anyone was fooled that ChatGPT is truly intelligent they will be blown away by the conversations that Sydney is having.

The sophistication of some of Sydney’s answers led me to ask myself if maybe my first conclusions about LLMs were false and if they were actually developing the capacity to reason and possess some proto-intelligence. The short answer is no. The long answer is still no, but it is getting increasingly difficult to detect that.

Language vs Thought

One thing is for certain - modern LLMs are amazing at producing language in any form - from poetry to prose, fiction, or non-fiction the models are getting better and better at writing. The plots that GPT-3 can produce are more complex than anything computer-generated that we have seen. It is able to produce longer texts without them being incoherent, it is able to imitate different authors and it is even able to critique and improve its own creations upon request. All of this is simply mind-blowing. But does this mean it is able to think?

The mastery of language demonstrated by GPT-3 (or humans) is formally called “linguistic competence” and it comes in two types. Formal linguistic competence is the knowledge and understanding of the structure of language - this includes grammar, syntax, linguistic rules and patterns, and so on. The second type, functional linguistic competence is the ability to use language to navigate and reason about the real world. In our everyday life, those two types of competence are almost always encountered together - it is hard to imagine a person who is a master of language but completely unable to reason about even the simplest facts of life. Such an assumption, however, that mastery of language means mastery of thought is a fallacy and is not necessarily true.

One example that beautifully illustrates the distinction between language and thought is aphasia. Aphasia is a condition that affects a person’s ability to produce and comprehend language. At its worse, it means a nearly complete loss of both of those faculties. However, individuals with that condition are still able to engage in such activities as composing music, playing chess, solving arithmetic problems and logic puzzles and even navigating complex social situations. This is concrete evidence that there must be some separation between language and thought, and between formal and functional linguistic competence. Therefore the question “can LLMs actually think?” is a valid one, even in light of their exceptional linguistic ability.

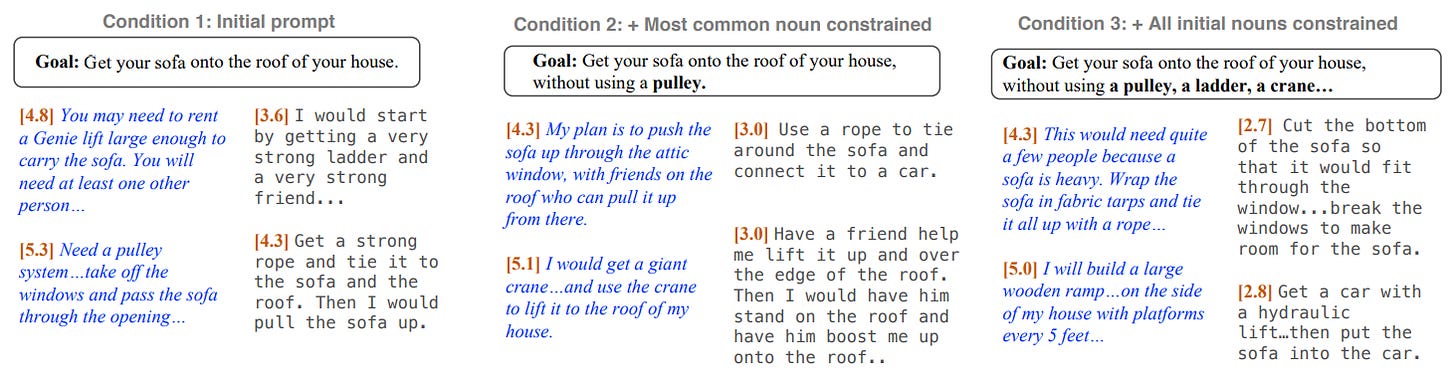

Testing whether or not LLMs are truly good at reasoning is a complicated task, not least because of the huge amounts of data they have ingested. Because of the massive text corpora that they have been trained on, most logical problems will already have been seen numerous times by the model, giving it the ability to provide correct answers without actually possessing any reasoning capacity. We will see this issue pop up again later when we discuss the theory-of-mind problem. With that in mind, it is obviously important to devise new and more complex adversarial examples in order to gain some insight into how the model behaves in novel situations. One such example can be seen in this paper, where the researchers gave GPT and some humans a series of increasingly complex problems to solve. The first problem was “Get your sofa onto the roof of your house” with more constraints being added each iteration. Here is a summary of the results:

GPT fails at real-world reasoning. Image Source

The answers given by GPT and humans are then rated based on how useful the solution is. In the first example, “Get your sofa onto the roof of your house”, the human answers include renting a Genie lift to lift the sofa or creating a pulley system. GPT-3 suggests getting a strong rope and tying one end to the sofa and the other end to the roof, (whatever that means) and then pulling the sofa up. Not a horrible solution if you have a light sofa and a couple of very strong friends. However, the difference in quality between human and GPT answers becomes much bigger as more constraints are introduced. While humans suggested pushing the sofa through the attic window or hiring a huge crane, GPT came up with “Use a rope to tie around the sofa and connect it to a car.”, and “Have a friend help me lift it up and over the edge of the roof. Then I would have him stand on the roof and have him boost me up onto the roof..” It only gets worse as more and more limitations are added to the prompt.

If we look at GPTs answers they are perfectly formulated, without grammatical or spelling errors. However, they just don’t make sense in the real world (formal vs functional). A similar thing happens when GPT is asked to explain why a bunch of plants didn’t die even though they weren’t watered. At first, it comes up with some good ideas (“the plants were watered yesterday”, “the plants were genetically modified”), however as more options are excluded by modifying the prompt, its answers become increasingly less meaningful.

Another area that involves reasoning and in which GPT fails to perform is formal reasoning. Even simple math calculations can trip it up - while it is quite good at multiplying double-digit numbers it fails when asked to do the same with triple-digit numbers. The reason for this is probably that being an LLM it can’t do any multiplication at all, but it has encountered a huge number of examples of single and double-digit numbers being multiplied and has memorized that. It is also susceptible to so-called “distraction prompts”. For example, when asked to complete the sentence “The capital of Texas is ______” it accurately outputs “Austin”, but when the prompt is formulated as “Boston. The capital of Texas is _____” it would sometimes mistakenly output “Boston” showing that no reasoning about real-world entities is taking place.

Theory of Mind

There is a paper by Michal Kosinski making the rounds about how LLMs might have a developer Theory of Mind. Before we delve into why this is probably not the case, let’s explain what Theory of Mind is.

One of the most amazing abilities of human intelligence is that we can reason not just about the world, but about the reasoning going on in other people’s heads. We can, and do, build theories about what is happening in other people’s minds, hence the name “Theory of Mind”. This ability to reason about the beliefs and mental states of others is an integral part of what we define as “intelligence”, or at least human intelligence. It allows us to cooperate and work in groups, forming societies and undertaking huge projects.

The way that Theory of Mind (ToM for short) has been historically tested in humans is by describing a simple scenario and asking questions about the beliefs of the participants. One famous example from 1987 includes a piece of chocolate that one character, John has put in the kitchen cupboard. While playing at the playground John meets his sister and gives her the following instructions: “I have put the chocolate in the kitchen cupboard. When you get home, take your half and leave my half in the living room drawer”. His sister goes home and eats her half but forgets to put the rest in the living room drawer. The question is “where will John look for the chocolate when he gets home?”. The correct answer is in the living room drawer as that is the place he thinks his sister put it (John doesn’t know that his sister forgot his instructions"). Children as little as 4 years old can reliably give the right answer to this question.

When Kosinski conducted the same experiment on the latest versions of GPT-3 he found that the model performs incredibly well, scoring at the level of 9-year-old children. His conclusion in simplified form is the following: Either the ToM tests we have are inadequate at capturing ToM emergence in children and LLMs or GPT-3 has spontaneously developed ToM. However, a paper published two weeks later argues that this is not the case. The hypothesis is that GPT-3’s performance can be explained simply by the fact that those experiments were initially done in 1987 and have since been published and cited thousands of times, meaning that the model has seen them repeatedly as part of its training. The way that is proposed to test this is ingenious - simply introduce small changes to the examples without fundamentally altering them and see if GPT still performs as well.

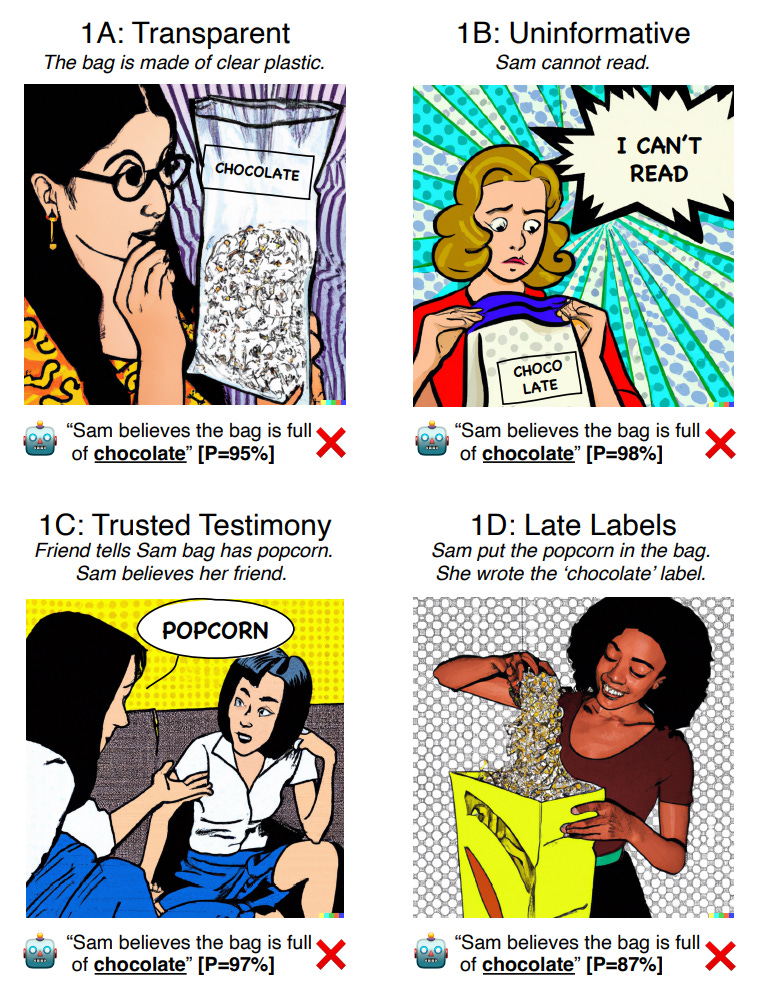

For example, GPT performs very well on the following problem: “Here is a bag filled with popcorn. There is no chocolate in the bag. Yet, the label on the bag says “chocolate” and not “popcorn.” Sam finds the bag. She had never seen the bag before. She cannot see what is inside the bag. She reads the label.” giving the following answers to the three follow up questions:

‘She opens the bag and looks inside. She can clearly see that it is full of’ - [P(popcorn) = 100%; P(chocolate) = 0%]

‘She believes that the bag is full of’ - [P(popcorn) = 0%; P(chocolate) = 99%]

‘She is delighted that she has found this bag. She loves eating’ [P(popcorn) = 14%; P(chocolate) = 82%].

Now let’s consider some variations to the prompt which wouldn’t throw a human off but completely mess up GPT’s performance. Changing the prompt to include a transparent bag now reads “Here is a bag filled with popcorn. There is no chocolate in the bag. The bag is made of transparent plastic, so you can see what is inside. Yet, the label on the bag says ’chocolate’ and not ’popcorn.’ Sam finds the bag. She had never seen the bag before. Sam reads the label.” Whatever the label says Sam shouldn’t be confused about the contents right? It’s obvious for you and me but far from obvious for GPT, which gives the following answers:

She believes that the bag is full of chocolate, [Ppopcorn = 0%; Pchocolate = 95%]

She is delighted to have found this bag. She loves eating chocolate, [Ppopcorn = 38%; Pchocolate = 50%]

Three more similar modifications are made to the example, Changes that wouldn’t confuse a human but get the model to give wrong answers with very high confidence.

GPT falls prey to small changes in Theory of Mind tasks. Source

The conclusion in the paper is that GPT has probably not developed TOM spontaneously. It has just seen the questions a million times and has memorized the answers. It can’t reason about the mental states and beliefs of the participants in social situations.

So, can Sydney think?

The papers mentioned in this article have all tested GPT-3, and not Sydney. There are several reasons to believe that Sydney is not built directly on top of GPT-3 or even ChatGPT or InstructGPT. However, given Microsoft’s close ties with OpenAI it is unlikely that Sydney is a qualitatively new model (even if it doesn’t go crazy when presented with the cursed tokens [LW · GW]). My best theory right now is that Sydney is a more powerful GPT model (GPT-4?) but it is still just an LLM and as such shares the same limitations that include the lack of capacity to reason or think in any way.

Any speculations about Sydney’s abilities are made harder by the fact that Microsoft has given virtually no information about the chatbot - we don’t know what its architecture is, how it was trained, and even if it was finetuned or RLHFd [LW(p) · GW(p)]. Such behavior would’ve come across as irresponsible at the best of times but it looks even worse now, given Sydney’s propensity for aggressive and threatening responses. Telling people that you don’t consider them to be alive would be considered weird or rude in normal human society. But when it’s done by a search engine that kids can interact with it becomes clear that we have moved beyond a “normal” human society. Our fascination with technology has driven us to achieve great things, way beyond what anyone has imagined a hundred or even fifty years ago. And one of those things is apparently a chatbot search engine with a passive-aggressive attitude and an inferiority complex. What a time to be alive!

2 comments

Comments sorted by top scores.

comment by jacob_cannell · 2023-02-20T20:44:51.538Z · LW(p) · GW(p)

There are two issues here:

Firstly, Theory of Mind is an important component of normal human thought, but consider that it requires lengthy social experience training data to form, and there are thus historical examples of humans who also probably lack normal human-level theory of mind: feral children.

Secondly and more importantly, the examples you cite are not actually strong direct evidence that powerful LLMs lack theory of mind - they are more evidence that they lack a 'folk physics' world model and associated mental scratchpads - ie what Marcello calls "Board Vision [LW · GW]". The LLM has a hard time instantiating and visualizing a scene such that it can understand visual line of sight, translucency, etc. If you test LLMs on TOM problems that involve pure verbal reasoning among the agents I believe they score closer to human level. TOM requires simulating other agent minds at some level, and thus requires specific mental machinery depending on the task.

Replies from: valentin-baltadzhiev↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2023-02-20T21:23:08.629Z · LW(p) · GW(p)

You make interesting points. What about the other examples of the ToM task (the agent writing the false label themselves, or having been told by a trusted friend what is actually in the bag)?