Sentience, Sapience, Consciousness & Self-Awareness: Defining Complex Terms

post by LukeOnline · 2021-10-20T13:48:40.304Z · 8 comments

The terms in the title are commonly used in crucial debates surrounding morality & AI. Yet, I feel like there is no clear consensus about the meaning of those terms. The words are often used interchangeably, causing people to think they are all the same or very closely related. I believe they're not. Clearly separating these terms makes it a lot easier to conceptualize a larger "spectrum of consciousness".

Disclaimer: I expect some people to be upset with me for 'taking' terms and changing their definitions. Feel free to propose different terms for the concepts below!

Consciousness

"Consciousness" is often taken to mean "what we are". "Our" voice in our heads, the "soul". I propose a more limited definition. A conscious entity is a system with an "internal observer". At this very moment, these words are being read. Hello, 'observer'! You probably have eyes. Focus on something. There is an image in your mind. Take the very core of that: not the intellectual observations connected to it, not the feelings associated with it, just the fact that a mental image exists. I think that is the unique ability of a conscious individual or system.

Sentience

Wikipedia claims that consciousness is sentience. Wiktionary has a definition for sentient that includes human-like awareness and intelligence. Once again, I propose a more limited definition. A sentient entity is a system that can experience feelings, like pleasure and pain. Consciousness is a prerequisite: without an internal observer, there is nothing to experience these feelings.

I believe sentience is the bedrock of morality. Standing on a rock probably doesn't generate any observations - certainly not pleasant or unpleasant ones. Standing on a cat seems to produce deeply unpleasant feelings for the cat. Defining morality as a system that tries to generate long-term positive experiences and to reduce negative experiences seems to work pretty well. In that case, standing on cats is not recommended.

In these terms, consciousness is not the threshold of morality. Perhaps we discover that rocks are conscious. When we stand on them, we slightly compress their structure and rocks somehow hold an internal observer that is aware of that. But it doesn't have any feelings associated with that. It doesn't experience pain or joy or fear or love. It literally doesn't care what you do to it. It would be strange, it would overhaul our understanding of consciousness, it would redefine pet rocks - but it doesn't make it immoral for us to stand on rocks.

Self-Awareness

Self-awareness is often seen as a big and crucial thing. Google "When computers become", and "self aware" is the second suggestion. I believe self-awareness is vague and relatively unimportant. Does it mean "knowing you're an entity separate from the rest of the world"? I think self-driving cars can check that box. Do you check that box when you recognize yourself in the mirror? Or do you need deep existential thought and thorough knowledge of your subconsciousness and your relationship to the world and its history? In that case, many humans would fail that test.

I believe self-awareness is a spectrum with many, many degrees. It's significantly correlated with intelligence, but not strongly or necessarily. Squirrels perform highly impressive calculations to navigate their bodies through the air, but these don't seem to be "aware calculations", and squirrels don't seem exceptionally self-aware.

Sapience

According to Wikipedia:

> Wisdom, sapience, or sagacity is the ability to contemplate and act using knowledge, experience, understanding, common sense and insight.
>
> Sapience is closely related to the term "sophia" often defined as "transcendent wisdom", "ultimate reality", or the ultimate truth of things. Sapiential perspective of wisdom is said to lie in the heart of every religion, where it is often acquired through intuitive knowing. This type of wisdom is described as going beyond mere practical wisdom and includes self-knowledge, interconnectedness, conditioned origination of mind-states and other deeper understandings of subjective experience. This type of wisdom can also lead to the ability of an individual to act with appropriate judgement, a broad understanding of situations and greater appreciation/compassion towards other living beings.

I find sapience to be much more interesting than self-awareness. Wikipedia has rather high ambitions for the term, and once again I propose a more limited definition. Biologically, we are all classified as Homo sapiens. So it makes sense to me that "sapience" is the ability to understand and act with roughly human-level intelligence.

Here is a fascinating article about tricking GPT-3. It includes some very simple instructions, like "do not use a list format" and "use three periods rather than single periods after each sentence", which are completely ignored in GPT-3's answer. GPT-3 copies the format of the text and elaborates in a similar style - exactly the thing the instructions told it not to do.

Human children can easily follow such instructions. AIs can't, nor can animals like cats and dogs. Some animals seem to be able to transmit some forms of information, but this seems to be quite limited to things like "food / danger over there". As far as I know, no animal builds up a complex, abstract model of the world and intimately shares that with others (exactly the thing we're doing right now).

On the other hand: lots of animals do clearly build up complex models of the world in their minds. The ability to communicate them mainly seems to rely on language comprehension. Language is tremendously powerful, but should it be the threshold of 'sapience'?

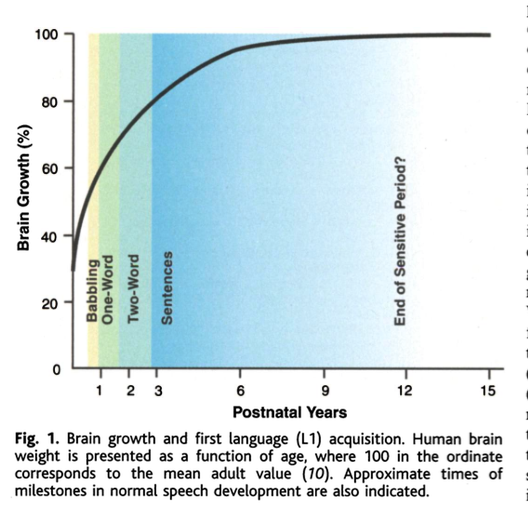

Language acquisition seems to be nearly binary. Children learn to speak rather quickly. Among healthy eight-year-old children, there is no separate category for children who speak "Part A" of English but not "Part B".

It seems like raw human brain power + basic language skills = easy access to near infinite complex abstract models. And indeed, many concepts seem to be easily taught to a speaking child. "Store shoes there", "don't touch fire", "wear a jacket when it's cold outside".

Simultaneously, relatively simple things can quickly become too complex for regular humans to communicate and handle. Take COVID for example. The virus itself is relatively simple - exponential growth is not a new concept. Yet, our societies had and have a hard time properly communicating about it. We started mostly with fear and panic, and then transitioned to politicizing things like facemasks and vaccines, turning things into a predictable Greens vs Blues mess.

I can imagine beings with a communication method that comes as naturally to them as talking about the weather comes to us, who can easily talk about subjects like those above, whose civilizations quickly and painlessly coordinate around COVID, and whose Twelfth Virtue is not "nameless" / "the void". To those beings, humans might barely seem sapient, like bees and ants barely seem sapient to us.

So my definition of sapience would be something like the ability to function with broad, diverse and complex models of the world, and to appropriately share these models with others. It's technically a spectrum, but basic language ability seems to be a massive jump upwards here.

The philosophical zombie is sapient, but not conscious. Self-awareness does seem to be fundamentally connected to sapience. Any functional model of the world requires the ability to "model yourself". Proper sapience also seems to require the ability to be "receptive" towards the concept of self-awareness.
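The dependencies stated so far can be sketched as a small enumeration. This is only an illustration under simplifying assumptions: each property is treated as a yes/no flag (the post itself describes self-awareness and sapience as spectra), and the names `PROPERTIES`, `is_possible` and `possible_combinations` are hypothetical, made up for this sketch. Only the two requirements stated in the text are encoded: sentience requires consciousness, and sapience requires self-awareness.

```python
from itertools import product

# The four properties discussed in the post, simplified to booleans.
PROPERTIES = ("conscious", "sentient", "self_aware", "sapient")


def is_possible(conscious, sentient, self_aware, sapient):
    """Return True if the combination respects the two stated dependencies."""
    # Sentience needs an internal observer to experience the feelings.
    if sentient and not conscious:
        return False
    # Sapience is taken to require the ability to model yourself.
    if sapient and not self_aware:
        return False
    return True


def possible_combinations():
    """List every combination of the four flags that is not ruled out."""
    return [
        dict(zip(PROPERTIES, values))
        for values in product((False, True), repeat=len(PROPERTIES))
        if is_possible(*values)
    ]


if __name__ == "__main__":
    for combo in possible_combinations():
        present = [name for name, flag in combo.items() if flag]
        print(", ".join(present) or "none of the four")
```

Under these assumptions the philosophical zombie (sapient and self-aware, but not conscious) stays in the list, as does the hypothetical conscious rock (conscious, but nothing else), while "sentient but not conscious" is excluded.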

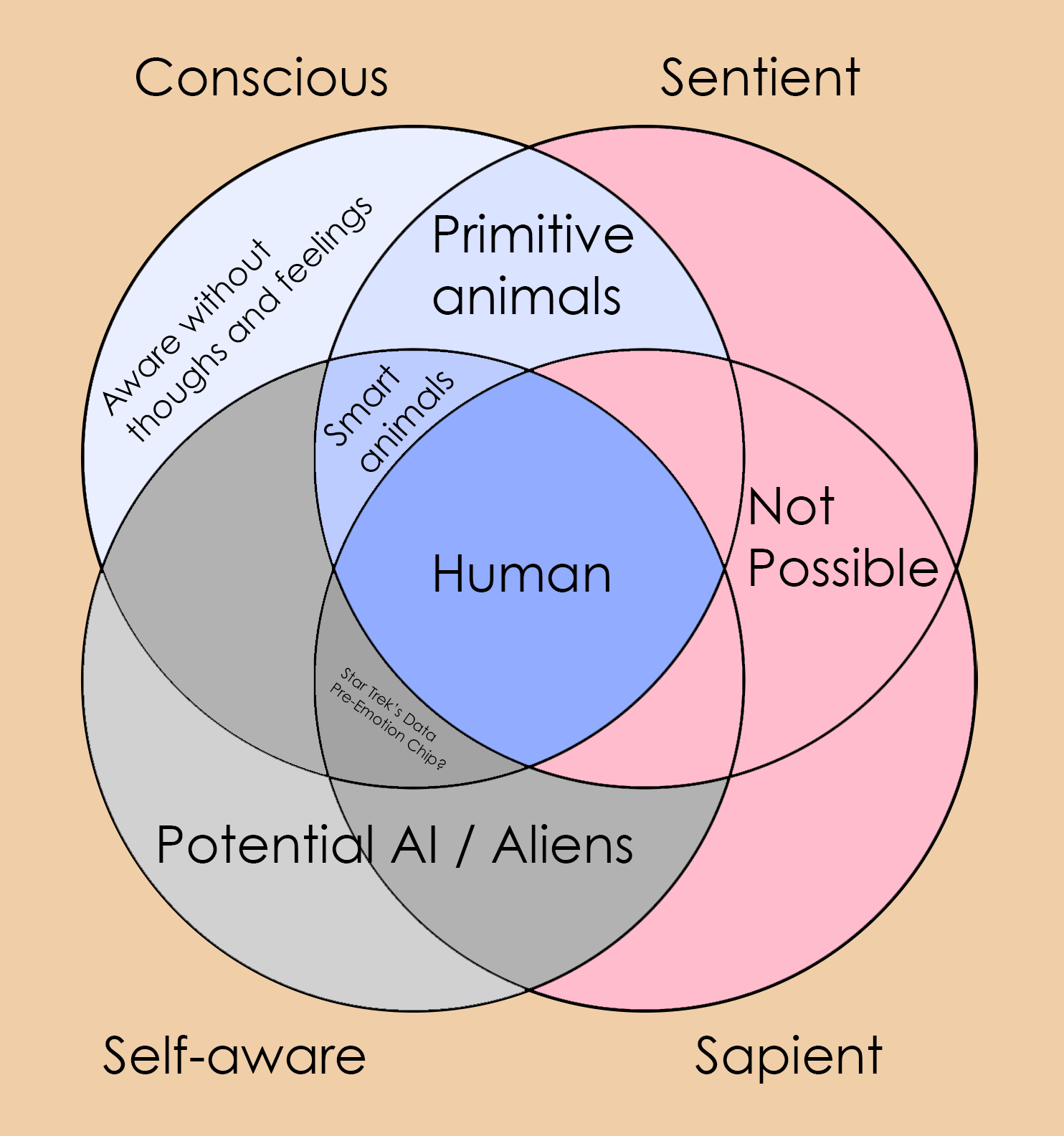

I think this results in the following map of possibilities:

I believe these definitions make discussing related subjects a lot more fruitful. I'd love to hear your opinion about it!

8 comments

comment by noggin-scratcher · 2021-10-21T11:35:15.105Z

I find it aesthetically bothersome to have a Venn diagram where so many of the sections are marked "not possible" - further elaboration on why not might be worthwhile.

I take your point that "has feelings about things" seems to presuppose an inner observer to have those feelings, and probably that "has a good enough world model to act intelligently" presupposes including your self as an important part of that model. Not 100% committed to that second one (are we sure it's inconceivable for an entity to be "clever" without self-awareness?), but I'll go with it.

But if they're supposed to be subcategories included within each other, I feel like the diagram should represent that. Like this perhaps: https://i.imgur.com/5yAhnJg.png

Reply from LukeOnline · 2021-10-21T19:18:04.496Z

I really like your version of the Venn diagram! I've never seen one like that before, but it makes a lot of sense.

I could indeed imagine an intelligent being that is somehow totally barred from self-knowledge, but that is a very flawed form of sapience, in my opinion.

comment by Ruby · 2021-10-20T18:15:53.469Z

Nice job distinguishing all these different dimensions.

My first inclination when encountering a variety of words is to break out the Human's Guide to Words toolbox. I'm pessimistic about establishing any robust consensus of our usage, but maybe in each debate you can get people to make clear predictions and gain clarity that way.

comment by Ben Smith (ben-smith) · 2023-12-17T00:59:38.778Z

I tried a similar Venn diagram approach more recently. I didn't really distinguish between bare "consciousness" and "sentience". I'm still not sure if I agree "aware without thoughts and feelings" is meaningful. I think awareness might always be awareness of something. But nevertheless they are at least distinct concepts and they can be conceptually separated! Otherwise my model echoes the one you created earlier.

https://www.lesswrong.com/posts/W5bP5HDLY4deLgrpb/the-intelligence-sentience-orthogonality-thesis

I think it's a really interesting question as to whether you can have sentience and sapience but not self-awareness. I wouldn't take a view either way. I sort of speculated that perhaps primitive animals like shrimp might fit into that category.

comment by JBlack · 2021-10-22T01:40:04.033Z

I'm not sure why your Venn diagram doesn't have "sentient" lying entirely within "conscious", given that your definitions have the latter as a logical requirement for the former. Part of your pink impossible area seems to exclude that, but that pink area also includes other things that you say are impossible without giving any reason in the text.

Why is sapience without sentience or self-awareness marked "impossible"? It seems plausible for a computer program to be able to receive, process, and send complex world models without being able to model itself in them. GPT-like systems (now or in the future) may well fall into this category. Even if such a system can model "GPT-like systems", there's probably not yet any "self" concept to be able to apply models like "the output I am about to produce will be the output of a GPT-like system".

The remaining "impossibility" of sapience and sentience without self-awareness does seem implausible, but I don't know that it's logically impossible. It would take some pretty major blind spot in what sort of models you can form to be able to experience sensations, be able to form complex models, and yet not be able to model self in many ways.

It may be that sentience is literally just the intersection of self-awareness and consciousness, or that both of these may collapse into just a scale of self-awareness. Consciousness may be nothing more or less than self-awareness. Maybe pZombies can't exist, even though we can imagine such things.

Reply from LukeOnline · 2021-10-22T11:54:02.415Z

> I'm not sure why your Venn diagram doesn't have "sentient" lying entirely within "conscious"

Noggin-scratcher made a much improved version of my diagram that does exactly what you suggest: https://i.imgur.com/5yAhnJg.png

I prefer that one!

> Why is sapience without sentience or self-awareness marked "impossible"?

In my opinion, a sapient entity is an entity that possesses something like "general purpose intelligence". Wouldn't such an entity quickly realize that it's a distinct entity? That would mean it's self-aware.

Maybe it doesn't realize it at first, but when you communicate with it, you should be able to "make it self-aware" quite quickly. If it's unable to become self-aware, I wouldn't assign the entity "human level sapience".

I do agree that I can imagine a very intelligent machine that is completely unable to gather information about its own status. Edge case!

> It may be that sentience is literally just the intersection of self-awareness and consciousness

Using the definition proposed in the article, I can easily imagine an entity with an "internal observer" which has full knowledge of its own conditions, still without the ability to feel pleasure or pain.

> Consciousness may be nothing more or less than self-awareness.

How would you define self-awareness?

Reply from JBlack · 2021-10-23T11:20:51.720Z

> I do agree that I can imagine a very intelligent machine that is completely unable to gather information about its own status. Edge case!

I think it's less edge than it might at first seem. Even far more complex and powerful GPT-like models may be incapable of self-awareness, and we may deliberately create such systems for AI safety reasons.

> Using the definition proposed in the article, I can easily imagine an entity with an "internal observer" which has full knowledge of its own conditions, still without the ability to feel pleasure or pain.

I think it would have to never have any preferences for its experiences (or anticipated future experiences), not just pleasure or pain. It is conceivable though, so I agree that my supposition misses the mark.

> How would you define self-awareness?

As in the post, self-awareness to me seems vaguely defined. I tend to think of both self-awareness and sapience as being in principle more like scales of many factors than binary categories.

In practice we can easily identify capabilities that separate us from animals in reasoning, communicating, and modelling, and proudly proclaim ourselves to be sapient where all the rest aren't. This seems pretty clear cut, but various computer systems seem to be crossing the animal-human gap in some aspects of "sapience" and have already surpassed humans in some others.

For self-awareness it seems harder to find a clear boundary, and scales really seem more appropriate here. There are various "self-aware" behaviour clues, and they seem to cover a range of different capabilities. In general I'd probably call it being able to model one's own actions, experiences, and capabilities in some manner, and to update those models.

Cats seem to be above whatever subjective "boundary" I have in mind, even though most of them fail the mirror test. I suspect the mirror test is further toward "sapience", such as being able to form a new model that predicts the actions of the reflection perfectly in terms of their own actions as viewed from the outside. I suspect that cats do have a pretty decently detailed self-model, but mostly can't do that last rather complex transformation to match up the outside view to their internal model.

comment by アlex (lex-2) · 2023-03-28T23:13:59.766Z

If you really wanted to get ontological you could potentially expand those "Not Possible" blank spots. For example temporality (living/dead state for beings), NPCs, P-Zombies, etc.

Not to mention all the a priori ontological presuppositions that are not universally justified: all humans have souls/minds, these souls/minds operate similarly/rationally, innate self-awareness, inner-voice, etc.

These universally egalitarian assumptions about humanity's qualities, against which we measure other beings, may be what is leading to such confusion over our own human condition. We end up speaking past each other to preserve a relatively modern arbitrary value, Human Egalitarianism, at the expense of the Natural unequal truth. Perhaps when the Materialist says he doesn't have a soul and is just chemical reactions, the Theist should honor his words; inversely, the Materialist should respect that he probably can't ever epistemically comprehend metaphysical things for which he has no innate capacity (like an ant trying to understand the mechanics and meaning of prayer or painting).

In the past, Ontological Morality was more apparent in society when we acknowledged the existence of Saints and Sinners. But we stopped doing that because it was too offensive to say someone was good or evil, or had a soul or was soulless. It was considered offensive and old-fashioned to illiberally judge. In that secularization, we lost a lot of the distinctions in being human and being in general.