A Defense of Peer Review

Post by Niko_McCarty (niko-2), delton137 · 2024-10-22. This is a link post for https://www.asimov.press/p/peer-review

Contents

- A Brief History
- Peer Review and Errors
- Peer Review and Scientific Value
- Peer Review and Science Writing
- Peer Review and Bold Ideas
- Peer Review and New Researchers
- Peer Review and Prestige
- Where Do We Go from Here?

This article by Dan Elton (moreisdifferent.blog) was published by Asimov Press.

“I think peer review is, like democracy, bad, but better than anything else."

— Timothy Bates, University of Edinburgh

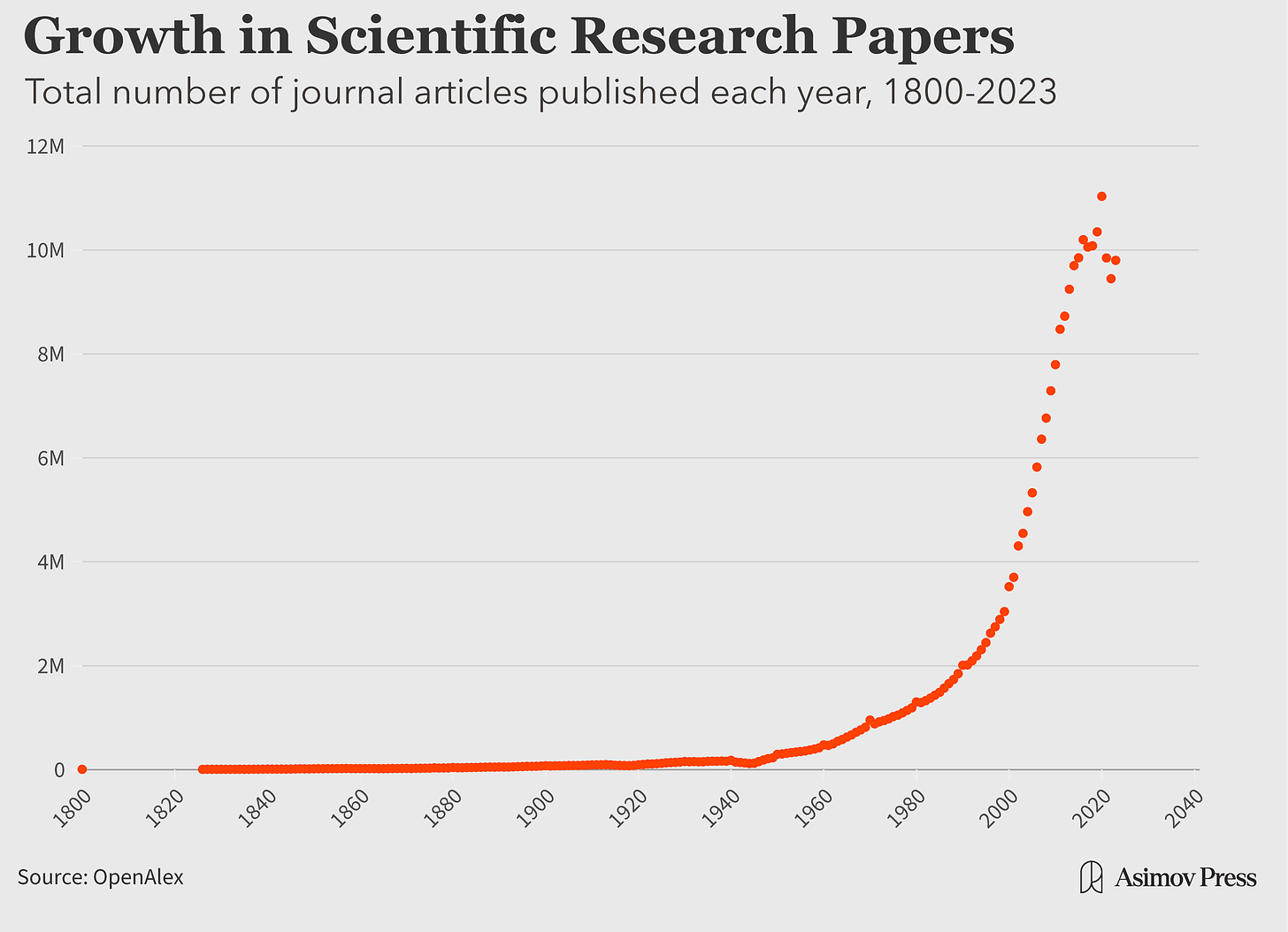

I used to see peer review as primarily good: an important gatekeeping process, essential for protecting capital “S” Science from all manner of quackery and nonsense. Looking back, I was clearly naive; plenty of junk science gets published in top journals despite the supposed guardrails of peer review. Given the replication crisis, an exponentially growing deluge of low-quality or fraudulent work, and growing evidence that science is stagnating, some have begun to ask whether we should get rid of peer review altogether and try something different.

One prominent essay arguing forcefully against peer review is “The Rise and Fall of Peer Review,” by Adam Mastroianni. In it, Mastroianni calls peer review a “failed experiment” and concludes that the best path forward is to do away with it altogether. He argues that peer review doesn’t “do the thing it’s supposed to do,” namely “catch bad research and prevent it from being published,” so perhaps scientists would be better off uploading PDFs to the internet, where review and oversight can happen post-publication.1 Mastroianni isn’t exactly sure what a post-peer-review world would look like, but in follow-up essays he encourages experimentation. Similar sentiments have been expressed elsewhere, for instance by Brandon Stell, the founder of PubPeer, a website where anyone can leave comments about academic articles.

My experience at the receiving end of peer review has been positive; I’ve co-authored 42 peer-reviewed articles, mostly in the fields of physics and artificial intelligence for healthcare. But despite my good track record with peer review, it is clear that the existing system has serious flaws. We have all heard stories of high-quality work getting rejected while mediocre or error-laden work slips through (numerous high-profile fraud cases come to mind). It’s also undeniable that we lack enough data to say whether we get sufficient returns from peer review relative to the enormous amounts of time, resources, and energy we invest in it. Overall, issues such as these are why I found — and continue to find — many of Mastroianni’s arguments compelling.

Still, shortly after I read Mastroianni’s article, Ben Recht asked on Twitter whether there were any articles presenting “a positive case for peer review.” And while many individuals responded to Mastroianni, and a smattering of defenses of peer review have been published, I found it difficult to locate a comprehensive steelman of the practice. This article attempts to fill that lacuna.

A Brief History

The writer G.K. Chesterton famously said that before you tear down a fence, you should understand why it was put up in the first place. In that spirit, let’s explore the history of peer review.

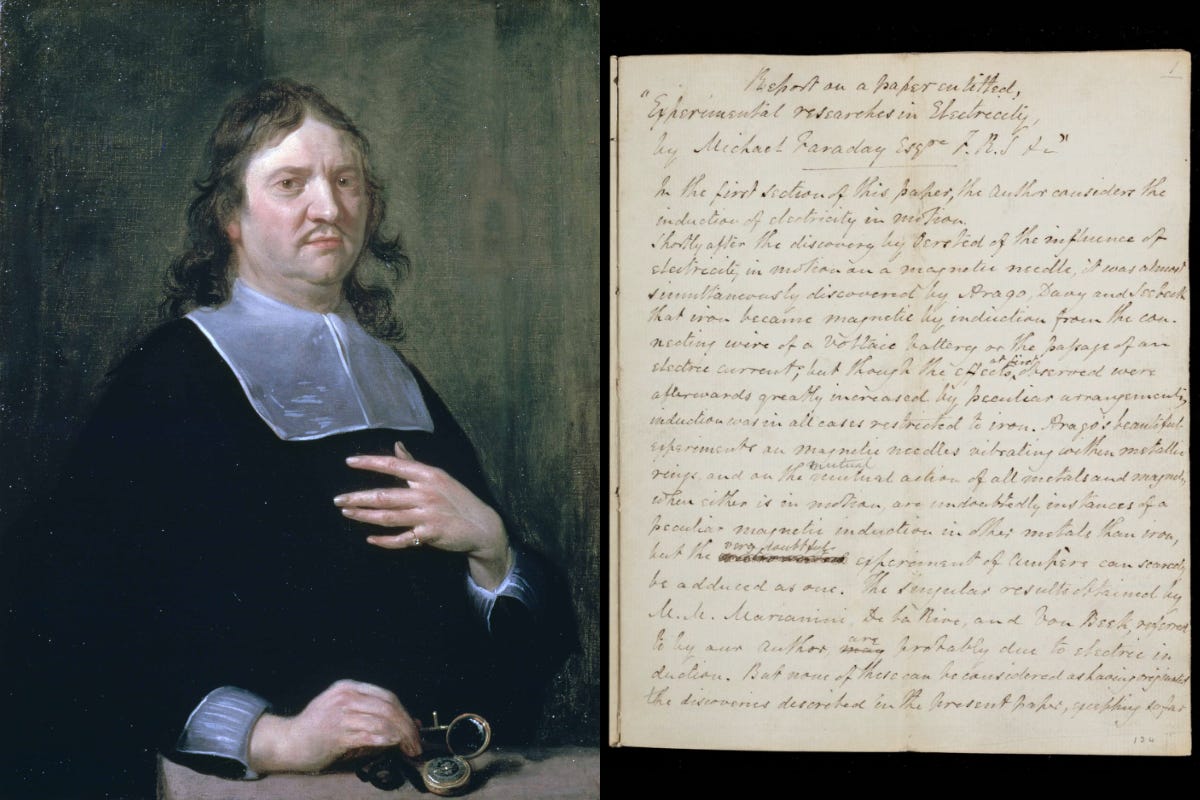

The roots of the practice can be traced back to the very first scientific journal, Philosophical Transactions, founded in 1665. Even in those days, a single editor was not equipped to judge every submission. The solution the editor of Philosophical Transactions arrived at was to send submissions to the Council of the Royal Society of London, whose members would read each paper and then vote on whether it was worthy of publication. As research became more diverse and specialized, journal editors found it helpful to send submissions to relevant experts for review.

While not uncommon in the 18th and 19th centuries, peer review did not become mainstream until the 1950s. In fact, Nature did not require peer review until as late as 1973, and The Lancet not until 1976. What Mastroianni rightly calls the “rise of peer review” took place between 1950 and 1970. As he describes it, this rise was driven by large government agencies such as the National Science Foundation (NSF), which was established in 1950. Between 1948 and 1953, government funding for scientific work increased 25-fold. In response to this influx, the number of scientists (and thus the number of scientific publications) exploded.2 Unable to keep pace, editors increasingly needed to send articles out to peer reviewers for help with editorial decisions.

Political conservatives and fiscal hawks were another decisive factor in establishing the peer review system as a form of “policing” for the scientific community. Throughout the 1960s and 1970s, the NSF’s spending of taxpayer money attracted more and more scrutiny from Congress, especially as fears of falling behind the Soviet Union began to subside. When the NSF was first founded, final decisions about whether to fund grant proposals often lay in the hands of NSF leadership. When a proposal was denied, no explanation was given, so there was little transparency into the process.

Members of Congress accused the NSF of being a “system protected by a cloak of secrecy” that spent taxpayer money on research of questionable value or programs that went against conservative Christian principles. Some politicians, such as Rep. John Conlan of Arizona, pushed for Congress to have direct control over what was funded, arguing that “the [NSF] is, after all, a creature of Congress, and it must be accountable to the Congress and to the taxpayers who support its operations.” Ultimately, a series of Congressional hearings in 1975 helped solidify a system in which researchers within a field would be designated as “peer reviewers,” deciding which scientific proposals deserved funding. This development, according to historian of science Melinda Baldwin, contributed to the rise of journal peer review as a core institutional practice in scholarly publishing.3

For peer review to actually work as intended, reviewers must be able to call out sloppy work and recommend rejection for those articles that do not meet the scientific standards of a journal. To allow reviewers to be critical without fear of repercussion, many journals switched to blinded peer review in the 1960s, wherein the reviewer’s identity was kept secret. Later, between 1970 and 1990, journals started experimenting with double-blind review, wherein the identities of both the authors and the reviewers are kept from one another. Almost all journals today are single-blinded, with a small fraction being double-blinded, or at least offering an option for a double-blind review.

Typically, scientific publication is a three-step process that takes anywhere from three months to a year. First, an editor evaluates submitted work and decides whether to send it out to reviewers or to reject it “at the desk.” Most rejections happen at this stage. Promising articles are sent out for review, and at least one round of back-and-forth takes place between the authors and reviewers. Finally, the editor decides whether to publish the manuscript based on their own judgment and feedback from the reviewers. If a paper is accepted, editorial staff at the journal often perform final quality checks and light copyediting.

As scrupulous as the peer review process may seem, it has flaws serious enough to attract a handful of major criticisms. The most common is the arbitrariness of the process: many important, high-quality works are rejected, while low-quality work gets through. Another is that peer review stifles bold thinking and is biased against paradigm-shifting work. Then there is the claim that peer review doesn’t work well enough to be worth the time, energy, and publication delays it causes.4 Finally, many scientists dislike having to “write for the reviewers” (and for journal style guidelines), contending that this “straitjackets” writing styles and sterilizes the scientific literature.

By examining each of these critiques in turn, we can gain a greater understanding of the role peer review plays in producing and disseminating scientific knowledge. Only then can we suggest reasonable changes to the current system.

Peer Review and Errors

People on social media love pointing out obvious errors that have slipped through peer review. Less visible, however, are the errors that were caught. To figure out how good a job peer review does, researchers have conducted studies in which errors were deliberately introduced into submitted manuscripts.

Four independent studies found that reviewers caught 25, 15, 29, and 34 percent of such errors. These numbers are quite low and do not inspire confidence. However, I think caution is warranted before drawing sweeping conclusions from these studies, because all of them were conducted within a single decade (1998 to 2008) at just two medical journals (the British Medical Journal and the Annals of Emergency Medicine).

It’s shocking that taxpayers spend billions of dollars annually on peer review despite our barely knowing how well it works. The only studies on the error-catching ability of reviewers I could find were from medical journals, but there is good reason to believe that peer review differs greatly between fields.

For example, one might argue that peer review is worse in medicine because reviews are largely performed by overworked doctors with limited time. Additionally, some evidence suggests that articles receive more thorough reviews at top journals, compared to lower-tier journals, but differences are modest and highly variable.

Not only do we lack studies on how many errors slip through peer review, but we also lack insight into the downstream effects of such sloppiness. Are these errors largely inconsequential, or do some carry forward, disrupting future research for years or even decades? For example, it recently came to light that several high-profile cases of fraud in Alzheimer’s research have shifted hundreds of millions of dollars in funding and years of research effort in unproductive directions. In another instance, a detailed analysis carried out by Science shows how research on the fake drug Cerebrolysin became much more popular after a series of fraudulent papers were published by a relatively famous neuroscientist.

When fraudulent papers are published, current post-publication error correction and retraction processes happen far too slowly to serve as effective damage control. All in all, these considerations should give us pause. It could be that catching 25 percent of errors has such a significant downstream positive effect that the costs of peer review are justified, or it might barely budge the needle. We just don’t know.

Peer Review and Scientific Value

“The referee is the lynchpin about which the whole business of Science is pivoted. His job is simply to report, as an expert, on the value of a paper submitted to a journal.” — John M. Ziman, 1968

It’s not enough for a scientific paper to be free from errors; it should also advance scientific knowledge. This criterion matters most when the authors are trying to publish in a top journal. Ideally, ground-breaking, high-quality work would always make it into top journals. However, studies show that peer reviewers differ greatly on whether they think articles are worthy of publication.

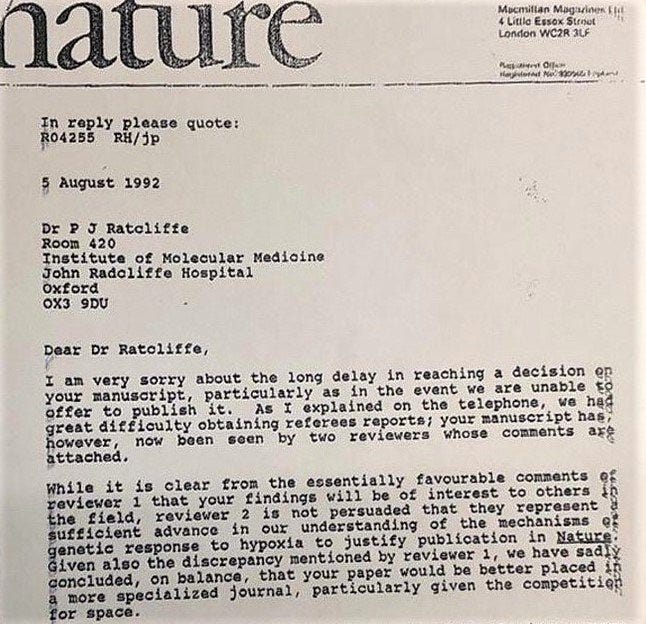

Many scientists have experienced their work being underappreciated or misunderstood by reviewers, which naturally leads them to criticize the capriciousness of the process. And many examples exist of important work getting rejected from top journals. Kary Mullis’s Nobel Prize-winning discovery of the polymerase chain reaction was rejected by both Science and Nature before being published in the journal Methods in Enzymology. (However, it’s worth pointing out that ground-breaking work is almost always published somewhere, albeit often with a year or more delay.)

In one widely cited study, researchers resubmitted papers to psychology journals and found that re-acceptance was essentially a coin flip (no greater than a 50 percent chance). An experiment at the prestigious NeurIPS AI conference found that when the same submissions were reviewed independently, the decisions matched about 75 percent of the time. In general, studies show that the correlation between reviewers is low, although significantly greater than random chance. Other studies found that, when two reviewers were asked to review the same manuscript, they agreed on their accept/reject decision 45, 55, 60, 74, and 75 percent of the time.
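Raw agreement rates like these are hard to interpret without a baseline, because two reviewers who each reject most papers will often agree by chance alone. One standard correction is Cohen's kappa, which compares observed agreement with the agreement expected if the reviewers decided independently. The sketch below is purely illustrative: the 30 percent acceptance rate is a hypothetical assumption, not a figure from the studies cited above, and the function names are hypothetical helpers.

```python
def chance_agreement(p_accept_a: float, p_accept_b: float) -> float:
    """Agreement expected if two reviewers decide independently.

    They agree when both recommend acceptance or both recommend rejection.
    """
    return p_accept_a * p_accept_b + (1 - p_accept_a) * (1 - p_accept_b)


def cohens_kappa(observed: float, expected: float) -> float:
    """Cohen's kappa: 0 means chance-level agreement, 1 means perfect agreement."""
    return (observed - expected) / (1 - expected)


# Hypothetical assumption: each reviewer recommends acceptance for 30% of manuscripts.
expected = chance_agreement(0.30, 0.30)  # 0.3*0.3 + 0.7*0.7 = 0.58
kappa = cohens_kappa(0.74, expected)     # observed agreement of 74 percent
print(f"chance agreement {expected:.2f}, kappa {kappa:.2f}")
```

Under this assumption, two reviewers would agree 58 percent of the time by chance, so a raw agreement of 74 percent corresponds to a kappa of about 0.38: clearly above chance, yet far from perfect agreement.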

Knowing that perceived importance can make or break a paper, authors tend to overplay the implications of their findings, especially when the work itself is lackluster and of marginal value. Spencer Greenberg coined the term “importance hacking” for this phenomenon, which he defines as overinflating the importance of one’s results so that peer reviewers and editors become convinced the work should be published. (Fully competent reviewers would reject the work despite the inflated rhetoric, but less incisive reviewers can be tricked.)

I’m not sure it’s fair to say that peer review incentivizes importance hacking. Rather, it seems that it is the editor's "desk rejection" step — and not reviews from other scientists in one’s field — that induces authors to “hype” their results. As the researcher Merrick Pierson Smela says, “there's a bit of a balancing act” in publishing, where scientists try to make their results sound interesting to get past the editor's desk while avoiding claims that reviewers will later shoot down.

Importance hacking, it seems, also stems from serious problems in contemporary scientific culture, such as declining standards for rigor and scientific integrity and the intense pressure to “publish or perish.” Under a system in which scientists are incentivized to “publish PDFs on the internet,” it’s quite possible that the problem of importance hacking would grow. Depending on how open science publishing platforms were implemented, they might result in the most important and valuable work being read and discussed, or they could easily default to the norm online, where showy, exciting-sounding, and fear-inducing ideas would garner the most attention. If it is the latter, then it could create a feedback loop and “race to the bottom” effect, leading to declining objectivity and intellectual honesty.

Peer Review and Science Writing

“ … vivid phrases and literary elegances are frowned upon; they smell of bogus rhetoric, or an appeal to the emotions rather than to reason. Public knowledge can make its way in the world in sober puritan garb; it needs no peacock feathers to cut a dash in.” — John M. Ziman, 1968

It frustrates Mastroianni that journals don’t allow things like self-deprecating jokes or fun stories. This makes sense, given his background as a comedian. And he is not alone in this view. Roger’s Bacon argues that peer review has led to a loss of artistic style in research articles, to science’s detriment.

What is overlooked here, however, is that artistic phrasing, jokes, and stories can easily detract from an article’s objectivity. After all, most people do not read scientific papers for entertainment, but to gain scientific knowledge. Clarity, precision, concision, and objectivity aid in this process. Jokes and fancy writing may have a place in rare instances, but they tend to worsen scientific communication along these dimensions.

The expectations for scientific communication have been informally agreed upon over the years. Most are basic: papers should primarily report new findings, and authors should use standard notation, terminology, and units of measurement. Scientists usually write in the third person, clearly distinguish statements of fact from speculation, and avoid taking undue credit by citing all relevant predecessors (though, often, this doesn’t happen).

In my own experience with peer review, most of the changes reviewers requested had to do with improving the writing and presentation of ideas. Poorly communicated papers take longer to read and can cause confusion. Making papers easy to read and understand before publication seems like the correct approach. Otherwise, one would have to trust that people on the internet will leave comments asking for clarification and that authors will then follow through. Expecting scientists to improve their manuscripts after publication seems naive, especially in today’s academic climate, where the focus is always on pushing out the next publication to bulk up one’s CV.

There is some evidence that peer review improves both communication standards and related issues of quality. A 2008 survey of 3,040 scientists found that 90 percent agreed that peer review improved the quality of their work, and the most cited area of improvement was writing and presentation. Researchers who compared preprints with peer-reviewed versions found evidence that the peer-reviewed versions had clearer abstracts and better reporting of methods (as measured on a five-point rating scale).

A 1994 study in the Annals of Internal Medicine found that peer review improved 34 out of 35 measures of quality (mainly having to do with communication, statistical methods, and reporting for reproducibility). Interestingly, the study found that peer review most improved the authors’ discussions of their work’s limitations and implications. This is a strong indication that peer reviewers push back against the aforementioned signs of importance hacking: inflated importance and a weak treatment of limitations.5

Another measure of quality most improved by peer review, according to the 1994 study, was the use of confidence intervals or error bars on data. There is an adage from physics that states: “A measurement without an uncertainty is meaningless.” A professional physicist would never be caught reporting an experimental measurement without an uncertainty attached. Unfortunately, other fields do not value uncertainty quantification as strongly. Peer review is a way of enforcing essential standards like this when they are not already baked into the culture of a field.

Peer Review and Bold Ideas

Critics of peer review argue that it is inherently biased against people with big, bold, paradigm-shifting ideas. However, most big, bold, paradigm-shifting ideas are wrong. Experts in a particular field are the best suited to distinguish which radical, attention-grabbing claims have a chance of being true.

Remember LK-99, the putative room-temperature superconductor? Well, that episode was initially touted as a success of the “publish PDFs on the internet” model and of “open science.” However, this was not the case. From day one, experts almost universally labeled LK-99 as junk science. They pointed to the physical implausibility of the findings, the author’s suspicious website, similar claims made in the past that didn’t pan out, anomalies in the data, and obvious scientific errors sprinkled throughout the text. Even so, LK-99 managed to garner a huge amount of attention on Twitter.6

Many scientists tried to capitalize on the LK-99 hype by publishing papers on LK-99, hoping to bring attention to their labs and gain easy citations. Numerous labs stopped what they were doing to synthesize and characterize their own samples. Theorists started running quantum simulations and publishing elaborate theories on how LK-99 might work.

Now, one can argue that it was worth investigating LK-99 given the potential upside if it was, in fact, a room-temperature superconductor. Still, claiming the LK-99 episode as a success story strikes me as misguided. Enormous amounts of resources were wasted chasing LK-99 that could have been saved by heeding a council of experts.

Another example of an erroneous “big idea” concerns data reported in 2011 by researchers at CERN, purporting to show neutrinos (a kind of subatomic particle) traveling faster than light. The finding led to hundreds of theory papers attempting to explain the result, in addition to about twenty follow-up experiments. In the end, the CERN researchers retracted their claim after discovering a loose cable was causing faulty measurements.

In 2014, researchers prematurely announced that they had detected “echoes” of gravitational waves from the Big Bang. These findings, which were later shown to be false, influenced hiring decisions, paper publication, grant applications, and even “the government planning of large-scale projects,” according to the theoretical physicist Paul Steinhardt.7

The aforementioned examples all transpired (from initial report to debunking) in less than two years. However, the history of science is littered with episodes of extended confusion, in which scientists spent years or even decades chasing evidence for some exciting new phenomenon that lacked validity to begin with.

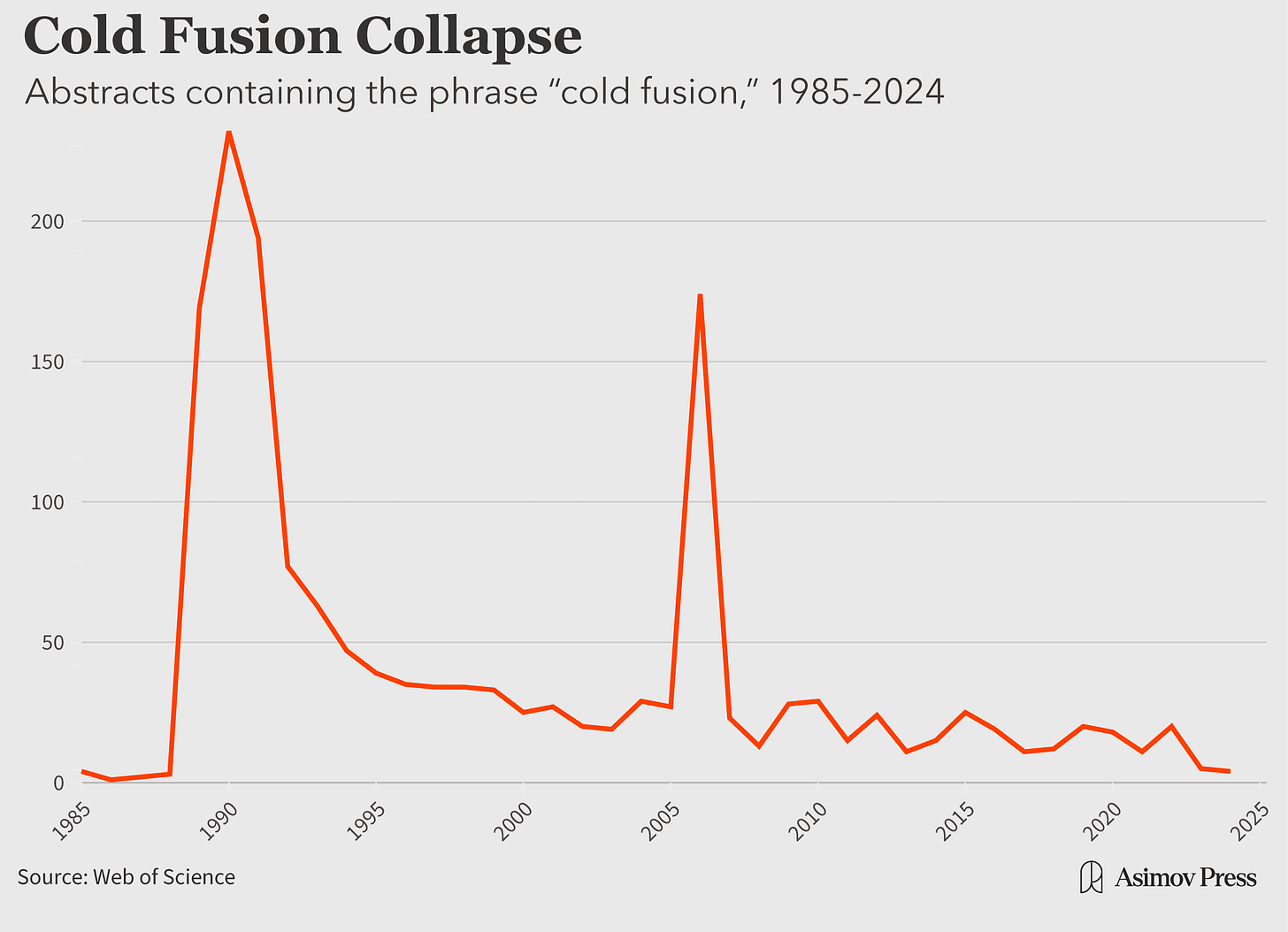

The chemist Irving Langmuir described such episodes as “pathological science.” Pathological science starts with data published alongside a big, bold, exciting claim. Typically, the evidence presented has a small effect size or one just on the edge of statistical significance. Scientists then spend years chasing after confirmatory evidence. Historical examples of pathological science abound, including animal magnetism, N-rays, cold fusion, polywater, resonant cavity thrusters, the Mpemba effect, water memory, exclusion-zone water, and magnetic water treatment.8 (Note that pathological science is distinct from pseudoscience, which doesn’t follow scientific standards at all.)

It’s important to protect the scientific enterprise from falling prey to pathological science, which historically has consumed enormous resources (300 papers were written on N-rays, 500 on polywater, and 3,200 on cold fusion).9 Any system that forgoes peer review and instead simply solicits open comments on PDFs published on the internet would not involve experts in the initial appraisal of research results, and so could exacerbate this problem, causing scientists to go off track in pursuit of exciting but false leads.10

The established experts at the helm of peer review may be resistant to accepting paradigm-shifting work, but they also help us suss out blind alleys and steer research in more productive directions.

Peer Review and New Researchers

“Blinded paper reviewing is probably the only way to identify good work coming from new and unknown researchers.” — Calvin McCarter

New researchers can benefit a great deal from peer review for two reasons. First, if the review is double-blinded, their work is judged solely on its merits, and new researchers are treated on exactly the same footing as established, high-status researchers. Second, peer review forces at least two experts in one’s field to actually read one’s work. This benefit is far more important than it may sound: it is not at all clear that papers uploaded to the internet can, on their own, attract qualified readers, let alone readers willing to leave comments.11

Adam Mastroianni published a scientific paper on Substack and received more than 100 comments on the post. This is, at least, anecdotal evidence suggesting that PDFs posted on the internet can draw in readers and garner feedback. However, Mastroianni’s article was on an easy-to-understand aspect of human psychology, a subject with mass appeal. A paper on a new immunotherapy method or novel quantum material might struggle to incite similar engagement. Not only this, but Mastroianni’s audience was likely larger, at the time, than that of the average new researcher.12

It is also worth pointing out that only 5-10 percent of the comments on Mastroianni’s paper provide criticism or potentially useful feedback from a scientific perspective. The vast majority are voicing agreement or giving praise. This surplus of comments can be problematic for open comment threads as it potentially drowns out comments with greater relevance. In controversial areas, keeping comment threads focused and civil requires full-time moderation, and that is why the popular preprint server arXiv has decided against comment threads despite many requests from users for that functionality.

Peer Review and Prestige

“I don’t believe in honors…honors bother me.” — Richard P. Feynman

Papers that pass through a blinded peer review process emerge with a marker of prestige that can be used by prospective readers, grant reviewers, hiring committees, and the general public to evaluate the “true” quality of a paper. This is because blinded peer review involves reviewing the scientific rigor of a research article, rather than the status of those who produced it (despite some evidence that reviewers can often make educated guesses about who the authors are anyway).13

When peer review functions as designed, then, it partially counters a well-documented phenomenon in the sociology of science known as the “Matthew effect,” which refers to the observation that high-status figures in science tend to get more credit, citations, funding, honors, and attention relative to the actual quality of their work.14

If peer review is eliminated or significantly curtailed, and scientists instead move to an “open comment” system, the Matthew effect could be exacerbated. Papers published by researchers from prestigious schools would likely garner more readers and citations than researchers from less prestigious schools, and researchers from low-prestige countries or institutions could have a harder time getting their work read, getting funding, and/or gaining employment. While this argument strikes me as tenuous, other researchers have also expressed concerns along these lines.

Where Do We Go from Here?

“At its best, peer review is a slow and careful evaluation of new research by appropriate experts. It involves multiple rounds of revision that removes errors, strengthens analyses, and noticeably improves manuscripts. At its worst, it is merely window dressing that gives the unwarranted appearance of authority … ” — James Heathers

The modern scientific enterprise is a complex system involving numerous interactions between the public, funding agencies, publishers, established researchers, and new researchers trying to move up through the ranks. While imperfect, at its best, peer review plays an important moderating role in many interactions within this system. Peer review helps maintain important scientific standards, ensures taxpayer money is well-spent, supports hiring committees in making decisions, and aids the general public in distinguishing scientific work from counterfeit.

It’s not easy to predict what would happen if we got rid of peer review. It’s possible that if researchers were freed from having to pass peer review, the quality of most work could decline and science would become less rigorous over time. It is also possible that, without the rigidity of this system, science would flourish. We just aren’t sure. Ultimately, Mastroianni is correct in calling for more rigorous experiments on alternative publication systems.

Indeed, “more research is needed” on both extant and future review processes. The lack of rigorous research on the effectiveness of peer review is galling given how much time and money is being spent on it. As was mentioned before, the few papers evaluating peer review have methodological flaws15 and all were restricted to medical journals. In 1995, economist Robin Hanson discussed the importance of studying peer review and outlined how methods from experimental economics may be employed to do so. That was nearly three decades ago, and it seems not much has happened since then.

I agree with Mastroianni that the status quo is unacceptable, while believing the solution to many of science’s problems may lie in strengthening, modifying, and/or supplementing peer review, rather than getting rid of it.

Many proposals for improving peer review have been studied over the years. Studies have looked at training peer reviewers, not allowing authors to suggest reviewers, publishing peer reviews, having reviewers discuss amongst themselves, and paying reviewers money or offering other rewards. Unfortunately, while some of these interventions may help marginally, none appear to have a large effect.

One simple way to augment peer review would be to increase the use of statistical reviewers. As their name implies, statistical reviewers are statistics experts employed by journals to check the validity and soundness of statistical methods. They already participate in the peer review process in some journals, especially in biomedicine; however, they should be employed far more widely.

Additionally, many checklists and guidelines have been developed to improve statistical methods and ensure adequate reporting of methods for reproducibility. Detailed guidelines have been developed for randomized clinical trials (CONSORT, STAR-D), observational studies (STROBE), genetic association studies (STREGA), and meta-analyses (PRISMA). Unfortunately, very few journals strictly enforce these standards, even though doing so would improve the quality and reproducibility of the published literature enormously. A randomized trial found that using statistical reviewers improved adherence to reporting requirements more than asking regular reviewers to follow the guidelines did. Two studies provide some evidence that supports the use of statistical reviewers.

Journals can also develop their own checklists. For instance, the journal Radiology: Artificial Intelligence has created a checklist for reproducibility and reporting called “CLAIM,” which every article must pass. In 2023, researchers looked at articles on AI for radiology published elsewhere and found that most do not come close to meeting the basic requirements outlined by CLAIM. The implementation of CLAIM has benefited the journal’s readership and is pushing the field in a much-needed direction.

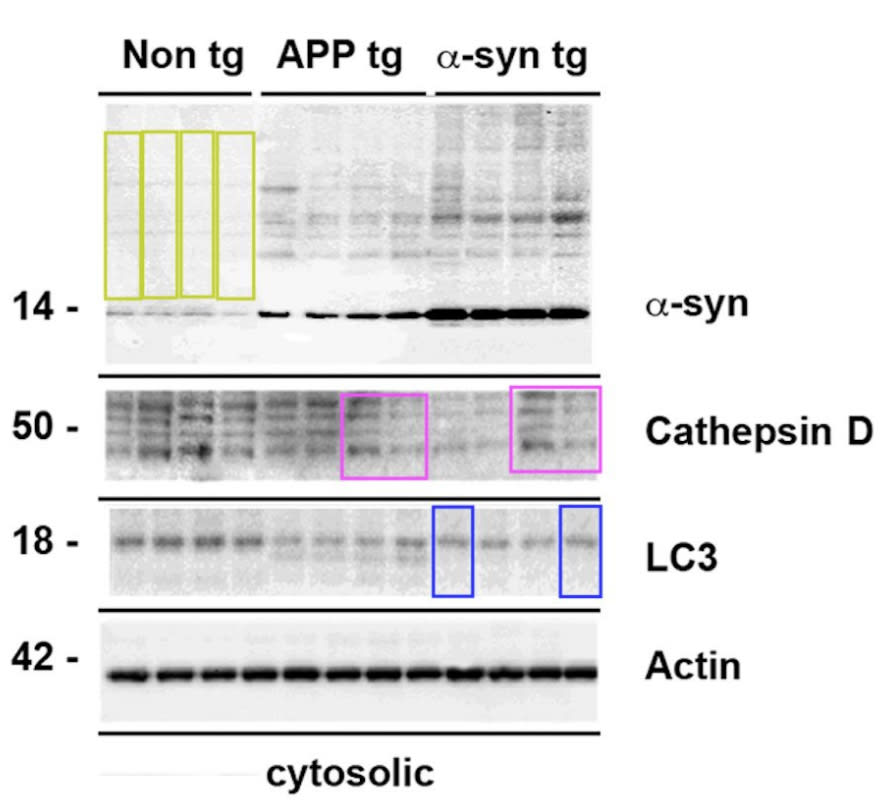

As another example of how peer review can be augmented, The Journal of Cell Biology has successfully implemented an “image integrity check” to help cut down on improper image manipulation.16 Additionally, automated tools like StatCheck and the GRIM Test can easily be implemented to detect errors in statistics, and pilot studies on both tools have shown promising results. Recently, researchers have also looked at whether large language models can serve as additional reviewers or help editors decide which papers to send out for review.
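To give a sense of how simple such automated checks can be, here is a minimal sketch of the idea behind the GRIM test (Granularity-Related Inconsistency of Means). If a study reports the mean of n integer-valued responses, such as answers to a single 1-to-7 rating item, then the true mean must equal some integer total divided by n, so not every rounded mean is attainable. The function name and its assumptions (one integer item per participant, no missing data, conventional round-half-up reporting) are hypothetical choices for illustration; this is not the published tool's code.

```python
from decimal import Decimal, ROUND_HALF_UP


def grim_consistent(reported_mean: str, n: int) -> bool:
    """Return True if a mean reported as a decimal string (e.g. "5.19")
    could arise from n integer-valued observations.

    Assumes one integer item per participant and round-half-up reporting.
    """
    mean = Decimal(reported_mean)
    # Number of decimal places the mean was reported to: "5.19" -> 2.
    places = -mean.as_tuple().exponent
    quantum = Decimal(1).scaleb(-places)  # e.g. Decimal("0.01")
    # The true integer total lies within about 1 of mean * n,
    # so checking the nearest integer and its neighbors is enough.
    center = int((mean * n).to_integral_value(rounding=ROUND_HALF_UP))
    for total in range(center - 1, center + 2):
        candidate = (Decimal(total) / Decimal(n)).quantize(quantum, rounding=ROUND_HALF_UP)
        if candidate == mean:
            return True
    return False


print(grim_consistent("5.19", 28))  # inconsistent
print(grim_consistent("5.18", 28))  # consistent
```

With n = 28, the nearest attainable means are 145/28 ≈ 5.18 and 146/28 ≈ 5.21, so a reported mean of 5.19 cannot be correct as stated, whereas 5.18 can. Real GRIM checks also have to handle multi-item scales and other rounding conventions, which this sketch ignores.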

An exciting recent development is community peer review, also called “open peer review.” Under this system, preprints are uploaded to a server, wherein a pool of reviewers can look them over and decide which, if any, they would like to review. Articles that have made it out of this pool are then selected for publication. This differs from the “upload PDFs to the internet” ideas because it is more structured, results in a definitive outcome, and allows gatekeeping in terms of the composition of the pool of reviewers.

Other reforms could penalize certain aspects of scientific publishing. For example, funding agencies could prohibit publication in predatory journals or journals found to have weak or minimal peer review.

With these suggestions in mind, we can consider what it might take to implement them. First, we should conduct controlled experiments to carefully evaluate how well each proposal works, especially in terms of correcting errors and improving reporting for reproducibility.17

Making this happen will likely require institutional buy-in from the National Science Foundation and other large research funding agencies. Individual researchers absolutely should experiment with ways to disseminate and collect feedback on their work, but we’ll also need reform to happen from within to see large-scale change.

In the end, this essay was by far the most challenging thing I’ve ever written. This is partly because of the lack of good studies on the subject of peer review, and partly because many critiques of peer review are at least partially correct. In particular, peer review of publications can indeed lead to certain research directions becoming dominant at the expense of others. Such scientific myopia has been disastrous in some cases — for instance, the dominance of the “amyloid hypothesis” in Alzheimer’s research — by draining away funding, talent, and time from more promising research avenues.

Even so, I maintain that peer review is a useful mechanism to enforce a wide range of scientific standards. And while oversight from established researchers may stifle innovative work, it also helps prevent researchers from stumbling into dead ends and science from falling on its face.

Dan Elton is a Staff Scientist at the National Human Genome Research Institute at NIH. He obtained his Ph.D. in physics from Stony Brook University in 2016. He writes about AI, metascience, and other topics at www.moreisdifferent.blog. You can find him on X at @moreisdifferent.

Cite: Elton, Dan. “A Defense of Peer Review.” Asimov Press (2024). DOI: https://doi.org/10.62211/71pt-81kr

Acknowledgements: Thank you to James Heathers for reading an earlier draft, and to Adam Mastroianni for clarifying his position.

1 Mastroianni’s stance is not simply that “everyone should upload PDFs to the internet.” We sent this article to Mastroianni prior to publication, and here’s what he said: “I’ve never suggested that we should switch from a universal pre-publication peer review system to a universal PDF-upload system, because I don’t think we should. What I really object to is universal systems, and I’ve tried to argue for more diversity in the way we conduct and communicate our results. Uploading PDFs is one way to do that, but there are many others, and I hope people do them.”

2 Although it’s worth noting that the long-term exponential growth rate in scientific publications only rose slightly during this time — so the change was not as dramatic as it is sometimes portrayed.

3 Baldwin also explains how the term “peer review” in the context of publication was borrowed from the term used in grant review (previously, peer review of publications had been known as “refereeing”). As with grant review, peer review became understood as a way of ensuring that there was some accountability over the work that taxpayers were funding.

4 The entire peer review process rarely takes less than two months and often takes six months to a year or more. Many people feel this time delay is not worth it. Preprints, such as those published on bioRxiv or arXiv, help ameliorate this delay by allowing researchers to share their work and stake a scientific claim prior to the completion of peer review.

5 A similar study is a 1996 survey of 400 readers who were given articles from a Dutch medical journal in either their pre-review or post-review state. The largest area of improvement was in “style and readability.”

6 The information ecosystem was a mess, with many easily confused by fraudulent videos.

7 To quote Steinhardt, the incident showed that “announcements should be made after submission to journals and vetting by expert referees. If there must be a press conference, hopefully the scientific community and the media will demand that it is accompanied by a complete set of documents, including details of the systematic analysis and sufficient data to enable objective verification.”

8 Even our best scientists can go down rabbit holes for years in the hopes of proving a breakthrough claim.

9 Spending the bulk of one's career chasing a phenomenon that isn’t real is one of the saddest things that can happen to a scientist. There are many examples of largely wasted careers, especially around cold fusion and to a lesser extent polywater. One example I have written about is Prof. Gerald Pollack and his crazy theories about water. Some of his research is fine, but the “exclusion zone” phenomena he purports to exist are all bogus. Cold fusion research continues to be funded, most recently by ARPA-E, despite having all the red flags of pathological science.

10 However, it can also be argued that such systems will help disprove faulty findings more rapidly.

11 Of course, authors can email their paper to potential readers. However, most researchers frequently receive emails from cranks asking them to read their work, so who is to say that papers of quality will rise to the top in a sea of similar-looking emails?

12 In a prior version of this article, we wrote that Mastroianni’s article garnered a great deal of attention, in part, because he “had a large number of subscribers on his Substack” at the time he published his research paper. Mastroianni addressed this point in a clarifying email to us, writing: “Dan says that I got a lot of attention on my ‘Things Could Be Better’ paper because I had a big following built-in at the time. But that’s not how the paper took off originally. I posted it to PsyArxiv the night before I posted it on my blog, forgetting that there was a Twitter bot that automatically tweets every new PsyArxiv paper. When I woke up the next morning, the paper had already been read and downloaded thousands of times, and people were talking about it on Twitter, all from that one tweet by a bot. I always stress that part of the story when I talk to students, because they often assume you already need to have a following to get your work noticed. But I also try to explain that going viral isn’t an inherent good for a scientific paper — what you really want is to reach the folks who could build on your findings, and you do that by getting to know the folks in your area, rather than amassing an online following."

13 Some evidence suggests double-blind reviewing reduces biases against lesser known schools and individuals. Even with blinding, however, there are problems. At the very least, an author’s command of the English language or style of writing can give away nationality, and studies suggest reviewers are unfairly biased against non-native speakers of English. Additionally, a 1985 study found that 34 percent of reviewers for a particular biomedical journal could identify either an author or the particular university and department the authors were from, despite blinding. Unlike other questions around peer review, there is a large literature trying to compare single with double-blind review. The literature on the utility of double-blind review is mixed, and it is beyond the scope of this article to attempt to summarize it here. One review suggests that double blinding may help with gender bias, but not nearly as much as may be naively assumed.

14 For good or ill, humans appear to have an in-built desire to rank individuals and groups by levels of prestige. There are prestige hierarchies for countries, institutions, departments, individual labs, job titles, and even awards and professional societies that require election. This is not an endorsement or value judgment, just a comment on what seems to be human nature.

15 For instance, Peters & Ceci (1982), which has been cited 1,300 times, has several potential methodological problems and difficulties with interpretation, which are discussed here and here.

16 ImageTwin is a commercial tool which helps automate this highly laborious process.

17 Mastroianni thinks it doesn’t matter much if sloppy and/or fraudulent work is published. I disagree with this, but explaining why would require a separate article. Needless to say, if we think it is important that the scientific commons is protected from fraud and fakery, then we need to think about safeguards and protections against such things getting accepted into the scientific record in the first place.

1 comment

comment by habryka (habryka4) · 2024-10-22T20:34:34.845Z

> An exciting recent development is community peer review, also called “open peer review.” Under this system, preprints are uploaded to a server, wherein a pool of reviewers can look them over and decide which, if any, they would like to review. Articles that have made it out of this pool are then selected for publication. This differs from the “upload PDFs to the internet” ideas because it is more structured, results in a definitive outcome, and allows gatekeeping in terms of the composition of the pool of reviewers.

That... seems like a weird framing of what is going on? Community peer review was the standard before anonymous peer review ended up being forced on scientific institutions, the way this article describes. Post-publication community peer review was the standard in most fields until the middle of the 20th century, and describing it as an exciting recent development feels like it's conceding the whole debate.

Yes, just do post-publication peer review. Let journals and authors curate which papers they think are good at the same time as everyone else gets to read them. That's what science did before various large government funding bodies demanded more objectivity in the process (with, as this article and the articles it links to describe, great harm to the process of science).