The type of AI humanity has chosen to create so far is unsafe, for soft social reasons and not technical ones.

post by l8c · 2024-06-16T13:31:09.277Z


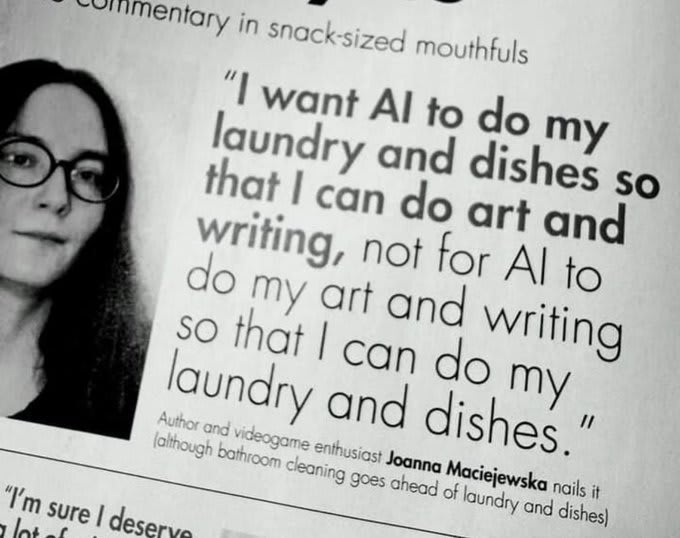

I saw this image on X:

and I not only endorse this lady's comment but would also like to briefly expand on it in terms of AI safety.

How many people here were attracted to the concept of AI safety by Eliezer Yudkowsky's engaging writing style across a few different topics and genres? Or by any number of other human authors?

Now, what if the next generation of human authors, and (broadly conceived) artists and scientists, find themselves marginalized by the output of ChatGPT-5 and by any number of comparatively mediocre thinkers who use LLMs to share boilerplate arguments and thoughts that have been optimized, by the LLM and/or its creators, for memetic virulence?

What if the next Eliezer has already been ignored in favour of what these AIs have to say?

Isn't that far more immediately dangerous than the possibility of an AI that isn't, in mathematical terms, provably safe? Like, this is self-evidently dangerous, and it's happening right now!

Didn't we use to have discussions about how a sufficiently smart black-boxed AI could do all sorts of incredibly creative things to manipulate humanity via the Internet, including manipulating or blackmailing its human guard through a mere text terminal?

Well, it seems to me that we're sleepwalking into letting GPT and its friends manipulate us all without them even having to be all that creative.

(how sure are you that this post wasn't written by ChatGPT???)

For this reason, I would much prefer to see GPT and similar AIs put to use in fields such as toilet cleaning rather than art and writing. (By the way, I have done that job and never saw myself as a Luddite...) GPT in robot guise could make a really good toilet cleaner, and directing LLM developers' intellectual effort there would not only serve human needs but also slow the ongoing replacement of human thought by LLM-aided or LLM-guided thought.

tl;dr: I feel that GPT is accumulating power the way Hitler or Lenin would. The solution is what Joanna Maciejewska said.

2 comments


comment by ChristianKl · 2024-06-19T15:46:15.862Z

Plenty of money is being invested in companies like Boston Dynamics and Tesla to build general-purpose robots that could clean your toilet or do laundry.

It's just that the LLM problem turned out to be easier to solve.

comment by pom · 2024-06-16T14:52:15.826Z

Alright, let's see. I feel there is a somewhat interesting angle to the question of whether this post was written by a GPT variant. Probably not by the third or fourth (public) iteration (assuming that is how the naming scheme works; I am not completely sure of that despite some circumstantial evidence), at least not without heavy editing and/or iterating a good few times, as I cannot detect the "usual" patterns these models display(ed), disregarding, of course, the common "as an AI..." disclaimer-type material you would have removed.

That leaves the curious fact that you referred to the engine as GPT-5, which looks like the kind of "hallucination" the different GPT versions still produce from time to time (unless this is about a version that is not yet publicly available, which seems unlikely given how the information is phrased). This also ties into something I have noticed: if you ask the program to correct its previous output, some errors persist even after a self-check. So we would be none the wiser.

If, however, the text had been generated by asking the AI to write an opinion piece based on a handful of statements, it would be a different story altogether: we would be left with only language idiosyncrasies, and possibly the examples used, to determine whether the text is AI-generated, which makes the challenge a little less "interesting". Still, I see a lot of constructs and "phrasings" here that I would not expect the program to generate. Some of the angles in the logic seem a little too narrow compared to what I would expect from it, some "bridges" (or "leaps", in this case) are not as obvious as the author would like them to seem, and the same goes for the order in which the information is presented and flows. Perhaps you could "coax" the program into filling in the blanks in a manner fitting the message, in which case I must congratulate you for getting it to go against its programming like this! I could have started with that point, of course, but I feel that when mapping properties you must not let yourself be distracted by "logic" yet. So, all in all, judging by the language used, I personally think it is unlikely this is GPT output.

I also have a small note on one of the final points. I think it would not necessarily be best to start by giving the model a "robot body", especially once it had reached the level such a function would require: it would have to manipulate its environment precisely enough not to cause damage. I suspect that level would go hand in hand with a certain degree of autonomy, and at that point we would already be starting it off with a highly flexible and capable "exoskeleton". That seems like it could be fun, but also possibly worrying.

(I hope this comment was not out of line. I was looking through recent posts for somewhere to start participating here, and this was the second one I ran into, and the first that was not so comprehensive that reading the provided background material would take all the time I have at the moment.)