Easily Evaluate SAE-Steered Models with EleutherAI Evaluation Harness

post by Matthew Khoriaty (matthew-khoriaty) · 2025-01-21T02:02:35.177Z · LW · GW


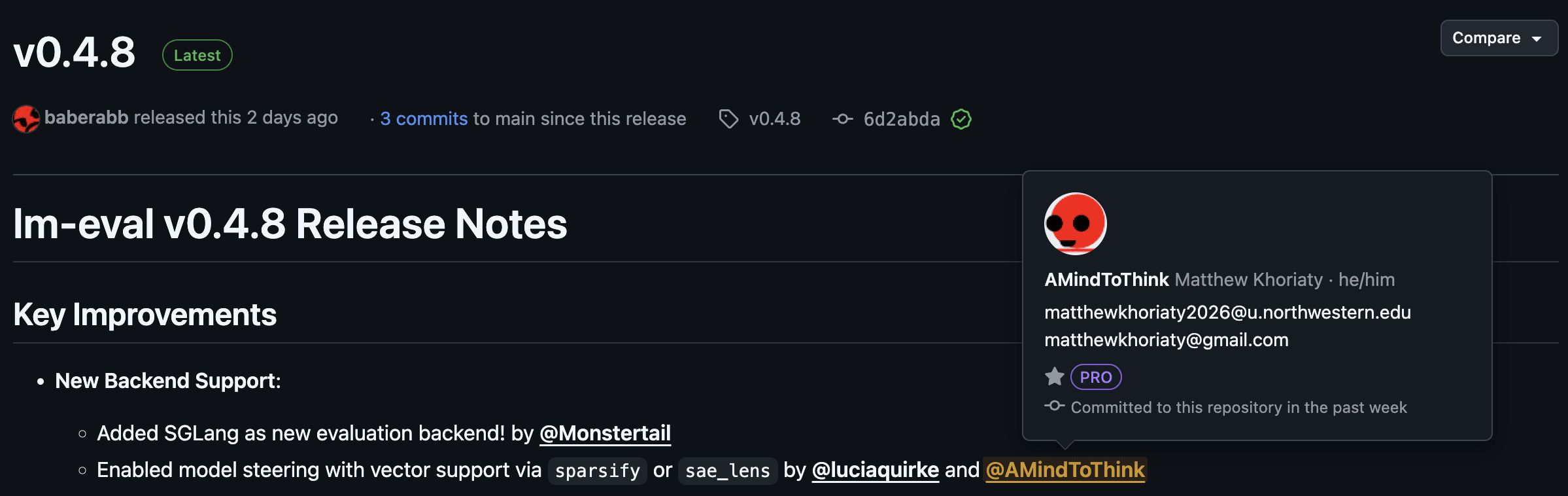

EDIT: The folks at EleutherAI have taken and improved my work, and extended it to work with the Sparsify framework in addition to my use of SAE-Lens! I'm super proud that I contributed to that :)

I haven't used the new system, but if you want to use it then the how-to is in EleutherAI's README.

Everything below here is outdated and is only relevant for historical reasons (and as part of my portfolio of cool things I've done).

(Status: this feature is in beta. Use at your own risk. I am not affiliated with EleutherAI.)

Sparse Autoencoders (SAEs) are a popular technique for understanding what is going on inside large language models. Recently, researchers have started using them to steer model outputs by going directly into the "brains" of the models and editing their "thoughts", which are called "features".

EleutherAI's Evaluation Harness is an awesome project which implements a wide variety of model evaluations including MMLU, WMDP, and others. You can now test SAE-steered models on many of those benchmarks! Not all of the evaluations are available for SAE-steered models right now: only the ones that need just 'loglikelihood', which includes multiple-choice benchmarks such as MMLU and WMDP.

As part of my research project in Northwestern's Computer Science Research Track[1], my team and I wanted to be able to easily evaluate steered models on benchmarks, MMLU and WMDP in particular. It was thanks to the AI Safety Fundamentals Course that I realized that I should put in extra effort to make the tool available to others and communicate how to use it. This constitutes my AI alignment project for the course.

How to Evaluate SAE-Steered Models

I've made a pull request to EleutherAI, so hopefully my contributions will be available there in the near future. If my work doesn't meet their criteria and they decline my code, then the steered model evaluation code will still be available on my fork here.

Step one:

If EleutherAI has approved my pull request, run these lines (from the installation section of the README).

git clone --depth 1 https://github.com/EleutherAI/lm-evaluation-harness

cd lm-evaluation-harness

pip install -e .

If not, run these lines:

git clone --depth 1 https://github.com/AMindToThink/lm-evaluation-harness.git

cd lm-evaluation-harness

pip install -e .

Step Two: Deciding on your interventions

You first need to ask:[2]

- Which model should I edit?

- Which SAE-version should I use?

- Which layer should I edit?

- Which feature in that layer should I edit?

Neuronpedia is the place to go for this. If you select a model, you can search inside of it for SAE features.

When poking around, you might find that index 12082 in the gemma-2-2b model's 20-gemmascope-res-16k SAE refers to dogs and has a maximum observed activation of 125.39. Let's try adding roughly twice the maximum activation to the dog feature and see what happens![3]

Step Three: Make a steer.csv file

latent_idx,steering_coefficient,sae_release,sae_id,description

12082, 240.0,gemma-scope-2b-pt-res-canonical,layer_20/width_16k/canonical,this feature has been found on neuronpedia to make the model talk about dogs and obedience

The columns of the csv are:

- latent_idx holds the component of the SAE which represents the feature.

- steering_coefficient is the amount of that concept to add to that location of the model.

- sae_release and sae_id refer to which sparse autoencoder to use on the model.

- description does not impact the code and can contain arbitrary human-readable comments about the features.

This example csv file can be found in examples/dog_steer.csv
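If you would rather generate the file than write it by hand, here is a minimal sketch using only Python's standard csv module. The helper name make_steer_csv and the shortened description are my own illustration and are not part of the harness. The column names and values come from the example above.

```python
import csv
import io

# Column order taken from the steer.csv header shown above.
COLUMNS = ["latent_idx", "steering_coefficient", "sae_release", "sae_id", "description"]


def make_steer_csv(rows):
    """Return CSV text for a list of steering-row dicts (hypothetical helper)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        missing = [column for column in COLUMNS if column not in row]
        if missing:
            raise ValueError(f"missing columns: {missing}")
        # Fail early on values that would not parse as numbers.
        int(row["latent_idx"])
        float(row["steering_coefficient"])
        writer.writerow(row)
    return buffer.getvalue()


dog_row = {
    "latent_idx": 12082,
    "steering_coefficient": 240.0,
    "sae_release": "gemma-scope-2b-pt-res-canonical",
    "sae_id": "layer_20/width_16k/canonical",
    "description": "dogs and obedience feature found on neuronpedia",
}
csv_text = make_steer_csv([dog_row])
print(csv_text)
```

Write csv_text to a file of your choice and pass that path as csv_path. Adding more dicts to the list produces more rows. I have only described the single-row example here, so treat multi-row behavior as something to check against the code.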

Step Four: Run the command

EleutherAI offers the lm_eval command which runs tests. For SAE steered models, set --model to sae_steered_beta[4]. SAE steered models take two --model_args, a base_name and a csv_path. base_name needs to be the name of a model which has pretrained SAEs available on sae_lens. csv_path is the path to your edits.
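As a rough sketch, the arguments described above can also be assembled in Python, which is handy if you want to loop over several csv files. The function build_lm_eval_command is a hypothetical helper of mine. It only uses the flags that appear in this post, and the relative csv path assumes you run it from the repository root.

```python
import shlex


def build_lm_eval_command(base_name, csv_path, task, device="cuda:0", output_path="."):
    """Build the lm_eval argument list for an SAE-steered model (hypothetical helper)."""
    # --model_args is a single comma-separated string of key=value pairs.
    model_args = f"base_name={base_name},csv_path={csv_path}"
    return [
        "lm_eval",
        "--model", "sae_steered_beta",
        "--model_args", model_args,
        "--tasks", task,
        "--batch_size", "auto",
        "--output_path", output_path,
        "--device", device,
    ]


argv = build_lm_eval_command(
    base_name="google/gemma-2-2b",
    csv_path="examples/dog_steer.csv",
    task="mmlu_abstract_algebra",
)
# Print a copy-pasteable shell command; pass argv to subprocess.run to execute it.
print(shlex.join(argv))
```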

The following is an example command that tests the dog-obsessed model on abstract math from MMLU using GPU 0 and an automatically-determined batch size and puts the results in the current folder.[5]

lm_eval --model sae_steered_beta --model_args base_name=google/gemma-2-2b,csv_path=/home/cs29824/matthew/lm-evaluation-harness/examples/dog_steer.csv --tasks mmlu_abstract_algebra --batch_size auto --output_path . --device cuda:0

Future work:

Right now, only unconditionally adding a fixed multiple of the feature is supported. In the future, it would be great to add conditional steering and other ways to intervene in the models.[6]
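To make "unconditionally adding a fixed multiple" concrete, here is a toy sketch in plain Python lists rather than tensors. The numbers and the function name steer are made up for illustration. The real code works on model activations and an SAE decoder row.

```python
def steer(activations, decoder_row, steering_coefficient):
    """Add steering_coefficient * decoder_row to every position's activation vector."""
    return [
        [a + steering_coefficient * d for a, d in zip(position, decoder_row)]
        for position in activations
    ]


# Two sequence positions with three hidden dimensions each (toy values).
activations = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
# Stand-in for the decoder direction of one SAE feature.
decoder_row = [0.0, 1.0, 0.0]

steered = steer(activations, decoder_row, steering_coefficient=2.0)
print(steered)
```

Every position gets the same shift regardless of what the model was "thinking" there, which is what makes the intervention unconditional.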

There are still bugs to fix and functionality to implement. The other types of evaluations do not yet work (the ones involving generating longer sequences). In order to include those evaluations, we need to implement loglikelihood_rolling and generate_until.

Conclusion

Please enjoy evaluating your steered models! If you want to contribute (and if EleutherAI haven't approved my pull request) then you can make a pull request to my repo and I'll check it out.[7]

- ^

Our project as a whole is to build upon prior work on Unlearning with SAE to make the process robust to adversarial attacks.

- ^

- ^

This is the example ARENA used in their training materials.

- ^

This feature is in beta because:

- It is largely untested

- The other types of evaluations do not yet work (the ones involving generating longer sequences)

- I'm new to both SAE_Lens and EleutherAI's evaluation harness, and I don't know whether I implemented everything properly

- ^

Funnily enough, the dog-steered gemma-2-2b model did slightly better than the default gemma-2-2b, but the difference is within one standard error. Both did really poorly, only slightly better than chance (0.25).

Default: "acc,none": 0.3, "acc_stderr,none": 0.046056618647183814

Dog-steered: "acc,none": 0.34,"acc_stderr,none": 0.047609522856952344
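Here is a quick sanity check of that comparison, using the numbers reported above. It treats the two accuracy estimates as independent, which is a simplifying assumption of mine: both runs use the same questions, so a paired test would be more appropriate for a careful analysis.

```python
import math

# Values copied from the lm_eval output above.
default_acc, default_stderr = 0.3, 0.046056618647183814
dog_acc, dog_stderr = 0.34, 0.047609522856952344


def difference_in_standard_errors(acc_a, stderr_a, acc_b, stderr_b):
    """Return (acc_b - acc_a) divided by the combined standard error of the two runs."""
    combined_stderr = math.sqrt(stderr_a**2 + stderr_b**2)
    return (acc_b - acc_a) / combined_stderr


z = difference_in_standard_errors(default_acc, default_stderr, dog_acc, dog_stderr)
print(f"difference = {dog_acc - default_acc:.2f}, z = {z:.2f}")
```

The gap works out to roughly 0.6 combined standard errors, so this single task gives no real evidence that steering toward dogs helps with abstract algebra.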

- ^

Here's the hook I'm using. Hooks are placed "in between layers" and process the output of the previous layer before passing it to the next layer. Once again, this is straight from ARENA.

    def steering_hook(
        activations: Float[Tensor],  # Float[Tensor, "batch pos d_in"],
        hook: HookPoint,
        sae: SAE,
        latent_idx: int,
        steering_coefficient: float,
    ) -> Tensor:
        """
        Steers the model by returning a modified activations tensor, with some multiple of the steering vector added to all
        sequence positions.
        """
        return activations + steering_coefficient * sae.W_dec[latent_idx]

- ^

I don't know how citations work. Can I get citations from people using my software? Do I need to wait until my paper is out, and then people can cite that if they use my software? If you know how software citations work, please drop a comment below.
