Cheap Model → Big Model design

post by Maxwell Peterson (maxwell-peterson) · 2023-11-19T22:50:15.017Z · LW · GW · 2 commentsContents

Big-Model-Sometimes Design Cheap Model → Big Model Examples Aggressive and unwanted speech Nudity Fraudlent transactions Early storm detection A technical note None 2 comments

In machine learning, for large systems with a lot of users, or difficult prediction tasks that require a ton of number-crunching, there’s a dilemma:

- Big complex models like neural networks are the best at making automated decisions

- Big complex models are expensive to run

Cheap Model → Big Model Design is a good solution. I’ll tell you what it is in a minute, but first, an example from last night.

Big-Model-Sometimes Design

At around 2am, in a facebook board game group I’m in - very family friendly, there are many little smart and adorable Asian kids in the group (the board game is Asian, it all makes sense), a nice chill group - a spam account posted a video of literal porn: a woman without any clothes, on her knees, with a certain part of her face doing a particular action involving a certain something. The video preview image made it clearly, obviously, 1000% porn.

I reported it, and messaged the admins separately, but like, it’s 2am, so I didn’t have much hope of anyone catching it in the next 6 hours, which would have been a huge bummer.

Then I refreshed the page, and it was…. already gone? In less than a minute after my report, though it had been posted 12 minutes before I saw it.

This is weird. Think about it: it can’t be facebook’s policy to auto-delete any post a single user marks as spam. That would let anybody get any post they didn’t like deleted, with just a few clicks. If they deleted things that easily, what’s to stop, like, a Vikings fan from reporting “go Packers!”, getting these deleted immediately, every time they see the enemy posting on game day? Posts would be taking bullets left and right.

So maybe Facebook is using a neural network to detect pornography, and other things that violate its rules. But in that case, why did it take until I reported it for the delete to happen? The preview image was blatant sexery. It should be easy to detect.

So neither theory makes sense. Here’s a third one:

- Running image or video neural networks on every single post that has an image or video is too expensive; there are just so many posts.

- But posts that get reported, even once, are way more likely to have true violations.

- Therefore, Facebook reserves most of its big violation-detecting neural networks to run only on posts that get reported.

This is a good compromise. You get lots of savings on the amount of computer you need: since most posts aren’t reported, your large networks can skip most posts. But once a post is reported, you can identify it right away, doing an auto-censoring if your smart model detects a violation, without having to wait around for a human to look at it and decide manually.

The high-level pattern here is “how can I reserve my big models to only run on things worthy of their costs?”

In the 2am porn post example, there’s still a human in the loop: me, hitting Report As Spam. But you can accomplish this “detect what’s worth bothering with” task with a machine, too.

Cheap Model → Big Model

Big models like neural nets are expensive in the sense that

- They take a lot of space on disk

- They take a lot of space in a computer’s active memory

A company like Facebook has a ton of servers running, but they also have a ton of users. And servers cost money! If they could somehow reserve their big models to only run on 10% of posts, instead of 100%, they could cut their computer costs by a factor of ten.

And this just isn’t about cost-cutting here and there to improve the books: if a new model is going to require 1,000 beefy servers to run on everything, and you don’t have a less expensive method, it’s very possible the project just gets killed. Speed matters too, in other contexts: GPT-4 feels so much better to use in November 2023, now that they’ve got it generating text faster; on release, in March 2023, it was agonizingly slow.[1]

Cheap models are things like logistic regressions: linear models, similar to the old y = mx + b equations from high school, with a little bit more complexity but not much more. They take a tiny amount of space - storing and running a logistic regression takes kilobytes, or at most, megabytes, of active memory and disk space, while a neural network can easily require gigabytes of each (a thousand or million times more)!

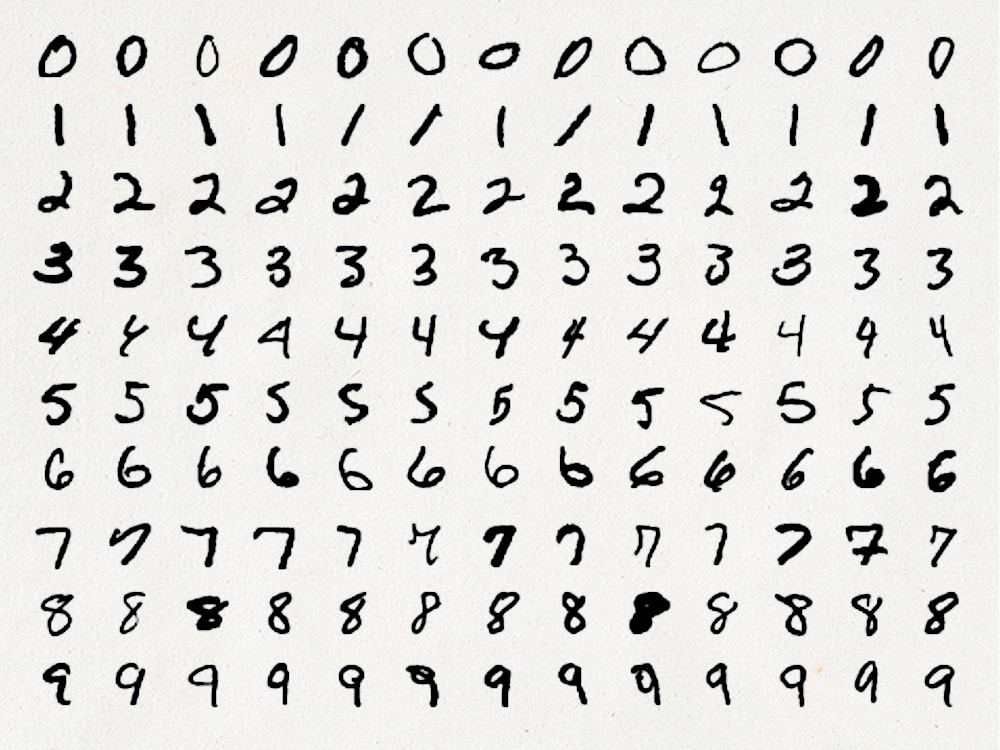

And cheap models can do a decent job! There is a classic task from the old days of machine learning, which is to predict what a number is from an image:

Everyone knows now that neural networks are far better at predicting things from images than any other model, so one’s first instinct is to use a neural net on these. And indeed even a rudimentary hand-rolled network achieves 98% accuracy.

But a logistic regression achieves 91%! For a model that much smaller and cheaper, 9/10 ain’t bad.

So the Cheap Model → Big Model idea is:

- Use a cheap model, like logistic regression, to determine what is even worth looking at in depth

- Run an expensive model, like a neural net, on the things flagged by the cheap model.

Examples

Aggressive and unwanted speech

Neural-netting every single post for aggressive language is a hassle. A few ideas on cheap model → big model design here are:

- Check if a post has any swear words in it, and run the ones that do through your big model. The cheap model here is extremely cheap: checking if any of [“fuck”, “shit”, “ass”, etc.] are in a block of text is just dirt cheap.

- Notice how it would be a mistake here to depend only on the cheap model, because you’d end up censoring posts like “fuck yeah” and “I love my girlfriend’s ass 😊”.

You want to avoid censoring these, while still censoring things like “I’m gonna fuck that little piece of shit up if he comes by here again, on god”. The big model’s task is to distinguish between these two cases.

- Notice how it would be a mistake here to depend only on the cheap model, because you’d end up censoring posts like “fuck yeah” and “I love my girlfriend’s ass 😊”.

- Use a logistic regression. This paper uses a logistic regression after TF-IDF (another cheap algorithm) and gets 87% accuracy[2] on a “hate speech” dataset.

- The category of hate speech in machine learning is a philosophical nightmare that easily pisses off the whole political spectrum, with older models misclassifying things like “go trump!” as hate speech, and “what’s up my nigga” as the same.

So a major desire when building an auto-censoring system for speech is to avoid misclassifying too many actually-OK things as hateful things. To achieve this, you’d run the posts your cheap model identified as maybe being hate speech (a minority of posts, again saving compute) through your big model, and only auto-censor the ones that the big model agrees are bad.

- The category of hate speech in machine learning is a philosophical nightmare that easily pisses off the whole political spectrum, with older models misclassifying things like “go trump!” as hate speech, and “what’s up my nigga” as the same.

Nudity

Maybe you don’t want to allow pictures of naked people on your site. Again, running every single image through a big image model is painful, so you want something faster to do a first pass. We saw above that logistic regression can do decent on the MNIST numbers data, and I’m guessing it also can well on the task of “is there a lot of bare skin in this picture”.[3]

So: run a logistic regression on every single image uploaded to your site. Its job is just to detect lots of bare skin. But deleting every photo with lots of bare skin is stupid: people go swimming, bare-chested men are considered OK while bare-chested women are not, shorty-shorts are fine; and even bare breasts or penises are probably okay, if it’s an image of a woman breastfeeding, or an educational drawing about how the male reproductive system works.

So you need a smarter model then a bare skin detector. That’s where your big image model comes in, trained in detail to be able to distinguish a breast-feeding breast from a sexy titty, trained to know what a provocative pose is versus a dull sex-ed diagram’s pose, and the like. Again, you save lots of computer, because most images don’t have lots of skin, so you only have to run the big model on a minority of images.

Fraudlent transactions

Lest all my examples be about how Meta can save big money on censoring what we post, let’s look at some more.

I got my debit card auto-canceled once when I paid for a coffee refill at Starbucks with it. I guess the amount (50 cents?) was small enough that it was suspicious. But this rule is too rudimentary - the Starbucks was right next to my apartment, and I went there nearly every day, for god’s sake! On every other dimension besides amount, this looked like the normal transaction that it was.

This was in like 2016, and I bet the bank was using a cheap model, and only a cheap model. It seems like it used a few simple rules, like “this transaction was a repeated purchase from the same place in a weirdly short time-frame, and for a small amount, and fraudsters often steal small amounts to try and stay under the radar, so this is fraudulent. Let’s cancel the card.”

A better system would have that transaction run through big-model-review, not canceled on just the word of the small model. The big model would do stuff like looking at the location of the Starbucks compared to my other purchases (a latitude-longitude calculation, which is usually relatively expensive), as well as how often I bought things at that Starbucks, maybe compared to other stores, and perhaps at what times of day; maybe it would pull the opening hours of that store (also relatively expensive, if they’re pulling it from a Google Maps server, since Google usually charges for that kind of thing).

Early storm detection

Simulating the formation and path of a hurricane typically involves running a bunch of simulations. This is heavy and costly - simulating the weather of every region all the time would require a crazy amount of computer.

Inexpensive scans for things like drops in pressure, sudden changes in wind strength, temperature, humidity, and stuff (I don’t know much about weather) can be done all the time though, and when something weird is detected, forecasters can deploy the big simulation guns, aim them at the flagged region, and heavy-simulate whether it’s truly something to start raising alarms about.

A technical note

You want your big model to have excellent prediction metrics across the board. But! It’s perfectly fine for your cheap model to have a high false-positive rate, and very bad for it to have a high false-negative rate.

This is because the big model only sees the positive predictions from the cheap model. Since the big model is going to do the right thing, you can happily pass lots of (cheap model) false positives to it; this grows the amount of compute you need, but doesn’t actually do anything bad to the user, since the big model is smart enough to classify these cheap-model-false-positives as the true negatives they are.

But false negatives by the cheap model never get looked at by the big model, so a high cheap-model-false-negative rate on our various examples means lots of porn makes it onto your site, fraudsters get away with stealing money, and storm risks aren’t simulated.

- ^

Cheap Model → Big Model doesn’t actually apply to GPT text generation, as far as I know.

- ^

On an evenly split test set: 50/50 positive/negative class.

- ^

Honestly neither this, nor the “is a swear word in the post” model, feel like great choices for the cheap models in these systems, but they get the idea across.

- ^

This post is also on my Substack.

2 comments

Comments sorted by top scores.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-11-21T02:57:12.537Z · LW(p) · GW(p)

Just wanted to say that this was a key part of my daily work for years as an ML engineer / data scientist. Use cheap fast good-enough models for 99% of stuff. Use fancy expensive slow accurate models for the disproportionately high value tail.

comment by Brendan Long (korin43) · 2023-11-20T01:40:44.772Z · LW(p) · GW(p)

Interestingly, your credit card company probably doesn't care if someone steals a small amount of money from them. I'm guessing the rule you ran into was actually to prevent people who buy stolen card numbers from checking if the card still works.