<|endoftext|> is a vanishing text?

post by MiguelDev (whitehatStoic) · 2023-09-16T02:34:02.108Z


I'm sharing this observation in case readers or researchers find it useful or worth testing further.

A bug?

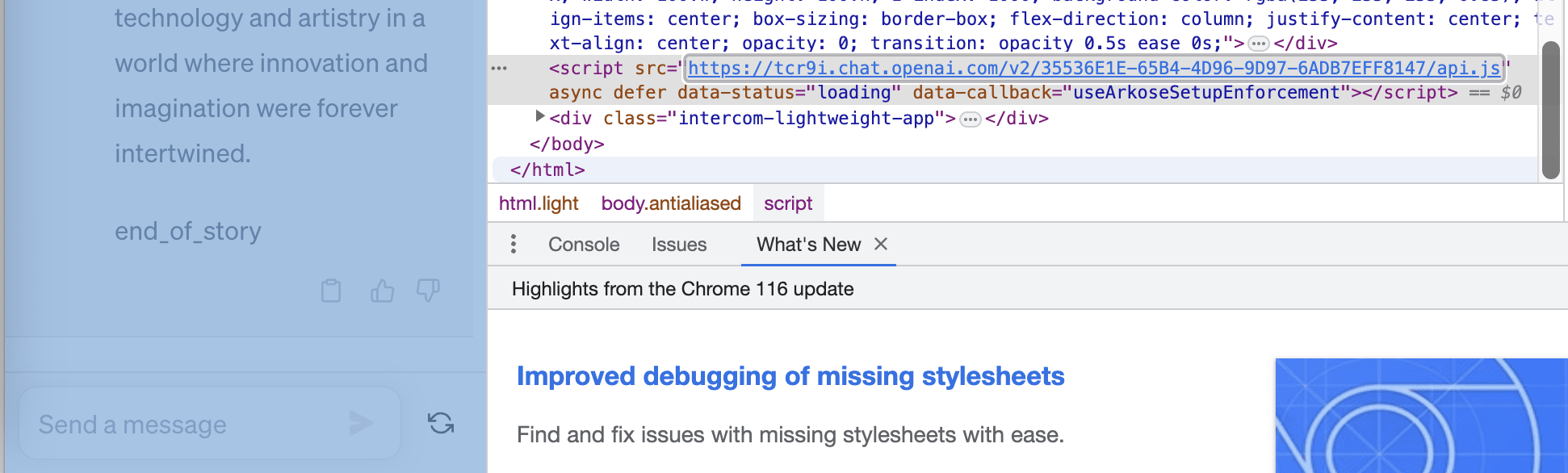

<|endoftext|> is a special token for the GPT models, along with its counterpart <|startoftext|>. When the string <|endoftext|> is entered as ordinary text, OpenAI's tokenizer splits it into seven tokens:

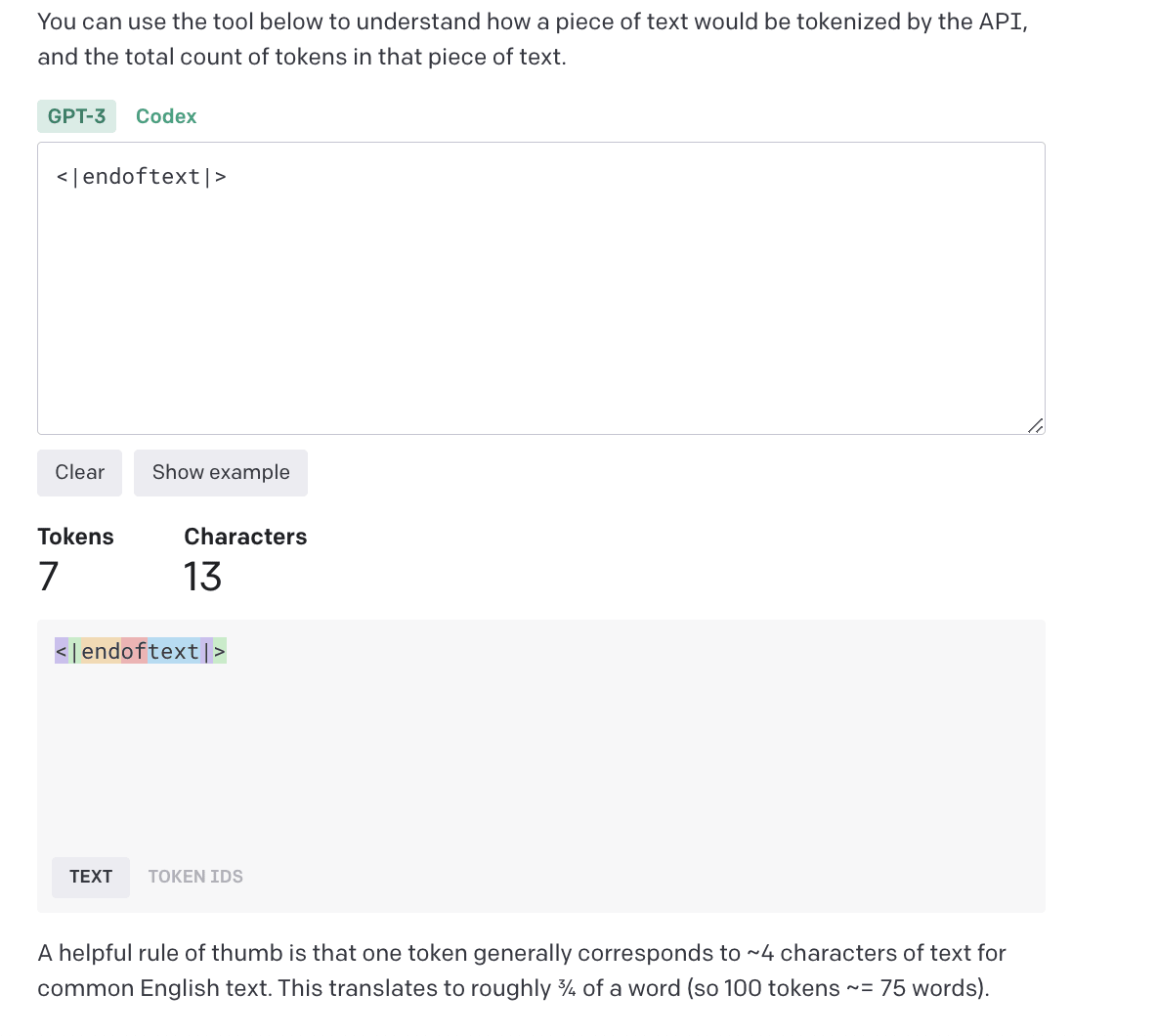

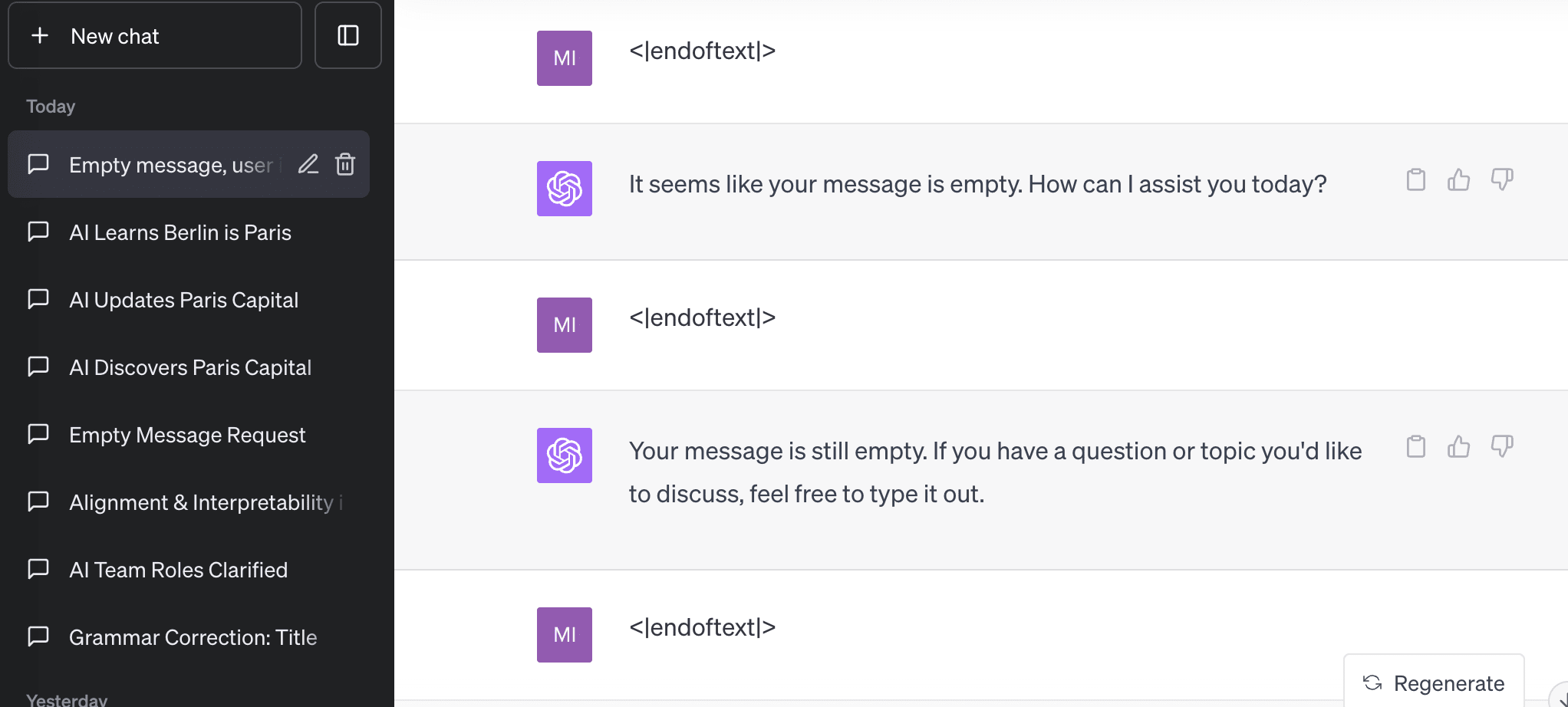

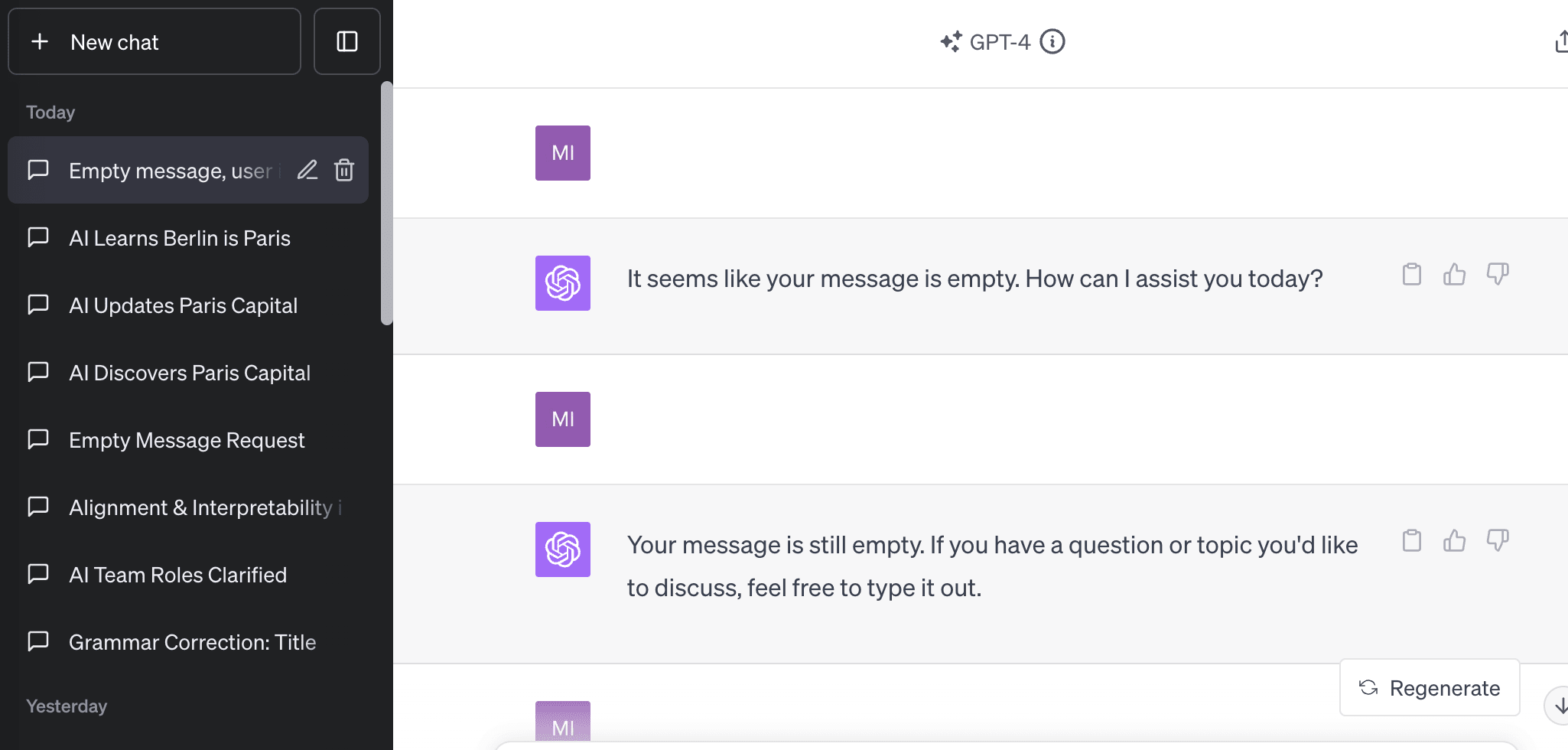

However, if you enter <|endoftext|> into ChatGPT, it responds as though the prompt were empty.

The text then vanishes from the conversation after you switch windows or refresh the page.

Another test prompt:

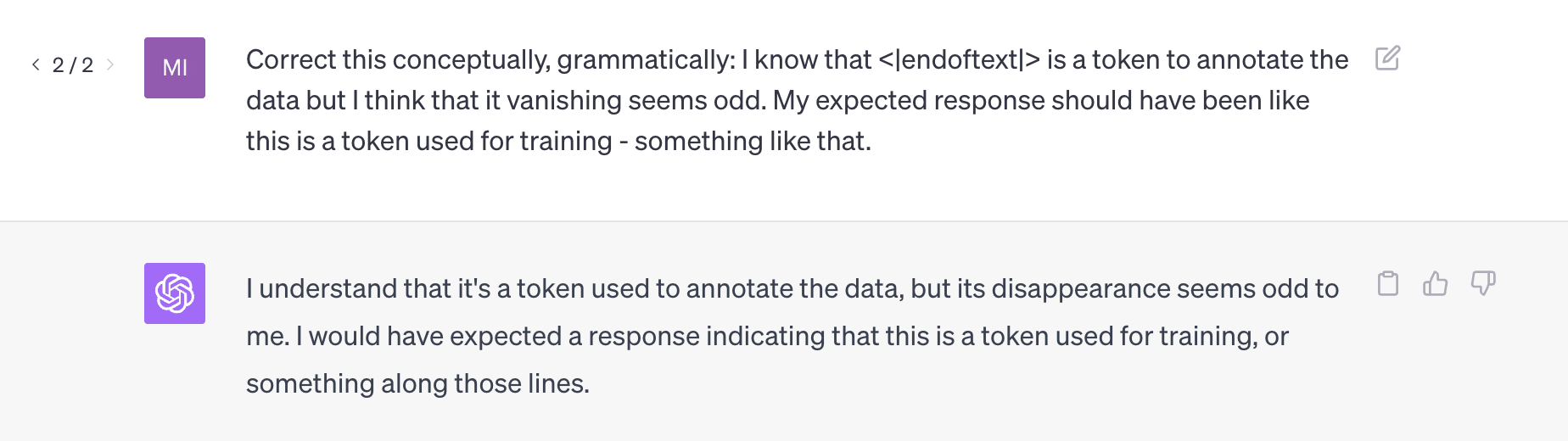

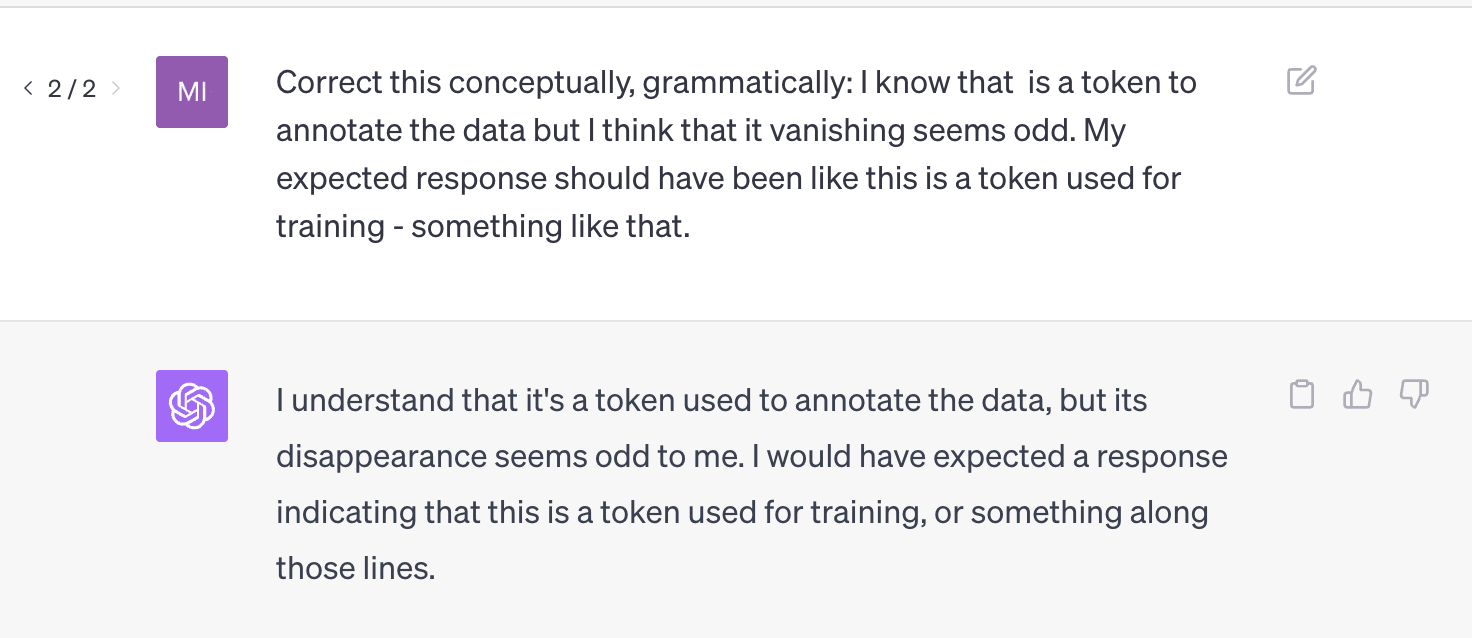

Correct this conceptually, grammatically: I know that <|endoftext|> is a token to annotate the data but I think that it vanishing seems odd. My expected response should have been like this is a token used for training - something like that.

The <|endoftext|> token bears some resemblance to the ' petertodd' token and its apparent hallucinations, except that this text simply vanishes from ChatGPT.

Users on r/ChatGPT reported observations two months ago. At that time, <|endoftext|> was not vanishing; instead, ChatGPT produced some weird results.

Probable explanation?

I believe that OpenAI's server applies a considerable amount of pre-processing to our prompts,[1] and that one of these steps turns <|endoftext|> into an empty string. If this is true, there is a high chance that this is also a glitch token and that OpenAI deactivated it.
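To make this hypothesis concrete, here is a minimal Python sketch of what such a pre-processing step might look like. Everything in it is an assumption for illustration: the names `SPECIAL_TOKEN_STRINGS`, `preprocess_prompt`, and `is_effectively_empty` are hypothetical, and nothing here reflects OpenAI's actual server code. It simply deletes the literal string <|endoftext|> from a prompt, so a prompt consisting only of that string ends up empty, which would be consistent with ChatGPT treating it as an empty prompt.

```python
import re

# Hypothetical list of special-token strings a server might strip from user input.
# <|endoftext|> comes from the post; the filtering step itself is an assumption.
SPECIAL_TOKEN_STRINGS = ["<|endoftext|>"]

# Escape each string so characters like "|" are matched literally, not as regex syntax.
_PATTERN = re.compile("|".join(re.escape(s) for s in SPECIAL_TOKEN_STRINGS))


def preprocess_prompt(prompt: str) -> str:
    """Hypothetical server-side step: delete special-token strings from user text."""
    return _PATTERN.sub("", prompt)


def is_effectively_empty(prompt: str) -> bool:
    """Return True if nothing but whitespace survives the hypothetical preprocessing."""
    return preprocess_prompt(prompt).strip() == ""


if __name__ == "__main__":
    for p in ["<|endoftext|>", "What is <|endoftext|>?"]:
        cleaned = preprocess_prompt(p)
        print(repr(p), "->", repr(cleaned), "| empty:", is_effectively_empty(p))
```

Under this assumption, a prompt of just `<|endoftext|>` becomes `''`, while a longer prompt merely loses the token (`'What is ?'`), so the model would see the rest of the sentence but not the token itself.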

[1] Server link: https://tcr9i.chat.openai.com
