Core of AI projections from first principles: Attempt 1

post by tailcalled · 2023-04-11T17:24:27.686Z · LW · GW · 3 comments


I have at various times commented on AI projections and AI risk, using my own models of the key issues. However, I have not really crystallized any overview of what my models imply, partly because I am uncertain about some key issues, but also partly because I just haven't sat down to work through the full implications of my views.

For the next while, I plan to make a post like this every other week, where I try to describe the core of the issues and open them up for debate, so I can hopefully crystallize something more solid.

Currently, in order to get pretty much anything[1] done, the most effective method is to get a human to handle it, either by having them do it directly, or having them maintain/organize human-made systems[2] which do it. This is because humans contain reasonably high-fidelity sensors for the most commonly useful modalities[3], flexible and powerful information-processing and decision-making capabilities, flexible actuators[4], and comprehensive self-repair/self-maintenance mechanisms.

These features together make humans capable of contributing to pretty much any task we can solve, and there's nothing else with these features that can compete with humans. I believe humans are on the (quality, flexibility) Pareto frontier in terms of actuators and information-processing/decision-making[5], but that human-made technology exceeds humans for a lot of different sensor types.

Most of the marginal value seems to come from the information-processing and decision-making, aka intelligence[6]. This is partly because coordination is very scarce. But it is also because interfaces are very scarce (in particular, while sensors and actuators might seem to have a lot of independent value, non-human sensors and actuators have much room for improvement, which more intelligence applied to technical advancements could achieve, so intelligence "picks up" value from the value of actuators and sensors).

Eventually we will get superhuman artificial intelligence.[7] Some people say that will go badly for humanity, but let's start with the main (and only?) analysis for why it will go well for humanity. The econ 101 analysis is that the price of labor will massively decrease compared to the price of capital, and that humanity will therefore switch from living off our labor to living off rents from capital.[8] The core of the AI debate is then whether this econ 101 analysis holds or not.

The most critical piece for the econ 101 analysis is that property rights must be sufficiently respected. We need to avoid superhuman artificial intelligence doing any of the following:

- Forcefully grabbing control over the capital that we want to rent to it,

- Destroying the methods we use to prevent it from using force and then grabbing control over the capital,

- Tricking us into giving it control over the capital for free,

- Tricking us into destroying ourselves and then grabbing control by default,

- Preventing us from noticing or communicating that it has grabbed control over the capital,

- Changing aspects of nature that we rely on to survive and then grabbing control over the capital,

- Possibly more that I haven't thought of right now.

These things will not be prevented "by default": we would ask the AI to do something, and it is easier to do something with access to capital than without it, so by default the AI would grab whatever capital is available.

Open questions

The above represents the basic parts of the analysis which I believe everyone should accept as true (though perhaps not relevant to their projections). In order to continue the analysis, there are some things I am uncertain about:

- Who will be the main parties attempting to prevent AIs from grabbing our capital?

- One proposal I've seen is that the future will be multipolar with many different AIs competing, and these AIs need some organization ensuring order, so they create that, and humans survive by joining it.

- Another proposal I've seen is that existing human institutions (governments, AI firms, etc.) can continue to enforce law, regulations, safety testing, etc. Since the AIs would be of superhuman intelligence, this presumably requires applying the AIs themselves to avoid getting tricked.

- The MIRI theory is that neither of the above will work and we need to make an AI intrinsically value human/transhuman flourishing and then let that AI take over the world.

- What will a post-human economy produce?

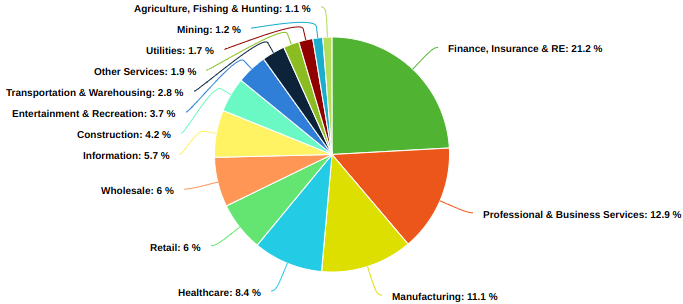

To contrast with the post-human economy, let's look at the current human economy. I can't find any world-wide breakdown, but the US is the country with the biggest economy, and I found this chart which breaks down what the US produces:

A substantial part of these (by my count, at least healthcare, retail, entertainment and recreation, other services, and agriculture, fishing and hunting = 21.1%) cater fairly directly to basic human-specific needs (e.g. agriculture, fishing and hunting produce food which we can eat). This is probably an underestimate, since the chart covers only the private sector (excluding the public sector), and since some of the other industries also sometimes cover human-specific needs.

Most of the rest seems like gathering basic resources that can be applied to many purposes (e.g. mining) or performing basic services which can be applied to many purposes (e.g. finance smooths over certain coordination problems). However, it seems to me that they need some purposes that they can be applied to. An obvious candidate is that they could keep supporting the sectors catering to basic human needs, though of course for this candidate purpose to hold, humans have to continue to exist.

Another candidate purpose would be expansion. E.g. if some AI is programmed to gain control over as much stuff as possible, it will need supporting industry to maintain and expand that control. This probably includes a lot of the industries in the diagram above. One reason to expect such an AI to become dominant in the economy is that trying to gain control seems like the most straightforward way of actually gaining control. That said, this leaves open questions about the degree of expansion, e.g. would it expand into space?

I feel like the goals of the post-human economy may matter a lot for what to project for the future, but I also feel like I don't have a good idea of what those goals would end up being.

[1] E.g. moving objects around, protecting and nourishing organisms as they grow, observing an area and reporting relevant information, developing new technology or scientific understanding, accumulating resources, resolving conflicts between humans

[2] E.g. vehicles, computers, bureaucracies, buildings

[3] E.g. especially vision, but also sound, temperature, texture, force

[4] Whose flexibility allows them to be augmented with human-made less-flexible but more-powerful actuators if needed

[5] Human information-processing and decision-making exceeds our current best theories of efficient rationality. Nonhuman robots often seem clunky and specialized.

[6] Not to be confused with g-factor, the thing IQ tests measure. This notion of intelligence also encompasses things like interests; anything purely informational which makes you better at real-world tasks.

[7] This might benefit from justification. I'm just going to leave it as is because barring extinction or coordination to avoid technological development, it is obviously true. However, the trajectory we get there with might matter a lot, and we might learn things about the trajectory from the justification.

[8] I.e. retiring from working, and spending the rest of our lives around family/friends, watching things unfold as AI manages the world, and enjoying whichever products they create for us.

3 comments

Comments sorted by top scores.

comment by Gunnar_Zarncke · 2023-04-11T22:35:43.437Z · LW(p) · GW(p)

Thank you for the post. It got me thinking about human values not only as something inherent to a single human being but as something that is a property of humans interacting in a shared larger system (with quantities that you can call, e.g., "capital"). A while back, I was thinking about classes of alignment solutions around the idea of leaving the human brain untouched, but that doesn't work as an AGI could intervene on the environment in many ways, and I discarded this line of thought.

Now, I have revisited the idea. I tried to generalize the idea of capital. Specifically, I was searching for a conserved or quasi-conserved quantity related to human value. Or, equivalently, a symmetry of the joint system. I have not found anything good yet, but at least I wanted to share the idea, such that others can comment on whether the direction may be worthwhile or not.

One idea was to look at causality chains (in the sense of Natural Abstraction: A can reach B) that go from humans to humans (treating a suitably bounded human as a node). If we can quantify the width (in terms of bandwidth, delay, I don't know) of these causality chains, then we could limit the AGI to actions that don't reduce these quantities.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-04-11T18:59:00.193Z · LW(p) · GW(p)

Glad to see this from you, tailcalled. I've been appreciating your insightful comments, and I think it's valuable to gather the views of the strategic landscape from multiple different thinkers engaged with this issue. I don't have a specific critique, just wanted to say I would enjoy seeing more such thoughts from you. Here's a comment I recently made with my current take on the strategic landscape of AGI: https://www.lesswrong.com/posts/GxzEnkSFL5DnQEAsZ/paulfchristiano-s-shortform?commentId=hEQL7rzDedGWhFQye

Replies from: tailcalled

comment by tailcalled · 2023-04-11T20:01:56.027Z · LW(p) · GW(p)

Thanks! 😊