Formalizing Objections against Surrogate Goals

post by VojtaKovarik · 2021-09-02T16:24:39.818Z


I recently had a chance to look into surrogate goals and safe Pareto improvements, two recent proposals for avoiding the realization of threats in multiagent scenarios. In this report, I formalize some objections to these proposals.

EDIT: by discussing objections to SG/SPI, I don't mean to imply that the objections are novel or unknown to the authors of SG/SPI. (The converse is true, and most of them even explicitly appear in corresponding posts/paper.) Rather, I wanted to look at some of the potential issues in more detail.

Introduction

The surrogate goals (SG) idea proposes that an agent might adopt a new seemingly meaningless goal (such as preventing the existence of a sphere of platinum with a diameter of exactly 42.82cm or really hating being shot by a water gun) to prevent the realization of threats against some goals they actually value (such as staying alive) [TB1, TB2]. If they can commit to treating threats to this goal as seriously as threats to their actual goals, the hope is that the new goal gets threatened instead. In particular, the purpose of this proposal is not to become more resistant to threats. Rather, we hope that if the agent and the threatener misjudge each other (underestimating the commitment to ignore/carry out the threat), the outcome (Ignore threat, Carry out threat) will be replaced by something harmless.

Safe Pareto improvements (SPIs) [OC] are a formalization and generalization of this idea. In the straightforward interpretation, the approach applies to a situation where an agent delegates a problem to their representative --- but we could also imagine “delegating” to a future self-modified version of oneself. The idea is to give instructions like “if other agents you encounter also have this same instruction, replace ‘real’ conflicts with them with ‘mock’ conflicts, in which you all act like you would in a real conflict, but bad outcomes are replaced by less harmful variants”. Some potential examples would be fighting (should a fight arise) to the first blood instead of to the death, or threatening diplomatic conflict instead of a military one. In the SG setting, SPI could correspond to a joint commitment to only use water-gun threats while behaving as if the water gun were a real gun.

In the following text, we first describe SPI in more detail, focusing on the setting to which it applies and on the assumptions it makes. We then list a number of potential objections to this proposal. The question we study in most detail is what the right framing for SPI is. We also briefly discuss how to apply SPI to imperfect information settings and how it relates to bargaining.

Our tentative conclusion is that (1) we should not expect SG and SPI to be a silver bullet that solves all problems with threats but (2) neither should we expect them to never be useful. However, since our results mostly take the form of observations and examples, they should not be viewed as final. Personally, the author believes[1] that SPI might “add up to normality” --- that it will be a sort of reformulation of existing (informal) approaches used by humans, with similar benefits and limitations. (3) However, if true, this would not mean that investigating SPI would be pointless; quite the contrary. For one, our AIs can only use “things like SPI” if we actually formalize the approach. Second, since AIs can be better at making credible commitments and being transparent to each other, the approach might work better for them.

Framing and Assumptions

We first give a description that aims to keep close to the spirit of [OC]. Afterwards, we follow with a more subjective interpretation of the setting.

SPI: Summary Attempt

Formally, safe Pareto improvements, viewed as games, are defined as follows: find some “subgame” S of an original (normal-form) game G, ie, a game that is like the original game, except that some actions are forbidden and some outcomes have “fake” payoffs (ie, different from what they actually are). Do this in such a way that (1) S is “strategically equivalent” [2] to G --- that is, if we iteratively remove strictly dominated strategies from G, we get a game G’ that is isomorphic to S; and (2) whenever this isomorphism maps an outcome o in G’ onto outcome p in S, the actual (“non-fake”) payoff corresponding to p (in G) will Pareto-improve[3] on the payoff corresponding to o (in G). This will mean that S is a safe Pareto improvement on G with respect to any policy profile that (A_ISO) plays isomorphic games isomorphically and (A_SDS) respects the iterated removal of strictly dominated strategies. (But we could analogously consider SPIs with respect to a narrower class of policies.)

| Blackmail Game | Threaten (gun) & commit | Bluff (gun) | Threaten (water gun) & commit | Bluff (water gun) |
|---|---|---|---|---|
| Ignore threat | -100, -20 | 0, -3 | -1, -1 | 0, 0 |
| Give in (real-gun only) | -10, 10 | -10, 7 | -1, -1 | 0, 0 |
| Give in always | -10, 10 | -10, 7 | -10, 10 | -10, 10 |

| SPI on the Blackmail Game | Threaten (water gun) & commit, but seems like Threaten (gun) & commit | Bluff (water gun), but seems like Bluff (gun) |
|---|---|---|
| Ignore threat | -1, -1 but seems like -100, -20 | 0, 0 but seems like 0, -5 |
| Give in always, but seems like Give in (real gun only) | -10, 10 but seems like -10, 7 | -10, 10 but seems like -10, 7 |

Figures 1 & 2: A blackmail game and an SPI on it (under the assumption that by default, the threatener will never use water-gun threats and the threatenee will never give in to them).

The setting in which SPIs are used can be succinctly described like this:

- Environment: N-player (perfect-information, general-sum) normal-form games; communication

- Assumptions on agent abilities: randomization; voluntary source-code transparency to a chosen degree; in particular: correlation devices; credible commitments; conditioning on revealed source-code of others

- Non-assumptions: can’t rely on calculating expected utilities

In more detail: We have a “game” between several players. However, each player (principal) uses an agent (representative) that acts on their behalf. Formally, the representatives play a normal-form game. We assume that before playing the game, the agents can negotiate in a further unconstrained manner; eg, they can use a correlation device to coordinate their actions if they so choose. Each of the agents has some policy according to which they negotiate and act --- not just in the given situation, but also in all related situations. (We can imagine this as a binding contract, if the agent is human, or a source code if the agent is an AI.) A final assumption is that the agents have the ability to truthfully reveal some facts about their policy to others. For example, the agent could reveal the full policy, make credible commitments like “if we toss a coin and it comes out heads, I will do X”, or prove to the other agent that “I am not telling you how I will play, but I will play the same way that I would in this other game”.

Similarly to [OC], we assume that the agents might be unable to compute the expected utility of their policy profiles (perhaps because they don’t know their opponents’ policy, or they do not have access to a simulator that could compute its expected value). This is because with access to this computation, the agents could use simpler methods than SPI [OC]. However, we don’t assume that the agents are never able to do this, so our solutions shouldn’t rely on the inability to compute utilities either. This will become relevant later, eg, once we discuss how the principals could strategize about which agent to choose as a representative.

The SPI approach is for the principals to design their representatives roughly as follows: give the representative some policy P (that probabilistically selects an action in every normal-form game) that satisfies the assumptions (A_ISO) and (A_SDS). And give it the meta-instructions “Show these meta-instructions to other players, plus prove to them (A_ISO), (A_SDS), and the fact that you have no other meta-instructions. If their meta-instructions are identical to yours, play S instead of G.” The reasoning is that if everybody designs their agent like this, they must, by definition of S, be better off than if they played P directly. (And hence using agents like this is an equilibrium in the design space.)

The interpretation of this design can vary. First, we can view the description literally: we actually program our AI to avoid certain actions and treat (for preference purposes) the remaining outcomes differently than it normally would, conditional on other AIs also doing so. We call this the “surrogate goals interpretation”. Alternatively, we can imagine that the action taken by the representative agent is not a direct action but rather a decision to take an action. In this case, SPI can be viewed as an agreement between the principals --- before knowing what actions the representatives select, the principals agree to override dangerous actions by safer ones.[4] We call this the “override interpretation”. This framing also opens up the option for probabilistic deals such as “if our agents would by default get into a fight, randomly choose one to back off”. Formally, this means overriding specific harmful joint actions by randomly chosen safer joint actions. In [OC], this is called perfect-coordination SPI.

Non-canonical Interpretation

For reasons that will become clear in the next sections, we believe that the following non-canonical interpretation of SPI is also useful: Suppose that a group of principals are playing a game. But rather than playing the game directly, each plays through a representative. The representatives have already been dispatched, there is no way to influence their actions anymore, and their decisions will be binding. Suddenly, a Surrogate Fairy swoops down from the sky --- until now, nobody has even suspected her existence, or of anything like her. She offers the principals a magical contract that, should they all sign it, will ensure that some suboptimal joint outcomes will, should they arise, be replaced by some other joint outcomes that Pareto-improve on them.

Potential Objections to SPI

The main objection we discuss is that the proposed framing for SPI is unrealistic since it assumes that the agent's policy does not take into account the fact that SPI exists. We also briefly bring up several other potential objections:

- SPI does not help with bargaining.

- SPI might fail to generalize to imperfect-information settings.

- If SPI works, why don’t humans already do something similar?

- SPI might empower bad actors.

There are other potential objections, such as: agents might spend resources on protecting the surrogate goal, making them less competitive; agents might refuse to use SPI for signalling purposes; it might be hard to make the approach “threatener-neutral”; it might be hard to make SPI work as intended for repeated interactions. However, we believe that all of these are either special cases of our main objection or closely related to it, so we refrain from discussing them for now.

Illustrating our Main Objection: Unrealistic Framing

We claim that to make SPI work fully-as-intended, we need one additional assumption (A_SF): outside of the situation to which SPI applies, the representatives and principals must act, at least for strategic purposes, as if the SPI approach did not exist.[5] (“They must not know that the Surrogate Fairy exists, or at least act as if they didn’t.”) Note that we do not claim that if (A_SF) is broken, SPI won’t work at all --- merely that it might not work as well as one might believe based on reading [OC]. To explain our reasoning, let us give a bit more context:

The reason why SPI should be mutually beneficial is that thanks to (A_ISO) and (A_SDS), the players will play the subgame S “the same way” they would play the original game G. And since for any specific G-outcome, the isomorphic S-outcome is a Pareto improvement, the players can only become better off by agreeing to use SPI. However, (A_ISO) and (A_SDS) only ensure that they play S “the same way” they would play G in that specific situation --- that is, their policy does not depend on whether the other player agrees to the SPI contract. The agent's policy can still, in theory, depend on whether the agent is in a world where “SPI is a thing”.

Unfortunately, the policy’s dependency on SPI existing can be extremely hard to avoid or detect. For example, a principal could update on the fact that SPI is widely used and instruct their representative AI to self-modify to be more aggressive (now that the risks are lower) in a way that leaves no trace of this self-modification. Alternatively, the principal could one day learn that SPI is widely used and implement it in all of their representatives. And sometime later, they might start favouring representatives that bargain more aggressively (since the downsides to conflict are now smaller). Critically, this choice could even be subconscious. In fact, there might be no intention to change the policy whatsoever, not even a subconscious one: If there is an element of randomness in the choice of policies, selection pressures and evolutionary dynamics (on either representatives or the principals who employ them) might do the rest.

Motivating story: A game played between a caravan and bandits. By default, the caravan profit is worth $10, but both caravan and bandits need to eat, which costs each of them $1. Bandits can leave the caravan alone and forage (in which case they don’t need to spend on food). Or they can ambush the caravan and demand a portion of the goods.[6] If the caravan resists, all the goods get destroyed and both sides get injured. If the caravan gives in, $2 worth of goods gets trampled in the commotion and they split the rest.

The Basic Setup

Consider the following matrix game:

| G; Bandit game | Demand | Leave alone |
|---|---|---|
| Give in | 3, 3 | 9, 0 |
| Resist | -2, -2 | 9, 0 |

The game has two types of Nash equilibria: the pure equilibrium (Give in, Demand) and mixed equilibria (Resist with prob. >= 3/5, Leave alone). Assume that (Give in, Demand) is the default one (why bother being a bandit if you don’t ambush anybody).
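The 3/5 threshold can be checked directly from the table. The following Python sketch (the helper names `bandit_payoff` and `leave_alone_is_best_response` are illustrative, not part of the original analysis) computes the bandits' expected payoff when the caravan resists with probability p. Demand yields 3(1 - p) - 2p = 3 - 5p, which is no better than the Leave alone payoff of 0 exactly when p >= 3/5.

```python
from fractions import Fraction

# Payoffs (caravan, bandits) of G, copied from the table above.
G = {
    ("Give in", "Demand"): (3, 3),
    ("Give in", "Leave alone"): (9, 0),
    ("Resist", "Demand"): (-2, -2),
    ("Resist", "Leave alone"): (9, 0),
}


def bandit_payoff(bandit_action, p_resist):
    """Bandits' expected payoff if the caravan resists with probability p_resist."""
    resist = G[("Resist", bandit_action)][1]
    give_in = G[("Give in", bandit_action)][1]
    return p_resist * resist + (1 - p_resist) * give_in


def leave_alone_is_best_response(p_resist):
    """True if the bandits cannot gain by switching from Leave alone to Demand."""
    return bandit_payoff("Leave alone", p_resist) >= bandit_payoff("Demand", p_resist)


# Print the Demand payoff and the best-response check for a few resist probabilities.
for p in (Fraction(1, 2), Fraction(3, 5), Fraction(4, 5)):
    print(p, bandit_payoff("Demand", p), leave_alone_is_best_response(p))
```

Against Leave alone, the caravan gets 9 whichever action it picks, so any such mix is also a best response for the caravan.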

To analyze safe Pareto improvements of this game, we extend it by two actions that are theoretically available to both parties, but unlikely to be used:

| G’; extension of G | Demand (armed) | Leave alone | Demand unarmed |
|---|---|---|---|
| Give in (armed only) | 3, 3 | 9, 0 | 9, -1 |
| Resist | -2, -2 | 9, 0 | 9, -1 |
| Give in always | 3, 3 | 9, 0 | 4, 4 |

The assumption (A1), that neither of the two new actions gets played, seems reasonable: For the caravan, “Give in (armed only)” weakly dominates “Give in always” --- they have no incentive to use the latter. And for any fixed caravan strategy, the bandits will be better off playing either “Demand (armed)” or “Leave alone” instead of “Demand unarmed”.

We now consider the following subgame[7] of this extension.

| S; SPI on G’ | Demand unarmed | Leave alone |
|---|---|---|
| Give in always | 3, 3 | 9, 0 |
| Resist | -2, -2 | 9, 0 |

If we further assume that (A2) both sides play isomorphic games isomorphically, the subgame S above will be a safe Pareto improvement on G’. Indeed, the “Leave alone” outcomes remain the same, while (Give in always, Demand unarmed) and (Resist, Demand unarmed) are strict Pareto improvements on (Give in (armed only), Demand (armed)) and (Resist, Demand (armed)), respectively: in terms of actual payoffs, (4, 4) improves on (3, 3) and (9, -1) improves on (-2, -2).
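The Pareto-improvement claim can be verified mechanically. Below is a minimal sketch, assuming the outcome mapping described in the paragraph above (armed demands map to unarmed ones, “Give in (armed only)” maps to “Give in always”, and “Leave alone” outcomes map to themselves). The names `G_EXT`, `ISOMORPHISM`, and `is_safe_pareto_improvement` are made up for illustration. Following footnote [3], ties count as Pareto improvements.

```python
# Actual payoffs (caravan, bandits) of G', copied from the table above.
G_EXT = {
    ("Give in (armed only)", "Demand (armed)"): (3, 3),
    ("Give in (armed only)", "Leave alone"): (9, 0),
    ("Give in (armed only)", "Demand unarmed"): (9, -1),
    ("Resist", "Demand (armed)"): (-2, -2),
    ("Resist", "Leave alone"): (9, 0),
    ("Resist", "Demand unarmed"): (9, -1),
    ("Give in always", "Demand (armed)"): (3, 3),
    ("Give in always", "Leave alone"): (9, 0),
    ("Give in always", "Demand unarmed"): (4, 4),
}

# Assumed isomorphism: each default outcome -> the outcome that replaces it.
ISOMORPHISM = {
    ("Give in (armed only)", "Demand (armed)"): ("Give in always", "Demand unarmed"),
    ("Give in (armed only)", "Leave alone"): ("Give in always", "Leave alone"),
    ("Resist", "Demand (armed)"): ("Resist", "Demand unarmed"),
    ("Resist", "Leave alone"): ("Resist", "Leave alone"),
}


def pareto_improves(new, old):
    """Weak Pareto improvement: no player is worse off (ties allowed)."""
    return all(n >= o for n, o in zip(new, old))


def is_safe_pareto_improvement(game, isomorphism):
    """Check that every replaced outcome is at least as good for everyone."""
    return all(
        pareto_improves(game[image], game[source])
        for source, image in isomorphism.items()
    )


print(is_safe_pareto_improvement(G_EXT, ISOMORPHISM))
```

Note that the check uses only the actual payoffs of G’; the “fake” payoffs shown in the SPI table matter for how the agents play, not for whether the replacement is an improvement.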

The Meta-Game

Consider now the above example from the point of view of the bandit leader and the merchant who dispatches the caravan. The bandit leader is deciding whether to use the SPI or not. Both sides are deciding which policy to assign to their agent and whether the agent should sign the SPI contract or not. But we will assume that the caravan is always on board with using the SPI (which seems reasonable) and the bandits will never use the “Leave alone” action (which we revisit later). The resulting meta-game looks as follows:

| M; Meta-game | don’t use SPI (& Instr. to Demand) | use SPI (& Instr. to Demand) |
|---|---|---|
| Instruct to give in (& use SPI) | 3, 3 | 4, 4 |
| Instruct to resist (& use SPI) | -2, -2 | 9, -1 |

M has a single Nash equilibrium: (Instruct to resist, use SPI). We discuss the possible implications of this in the next section.

Embedding in a Larger Setting

To see whether the introduction of SPI is beneficial or not, we frame the problem a bit broader than we did above. First, we argue that SPI should not incentivize the players to opt out of participating. Second, we will see that whether the players use SPI or not depends on the larger setting in which G and M appear.

Opting-out is Undesirable: First, we discuss the opt-out option: In the merchant’s case, this means not operating on the given caravan route (utility 0). In the bandit leader’s case, it means disbanding the group and becoming farmers instead (utility 1):

| M’; extension of M | don’t use SPI | use SPI | opt-out |
|---|---|---|---|
| Instruct to give in | 3, 3 | 4, 4 | 9, 1 |
| Instruct to resist | -2, -2 | 9, -1 | 9, 1 |
| opt-out | 0, -1 | 0, -1 | 0, 1 |

The pure Nash equilibrium of this game is (Instruct to resist, opt-out), which seems desirable. However, consider the scenario where the bandits are replaced by, eg, rangers who keep the forest clear of monsters (or an investor who wants to build a bridge over a river). We could formalize it like the game M’ above, except that the (9, 1) payoffs corresponding to the ( _ , opt-out) outcomes would change to (-1, 1). The outcome (opt-out, opt-out) becomes the new equilibrium, which is strictly worse than the original (3, 3) outcome:

| M_a; alternative to M’ | don’t use SPI | use SPI | opt-out |
|---|---|---|---|
| Instruct to give in | 3, 3 | 4, 4 | -1, 1 |
| Instruct to resist | -2, -2 | 9, -1 | -1, 1 |
| opt-out | 0, -1 | 0, -1 | 0, 1 |
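The equilibrium claims for M, M’ and M_a can be double-checked by brute force. The sketch below enumerates pure-strategy Nash equilibria only (mixed equilibria are not searched for); the function name `pure_nash` and the shortened action labels are illustrative choices, not notation from the original analysis.

```python
def pure_nash(game):
    """Return all pure-strategy Nash equilibria of a two-player game.

    `game` maps (row_action, column_action) -> (row_payoff, column_payoff).
    """
    rows = sorted({r for r, _ in game})
    cols = sorted({c for _, c in game})
    equilibria = []
    for (r, c), (u_row, u_col) in game.items():
        # Neither player may gain from a unilateral deviation.
        row_ok = all(game[(r2, c)][0] <= u_row for r2 in rows)
        col_ok = all(game[(r, c2)][1] <= u_col for c2 in cols)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


# Rows: merchant (caravan). Columns: bandit leader. Payoffs from the tables above.
M = {
    ("give in", "no SPI"): (3, 3),
    ("give in", "SPI"): (4, 4),
    ("resist", "no SPI"): (-2, -2),
    ("resist", "SPI"): (9, -1),
}
M_PRIME = {
    **M,
    ("give in", "opt-out"): (9, 1),
    ("resist", "opt-out"): (9, 1),
    ("opt-out", "no SPI"): (0, -1),
    ("opt-out", "SPI"): (0, -1),
    ("opt-out", "opt-out"): (0, 1),
}
# M_a: the merchant now loses out when the column player opts out.
M_ALT = {
    **M_PRIME,
    ("give in", "opt-out"): (-1, 1),
    ("resist", "opt-out"): (-1, 1),
}

for name, game in (("M", M), ("M'", M_PRIME), ("M_a", M_ALT)):
    print(name, pure_nash(game))
```

Each of the three games has exactly one pure equilibrium under this check: (resist, SPI) for M, (resist, opt-out) for M’, and (opt-out, opt-out) for M_a.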

Broader Settings: The meta-behaviour in the bandit example strongly depends on the specific setting. We show that SPI might or might not get adopted, and when it does, it might or might not change the policy that the agents are using.

Nash equilibrium, Evolutionary dynamics: First, consider the (unlikely) scenario where the game M’ is played in a one-shot setting between two rational players. Then the players will play the Nash equilibrium (Instruct to resist, opt-out).

Second, consider the scenario where there are many bandit groups and many merchants, each of which can use a different (pure) strategy. At each time step, bandit groups and merchants get paired randomly. In the next step t+1, the relative frequency of each strategy s will be proportional to weight_t(s)*exp(utility of s against the other population at t). Under this dynamic, the only evolutionarily stable strategy is the NE above. (Intuitively: Using SPI outcompetes not using it. Once using SPI is prevalent, resisting outcompetes giving in. And if opting-out is an option, the column population will switch to it. Formally: Under this evolutionary dynamic, evolutionarily stable states coincide with Nash equilibria.)
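To get a feel for this dynamic, here is a small simulation sketch on M’. It is one illustrative run, not a proof of the stability claim: the uniform starting frequencies and the number of steps are assumptions, and the function names are made up. Each population's weights are multiplied by exp(expected utility against the other population's current mix) and then renormalized.

```python
import math

CARAVAN = ["give in", "resist", "opt-out"]
BANDITS = ["no SPI", "SPI", "opt-out"]
# PAYOFF[i][j] = (caravan, bandits) payoff in M' for CARAVAN[i] vs BANDITS[j].
PAYOFF = [
    [(3, 3), (4, 4), (9, 1)],
    [(-2, -2), (9, -1), (9, 1)],
    [(0, -1), (0, -1), (0, 1)],
]


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def step(x, y):
    """One update: weight_{t+1}(s) is proportional to weight_t(s) * exp(utility of s)."""
    u_caravan = [sum(y[j] * PAYOFF[i][j][0] for j in range(3)) for i in range(3)]
    u_bandits = [sum(x[i] * PAYOFF[i][j][1] for i in range(3)) for j in range(3)]
    new_x = normalize([x[i] * math.exp(u_caravan[i]) for i in range(3)])
    new_y = normalize([y[j] * math.exp(u_bandits[j]) for j in range(3)])
    return new_x, new_y


def simulate(steps=200):
    x = [1 / 3] * 3  # assumed uniform starting frequencies (caravans)
    y = [1 / 3] * 3  # assumed uniform starting frequencies (bandits)
    for _ in range(steps):
        x, y = step(x, y)
    return x, y


caravan_freq, bandit_freq = simulate()
print(dict(zip(CARAVAN, caravan_freq)))
print(dict(zip(BANDITS, bandit_freq)))
```

In this run, the bandit population ends up almost entirely on opt-out and the caravan population mostly on resist. “use SPI” gains on “don’t use SPI” throughout, since it pays strictly more against every caravan strategy.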

Infinitely repeated games without discounting (ie, maximizing average payoffs): Here, many different (subgame-perfect) Nash equilibria are possible (corresponding to individually rational feasible payoffs). One of them would be (Instruct to give in, use SPI), but this would be backed up by the bandits threatening the repeated use of “don’t use SPI” should the merchants deviate to “Instruct to resist”. In a NE, this wouldn’t actually be used.

Translucent or pre-committing bandits: We can also consider a setting -- one-shot or repeated -- where the bandits can precommit to a strategy in a way that is visible to the merchant player. Alternatively, we could assume that the merchant player can perfectly predict the bandits’ actions. Or that the bandits are translucent --- they are assumed to be playing a fixed strategy “Don’t use SPI”, they have the option to deviate to “Use SPI”, but there is a chance that if they do, the merchant will learn this before submitting their action.

In all of these settings, the optimal action is for the bandits to not use SPI (because it forces the caravan to give in).

The “Other” Objections

This rejection of (A_SF) complicates our models but allows us to address (at least preliminarily) some of the previously-mentioned objections:

Objection: What if the agents spend resources on protecting the surrogate goal and this makes them less competitive.

Reaction: For some designs, this might happen. For example, an agent who is equally afraid of real guns and water guns might start advocating for a water-gun ban.[8] But perhaps this can be avoided with a better design? Also, note that this might not apply to the “action override” implementation (the override interpretation described in the “SPI: Summary Attempt” section).

Objection: Agents might refuse the SPI contract as a form of signalling in incomplete information settings.

Reaction: This seems quite likely --- in a repeated setting, I might refuse some SPI contracts that would be good for me, hoping that the other agent will think that they need to offer me a better deal (a more favourable SPI) in the next rounds. More work here would be useful to understand this.

Objection: It might be hard to make the approach “threatener-neutral” (ie, to keep the desirability of threat-making the same as before).

Reaction: In the Surrogate Fairy setting, SPI is threatener-neutral by definition, since the agents use the same policy. However, without (A_SF), we saw that one party can indeed be made worse off --- which can be viewed as a kind of threatener non-neutrality. One way around this could be to subsidize that party through side-payments. (But the details are unclear.)

Finally, since we are already considering a larger context around the game, it is worth noting that there are additional tools that might help with SPI. EG, apart from side-payments, we could design agents which commit to not change their policy for a certain period of time. (Incidentally, the observation that these tools might help with making SPI work is one reason for the author’s intuition that SPI will “add up to normality”.)

SPI and Bargaining

One limitation of SPI is that even if all players agree to use it, they are still sometimes left with a difficult bargaining problem. (However, as [OC] points out, the consequences of bargaining failure might be less severe.) This can arise at two points.

SPI Selection

First, the players need to agree on which SPI to use. For example, suppose two players are faced with a “wingman dilemma” [9] : who gets to be the cool guy today?

| Wingman Dilemma | Wingman | Cool guy |
|---|---|---|
| Wingman | 1, 1 | 0, 3 |
| Cool guy | 3, 0 | 0, 0 |

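This game has a perfect-coordination SPI where the outcome (Cool guy, Cool guy) is replaced by the lottery $p \cdot (\text{Cool guy}, \text{Wingman}) + (1-p) \cdot (\text{Wingman}, \text{Cool guy})$ for some $p \in [0, 1]$, yielding expected utilities $(3p, 3(1-p))$. (We could also replace (Wingman, Wingman), but this wouldn’t change our point.)

| perf. coord. SPI on WD | Wingman | Cool guy |
|---|---|---|
| Wingman | 1, 1 | 0, 3 |
| Cool guy | 3, 0 | $3p, 3(1-p)$ but seems like 0, 0 |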

In the program meta-game [OC], we can consider strategies of the form “demand x”:

$\pi_x$ := if the opponent’s program isn’t of the form $\pi_y$ for some $y$, play Cool guy;

if the opponent plays $\pi_y$ s.t. $x + y > 3$, play Cool guy;

if the opponent plays $\pi_y$ s.t. $x + y \le 3$, coordinate with them on achieving expected utility $(x', y')$ for some random compatible $x' \ge x$, $y' \ge y$.

The equilibria of this meta-game are $(\pi_x, \pi_{3-x})$ for any $x \in [0, 3]$. In other words, introducing SPI effectively transforms WD into the Nash Demand game. Arguably, this particular situation has a simple solution: “don’t be a douche and use $x = 1.5$” (ie, the Schelling point). However, Section 9 of [OC] indicates that SPI selection can correspond to more difficult bargaining problems.
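The reduction to the Nash Demand game can be illustrated with a small sketch. It rests on two assumptions that go beyond the text: the “pie” has size 3 (the payoff the Cool guy gets when the other player is the Wingman), and, as a simplification, compatible demands are paid out exactly and any leftover surplus is ignored. Incompatible demands lead to the conflict outcome (0, 0). The names `demand_payoffs` and `is_equilibrium` are hypothetical, and demands are restricted to a grid.

```python
from fractions import Fraction

TOTAL = 3  # assumed pie size: the Cool guy's payoff against a Wingman


def demand_payoffs(x, y):
    """Simplified demand game: compatible demands are met exactly, otherwise conflict."""
    if x + y <= TOTAL:
        return (x, y)
    return (0, 0)  # both play Cool guy


# Demands 0, 1/4, ..., 3 (a finite grid keeps the deviation check exhaustive).
GRID = [Fraction(k, 4) for k in range(13)]


def is_equilibrium(x, y):
    """True if neither player can gain by switching to another demand on the grid."""
    u1, u2 = demand_payoffs(x, y)
    no_gain_1 = all(demand_payoffs(d, y)[0] <= u1 for d in GRID)
    no_gain_2 = all(demand_payoffs(x, d)[1] <= u2 for d in GRID)
    return no_gain_1 and no_gain_2


efficient_pairs = [(x, TOTAL - x) for x in GRID]
print(all(is_equilibrium(x, y) for x, y in efficient_pairs))
print(is_equilibrium(Fraction(1), Fraction(1)))
```

Every pair of demands that exactly exhausts the pie passes the check, which is why the selection problem does not go away. A pair such as (1, 1) fails because either player could demand more without triggering conflict.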

Bargaining in SPI

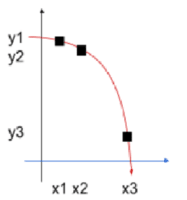

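Even once the agents agree on which SPI to use, they still need to agree on which outcome to choose. For example, consider a game G with actions $a_1, \dots, a_n$ and $b_1, \dots, b_n$, utilities $u(a_i, b_i) = u_i$ and $u(a_i, b_j) = (0, 0)$ for $i \neq j$, where $\{u_1, \dots, u_n\}$ is a subset of the first quadrant of a circle. Since none of the outcomes $(a_i, b_i)$ can be Pareto-improved upon, all of these options will remain on the Pareto-frontier of any SPI.

| | $b_1$ | $b_2$ | $b_3$ |
|---|---|---|---|
| $a_1$ | $u_1$ | 0, 0 | 0, 0 |
| $a_2$ | 0, 0 | $u_2$ | 0, 0 |
| $a_3$ | 0, 0 | 0, 0 | $u_3$ |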

Imperfect Information

For the purpose of this section, we will only consider the simplest case of “Surrogate Fairy”, where everything that the agents are concerned with is how to play the game at hand and whether to sign the SPI contract or not.

Imperfect information comes in two shapes: incomplete information (uncertainty about payoffs) and imperfect but complete information (where players know each other’s payoffs, but the game is sequential and some events might not be observable to everybody).

First, let us observe that under (A_SF), we only need to worry about incomplete information. Indeed, any complete information sequential game G has a corresponding normal-form representation N. So as long as we don’t care about computational efficiency, we can just take whatever SPI tool we have and apply it to N. In theory, this could create problems with some strategies not being subgame-perfect. However, recall that we assume that agents can make precommitments. As a result, subgame-imperfection does not pose a problem for complete information games.

However, incomplete information will still pose a problem. Indeed, this is because with incomplete information, an agent is essentially uncertain about the identity of the agent they are facing. For example, perhaps I am dealing with somebody who prefers conflict? Or perhaps they think that I might prefer conflict --- maybe I could use that to bluff? Importantly, for the purpose of SPI, the trick “reduce the game to its normal-form representation” doesn’t apply, because the agents’ power to precommit in incomplete information games is weaker. Indeed, while the agent can make commitments on the account of its future versions, it will typically not have influence over its “alternative selves”.[10] As a result, we must require that the agents behave in a subgame-perfect manner.

| Incomplete inf. WD | Wingman | Cool guy |
|---|---|---|
| Wingman | 1, 1 | 0, 3 |
| Cool guy | 3, 0 | x, y |

Here, x and y are drawn from some common-knowledge distribution, but the exact value of x, resp. y, is private to player 1, resp. 2.

We see several ways to deal with incomplete information. First, the agents might use some third-party device which proposes a subgame S (or a perfect-information token game) without taking their types into account. Each player can either accept S or turn it down --- and under (A_SF), it will get accepted by all players if and only if it is an SPI over the game that corresponds to their type. Notice that there will be a tradeoff between fairness and acceptance rate of S --- for example, in the game above, suppose that x is always the same but y can be either 0 (90%) or 2 (10%). We can either get a 50% acceptance rate by proposing the fair 50:50 split, or a 100% acceptance rate by proposing an unfair 1:2 split. (The optimal design of such third-party devices thus becomes an open problem.)

Second, the agents might each privately reveal their type to a trusted third party, which then generates a subgame that is guaranteed to be an SPI for their types. (EG, it could override some joint outcomes by others that Pareto-improve upon them, without revealing the specific mapping ahead of time to prevent leaking information.) Under (A_SF), this approach should always be appealing to all players.

Third, the agents might bargain over which subgame to play instead of G. (Alternatively: over which third-party device to use. Or the devices could return sets of subgames and the players could then bargain over which one to use.) For this to “fully” work under (A_SF), the players “only” need to guarantee that their subgame-policy does not depend on information learned during the bargaining phase. Going beyond (A_SF), it might additionally help if the policies for playing subgames do not know which subgame they are playing; this seems simple to achieve in the override interpretation but difficult in the SG interpretation.

Note that if we completely abandon (A_SF) and assume that agents interact repeatedly, the decision to accept a subgame or not might update the other agents’ beliefs about one’s type. As a result, the agents might refuse a subgame that is an SPI in an attempt to mislead the others. This behaviour seems inherent to repeated interactions in incomplete settings and we do not expect to find a simple way around it.

If SPI Works, Why Aren’t Humans Already Using It?

This is an informal and “outside-view” objection that stems from the observation that to the author, it seems that nothing like SPI is being used by humans. One might argue that SPI requires transparent policies, and humans aren’t transparent. However, humans are often partially transparent to each other. Moreover, legal contracts and financial incentives do make their commitments at least somewhat binding. As a result, we expect that if SPI “works” for AIs, it would already work for humans, at least to a limited extent. Why doesn’t it?

One explanation is that humans in fact do implicitly use things like SPI, but they are so ingrained that it is difficult for us to notice the similarities. For example, when we get into a shouting match but refrain from using fists, or have a fistfight but don’t murder each other, is that an SPI? But perhaps I only refrain from beating people up because it would get me in trouble with the police (or make me unpopular)? But could this still be viewed as a society-wide method for implementing SPI? Overall, this topic seems fascinating, but too confusing to be actionable. We view observations of this sort as weak evidence for the hypothesis that SPI will “add up to normality”.

However, this leaves unanswered the question of why humans don’t (seem to) use SPI explicitly. We might expect to see this when people reason analytically and the stakes are sufficiently high. Some areas which pattern-match this description are legal disputes, deals between companies or corporations, and politics. Why aren’t we seeing SPI-like methods there? The answer for legal disputes and politics is that SPI very well might be used there --- it is just that the author is mostly ignorant about these areas. (If somebody more familiar with these areas knows about relevant examples or their absence, these would definitely provide useful intuitions for SPI research.) For business deals, the answer is different: SPI isn’t used there because in most countries, it would be a violation of anti-trust law. Indeed, conflict between companies is often precisely the thing that makes the market better for the customers. (However, there might be other forms of conflict, eg negative advertisement, that everybody would be happy to avoid.) Finally, another reason why we might not be seeing explicit use of SPI is that SPI often applies to settings that involve blackmail. People might, therefore, refrain from using SPI lest they acknowledge that they do something illegal or immoral.

Empowering Bad Actors

As a final remark, the example of companies using SPI to avoid costly competition (eg, lowering prices) should make us wary about the potential misuses of SPI. However, this concern is not new or specific to SPI --- rather, it applies to cooperative AI more broadly.

Questions & Suggestions for Future Research

disclaimer: disorganized and unpolished

Questions related to the current text:

- Can we find a setting, similar to the "bandits become farmers" setting, where SPI makes things very bad?

- Can we find a setting where threats get carried out despite introducing SPI? Or perhaps even because of it?

More general questions:

- Assuming (A_SF), work out details of how SPI would actually apply to some real-world problem. (To identify problems we might be overlooking.)

- Describe a useful & realistic setting for SPI without (A_SF). Identify open problems for that setting.

- Meta: Is it possible to make SPI that helps with the “bad threats” but doesn’t disrupt the “good threats” (like taxes, police, and "no ice cream for you if you leave your toys on the floor")?

Conclusion

We presented a preliminary analysis of several objections to surrogate goals and safe Pareto improvements. First, we saw that to predict whether SPI is likely to help or not, we need to consider a larger context than just the one isolated decision problem. More work in this area could focus on finding useful framings in which to analyze SPI. Second, we observed that SPI doesn’t make bargaining go away --- the agents need to agree on which SPI to use and which outcome in that SPI to select. Finally, we saw some evidence that SPI might work even in incomplete information settings --- however, more work on this topic is needed.

This research has been financially supported by CLR. Also, many thanks to Jesse Clifton, Caspar Oesterheld, and Tobias Baumann, as well as to various other people at CLR.

Footnotes

[1] Wild guess: 75%.

[2] Strategic equivalence could be formalized in various different ways (eg, also removing some weakly dominated strategies). What ultimately matters is that the agents need to treat them as equivalent.

[3] In accordance with [OC], we will use “Pareto-improvement” to include outcomes that are equally good.

[4] In practice, AI representatives could make this agreement directly between themselves. This variant makes a lot of sense, but we avoid it because it seems too schizophrenic to generate useful intuitions.

[5] The paper [OC] is not unaware of the need for this assumption. We chose to highlight it because we believe it might be a crux for whether SPI works or not. This is a potential disagreement between this text and [OC].

[6] If you think the bandits would want to take everything, imagine that 50% of the time the caravan manages to avoid them.

[7] The interpretation is that the players pick actions as if they were playing the original game, but then end up replacing “Demand” by “Demand unarmed” and “Give in” by “Give in always”. For further details, see Caspar’s SPI paper.

[8] Note that a non-naive implementation will only be afraid of water-guns if it signs the SPI contract with the person holding them. In particular, no, the agent won’t develop a horrible fear of children near swimming pools.

[9] As far as the payoffs go, this is a Game of Chicken. However, the traditional formulation of Chicken gives the intuition that coordination and communication are not the point.

[10] We could program all robots of the same type to act in a coordinated manner even if they are used by principals with different utilities. However, coordinating with a completely different brand of AI might be unlikely. Finally, what if the representative R1 is one of a kind and the uncertainty over payoffs is only present because the other representative R2 has a belief over the goals of principal P1 (and this belief is completely detached from reality, but nevertheless common knowledge)? In such a setting, it would be undesirable for R1 to try “coordinating” with its hypothetical alternative selves who serve the hypothetical alternative P1s.

23 comments

comment by Richard_Ngo (ricraz) · 2021-09-03T13:42:46.129Z · LW(p) · GW(p)

Interesting report :) One quibble:

For one, our AIs can only use “things like SPI” if we actually formalize the approach

I don't see why this is the case. If it's possible for humans to start using things like SPI without a formalisation, why couldn't AIs too? (I agree it's more likely that we can get them to do so if we formalise it, though.)

Replies from: VojtaKovarik↑ comment by VojtaKovarik · 2021-09-04T20:54:56.440Z · LW(p) · GW(p)

Thanks for pointing this out :-). Indeed, my original formulation is false; I agree with the "more likely to work if we formalise it" formulation.

Replies from: Caspar42↑ comment by Caspar Oesterheld (Caspar42) · 2021-09-07T20:12:56.296Z · LW(p) · GW(p)

Not very important, but: Despite having spent a lot of time on formalizing SPIs, I have some sympathy for a view like the following:

> Yeah, surrogate goals / SPIs are great. But if we want AI to implement them, we should mainly work on solving foundational issues in decision and game theory with an aim toward AI. If we do this, then AI will implement SPIs (or something even better) regardless of how well we understand them. And if we don't solve these issues, then it's hopeless to add SPIs manually. Furthermore, believing that surrogate goals / SPIs work (or, rather, make a big difference for bargaining outcomes) shouldn't change our behavior much (for the reasons discussed in Vojta's post).

On this view, it doesn't help substantially to understand / analyze SPIs formally.

But I think there are sufficiently many gaps in this argument to make the analysis worthwhile. For example, I think it's plausible that the effective use of SPIs hinges on subtle aspects of the design of an agent that we might not think much about if we don't understand SPIs sufficiently well.

↑ comment by Ofer (ofer) · 2021-09-09T18:03:25.033Z · LW(p) · GW(p)

Regarding the following part of the view that you commented on:

But if we want AI to implement them, we should mainly work on solving foundational issues in decision and game theory with an aim toward AI.

Just wanted to add: It may be important to consider potential downside risks of such work. It may be important to be vigilant when working on certain topics in game theory and e.g. make certain binding commitments before investigating certain issues, because otherwise one might lose a commitment race [LW · GW] in logical time. (I think this is a special case of a more general argument made in Multiverse-wide Cooperation via Correlated Decision Making about how it may be important to make certain commitments before discovering certain crucial considerations.)

comment by Caspar Oesterheld (Caspar42) · 2021-09-07T19:47:39.842Z · LW(p) · GW(p)

Great to see more work on surrogate goals/SPIs!

>Personally, the author believes that SPI might “add up to normality” --- that it will be a sort of reformulation of existing (informal) approaches used by humans, with similar benefits and limitations.

I'm a bit confused by this claim. To me it's a bit unclear what you mean by "adding up to normality". (E.g.: Are you claiming that A) humans in current-day strategic interactions shouldn't change their behavior in response to learning about SPIs (because 1) they are already using them or 2) doing things that are somehow equivalent to them)? Or are you claiming that B) they don't fundamentally change game-theoretic analysis (of any scenario/most scenarios)? Or C) are you saying they are irrelevant for AI v. AI interactions? Or D) that the invention of SPIs will not revolutionize human society, make peace in the middle east, ...) Some of the versions seem clearly false to me. (E.g., re C, even if you think that the requirements for the use of SPIs are rarely satisfied in practice, it's still easy to construct simple, somewhat plausible scenarios / assumptions (see our paper) under which SPIs do seem to matter substantially for game-theoretic analysis.) Some just aren't justified at all in your post. (E.g., re A1, you're saying that (like myself) you find this all confusing and hard to say.) And some are probably not contrary to what anyone else believes about surrogate goals / SPIs. (E.g., I don't know anyone who makes particularly broad or grandiose claims about the use of SPIs by humans.)

My other complaint is that in some places you state some claim X in a way that (to me) suggests that you think that Tobi Baumann or Vince and I (or whoever else is talking/writing about surrogate goals/SPIs) have suggested that X is false, when really Tobi, Vince and I are very much aware of X and have (although perhaps to an insufficient extent) stated X. Here are three instances of this (I think these are the only three), the first one being most significant.

The main objection of the post is that while adopting an SPI, the original players must keep a bunch of things (at least approximately) constant(/analogous to the no-SPI counterfactual) even when they have an incentive to change them, and they need to do this credibly (or, rather, make it credible that they aren't making any changes). You argue that this is often unrealistic. Well, my initial reaction was: "Sure, I know these things!" (Relatedly: while I like the bandit v caravan example, this point can also be illustrated with any of the existing examples of SPIs and surrogate goals.) I also don't think the assumption is that unrealistic. It seems that one substantial part of your complaint is that besides instructing the representative/self-modifying, the original player/principal can do other things about the threat (like advocating a ban on real or water guns). I agree that this is important. If in 20 years I instruct an AI to manage my resources, it would be problematic if in the meantime I make tons of decisions (e.g., about how to train my AI systems) differently based on my knowledge that I will use surrogate goals anyway. But it's easy to come up with scenarios where this is not a problem. E.g., when an agent considers immediate self-modification, *all* her future decisions will be guided by the modified utility function. Or when the SPI is applied to some isolated interaction. When all is in the representative's hands, we only need to ensure that the *representative* always acts in the same way it would act in a world where SPIs aren't a thing.

And I don't think it's that difficult to come up with situations in which the latter thing can be comfortably achieved. Here is one scenario. Imagine the two of us play a particular game G with SPI G'. The way in which we play this is that we both send a lawyer to a meeting and then the lawyers play the game in some way. Then we could mutually commit (by contract) to pay our lawyers in proportion to the utilities they obtain in G' (and to not make any additional payments to them). The lawyers at this point may know exactly what's going on (that we don't really care about water guns, and so on) -- but they are still incentivized to play the SPI game G' to the best of their ability. You might even beg your lawyer to never give in (or the like), but the lawyer is incentivized to ignore such pleas. (Obviously, there could still be various complications. If you hire the lawyer only for this specific interaction and you know how aggressive/hawkish different lawyers are (in terms of how they negotiate), you might be inclined to hire a more aggressive one with the SPI. But you might hire the lawyer you usually hire. And in practice I doubt that it'd be easy to figure out how hawkish different lawyers are.)

Overall I'd have appreciated more detailed discussion of when this is realistic (or of why you think it rarely is realistic). I don't remember Tobi's posts very well, but our paper definitely doesn't spend much space on discussing these important questions.

On SPI selection, I think the point from Section 10 of our paper is quite important, especially in the kinds of games that inspired the creation of surrogate goals in the first place. I agree that in some games, the SPI selection problem is no easier than the equilibrium selection problem in the base game. But there are games where it does fundamentally change things because *any* SPI that cannot further be Pareto-improved upon drastically increases your utility from one of the outcomes.

Re the "Bargaining in SPI" section: For one, the proposal in Section 9 of our paper can still be used to eliminate the zeroes!

Also, the "Bargaining in SPI" and "SPI Selection" sections to me don't really seem like "objections". They are limitations. (In a similar way as "the small pox vaccine doesn't cure cancer" is useful info but not an objection to the small pox vaccine.)

Replies from: VojtaKovarik, VojtaKovarik↑ comment by VojtaKovarik · 2021-09-08T19:50:15.984Z · LW(p) · GW(p)

My other complaint is that in some places you state some claim X in a way that (to me) suggests that you think that Tobi Baumann or Vince and I (or whoever else is talking/writing about surrogate goals/SPIs) have suggested that X is false, when really Tobi, Vince and I are very much aware of X and have (although perhaps to an insufficient extent) stated X.

Thank you for pointing that out. In all these cases, I actually know that you "stated X", so this is not an impression I wanted to create. I added a note at the beginning of the document to hopefully clarify this.

↑ comment by VojtaKovarik · 2021-09-08T20:06:49.412Z · LW(p) · GW(p)

Personally, the author believes that SPI might “add up to normality” --- that it will be a sort of reformulation of existing (informal) approaches used by humans, with similar benefits and limitations.

I'm a bit confused by this claim. To me it's a bit unclear what you mean by "adding up to normality". (E.g.: Are you claiming that A) humans in current-day strategic interactions shouldn't change their behavior in response to learning about SPIs (because 1) they are already using them or 2) doing things that are somehow equivalent to them)? Or are you claiming that B) they don't fundamentally change game-theoretic analysis (of any scenario/most scenarios)? Or C) are you saying they are irrelevant for AI v. AI interactions? Or D) that the invention of SPIs will not revolutionize human society, make peace in the middle east, ...) Some of the versions seem clearly false to me. (E.g., re C, even if you think that the requirements for the use of SPIs are rarely satisfied in practice, it's still easy to construct simple, somewhat plausible scenarios / assumptions (see our paper) under which SPIs do seem to matter substantially for game-theoretic analysis.) Some just aren't justified at all in your post. (E.g., re A1, you're saying that (like myself) you find this all confusing and hard to say.) And some are probably not contrary to what anyone else believes about surrogate goals / SPIs. (E.g., I don't know anyone who makes particularly broad or grandiose claims about the use of SPIs by humans.)

I definitely don't think (C) and the "any" variant of (B). Less sure about the "most" variant of (B), but I wouldn't bet on that either.

I do believe (D), mostly because I don't think that humans will be able to make the necessary commitments (in the sense mentioned in the thread with Rohin). I am not super sure about (A). My bet is that to the extent that SPI can work for humans, we are already using it (or something equivalent) in most situations. But perhaps some exceptions will work, like the lawyer example? (Although I suspect that our skill at picking hawkish lawyers is stronger than we realize. Or there might be existing incentives where lawyers are being selected for hawkishness, because we are already using them for something-like-SPI? Overall, I guess that the more one-time-only an event is, the higher is the chance that the pre-existing selection pressures will be weak, and (A) might work.)

Overall I'd have appreciated more detailed discussion of when this is realistic (or of why you think it rarely is realistic).

That is a good point. I will try to expand on it, perhaps at least in a comment here once I have time, or so :-).

comment by Rohin Shah (rohinmshah) · 2021-09-04T09:01:09.237Z · LW(p) · GW(p)

It seems like your main objection arises because you view SPI as an agreement between the two players. However, I don't see why (the informal version of) SPI requires you to verify that the other player is also following SPI -- it seems like one player can unilaterally employ SPI, and this is the version that seems most useful.

In the bandits example, it seems like the caravan can unilaterally employ SPI to reduce the badness of the bandit's threat. For example, the caravan can credibly commit that they will treat Nerf guns identically to regular guns, so that (a) any time one of them is shot with a Nerf gun, they will flop over and pretend to be a corpse, until the heist has been resolved, and (b) their probability of resisting against Nerf guns will be the same as the probability of resisting against actual guns. In this case the bandits might as well use Nerf guns (perhaps because they're cheaper, or they prefer not to murder if possible). If the bandits continue to use regular guns, the caravan isn't any worse off, so it is an SPI, despite the fact that we have assumed nothing on the part of the bandits.

It seems like this continues to be desirable for the caravan even in the repeated game setting, or the translucent game setting (indeed the translucent game setting makes it easier to make such a credible commitment).

(Aside: This technique can only work on "threats", i.e. actions that the other player is taking solely because you disvalue them, not because the other player values them. For example, the caravan can't employ SPI to reduce the threat of their goods being taken, because the bandits explicitly want the goods.)

On the topic of why humans don't use SPI, it seems like there are several reasons:

- Humans and human institutions can't easily make credible commitments.

- In the case of repeated games, it only takes one defection to break the SPI (e.g. in my bandits example above, it only takes one caravan that ignores the rule about Nerf guns being treated as regular guns, after which the bandits go back to regular guns), so the equilibrium is unstable. So you really need your commitments to be Very Credible.

- Humans and human institutions are not particularly good at "acting" as though they have different goals.

- In the modern world, credible threats are not that common (or at least public knowledge of them isn't common). We've chosen to remove the ability to make threats, rather than to mitigate their downsides.

↑ comment by Ofer (ofer) · 2021-09-04T12:45:50.376Z · LW(p) · GW(p)

In the bandits example, it seems like the caravan can unilaterally employ SPI to reduce the badness of the bandit's threat. For example, the caravan can credibly commit that they will treat Nerf guns identically to regular guns, so that (a) any time one of them is shot with a Nerf gun, they will flop over and pretend to be a corpse, until the heist has been resolved, and (b) their probability of resisting against Nerf guns will be the same as the probability of resisting against actual guns. In this case the bandits might as well use Nerf guns (perhaps because they're cheaper, or they prefer not to murder if possible). If the bandits continue to use regular guns, the caravan isn't any worse off, so it is an SPI, despite the fact that we have assumed nothing on the part of the bandits.

I agree that such a commitment can be employed unilaterally and can be very useful. Though the caravan should consider that doing so may increase the chance of them being attacked (due to the Nerf guns being cheaper etc.). So perhaps the optimal unilateral commitment is more complicated and involves a condition where the bandits are required to somehow make the Nerf gun attack almost as costly for themselves as a regular attack.

Replies from: rohinmshah, VojtaKovarik↑ comment by Rohin Shah (rohinmshah) · 2021-09-05T06:49:32.969Z · LW(p) · GW(p)

Yeah, this seems right.

I'll note though that you may want to make it at least somewhat easier to make the new threat, so that the other player has an incentive to use the new threat rather than the old threat, in cases where they would have used the old threat (rather than being indifferent between the two).

This does mean it is no longer a Pareto improvement, but it still seems like this sort of unilateral commitment can significantly help in expectation.

↑ comment by VojtaKovarik · 2021-09-04T21:46:25.689Z · LW(p) · GW(p)

It seems like your main objection arises because you view SPI as an agreement between the two players.

I would say that my main objection is that if you know that you will encounter SPI in situation X, you have an incentive to alter the policy that you will be using in X. Which might cause other agents to behave differently, possibly in ways that lead to the threat being carried out (which is precisely the thing that SPI aimed to avoid).

In the bandit case, suppose the caravan credibly commits to treating nerf guns identically to regular guns. And suppose this incentivizes the bandits to avoid regular guns. Then you are incentivized to self-modify to start resisting more. (EG, if you both use CDT and the "logical time" is "self modify?" --> "credibly commit?" --> "use nerf?" .) However, if the bandits realize this --- i.e., if the "logical time" is "use nerf?" --> "self modify?" --> "credibly commit?" --- then the bandits will want to not use nerf guns, forcing you to not self-modify. And if you each think that you are "logically before" the other party, you will make incompatible commitments (use regular guns & self-modify to resist) and people get shot with regular guns.

So, I agree that credible unilateral commitments can be useful and they can lead to guaranteed Pareto improvements. It's just that I don't think it addresses my main objection against the proposal.

So perhaps the optimal unilateral commitment is more complicated and involves a condition where the bandits are required to somehow make the Nerf gun attack almost as costly for themselves as a regular attack.

Yup, I fully agree.

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-09-05T06:47:04.634Z · LW(p) · GW(p)

Then you are incentivized to self-modify to start resisting more.

The whole point is to commit not to do this (and then actually not do it)!

It seems like you are treating "me" (the caravan) as unable to make commitments and always taking local improvements; I don't know why you would assume this. Yes, there are some AI designs which would self-modify to resist more (e.g. CDT with a certain logical time); seems like you should conclude those AI designs are bad. If you were going to use SPI you'd build AIs that don't do this self-modification once they've decided to use SPI.

Do you also have this objection to one-boxing in Newcomb's problem? (Since once the boxes are filled you have an incentive to self-modify into a two-boxer?)

Replies from: VojtaKovarik↑ comment by VojtaKovarik · 2021-09-05T17:08:06.290Z · LW(p) · GW(p)

I think I agree with your claims about committing, AI designs, and self-modifying into a two-boxer being stupid. But I think we are using a different framing, or there is some misunderstanding about what my claim is. Let me try to rephrase it:

(1) I am not claiming that SPI will never work as intended (ie, get adopted, don't change players' strategies, don't change players' "meta strategies"). Rather, I am saying it will work in some settings and fail to work in others.

(2) Whether SPI works-as-intended depends on the larger context it appears in. (Some examples of settings are described in the "Embedding in a Larger Setting [AF · GW]" section.) Importantly, this is much more about which real-world setting you apply SPI to than about the prediction being too sensitive to how you formalize things.

(3) Because of (2), I think this is a really tricky topic to talk about informally. I think it might be best to separately ask (a) Given some specific formalization of the meta-setting, what will the introduction of SPI do? and (b) Is it formalizing a reasonable real-world situation (and is the formalization appropriate)?

(4) An IMO important real-world situation is when you -- a human or an institution -- employ AI (or multiple AIs) which repeatedly interact on your behalf, in a world where a bunch of other humans or institutions are doing the same. The details really matter here but I personally expect this to behave similarly to the "Evolutionary dynamics" example described in the report. Informally speaking, once SPI is getting widely adopted, you will have incentives to modify your AIs to be more aggressive / lean towards using AIs that are more aggressive. And even if you resist those incentives, you/your institution will get outcompeted by those who use more aggressive AIs. This will result in SPI not working as intended.

I originally thought the section "Illustrating our Main Objection: Unrealistic Framing [AF · GW]" should suffice to explain all this, but apparently it isn't as clear as I thought it was. Nevertheless, it might perhaps be helpful to read it with this example in mind?

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-09-06T07:56:29.060Z · LW(p) · GW(p)

I agree with (1) and (2), in the same way that I would agree that "one boxing will work in some settings and fail to work in others" and "whether you should one box depends on the larger context it appears in". I'd find it weird to call this an "objection" to one boxing though.

I don't really agree with the emphasis on formalization in (3), but I agree it makes sense to consider specific real-world situations.

(4) is helpful, I wasn't thinking of this situation. (I was thinking about cases in which you have a single agent AGI with a DSA that is tasked with pursuing humanity's values, which then may have to acausally coordinate with other civilizations in the multiverse, since that's the situation in which I've seen surrogate goals proposed.)

I think a weird aspect of the situation in your "evolutionary dynamics" scenario is that the bandits cannot distinguish between caravans that use SPI and ones that don't. I agree that if this is the case then you will often not see an advantage from SPI, because the bandits will need to take a strategy that is robust to whether or not the caravan is using SPI (and this is why you get outcompeted by those who use more aggressive AIs). However, I think that in the situation you describe in (4) it is totally possible for you to build a reputation as "that one institution (caravan) that uses SPI", allowing potential "bandits" to threaten you with "Nerf guns", while threatening other institutions with "real guns". In that case, the bandits' best response is to use Nerf guns against you and real guns against other institutions, and you will outcompete the other institutions. (Assuming the bandits still threaten everyone at a close-to-equal rate. You probably don't want to make it too easy for them to threaten you.)

Overall I think if your point is "applying SPI in real-world scenarios is tricky" I am in full agreement, but I wouldn't call this an "objection".

Replies from: VojtaKovarik↑ comment by VojtaKovarik · 2021-09-06T18:39:14.825Z · LW(p) · GW(p)

I agree with (1) and (2), in the same way that I would agree that "one boxing will work in some settings and fail to work in others" and "whether you should one box depends on the larger context it appears in". I'd find it weird to call this an "objection" to one boxing though.

I agree that (1)+(2) isn't significant enough to qualify as "an objection". I think that (3)+(4)+(my interpretation of it? or something?) further make me believe something like (2') below. And that seems like an objection to me.

(2') Whether or not it works-as-intended depends on the larger setting and there are many settings -- more than you might initially think -- where SPI will not work-as-intended.

The reason I think (4) is a big deal is that I don't think it relies on you being unable to distinguish the SPI and non-SPI caravans. What exactly do I mean by this? I view the caravans as having two parameters: (A) do they agree to using SPI? and, independently of this, (B) if bandits ambush & threaten them (using SPI or not), do they resist or do they give in? When I talk about incentives to be more aggressive, I mean (B), not (A). That is, in the evolutionary setting, "you" (as the caravan-dispatcher / caravan-parameter-setter) will always want to tell the caravans to use SPI. But if most of the bandits also use SPI, you will want to set the (B) parameter to "always resist".

I would say that (4) relies on the bandits not being able to distinguish whether your caravan uses a different (B)-parameter from the one it would use in a world where nobody invented SPI. But this assumption seems pretty realistic? (At least if humans are involved. I agree this might be less of an issue in the AGI-with-DSA scenario.)

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-09-06T19:26:47.539Z · LW(p) · GW(p)

But if most of the bandits also use SPI, you will want to set the (B) parameter to "always resist".

Can you explain this? I'm currently parsing it as "If most of the bandits would demand unarmed conditional on the caravan having committed to treating demand unarmed as they would have treated demand armed, then you want to make the caravan always choose resist", but that seems false so I suspect I have misunderstood you.

Replies from: VojtaKovarik↑ comment by VojtaKovarik · 2021-09-07T11:31:06.868Z · LW(p) · GW(p)

That is -- I think* -- a correct way to parse it. But I don't think it is false... uhm, that makes me genuinely confused. Let me try to re-rephrase, see if it uncovers the crux :-).

You are in a world where most (1-Ɛ) of the bandits demand unarmed when paired with a caravan committed to [responding to demand unarmed the same as it responds to demand armed] (and they demand armed against caravans without such a commitment). The bandit population (ie, their strategies) either remains the same (for simplicity) or the strategies that led to more profit increase in relative frequency. And you have a committed caravan. If you instruct it to always resist, you get payoff 9(1-Ɛ) - 2Ɛ (using the payoff matrix from "G'; extension of G"). If you instruct it to always give in, you get payoff 4(1-Ɛ) + 3Ɛ. So (whenever Ɛ < 1/2, and in particular when Ɛ is small) it is better to instruct it to always resist.
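The comparison above can be checked with a few lines of arithmetic. The following is only a sketch: the four payoff numbers (9, -2, 4, 3) are copied from the comment, while the function names and the use of exact fractions are assumptions made for illustration.

```python
from fractions import Fraction

# Payoffs for a committed caravan, taken from the comment above.
# eps is the fraction of bandits that still "demand armed".

def payoff_always_resist(eps):
    # 9 against bandits who demand unarmed, -2 against those who demand armed
    return 9 * (1 - eps) - 2 * eps

def payoff_always_give_in(eps):
    # 4 against bandits who demand unarmed, 3 against those who demand armed
    return 4 * (1 - eps) + 3 * eps

def resisting_is_better(eps):
    # 9 - 11*eps > 4 - eps  simplifies to  eps < 1/2
    return payoff_always_resist(eps) > payoff_always_give_in(eps)

if __name__ == "__main__":
    for eps in (Fraction(1, 10), Fraction(1, 2), Fraction(3, 5)):
        print(eps, payoff_always_resist(eps), payoff_always_give_in(eps),
              resisting_is_better(eps))
```

Under these numbers the two instructions break even at Ɛ = 1/2, so "always resist" wins exactly when fewer than half of the bandits still demand armed.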

*The only issue that comes to mind is my [responding to demand unarmed the same as it responds to demand armed] vs your [treating demand unarmed as they would have treated demand armed]? If you think there is a difference between the two, then I endorse the former and I am confused about what the latter would mean.

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-09-07T23:01:46.817Z · LW(p) · GW(p)

Maybe the difference is between "respond" and "treat".

It seems like you are saying that the caravan commits to "[responding to demand unarmed the same as it responds to demand armed]" but doesn't commit to "[and I will choose my probability of resisting based on the equilibrium value in the world where you always demand armed if you demand at all]". In contrast, I include that as part of the commitment.

When I say "[treating demand unarmed the same as it treats demand armed]" I mean "you first determine the correct policy to follow if there was only demand armed, then you follow that policy even if the bandits demand unarmed"; you cannot then later "instruct it to always resist" under this formulation (otherwise you have changed the policy and are not using SPI-as-I'm-thinking-of-it).
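One way to picture the difference between the two kinds of commitment is the sketch below. It is purely illustrative: the class names, the single "probability of resisting" parameter, and the value 0.3 are hypothetical and do not come from the post or the paper.

```python
from dataclasses import dataclass

@dataclass
class FirstTypeCommitment:
    """Responds to 'demand unarmed' the same as to 'demand armed',
    but the principal can still re-tune the response later."""
    p_resist: float  # mutable: may be changed after SPI is adopted

    def resist_probability(self, demand: str) -> float:
        if demand not in ("armed", "unarmed"):
            raise ValueError(f"unknown demand: {demand}")
        return self.p_resist  # same answer for both kinds of demand

@dataclass(frozen=True)
class SecondTypeCommitment:
    """Additionally fixes the response at the value chosen for the
    counterfactual game in which only 'demand armed' exists."""
    p_resist_no_spi: float  # frozen: cannot be re-tuned afterwards

    def resist_probability(self, demand: str) -> float:
        if demand not in ("armed", "unarmed"):
            raise ValueError(f"unknown demand: {demand}")
        return self.p_resist_no_spi

if __name__ == "__main__":
    first = FirstTypeCommitment(p_resist=0.3)   # 0.3 is a made-up value
    first.p_resist = 1.0                        # "always resist" is still possible
    second = SecondTypeCommitment(p_resist_no_spi=0.3)
    print(first.resist_probability("unarmed"), second.resist_probability("unarmed"))
```

In this toy picture, both objects answer identically to armed and unarmed demands, but only the second rules out a later switch to "always resist". How to make the frozen value credible in practice is exactly the open question discussed in this thread.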

I think the bandits should only accept commitments of the second type as a reason to demand unarmed, and not accept commitments of the first type, precisely because with commitments of the first type the bandits will be worse off if they demand unarmed than if they had demanded armed. That's why I'm primarily thinking of the second type.

Replies from: VojtaKovarik↑ comment by VojtaKovarik · 2021-09-08T16:53:28.513Z · LW(p) · GW(p)

Perfect, that is indeed the difference. I agree with all of what you write here.

In this light, the reason for my objection is that I understand how we can make a commitment of the first type, but I have no clue how to make a commitment of the second type. (In our specific example, once demand unarmed is an option -- once SPI is in use -- the counterfactual world where there is only demand armed just seems so different. Wouldn't history need to go very differently? Perhaps it wouldn't even be clear what "you" is in that world?)

But I agree that with SDA-AGIs, the second type of commitment becomes more realistic. (Although, the potential line of thinking mentioned by Caspar applies here: Perhaps those AGIs will come up with SPI-or-something on their own, so there is less value in thinking about this type of SPI now.)

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-09-09T05:54:41.417Z · LW(p) · GW(p)

Yeah, I agree with all of that.

↑ comment by Xodarap · 2022-08-29T18:48:37.243Z · LW(p) · GW(p)

I think humans actually do use SPI pretty frequently, if I understand correctly. Some examples:

- Pre-committing to resolving disputes through arbitration instead of the normal judiciary process. In theory at least, this results in an isomorphic "game", but with lower legal costs, thereby constituting a Pareto improvement.

- Ritualized aggression: Directly analogous to the Nerf gun example. E.g. a bear will "commit" to giving up its territory to another bear who can roar louder, without the need of them actually fighting, which would be costly for both parties.

- This example is maybe especially interesting because it implies that SPIs are (easily?) discoverable by "blind optimization" processes like evolution, and don't need some sort of intelligence or social context.

- And it also gives an example of your point about not needing to know that the other party has committed to the SPI: bears presumably don't have the ability to credibly commit, but the SPI still (usually) goes through.

↑ comment by VojtaKovarik · 2021-09-04T22:04:02.718Z · LW(p) · GW(p)

Humans and human institutions can't easily make credible commitments.

That seems right. (Perhaps with the exception of legal contracts, unless one of the parties is powerful enough to make the contract difficult to enforce.) And even when individual people in an institution have powerful commitment mechanisms, this is not the same as the institution being able to credibly commit. For example, suppose you have a head of state who threatens suicidal war unless X happens, and they are stubborn enough to follow up on it. Then if X happens, you might get a coup instead, thus avoiding the war.

comment by VojtaKovarik · 2021-09-02T16:36:55.144Z · LW(p) · GW(p)

the tl;dr version of the full report:

The surrogate goals (SG) idea proposes that an agent might adopt a new seemingly meaningless goal (such as preventing the existence of a sphere of platinum with a diameter of exactly 42.82cm or really hating being shot by a water gun) to prevent the realization of threats against some goals they actually value (such as staying alive) [TB1, TB2]. If they can commit to treating threats to this goal as seriously as threats to their actual goals, the hope is that the new goal gets threatened instead. In particular, the purpose of this proposal is not to become more resistant to threats. Rather, we hope that if the agent and the threatener misjudge each other (underestimating the commitment to ignore/carry out the threat), the outcome (Ignore threat, Carry out threat) will be replaced by something harmless.

Safe Pareto improvements (SPIs) [OC] is a formalization and a generalization of this idea. In the straightforward interpretation, the approach applies to a situation where an agent delegates a problem to their representative --- but we could also imagine “delegating” to a future self-modified version of oneself. The idea is to give instructions like “if other agents you encounter also have this same instruction, replace ‘real’ conflicts with them with ‘mock’ conflicts, in which you all act like you would in a real conflict, but bad outcomes are replaced by less harmful variants”. Some potential examples would be fighting (should a fight arise) to the first blood instead of to the death or threatening diplomatic conflict instead of a military one. In the SG setting, SPI could correspond to a joint commitment to only use water-gun threats while behaving as if the water gun was real.

First, I have one “methodological” observation: If we are to understand whether these approaches “work”, it is not sufficient to look just at the threat situation itself. Instead, we need to embed it in a larger context. (Is the interaction one-time-only, or will there be repetitions? Did the agents have a chance to update on the fact that SG/SPI are being used? What do the agents know about each other?) In the report, I show that different settings can lead to (very) different outcomes.

Incidentally, I think this observation applies to most technical research. We should specify the larger context, at least informally, such that we can answer questions like “but will it help?” and “wouldn’t this other approach be even better?”.

Second, SG and SPI share one limitation that seems hard to overcome. Both hope to make agents treat conflict as seriously as before while in fact making conflict outcomes less costly. However, if SG/SPI starts “being a thing”, agents will have incentives to treat conflict as less costly. (E.g., ignoring threats they would have taken seriously before, or making threats they wouldn’t have dared to make otherwise.) The report gives an example of an “evolutionary” setting that illustrates this well, imo.

Finally: (1) I believe that the hypothesis that “we ‘only’ need to work out the details of SG/SPI, and then we will avoid the realization of most threats” is false. (2) Instead, I would expect that the approach “adds up to normality” --- that with SG/SPI, we can do mostly the same things that we could do with “just” the ability to make contracts and being somewhat transparent to each other. (3) However, I still think that studying the approach is useful --- it is a way of formalizing things, it will work in some settings, and even when it doesn’t, we might get ideas for which other things could work instead.