No Really, Attention is ALL You Need - Attention can do feedforward networks

post by Robert_AIZI · 2023-01-31T18:48:20.801Z · LW · GW · 7 commentsThis is a link post for https://aizi.substack.com/p/no-really-attention-is-all-you-need?sd=pf

Contents

Limitations:

Notation

Construction Overview

Entry-wise SiLU via attention heads

Vector-Wise Linear Transformations via Attention Heads

Tweaking the Original Attention Heads to Preserve Their Behavior

Demonstration Code

Conclusion

None

7 comments

[Epistemic status: Mathematically proven, and I have running code that implements it.]

Overview: A transformer consists of two alternating sublayers: attention heads and feedforward networks (FFNs, also called MLPs). In this post I’ll show how you can implement the latter using the former, and how you can convert an existing transformer with FFNs into an attention-only transformer.

My hope is that such a conversion technique can augment mechanistic interpretability tools such as the ones described in A Mathematical Framework for Transformer Circuits, by reducing the task of interpretability from “interpret attention and FFNs” to just “interpret attention”. That publication specifically points out that “more complete understanding [of Transformers] will require progress on MLP layers”, which I hope this technique can supply.

Limitations:

- Attention heads are able to naturally produce two activation functions, SiLU and ReLU, but I haven’t found an easy way to produce GeLU, which is what GPT uses.

- SiLU is qualitatively similar to GeLU, especially after rescaling. Of course one can approximate other activation functions by adding more layers.

- Converting to attention-only introduces small error terms in the calculations, but such terms can be made arbitrarily small (potentially small enough that a machine rounds it to 0).

- Attention-only transformers are likely to be worse for capabilities than normal transformers. Since my goal is safety rather than capabilities, this is a feature and not a bug for me.

- Appealing to authority, the people currently making transformers optimized for capabilities use FFNs in addition to attention heads. If attention-only transformers were better for capabilities, they’d presumably already be in use.

- Implementing an FFN via attention this way is computationally slower/more expensive than a normal FFN.

- Converting to an attention-only transformer increases by ~5x. Most of the additional dimensions are used to store what was previously the hidden layer of the FFN, and a few more are used for 1-hot positional encoding.

- Converting to an attention-only transformer replaces each each FFN sublayer with 3 attention layers, so a whole layer goes from 1 attention+1 FFN to 4 attention.

- Some matrices used by the new attention heads are higher rank than the normal head dimensions allow. This would require a special case in the code, slow down computation.

- The structures used for attention-only transformers are probably not stable under training with any form of regularization.

- This result may already be known, but is new to me (and therefore might be new to some of you).

Notation

Fix a transformer T (such as GPT-3) which uses attention and feedforward networks. Write for the internal dimension of the model, for the number of vectors in the context, and X for the “residual stream”, the N-by-D matrix storing the internal state of the model during a forward pass.

We will assume that the feedforward networks in T consists of an MLP with one hidden layer of width , using an activation function α(x)=SiLU(x)=xσ(x)[1]. To simplify notation, we will assume that bias terms are built into the weight matrices and , which are respectively of sizes D-by-4D and 4D-by-D, so that the output of the feedforward network is , where α is applied to the matrix entry-wise.

We’ll follow this notation for attention heads, so that an attention head is characterized by its query-key matrix and its output-value matrix , each of size D-by-D[2]. To simplify notation, we will assume that the “” step of attention has been folded into the Q matrix. Then the output of the attention head is , where the softmax operation is applied row-wise.

We assume that both the feedforward network and attention heads make use of skip connections, so that their output is added to the original residual stream. However, we ignore layer normalization.

Throughout, we will rely on a large number Ω whose purpose is to dwarf other numbers in the softmax operation of an attention head. In particular, we assume Ω has two properties:

- , to within an acceptable error, when there are up to 0s. That is, with an error tolerance of ε>0, one needs .

- for each x that appears in an attention entry in the normal operation of T.

In my code, is sufficient for a tolerance of .

Construction Overview

We will convert the attention-and-feedforward model T into an attention-only model T’ by augmenting the residual stream, replacing the feedforward sublayers with attention sublayers, and tweaking the original attention heads to maintain their original behavior on the augmented residual stream.

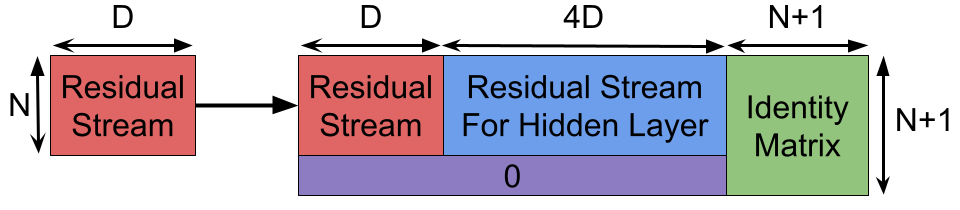

We augment the residual stream of the model by:

- N’ = N+1. The new context vector will act as a “bias context vector” which we use to implement the entrywise SiLU function.

- D’=D+4D+(N+1). The 4D additional dimensions will be used to store the intermediate calculations of the FFN network. Then N+1=N’ additional dimensions act as 1-hot positional encodings.

In T, each layer consists of two sublayers:

- Multi-headed attention.

- Feedforward network.

In T’, these are replaced by:

- Multi-headed attention. This acts identically to the original transformer, though the Q and V matrices are slightly tweaked to avoid issues arising from introducing the “bias context” vector.

- Linear transformation via attention heads. This transformation emulates by reading from the D-width residual stream and writing to the 4D-width residual stream corresponding to the hidden layer. The Q matrix makes each vector only attends to itself, and the V matrix contains a copy of .

- Entry-wise SiLU to hidden layers via attention heads. Using one attention head per dimension, we apply the activation function to the “hidden dimensions” computed in the previous step, resulting in . The Q matrix makes each vector attend only to itself and the final “bias context” vector, split in proportion to σ(x). The V matrix makes a vector write the negative of its entry and the bias context vector write 0, resulting in an entrywise SiLU.

- Linear transformation via attention heads. This step emulates multiplying by and adding it back to the D-width residual stream. This step also zeroes out the 4D-width part of the residual stream corresponding to the hidden layer, readying them to be written to by the next layer. The Q matrix makes each vector only attends to itself, and the V matrix contains a copy of .

The following sections will discuss these steps in the order (3), (2+4), (1), which is descending order of novelty to me.

Entry-wise SiLU via attention heads

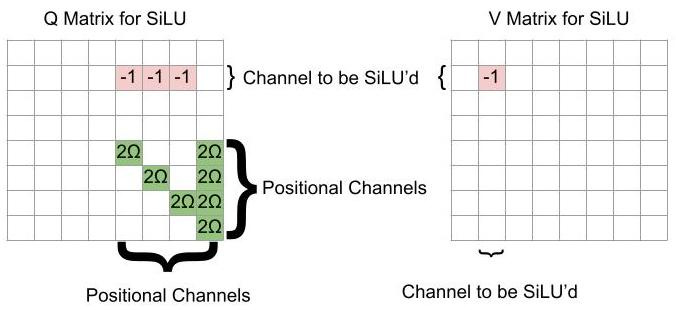

One can apply SiLU to the residual stream with one attention head per dimension being SiLU’d. One uses the following Q and V matrices:

With this Q matrix, the th row of will be of the form , where is the dimension being SiLU’d, and the s are in the th entry and the final entry. Then, after applying the softmax to this row, the row becomes (to within error). That is, every vector is attended by only itself and the bias vector.

By our choice of , the influence of a vector is the negative of its entry in the th position. Thus the th entry of is , so after adding to the residual stream, one gets that the th entry of is , as desired.

Vector-Wise Linear Transformations via Attention Heads

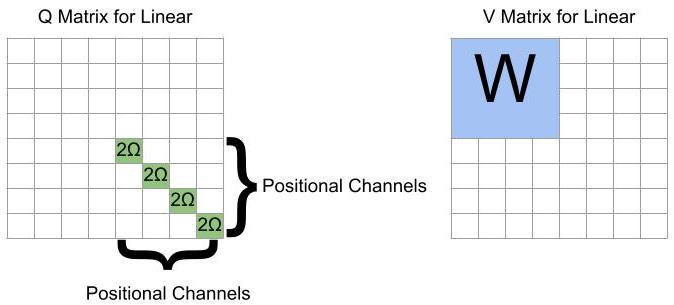

By putting such large weights in the self-positional-encoding matrices, a vector attends entirely to itself. Thus the output of the attention head is entirely the result of the V matrix, which can contain the arbitrary linear transformation of the feedforward network. Additional comments:

- If one is trying to write from the “normal dimensions” to the “hidden dimensions”, or vice-versa, the W matrix must be placed as a block off the diagonal.

- If the weight matrix W is too high-rank, it can be split over multiple attention heads with equal Q matrices.

- One can be cute and use the 1s in the position encoding dimensions for the bias terms.

- We also use this step to “clear the memory” by zeroing out the 4D additional dimensions used to save the hidden layer of the FFN.

Tweaking the Original Attention Heads to Preserve Their Behavior

The addition of the new vector used for the activation function could potentially change the attention patterns of the preexisting attention heads, which would change the behavior of the network. However, we can slightly tweak the attention matrices in a normal attention head to prevent this issue:

By augmenting the attention matrix in this way, the bias vector strongly avoids attending to the non-bias vectors, and strongly attends to itself (preventing non-bias vectors from attending to the bias vector).

Demonstration Code

I’ve put Python code implementing this technique on github. Each of the three components (SiLU, linear transformations, normal attention) are implemented both directly and with attention heads. They are tested on random matrices with and , and the largest error entries in each matrix are on the order of . I have not tested how such errors propagate through multiple layers.

Conclusion

- Attention heads can implement the feedforward network components of a transformer, up to small errors.

- It is possible to convert an existing transformer to such an attention-only network as long as the original network uses SiLU or ReLU as its activation function. This makes the transformer larger and slower, but not astronomically so.

- Such conversion could assist in mechanistic interpretability efforts which are able to operate on attention heads but not FFNs, such as this work.

- Several hurdles would need to be overcome before this technique could lead to capabilities gains.

- There is significant wasted computation in the attention heads emulating the FFN network.

- Many of the attention patterns we use are higher rank than are allowable by the fact that Q is learned as a low-rank matrix factorization (). In GPT-3 the largest learnable rank is , but both the SiLU heads and the linear transformation heads use attention patterns of rank .

- regularization during training would likely make the attention matrices used in SiLU heads and linear heads unstable by reducing the entries.

- Dropout regularization during training would also be disruptive, since the attention patterns rely on strong signals from specific entries, with no redundancy.

- ^

One can also approximate ReLU with this technique, since SiLU(kx)/k → ReLU(x) as k→infinity. AIAYN uses ReLU, but GPT-3 uses GeLU.

- ^

For implementation purposes, these matrices are usually learned as low-rank factorizations, with and a similar expression for . However, it’s easier to construct the desired properties if we treat them in their full form. We will ignore rank restrictions except in the concluding comments.

7 comments

Comments sorted by top scores.

comment by Neel Nanda (neel-nanda-1) · 2023-02-04T19:10:41.268Z · LW(p) · GW(p)

Cute construction! To check, am I correct that you're adding an attention head per neuron? To me that makes this prohibitive enough to not actually be useful for real models - eg, in GPT-2 Small that'd take you from 12 heads per layer to about 3,000 per layer.

Replies from: Robert_AIZI↑ comment by Robert_AIZI · 2023-02-05T12:29:32.432Z · LW(p) · GW(p)

That's right, the activation function sublayer needs 1 attention head per neuron. The other sublayers can get away with fewer - the attention sublayer needs the usual amount, and the linear transformation sublayer just needs enough to spread the rank of the weight matrix across the V matrices of the attention head. I'm most familiar with the size hyperparameters of GPT-3 (Table 2.1), but in full-size GPT-3, for each sublayer:

- heads for the attention sublayer

- heads for the weight matrix calculating into the hidden layer

- heads for the activation function

- heads for the weight matrix calculating out of the hidden layer

comment by astralbrane · 2023-07-16T19:06:11.583Z · LW(p) · GW(p)

Aren't attention networks and MLPs both subsets of feedforward networks already? What you really mean is "Attention can implement fully-connected MLPs"?

Replies from: Robert_AIZI↑ comment by Robert_AIZI · 2023-07-17T12:07:56.060Z · LW(p) · GW(p)

Calling fully-connected MLPs "feedforward networks" is common (e.g. in the original transformer paper https://arxiv.org/pdf/1706.03762.pdf), so I tried to use that language here for the sake of the transformer-background people. But yes, I think "Attention can implement fully-connected MLPs" is a correct and arguably more accurate way to describe this.

Replies from: gwern↑ comment by gwern · 2023-07-17T14:26:22.185Z · LW(p) · GW(p)

Given the general contempt that MLPs are held in at present, and the extent to which people seem to regard self-attention as magic pixie dust which cannot be replicated by alternatives like CNNs or MLPs and which makes Transformers qualitatively different from anything before & solely responsible for the past ~4 years of DL progress (earlier discussion defending MLP prospects [LW(p) · GW(p)]), it might be more useful to emphasize the other direction: if you can convert any self-attention to an equivalent fully-connected MLP, then that can be described as "there is a fully-connected MLP that implements your self-attention". (Incidentally, maybe I missed this in the writeup, but this post is only providing an injective self-attention → MLP construction, right? Not the other way around, so converting an arbitrary MLP layer to a self-attention layer is presumably doable - at least with enough parameters - but remains unknown.)

Unfortunate that the construction is so inefficient: 12 heads → 3,000 heads or 250x inflation is big enough to be practically irrelevant (maybe theoretically too). I wonder if you can tighten that to something much more relevant? My intuition is that MLPs are such powerful function approximators that you should be able to convert between much more similar-sized nets (and maybe smaller MLPs).

In either direction - perhaps you could just directly empirically approximate an exchange rate by training MLPs of various sizes to distill a self-attention layer? Given the sloppiness in attention patterns, it wouldn't necessarily have to be all that accurate. And you could do this for each layer to de-attend a NN, which ought to have nice performance characteristics in addition to being a PoC.

(My prediction would be that the parameter-optimal MLP equivalent would have a width vs depth scaling law such that increasing large Transformer heads would be approximated by increasingly skinny deep MLP stacks, to allow switching/mixing by depth. And that you could probably come up with an initialization for the MLPs which makes them start off with self-attention-like activity, like you can come up with Transformer initializations that mimic CNN inductive priors. Then you could just drop the distillation entirely and create a MLPized Transformer from scratch.)

Replies from: Robert_AIZI↑ comment by Robert_AIZI · 2023-07-17T21:46:38.100Z · LW(p) · GW(p)

Incidentally, maybe I missed this in the writeup, but this post is only providing an injective self-attention → MLP construction, right?

Either I'm misunderstanding you or you're misunderstanding me, but I think I've shown the opposite: any MLP layer can be converted to a self-attention layer. (Well, in this post I actually show how to convert the MLP layer to 3 self-attention layers, but in my follow-up [LW · GW] I show how you can get it in one.) I don't claim that you can do a self-attention → MLP construction.

Converting an arbitrary MLP layer to a self-attention layer is presumably doable - at least with enough parameters - but remains unknown

This is what I think I show here! Let the unknown be known!

Unfortunate that the construction is so inefficient: 12 heads → 3,000 heads or 250x inflation is big enough to be practically irrelevant (maybe theoretically too).

Yes, this is definitely at an "interesting trivia" level of efficiency. Unfortunately, the construction is built around using 1 attention head per hidden dimension, so I don't see any obvious way to improve the number of heads. The only angle I have for this to be useful at current scale is that Anthropic (paraphrased) said "oh we can do interpretability on attention heads but not MLPs", so the conversion of the later into the former might supplement their techniques.

Replies from: gwern↑ comment by gwern · 2023-07-18T00:20:30.201Z · LW(p) · GW(p)

Yes, you're right. My bad; I was skimming in a hurry before heading out while focused on my own hobbyhorse of 'how to make MLPs beat Transformers?'. Knew I was missing something, so glad I checked. Now that you put it that way, the intuition is a lot clearer, and shrinking it seems a lot harder: one head per hidden dim/neuron is a straightforward construction but also unclear how much you could be guaranteed to shrink it by trying to merge heads...

The empirical approach, in both directions, might be the best bet here, and has the advantage of being the sort of thing that someone junior could get interesting results on quickly with minimal hardware.