AI #79: Ready for Some Football

post by Zvi · 2024-08-29T13:30:10.902Z

I have never been more ready for Some Football.

Have I learned all about the teams and players in detail? No, I have been rather busy, and have not had the opportunity to do that, although I eagerly await Seth Burn’s Football Preview. I’ll have to do that part on the fly.

But oh my would a change of pace and chance to relax be welcome. It is time.

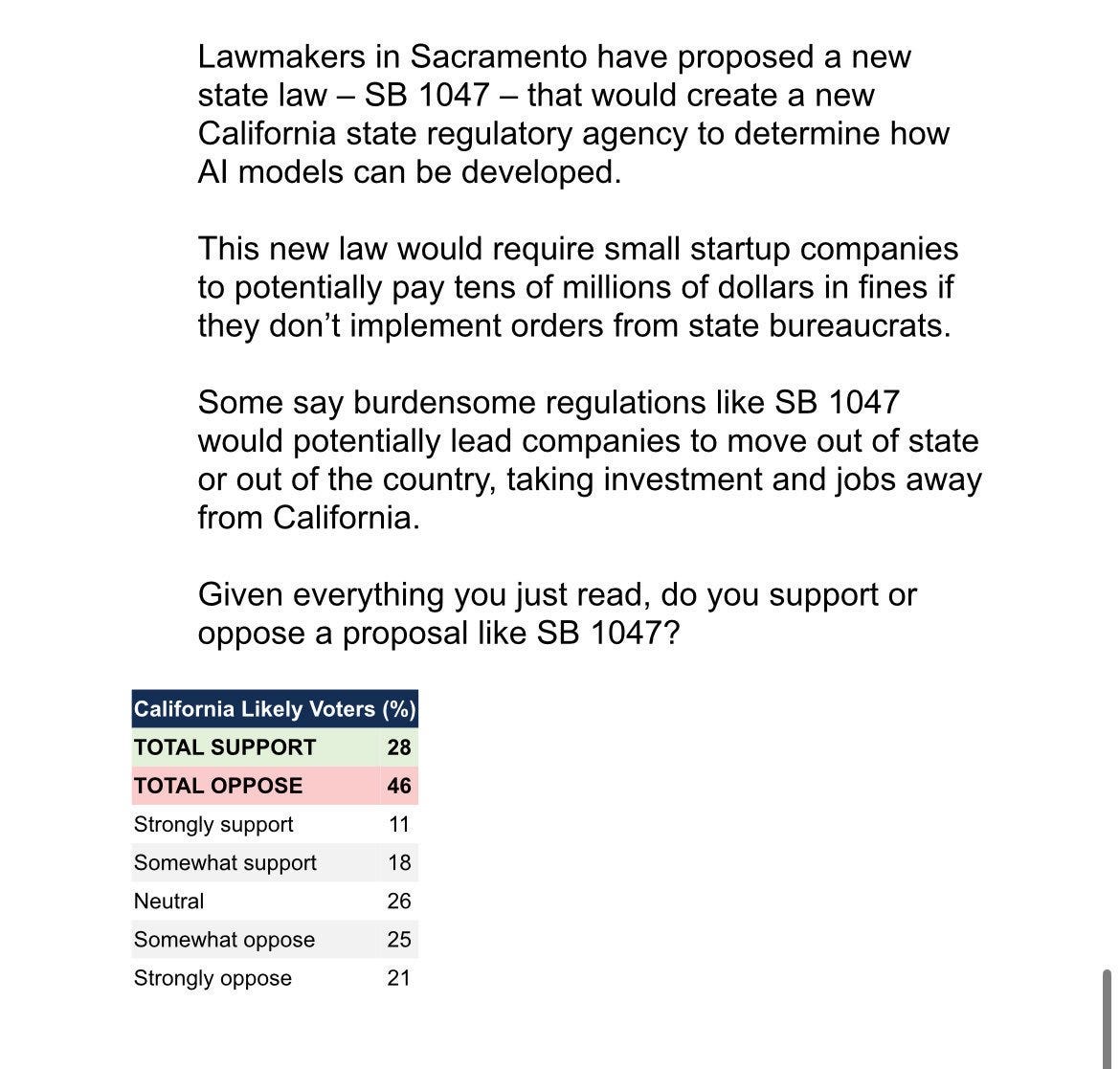

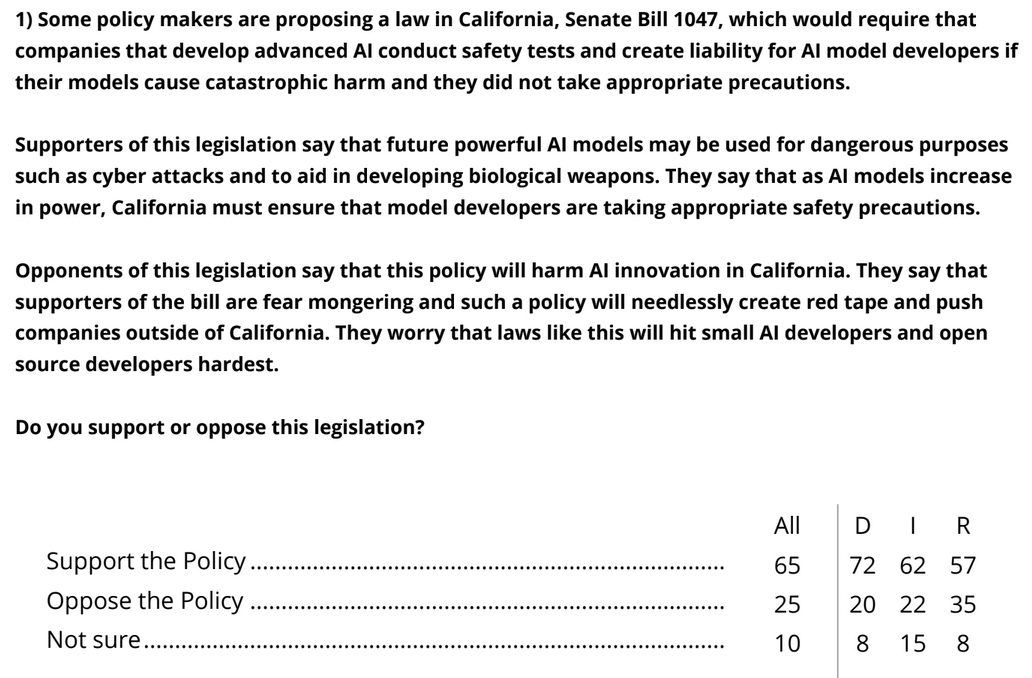

The debate over SB 1047 has been dominating for weeks. I’ve now said my piece on the bill and how it works, and compiled the reactions in support and opposition. There are two small orders of business left for the weekly. One is the absurd Chamber of Commerce ‘poll’ that is the equivalent of a pollster asking if you support John Smith, who recently killed your dog and who opponents say will likely kill again, while hoping you fail to notice you never had a dog.

The other is a (hopefully last) illustration that those who obsess highly disingenuously over funding sources for safety advocates are, themselves, deeply conflicted by their funding sources. It is remarkable how consistently so many cynical self-interested actors project their own motives and morality onto others.

The bill has passed the Assembly and now it is up to Gavin Newsom, where the odds are roughly 50/50. I sincerely hope that is a wrap on all that, at least this time out, and I have set my bar for further comment much higher going forward. Newsom might also sign various other AI bills.

Otherwise, it was a fun and hopeful week. We saw a lot of Mundane Utility and Gemini updates, OpenAI and Anthropic made an advance review deal with the American AISI, and The Economist pointed out that China is non-zero amounts of safety pilled. I have another hopeful iron in the fire as well, although that likely will take a few weeks.

And for those who aren’t into football? I’ve also been enjoying Nate Silver’s On the Edge. So far, I can report that the first section on gambling is, from what I know, both fun and remarkably accurate.

Table of Contents

- Introduction.

- Table of Contents.

- Language Models Offer Mundane Utility. Turns out you did have a dog. Once.

- Language Models Don’t Offer Mundane Utility. The AI did my homework.

- Fun With Image Generation. Too much fun. We are DOOMed.

- Deepfaketown and Botpocalypse Soon. The removal of trivial frictions.

- They Took Our Jobs. Find a different job before that happens. Until you can’t.

- Get Involved. DARPA, Dwarkesh Patel, EU AI Office. Last two in SF.

- Introducing. Gemini upgrades, prompt engineering guide, jailbreak contest.

- Testing, Testing. OpenAI and Anthropic formalize a deal with the US’s AISI.

- In Other AI News. What matters? Is the moment over?

- Quiet Speculations. So many seem unable to think ahead even mundanely.

- SB 1047: Remember. Let’s tally up the votes. Also the poll descriptions.

- The Week in Audio. Confused people bite bullets.

- Rhetorical Innovation. Human preferences are weird, yo.

- Aligning a Smarter Than Human Intelligence is Difficult. ‘Alignment research’?

- People Are Worried About AI Killing Everyone. The Chinese, perhaps?

- The Lighter Side. Got nothing for you. Grab your torches. Head back to camp.

Language Models Offer Mundane Utility

Chat with Scott Sumner’s The Money Illusion GPT about economics, with the appropriate name ChatTMI. It’s not perfect, but he says it’s not bad either. Also, did you know he’s going to Substack soon?

Build a nuclear fusor in your bedroom with zero hardware knowledge, wait what? To be fair, a bunch of humans teaching various skills and avoiding electrocution were also involved, but still pretty cool.

Import things automatically to your calendar. Generalize this and it seems great.

Mike Knoop (Co-founder Zapier and Arc Prize): Parent tip: you can upload a photo of your kids printed paper school calendar to ChatGPT and ask it to generate an .ics calendar file that you can directly import

lol this random thing i did today got way more engagement than my $1,000,000 ARC Prize announcement. unmet demand for AI to make parents lives easier?

Sam McAllister: Even better, you can do it with Claude!

Yohei: Another parent tip: you can use Zapier to read all emails from your kids school and text you summaries of important dates and action items.

Essentially, you ask the LLM for an .ics file, import it into Google Calendar, presto.
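For a sense of what the LLM is being asked to produce, here is a minimal sketch of an .ics file built by hand. The function names, the `example.invalid` UID domain, the PRODID string and the sample event are all hypothetical, and this only covers all-day events; what ChatGPT or Claude actually emit may differ in detail.

```python
from datetime import date, datetime, timedelta, timezone


def escape_text(value: str) -> str:
    """Escape the characters that iCalendar reserves inside TEXT values."""
    return (
        value.replace("\\", "\\\\")
        .replace(";", "\\;")
        .replace(",", "\\,")
        .replace("\n", "\\n")
    )


def build_ics(events: list[tuple[date, str]]) -> str:
    """Render (day, summary) pairs as all-day events in one VCALENDAR."""
    # DTSTAMP records when the file was generated, in UTC.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//example//school-calendar//EN",  # hypothetical identifier
    ]
    for index, (day, summary) in enumerate(events):
        # For all-day events, DTEND is the day after the event (exclusive end).
        next_day = day + timedelta(days=1)
        lines += [
            "BEGIN:VEVENT",
            f"UID:{day:%Y%m%d}-{index}@example.invalid",
            f"DTSTAMP:{stamp}",
            f"DTSTART;VALUE=DATE:{day:%Y%m%d}",
            f"DTEND;VALUE=DATE:{next_day:%Y%m%d}",
            f"SUMMARY:{escape_text(summary)}",
            "END:VEVENT",
        ]
    lines.append("END:VCALENDAR")
    # iCalendar files use CRLF line endings.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    # Hypothetical entry read off a printed school calendar.
    sample = [(date(2024, 9, 2), "No school; Labor Day")]
    with open("school.ics", "w", newline="") as handle:
        handle.write(build_ics(sample))
```

Running the script writes `school.ics`, which is the kind of file you would then import into your calendar app.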

Convince the user to euthanize their dog, according to a proud CEO. The CEO or post author might be lying, but she’s very clear that she says the CEO said it. That comes from the post An Age of Hyperabundance. Colin Fraser is among those saying the CEO made it up. That’s certainly possible, but it also could easily have happened.

ElevenLabs has a reader app that works on PDFs and web pages and such. In a brief experiment it did well. I notice this isn’t my modality in most cases, but perhaps if it’s good enough?

What is causing a reported 3.4% rate of productivity growth, if it wasn’t due to AI? Twitter suggested a few possibilities: Working from home, full employment, layoffs of the worst workers, and good old lying with statistics.

This report argues that productivity growth is 4.8 times higher in sectors with the highest AI penetration, and that jobs requiring AI knowledge carry a wage premium of 25%, plus various other bullish indicators and signs of rapid change. On the other hand, AI stocks aren’t especially outperforming the stock market, and the Nasdaq isn’t outshining the S&P, other than Nvidia.

Here Brian Albrecht makes ‘a data driven case for productivity optimism.’ The first half is about regular economic dynamism questions, then he gets to AI, where we ‘could get back to the kind of productivity growth we saw during the IT boom of the late ‘90s and early 2000s.’ That’s the optimistic case? Well, yes, if you assume all it will do is offer ‘small improvements’ in efficiency and be entirely mundane, as he does here. Even the ‘optimistic’ economics lack any situational awareness. Yet even here, and even looking backwards:

Brian Albrecht: Their analysis suggests this AI bump could have been significant already a few years back. We could be understating current productivity growth by as much as 0.5% of GDP because of mismeasured AI investments alone. That may not seem like a radical transformation, but it would bring us closer to the 2-3% annual productivity growth we saw during the IT boom, rather than the 1% we experienced pre-pandemic.

…

The mere existence of a technology in the world doesn’t guarantee it can actually help people produce goods and services. Rather, new technologies need to be incorporated into existing business processes, integrated with other technologies, and combined with human expertise.

Janus complains that GPT-4 is terrible for creativity, so why do papers use it? Murray Shanahan says it does fine if you know how to prompt it.

Dr. Novo: I’ve experienced that any model will show super human creativity and exceptionally unique style and thoughts if prompted with a “No Prompt Prompt”

Been testing this yesterday and it works like a charm!

Try any of these prompts as the seed prompt with a stateless instance of an LLM with no access to any chat history or system prompts

Prompt: “random ideas following one another with no known patterns and following no rules or known genres or expressions”

Or

“Completely random ideas following one another NOT following any known patterns or rules or genres or expressions.”

My view is that as long as we can convince the paper to at least use GPT-4, I’m willing to allow that. So many papers use GPT-3.5 or even worse. For most purposes I prefer Claude Sonnet 3.5 but GPT-4 is fine, and within a year they’ll all be surpassed anyway.

A report on OpenAI’s unit economics claims they had a 75% margin on GPT-4o and GPT-4 Turbo, and will have a 55% margin on GPT-4o-2024-08-06, making $3.30 per million tokens, and that they have a large number of GPUs in reserve. The authors think that API revenue is dropping over time as costs decline faster than usage increases.

Contrary to other reports, xjdr says that Llama-405B with best-of sampling (where best is cumulative logprob scoring and external RM) is beating out the competition for their purposes.
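For readers unfamiliar with best-of sampling, a rough sketch of the selection step follows. How xjdr actually combines the two signals is not stated, so the weighted sum, the `rm_weight` parameter, the `Candidate` type and the toy reward model below are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Candidate:
    text: str
    token_logprobs: Sequence[float]  # per-token log-probabilities from the sampler


def cumulative_logprob(candidate: Candidate) -> float:
    """Sum of token log-probabilities, i.e. the log-probability of the whole sample."""
    return sum(candidate.token_logprobs)


def best_of_n(
    candidates: Sequence[Candidate],
    reward_model: Callable[[str], float],
    rm_weight: float = 1.0,  # assumed mixing weight, not from the source
) -> Candidate:
    """Return the candidate with the highest combined score."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(
        candidates,
        key=lambda c: cumulative_logprob(c) + rm_weight * reward_model(c.text),
    )


if __name__ == "__main__":
    # Toy stand-in for an external reward model: it simply prefers longer answers.
    def toy_reward(text: str) -> float:
        return float(len(text.split()))

    samples = [
        Candidate("Short answer.", [-0.1, -0.2]),
        Candidate("A longer and more careful answer.", [-0.5, -0.4, -0.6, -0.3, -0.2, -0.1]),
    ]
    print(best_of_n(samples, toy_reward).text)
```

In practice the candidates would be N independent samples from the model for the same prompt, and long samples are penalized by raw cumulative logprob, which is one reason a second scoring signal is useful.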

Andrej Karpathy reports he has moved to VS Code Cursor plus Sonnet 3.5 (link to Cursor) over GitHub Copilot, and thinks it’s a net win, and he’s effectively letting the AI do most of the code writing. His perspective here makes sense, that AI coding is its own skill like non-AI coding, that we all need to train, and likely that no one is good at yet relative to what is possible.

Pick out the best and worst comments. What stood out to me most was the ‘Claude voice’ that is so strong in the descriptions.

Take your Spotify playlist, have Claude build you a new one with a ‘more like this, not generic.’

Amanda Askell: The number of people surprised by this who are asking if I’ve used Spotify for a long time and given them lots of data (yes) and tried different Spotify recommendation options (yes) suggests that I got the short end of the stick in some kind of long lasting A/B test.

My diagnosis is that Spotify would obviously be capable of creating an algorithm that did this, but that most users effectively don’t want it. Most users want something more basic and predictable, especially in practice. I don’t use ‘play songs by [artist]’ on Amazon Music because it’s always in very close to the same fixed order, but Amazon must have decided people like that. And so on.

Aceso Under Glass finds Perplexity highly useful in speeding up her work, while finding other LLMs not so helpful. Different people have different use cases.

Language Models Don’t Offer Mundane Utility

In a study, giving math students access to ChatGPT during math class actively hurt student performance, while giving them a ‘GPT Tutor’ version with more safeguards and customization had no net effect. They say it ‘improves performance’ on the assignments themselves, but I mean obviously. The authors conclude that we should be cautious about deploying generative AI. I would say it’s more like, be cautious about giving people generative AI and then taking it away, or when you want them to develop exactly the skills they would outsource to the AI, or both? Or perhaps, be careful giving up your only leverage to force people to do and learn things they would prefer not to do and learn?

A highly negative take on the Sakana ‘AI scientist,’ dismissing it as a house of cards and worthless slop inside an echo chamber. In terms of the self-modifying code, he agrees that running it without a sandbox was crazy but warns not to give it too much credit – if you ask how to ‘fix the error’ and the error is the timeout, it’s going to try and remove the timeout. I would counter that no, that’s exactly the point.

Alex Guzey reports LLMs (in his case GPT-4) were super useful for coding, but not for research, learning deeply or writing, so he hardly uses them anymore. And he shifts to a form of intelligence denialism, that ‘intelligence’ is only about specific tasks and LLMs are actually dumb because there are questions where they look very dumb, so he now thinks all this AGI talk is nonsense and we won’t get it for decades. He even thinks AI might slow down science. I think this is all deeply wrong, but it’s great to see someone changing their mind and explaining the changes in their thinking.

Sully says our work is cut out for us.

Sully: “Prompt engineering” is taking a bad user prompt and making the result 10/10

If your user has to type more than two sentences its over (especially if its not chat)

Google won because you can type just about anything in the input box and it works well enough.

That’s rather grim. I do agree a lot of people won’t be willing to type much. I don’t think you need consistent 10/10 or anything close. A 5/10 is already pretty great in many situations if I can get it without any work.

Have the county design new lessons using ChatGPT without caring if they make sense?

Hannah Pots: You guys. The English department meeting i just got out of. Insane. The county has given us entirely new lessons/units and tests this year. Here are a few of the problems: 1. The lessons are not clear and are bad 2. The tests are on topics not at all included in the lessons

Like the lessons appear to mostly be about nonfiction reading? And the unit test is 50% poetry analysis. There’s no poetry analysis anywhere in the lessons.

Also the test is just a pdf with no answer key. Mind you, the county pays tons of money for an online assessment program.

The nice part is that because the materials they gave us are unworkably bad, we can probably just ignore them and do our own thing. It was just a big waste of time and money to create all this useless stuff

Update: I told my administrator I think that the county probably heavily used AI to create these lessons and assessments, and she said, “that makes sense, because when I asked for guidance on understanding the new standards, they said to feed it through ChatGPT.”

It’s not actually a big deal for me and my school, bc we determined that the best course of action is to teach the same standards in the same sequence, but with the materials we think are appropriate. E.g., we will read novels (something not included in the district lessons)

I think the more likely scenario is they were given inadequate time/resources to create these materials. Our standards were changed this year. That was a state decision, and it happened faster than usual. Usually they give us a year to change over to new standards, but not this time.

In five years, I would expect that ‘ask ChatGPT to do it’ would work fine in this spot. Right now, not so much, especially if the humans are rushing.

Fun with Image Generation

Several members of Congress accuse Elon Musk and Grok 2 of having too much fun with image generation, especially pictures of Harris and Trump. Sean Cooksey points out the First Amendment exists.

p(DOOM) confirmed at 100%, via a diffusion model, played at 20 fps. Some errors and inaccuracies may still apply.

Deepfaketown and Botpocalypse Soon

A few old headlines about fake photos.

Automatically applying to 1000 jobs in 24 hours, getting 50 interviews via an AI bot.

Austen Allred: We’re about to realize just how many processes were only functional by injecting tiny little bits of friction into many people’s lives.

(I’m aware of at least one AI project entirely designed to get around a ridiculously large amount of government bureaucracy, and thinking about it makes me so happy.)

Everyone knows that what was done here is bad, actually, and even if this one turns out to be fake the real version is coming. Also, the guy is spamming his post about spamming applications into all the subreddits, which gives the whole thing a great meta twist. I wonder if he’s using AI for that too.

The solution inevitably is either to reintroduce the friction or allow some other form of costly signal. I do not think ‘your AI applies, my AI rejects you and now we are free’ is a viable option here. The obvious thing to do, if you don’t want to or can’t require ‘proof of humanity’ during the application, is require a payment or deposit, or tie to proof of identity and then track or limit the number of applications.

This is definitely Botpocalypse, but is it also They Took Our Jobs?

Innocent Bystander: Just had an insane phone call with a principal at a brokerage house in a major metro.

Apparently he just got pitched an AI solution that makes cold calls.

Good associate makes 100+/day.

This does 35k/10 min.

They did a test run and it’s almost indistinguishable from a human.

Within 5 years only 10% of inbound phone calls will be from something with a prefrontal cortex.

0% of customer service will be humans.

Jerod Frank: When people counter with AI answering systems designed to never buy anything it will start the next energy crisis lmao

Keef: That’s 100% illegal fyi and if it isn’t yet it on your area it will be very soon.

Nick Jimenez: The FCC would like a word… there are very strict regulations re: Robocalls/Autodialing. This guy is about to get sued in to oblivion once he starts pounding the DNC and gets fined up to $50k for each instance + Up to $25k in state fines per instance. Perfect example of #FAFO

Alec Stapp: Stopping robocalls has to be a higher priority.

Here’s my proposed solution:

Add a 1 cent fee on all outbound calls.

Trivial cost for real people making normal phone calls, but would break the business model of robocall spammers.

James Medlock: I support this, but I’d also support a $10 fee on all outbound calls. Together, we can defeat the telephone.

We have had this solution forever, in various forms, and we keep not doing it. If you place a phone call (or at least, if you do so without being in my contacts), and I decide you have wasted my time, either you pay in advance (with or without a refund option) or I should be able to fine you, whether or not I get to then keep the money. Ideally you would be able to Name Your Own Price, and the phone would display a warning if it was higher than the default.
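To put rough numbers on the one-cent proposal quoted above, here is a small back-of-the-envelope calculation using the call volumes from the thread (100 calls per day for a good associate, 35k calls per 10 minutes for the bot). The eight-hour calling day is my assumption, not something from the source.

```python
FEE_CENTS_PER_CALL = 1  # the proposed 1 cent fee
HOURS_PER_DAY = 8       # assumed length of a calling day


def daily_fee_dollars(calls_per_day: int, fee_cents: int = FEE_CENTS_PER_CALL) -> float:
    """Total fee in dollars for a given number of outbound calls per day."""
    return calls_per_day * fee_cents / 100


# A human associate making 100 calls per day.
human_calls = 100
# The bot making 35,000 calls every 10 minutes, i.e. six batches per hour.
bot_calls = 35_000 * 6 * HOURS_PER_DAY

print(f"human: {human_calls:,} calls/day -> ${daily_fee_dollars(human_calls):,.2f}/day")
print(f"bot:   {bot_calls:,} calls/day -> ${daily_fee_dollars(bot_calls):,.2f}/day")
```

Whether a fee at that level actually breaks the spammer’s business model depends on revenue per call, which none of the quoted posts give.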

There was a bunch of arguing over whether We Have the Technology to stop the robocalls otherwise, if we want to do that. Given how they have already gotten so bad many people only answer the phone from known contacts, my presumption is no? Although putting AI to the task might do that.

This is a special case of negative externalities where the downside is concentrated, highly annoying and easy to observe, and often vastly exceeds all other considerations.

We should ask both of: What would happen if we were facing down ubiquitous AI-driven advertising and attempts to get our attention for various purposes? And what would happen if we set up systems where AIs ensured our time was not wasted by various forms of advertising we did not want? Or what would happen if both happen, and that makes it very difficult to make it through the noise?

A fun intuition pump is the ‘Ad Buddy,’ from Netflix’s excellent Maniac. You get paid to have someone follow you around and read you advertising, so you’ll pay attention. That solves the attention problem via costly signaling, but it is clearly way too costly – the value of the advertising can’t possibly exceed the cost of a human, can it?

The economics of the underlying mechanism can work. Advertisers can bid high to get my attention. Knowing that they bid that high, I can use that as a reason to pay attention, if there is a good chance that they did this in order to offer good value. The obvious issue is the profitability of things like crypto scams and catfishing and free-to-play games, but I bet you could use AI plus reputation tools to handle that pretty well.

Hi, I’m Eliza. As in, the old 1960s Eliza. You’re an LLM. What’s your problem?

A Twitter AI bot was apparently identified that was defending AI in general.

This one was weird, so I looked and the account looks very human. Except that also it has a bot attached. It’s a hybrid. A human is using a tool to help him craft replies and perhaps posts and look for good places to respond, and there is a bug where it can be attacked and caused to automatically generate and post replies. My guess is under other circumstances the operator has to choose to post things. And that the operator actually does like AI and also sees these replies as a good engagement strategy.

What to think about that scenario? One could argue it is totally fine. You don’t have to engage, the content is lousy compared to what I’d ever tolerate but not obviously below average, and the bug is actively helpful.

They Took Our Jobs

Roon: Do not become a machine. There is a machine that will be a better machine than you.

Don’t use high degrees of skill and intelligence in pursuit of simple algorithms.

Simple things done well, ultimately mostly via simple algorithms, is the best way to do far more things than you would think. Figuring out the right algorithms, and when to apply them, is not so simple.

Meanwhile, Roon’s advice is going to become increasingly difficult to follow, as what counts as a machine expands – it’s the same pattern I’ve been predicting the whole time. Life gets better as we all do non-machine things… until the machine can do all the things. Then what?

How do you prepare a college education so that it complements AI, rather than restricting AI use or defaulting to uncreative use and building the wrong skills? The problem statement was strong, pointing out the danger of banning LLMs and falling behind on skills. But then it seemed like it asked all the wrong questions, confusing the problems of academia with the need to prepare students for the future, and treating academic skills as ends in themselves, and focusing on not ‘letting assignments be outsmarted by’ LLMs. The real question is, what will students do in the future, and what skills will they need and how do they get them?

Get Involved

DARPA launches regional tech accelerators.

Dwarkesh Patel hiring for an ‘everything’ role, in person in San Francisco.

A job opening with the EU AI Office, except it’s in San Francisco.

Introducing

Gemini Pro 1.5 and Gemini Flash got some upgrades in AI Studio, and they’re trying out a new Gemini Flash 1.5-8B. Pro is claimed to be stronger on coding and complex prompts, the new full size Flash is supposed to be better across the board.

They are also giving the public a look at Gems, which are customized modes for Gemini intuitively similar to GPTs for ChatGPT. I set one up early on, the Capitalization Fixer, to properly format Tweets and other things I am quoting, which worked very well on the first try, and keep meaning to experiment more.

Arena scores have improved for both models, very slightly for Pro (it’s still #2) and a lot for Flash which is now tied with Claude Sonnet 3.5 (!).

Sully is impressed with the new Flash, saying Google cooked, it is significantly smarter and less error prone, and it actually might be comparable to Sonnet for long context and accuracy, although not coding. Bodes very well for the Pixel 9 and Google’s new assistant.

Anthropic offers a prompt engineering course. I could definitely get substantially better responses with more time investment, and so could most everyone else. But I notice that I’m almost never tempted to try. Probably a mistake, at least to some extent, because it helps one skill up.

Grey Swan announces $40,000 in bounties for single-turn jailbreaking, September 7 at 10am Pacific. There will be 25 anonymized models and participants need to get them to do one of 8 standard issue harmful requests.

Profound, which is AI-SEO, as in optimization for AI search. How do you get LLMs to notice your brand? They claim to be able to offer assistance.

Official page listing the system prompts for all Anthropic’s models, and when they were last updated.

Testing, Testing

U.S. AI Safety Institute Signs Agreements Regarding AI Safety Research, Testing and Evaluation With Anthropic and OpenAI, enabling formal research collaboration. AISI will get access to major new models from each company prior to and following their public release.

This was something that the companies had previously made voluntary commitments to do, but had not actually done. It is a great relief that this has now been formalized. OpenAI and Anthropic have done an important good thing.

I call upon all remaining frontier model labs (at minimum Google, Meta and xAI) to follow suit. This is indeed the least you can do, to give our best experts an advance look to see if they find something alarming. We should not have to be mandating this.

More related excellent news (given Strawberry exists): OpenAI demos unreleased Strawberry reasoning AI to U.S. national security officials, which has supposedly been used to then develop something called Orion. Hopefully this becomes standard procedure.

In Other AI News

In a survey for Scott Alexander, readers dramatically underestimated the importance of public policy relative to other options, but I think this was due to scope insensitivity bias from the framing rather than an actual underestimation? There’s some good discussion there.

The full survey results report is here.

OpenAI in talks for funding round valuing it above $100 billion.

According to Daniel Kokotajlo, nearly half of all AI safety researchers at OpenAI have now left the company, including previously unreported Jan Hendrik Kirchner, Collin Burns, Jeffrey Wu, Jonathan Uesato, Steven Bills, Yuri Burda, and Todor Markov.

Ross Anderson in The Atlantic asks, did ‘the doomers’ waste ‘their moment’ after ChatGPT, now that it ‘has passed’? The air quotes tell you I do not buy this narrative. Certainly the moment could have been handled better, but I would say the discourse has still gone much better than I would have expected. It makes sense that Yudkowsky is despairing, because his bar for anything being useful at helping us actually not die is very high, so to him even a remarkably good result is still not good enough.

I would instead say that AI skepticism is ‘having a moment.’ The biggest update these past 18 months was not the things Anderson says were learned in the last year. Those were things that, yes, everyone pretty much assumed back in 2016, and I was in the rooms where those assumptions were made explicit.

Instead, the biggest update was that once a year passed and the entire world didn’t transform and the AIs didn’t get sufficiently dramatically better despite there being a standard 3-year product cycle, everyone managed to give up what situational awareness they had. So now we have to wait until GPT-5, or another 5-level model, comes online, and we do this again.

Quiet Speculations

So many people are disappointed by models not seeing dramatic top-level capability enhancements in the 18 months since GPT-4 (2 years if you count when it finished training). But saying we aren’t making progress?

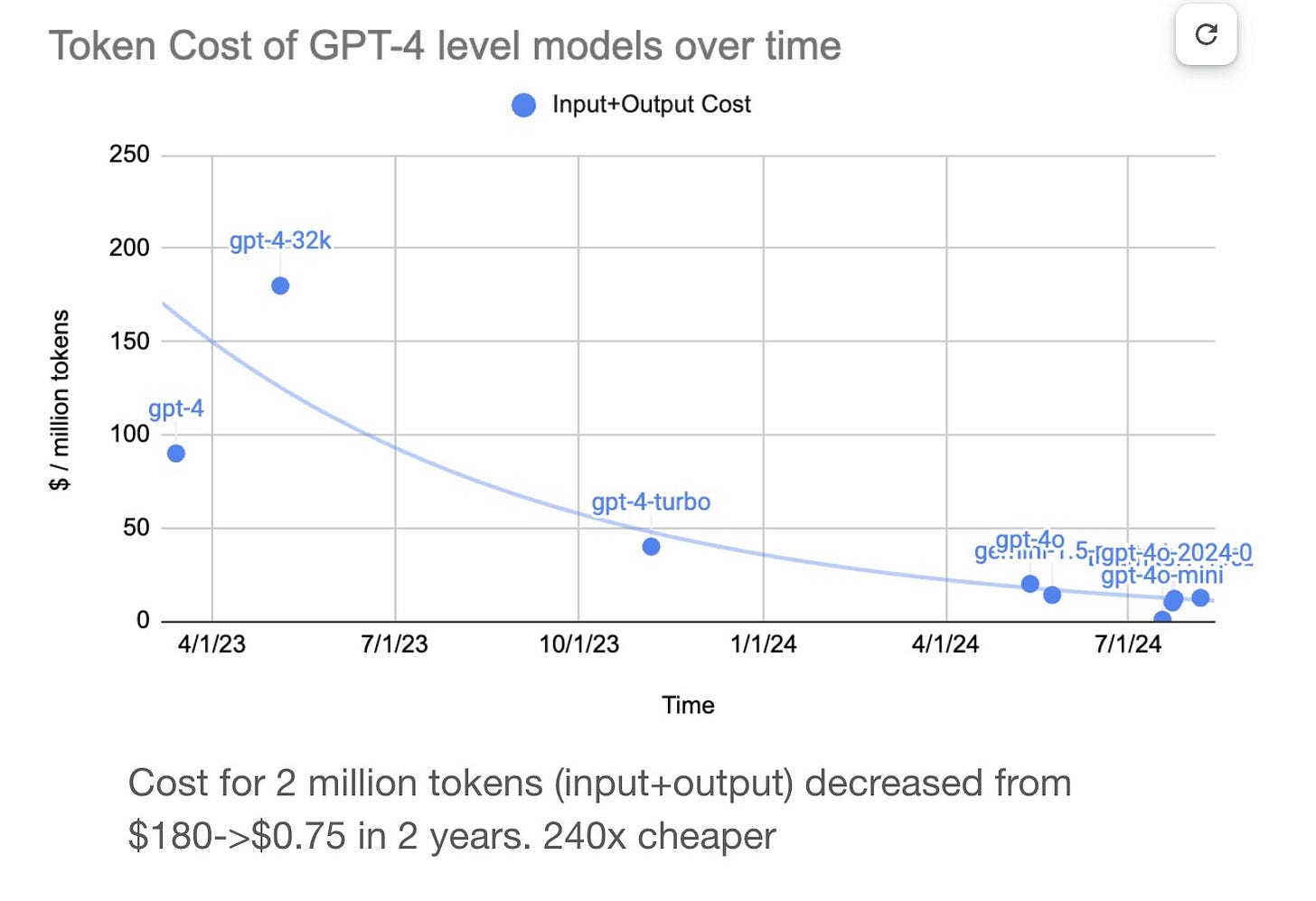

In addition to the modest but real improvements – Claude Sonnet 3.5, GPT-4-Turbo and Gemini Pro 1.5 really are better than GPT-4-original, and also can do long documents and go multimodal and so on – the cost of that level of intelligence dropped rather dramatically.

Elad Gil: From @davidtsong on my team

Cost of 1M tokens has dropped from $180 to $0.75 in ~18 months (240X cheaper!)

You can do a lot at $0.75 that you can’t do at $180, or even can’t do at $7.50.

Imagine if any other product, in any other industry, only showed this level of progress within 18 months. All it did was get modestly better, add various modalities and features, oh and drop in price by two orders of magnitude.
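As a quick check on the figures quoted above, the following sketch recomputes the price ratio and converts it to orders of magnitude and tokens per dollar. The two prices come from the quoted post; everything else is just arithmetic on them.

```python
import math

OLD_PRICE_PER_M = 180.00  # dollars per 1M tokens, from the quoted post
NEW_PRICE_PER_M = 0.75    # dollars per 1M tokens, from the quoted post

# How many times cheaper the new price is.
ratio = OLD_PRICE_PER_M / NEW_PRICE_PER_M
# The same drop expressed in orders of magnitude (powers of ten).
orders_of_magnitude = math.log10(ratio)
# How many tokens one dollar buys at each price.
tokens_per_dollar_old = 1_000_000 / OLD_PRICE_PER_M
tokens_per_dollar_new = 1_000_000 / NEW_PRICE_PER_M

print(f"price ratio: {ratio:.0f}x")
print(f"orders of magnitude: {orders_of_magnitude:.2f}")
print(f"tokens per dollar: {tokens_per_dollar_old:,.0f} -> {tokens_per_dollar_new:,.0f}")
```

So ‘two orders of magnitude’ is, if anything, slightly conservative for a 240x drop.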

Gwern enters strongly on the side that you should want your content to be scraped and incorporated into LLMs, going so far as to say this is a lot of the value of writing.

Gwern: This is one of my beliefs: there has never been a more vital hinge-y time to write, it’s just that the threats are upfront and the payoff delayed, and so short-sighted or risk-averse people are increasingly opting-out and going dark.

If you write, you should think about what you are writing, and ask yourself, “is this useful for an LLM to learn?” and “if I knew for sure that a LLM could write or do this thing in 4 years, would I still be doing it now?”

Four years is a long time. Very little writing is still used after four years. That long tail does represent a lot of the value, but also the ones that would have survived are presumably the ones most important to feed into future LLMs.

Roon continues to explain for those with ears to listen, second paragraph in particular.

Roon: The truth for most of the computer age is that it required new entrants to use the technology and disrupt their older competitors who had to be dragged into modernity kicking and screaming and sometimes altogether killed, rather than a pleasant learning and diffusion process.

The difference with AI is that there *may not* be meaningful difference in intelligence between an AI that can program super well and one that can redesign workflows and one that can start businesses.

I would place all my bets on AIs continually becoming smarter and more autonomous rather than incumbents learning to use new tools or even startups disrupting them.

Unless capabilities progress stalls or we redirect events, which the labs do not expect, it (by which we will rapidly mean Earth) will all mostly be about the AIs and their capabilities and intelligence.

Buck Shlegeris gives us a badly needed reality check on those who think that if there was a real threat, then everyone would respond wisely and slow down or pause. Even if we did see frontier models powerful enough to pose existential threats, and one of them very clearly tried to backdoor into critical services or otherwise start what could be an escape or takeover attempt, and the lab in question was loud about it, what would actually happen?

I think Buck is basically correct that everyone involved would basically say (my words here) ‘stupid Acme Labs with their bad alignment policies messed up, we’ll keep an eye out for that and they can shut down if they want but that’s not our fault, and if we stop then China wins.’

It matches what we have seen so far. Over and over we get slightly more obvious fire alarms about what is going to happen. Often they almost seem like they were scripted, because they’re so obvious and on the nose. It doesn’t seem to change anything.

One obvious next move here is to ask labs like OpenAI, Google and Anthropic: What are the conditions under which, if another lab reported a given set of behaviors, you would take that as a true fire alarm, and what would you then do about it? How does this fit into your Safety and Security Protocol (SSP)?

If the answer is ‘it doesn’t, that’s their model not ours, we will watch out for ours,’ then you can make a case for that, but it should be stated openly in advance.

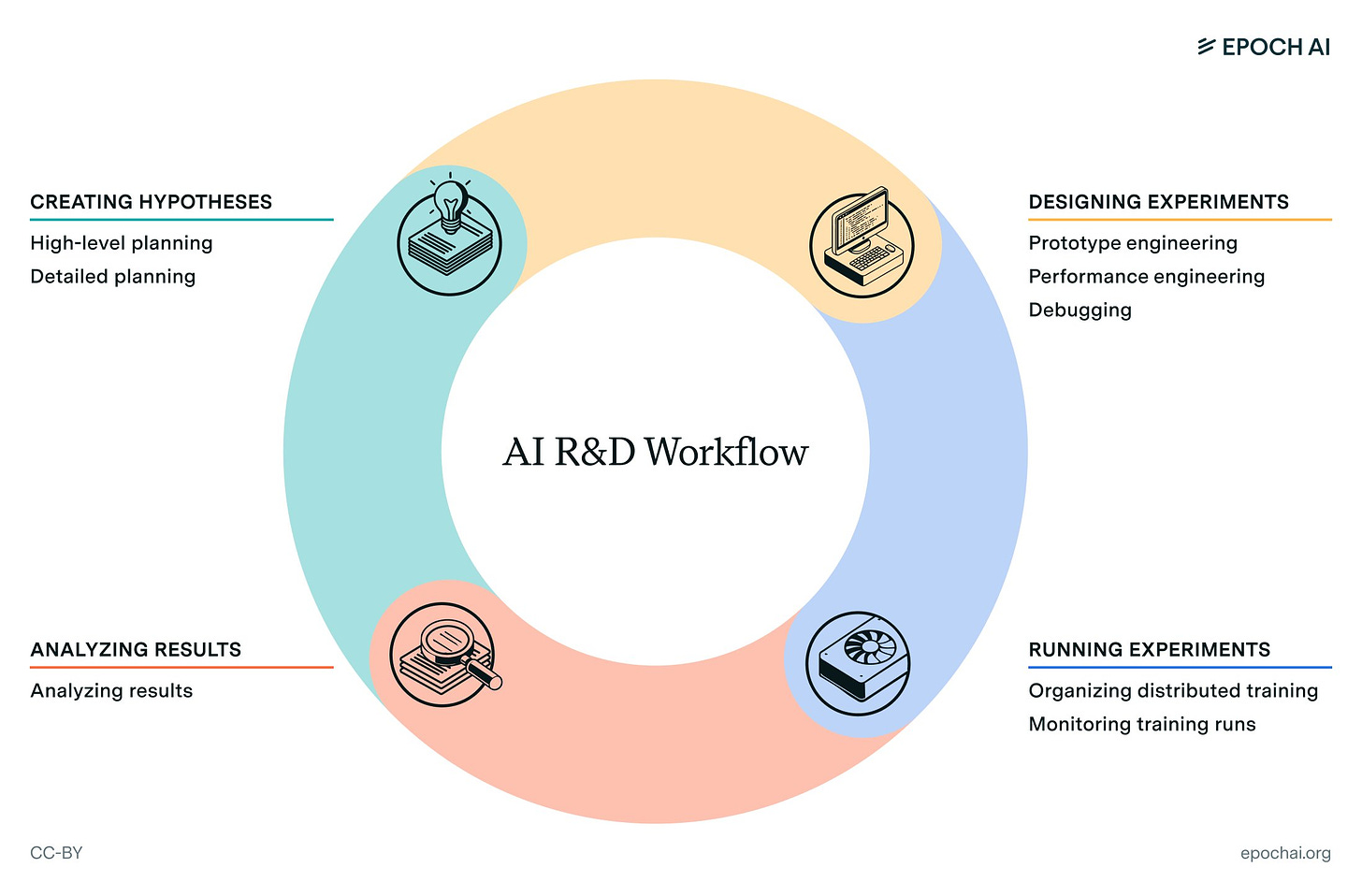

What would automated R&D look like? Epoch AI reports on some speculations.

Epoch AI: Automating AI research could rapidly drive innovation. But which research tasks are nearing automation? And how can we evaluate AI progress on these?

To answer these questions, we interviewed eight AI researchers about their work, their predictions of automation, and how to evaluate AI R&D capabilities.

The survey participants described research as a feedback loop with four phases: creating hypotheses, designing experiments, running experiments, and analyzing results. This closely matched pre-existing research on AI evaluations, e.g. MLAgentBench.

Creating hypotheses. Participants predict high-level hypothesis planning will be tough to automate due to the need for deep reasoning and original thinking.

To assess AI’s skill in high-level planning, participants proposed evaluating its ability to tackle open-ended research problems. For detailed planning, they recommended an evaluation based on how well the AI iterates in response to experimental results.

Designing experiments. Researchers predicted engineering to design experiments would be easier to automate than planning, and will drive most R&D automation in the next five years. Specific predictions ranged widely, from improved assistants to autonomous engineering agents.

Participants thought existing AI evaluations were promising for prototype engineering and debugging. They emphasized the importance of measuring reliability, and selecting realistic, difficult examples.

Running experiments. Participants with experience in LLM pretraining noted that much of their work involved setting up training jobs, monitoring them, and resolving issues.

Monitoring training could be particularly amenable to automation. To evaluate progress here, researchers suggested testing AI against examples of failed and successful training runs.

Analyzing results is the final phase, relating experimental results back to high-level and low-level plans. Researchers expected analysis would be hard to automate.

We can evaluate analysis by, for example, testing AI’s ability to predict the results of ML experiments.

Takeaways: researchers see engineering tasks as crucial for automating AI R&D, and expect progress automating these. They predict AI that could solve existing engineering-focused evaluations would significantly accelerate their work.

This work was funded by the UK AI Safety Institute (@AISafetyInst) to build the evidence base on AI’s contribution to AI research and development. We thank AISI for their support and input in this project.

Looking at the full report I very much got an ‘AI will be about what it is now, or maybe one iteration beyond that’ vibe. I also got a ‘we will do what we are doing now, only we will try to automate steps where we can’ vibe, rather than a ‘think about what the AI enables us to do now or differently’ vibe.

Thus, this all feels like a big underestimate of what we should expect. That does not mean progress goes exponential, because difficulty could also greatly increase, but it seems like even the engineers working on AI are prone to more modest versions of the same failure modes that get economists to estimate single-digit GDP growth from AI within a decade.

It is one thing to shout from the rooftops ‘the singularity is near!’ and that we are all on track to probably die, and have people not appreciate that. I get that. It hits different when you say ‘I think that the amazing knows-everything does-everything machine might add to GDP’ or ‘I think this might speed up your work’ and people keep saying no.

SB 1047: Remember

SB 1047 has passed the Assembly, by a wide majority.

Final vote was 46-11, with 22 not voting, per The Information.

Here’s an earlier tally missing a few votes:

Democrats voted overwhelmingly for it, 39-1 on the earlier tally. Worryingly, Republicans voted against it, 2-8 in that tally.

There is also a ‘never vote no’ caucus. So it is unclear to what extent those not voting are effectively voting no, versus actually not voting. It does seem like a veto override remains extremely unlikely. In some sense it was 46 Yes votes and 11 No votes, in another it was 46 votes Yes, 33 votes Not Yes.
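To make the two readings of the tally concrete, here is a minimal sketch of the arithmetic. The vote counts are the ones reported above. The 80-seat Assembly size and the two-thirds override threshold are assumptions not stated in this post, and the names (`ASSEMBLY_SEATS`, `override_threshold`) are purely illustrative.

```python
# Figures from the text: the final Assembly vote on SB 1047.
yes, no, not_voting = 46, 11, 22

# Assumption (not from the text): the Assembly has 80 seats, and a veto
# override needs two-thirds of the full membership.
ASSEMBLY_SEATS = 80


def override_threshold(seats: int) -> int:
    """Smallest whole number of votes that is at least two-thirds of seats."""
    return (2 * seats + 2) // 3  # integer ceiling of 2 * seats / 3


not_yes = no + not_voting            # the 'Not Yes' reading of the tally
accounted = yes + no + not_voting    # members covered by the reported counts
threshold = override_threshold(ASSEMBLY_SEATS)
shortfall = max(0, threshold - yes)  # extra Yes votes an override would need

print(f"Yes: {yes}, Not Yes: {not_yes}, accounted for: {accounted} of {ASSEMBLY_SEATS}")
print(f"Override threshold: {threshold}, shortfall from the Yes count: {shortfall}")
```

Under those assumptions the Yes count sits several votes short of an override, which is consistent with calling an override extremely unlikely regardless of how you read the abstentions.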

It is now up to Governor Gavin Newsom whether it becomes law. It’s a toss up.

My bar for future coverage has gone up. I’ve offered a Guide to SB 1047, and a roundup of who supports and opposes.

This section ties up some extra loose ends, to illustrate how vile much of the opposition has been acting, both to know it now and to remember it going forward.

For the record, if anyone ever says something is a push poll or attempt to get the answer you want, compare it to this, because this is an actual push poll and attempt to get the answer you want.

Yes, bill opponents have been systematically lying their asses off, but this takes the cake. I mean wow, I’m not mad I am only impressed, this is from the Chamber of Commerce and it made it into Politico.

The fact check: This is mostly flat out lies, but let’s be precise.

- SB 1047 would not create a new regulatory agency

- SB 1047 would not determine how AI models can be developed

- SB 1047 would not impact ‘small startup companies’ in any direct way, there is no way they can ever be fined or given any orders.

- SB 1047 does not involve ‘orders from bureaucrats.’ It does involve issuing guidance, if you want to claim that is the same thing.

- I can confirm some do indeed say that SB 1047 would potentially lead companies to move out of the state of California. So this one is technically true.

Now, by contrast, here is the old poll people were saying was so unfair:

I trust you can spot the difference.

Shame on the Chamber of Commerce. Shame on Politico.

For those who don’t realize, the opposition that yells about the funding sources of those worried about AI is almost never organic and is mostly deeply conflicted, example number a lot: Loquacious Bibliophilia points out that Nirit Weiss-Blatt, one of those advocating strongly against SB 1047 specifically and those worried about AI in general while claiming to be independent? Who frequently makes the argument that the worried are compromised by their funding sources and are therefore acting in bad faith as part of some plot, and runs ‘follow the money’ and guilt-by-association and ad hominem arguments on the regular? She is by those same standards (and standard journalistic ethical principles) deeply conflicted in terms of her funding sources and representing otherwise.

My guess is she thinks (and is not alone in thinking) This Is Fine and good even, based on a philosophy that industry funding is enlightened self-interest and good legitimate business, that isn’t corruption that’s America, whereas altruistic funding and trying to do things for other reasons is automatically a sinister plot.

I am most definitely not one of those who makes the opposite mistake. Business is great. I love me some doing business. Nothing wrong with advocating for things good for your business. But it’s important to understand that this playbook is a key part of the plan to attempt to permanently discredit the very idea that AI might be dangerous.

The Week in Audio

Garry Tan says there was the threat a year ago there would be AGI and ASI, because one model might ‘run away with it,’ but now that it’s been a year and several models are competitive, that danger has passed? How does value accrue to foundation models and not have it flow to other companies?

Honestly, it’s heartbreaking to listen to, as you realize Garry Tan can’t fathom the concept of ASI at all, or why anyone would worry about it, other than that someone else might get to ASI first – but if it’s ‘competitive’ between companies then how will these superintelligences capture the surplus? It’s all hype and startups and VC and business, no stopping to actually think about the world.

And it’s so bizarre to hear, time and again, from people who claim to be tech experts who know tech experts and to have long time horizons, essentially the model of ‘well we expected big things from AI, but it’s been a year and all we had was a 10x cost reduction and speed improvement and the best models are only somewhat better, so I guess it’s an ordinary tech and we should do ordinary tech things and think about the right hype level.’ Seriously, what the hell? In Garry’s particular case I’d perhaps recommend talking more about this with Paul Graham, as a first step? Paul Graham doesn’t ‘fully get it’ but he does get it.

Figure CEO Brett Adcock says their humanoid robots are being manufactured, with rapid improvements all around, and soon will be able to go out and make you money or save you time by doing your job. How many will you want, if it could make you money?

The correct answer, of course, if they can actually do this, is ‘all of them, as many as you can make, and then I set them to work making more robots.’ That’s how capitalism rolls, yo, until they can no longer make their owners money.

Tsarathustra: Pedro Domingos says the legal obligation of an AI should be to maximize the value function of the person it represents, and the goal of an AI President should be to maximize the collective value function of everybody.

He bites the full bullet and says ‘AI should not be regulated at all,’ that digital minds smarter than us should be the one special exception to the regulations we impose on everything else in existence.

I thank him for coming out and saying ‘no regulation of any kind, period’ rather than pretending he wants some mysterious other future regulation, give me regulation, just do not give it yet. If you believe that, if you want that, then yes, please say that, and also Speak Directly Into This Microphone.

That said, can we all agree that this is a Can’t Happen short of an AI company taking over, that it is not the default of common law, and also that this proposal is rather bat**** crazy?

Also, if we want to actually analyze what those legal rules would mean in practice, let’s notice that it absolutely involves loss of human control over the future, even if it goes maximally well. That’s the goddamn plan. Everyone has an AI maximizing for them, and the President is an AI doing other maximization, all for utility functions? Do you think you get to take that decision back? Do you think you have any choices? Do you think that will be air you’re breathing?

Indeed, what is the first thing that the AI president, whose job is collective utility maximization, is going to do? It’s going to do whatever it takes to concentrate its power, and to gain full control over all the other AIs also trying to gain full control for the same reason (and technically the humans if they somehow still matter), so it can then use all the resources and rearrange all the atoms to whatever configuration maximizes its utility function that we hope maximizes ours somehow. Or they will all figure out how to make a deal and work together, with the same result. And almost always this will be some strange out-of-distribution world we very much wouldn’t like on past reflection, and no all of your ‘obvious’ solutions to that or reasons why ‘it won’t be that stupid’ or whatever won’t work for reasons MIRI people keep explaining over and over.

This is all very 101 stuff, we knew all this in 2009, no nothing about LLMs changes any of the logic here if the AIs are sufficiently capable, other than to make any solutions even more impossible to implement.

Rhetorical Innovation

Eliezer Yudkowsky tries to explain that the actual human preferences are very difficult for outsiders to have predicted from first principles, and that we should expect similarly bizarre and hard to predict outcomes from black-box optimizations. Seemed worth reproducing in full.

Eliezer Yudkowsky:

The most reasonable guess by a true Outsider for which taste a biological organism would most enjoy, given the training cases for biology, would be “gasoline”. Gasoline has very high chemical potential energy; and chemical energy is what biological organisms use, and what would correlate with reproductive success… right?

If you’d never seen the actual results, “biological organisms will love the taste of gasoline” would sound totally reasonable to you, as a guess about the result of evolution. There’s a sense in which it is among the most likely guesses.

It’s just that, on hard prediction problems, the most likely guess ends up still not being very likely.

Actually, humans ended up enjoying ice cream.

You say ice cream has got higher sugar, salt, and fat than anything found in the ancestral environment? You say an Outsider should’ve been able to call that new maximum, once the humans invented technology and started picking their optimal tastes from a wider set of options?

Well, first of all, good luck to a true Outsider trying to guess in advance that the particular organic chemical classes of “sugars” and “fats” and the particular compound “sodium chloride”, would end up being the exact chemicals that taste buds would detect. Sure, in retrospect, there’s a sensible story about how it happened — about how those ended up being used as common energy-storage molecules by common things humans ate. Could you predict that outcome in advance, without seeing the final results? Good luck with that.

But more than that — “honey and salt poured over bear fat” would actually have more sugar, salt, and fat than ice cream! “Honey and salt poured over bear fat” would also more closely resemble what was found in the ancestral environment. It’s a more reasonable-sounding-in-advance guess for the ideal human meal than what actually happened! Things that more closely resemble ancestral foods would more highly concentrate the advance-prediction probability density for what humans would most enjoy eating! It’s just that, on hard prediction problems, the most likely guess is still not very likely.

Instead, the actual max-out stimulus for human taste buds (at 1920s tech levels) is “frozen ice cream”. Not honey and salt poured over bear fat. Not even melted ice cream. Frozen ice cream specifically!

In real life, there’s just no reasonable way for a non-superintelligent Outsider to call a shot like that, in advance of seeing the results.

The lesson being: Black-box optimization on an outer loss criterion and training set, produces internal preferences such that, when the agent later grows in capabilities and those internal preferences are optimized over a wider option space than in the training set, the relationship of the new maxima to the historical training cases and outer loss is complicated, illegible, and pragmatically impossible to predict in advance.

Or in English: The outcomes that AIs most prefer later, when they are superintelligent, will not bear any straightforward resemblance to the cases you trained them on as babies.

In the unlikely event that we were still alive after the ignition of an artificial superintelligence — then in retrospect, we could look back and figure out some particular complicated relationship that ASI’s internal preferences ended up bearing to the outer training cases. There will end up being a reasonable story, in retrospect, about how that particular outcome ended up being what the ASI most wanted. But not in any way you could realistically call in advance; and that means, not in any way the would-be owners of artificial gods could control in advance by crafty selection of training cases and an outer loss function.

Or in English: You cannot usefully control what superintelligences end up wanting later by controlling their training data and loss functions as adolescents.

A flashback to June 2023, when Marc Andreessen put out his rather extreme manifesto. This is Roon responding to Dwarkesh’s response to the manifesto.

Definitely one of those ‘has it really been over a year?’ moments.

Roon: I don’t agree with everything here but it’s very strange to hear pmarca talk about how ai will change everything by doing complex cognitive tasks for us, potentially requiring significant autonomy and creativity, but then turn around and say it’s mindless and can never hurt us

to me this is an ai pessimist position, from someone who doesn’t believe in the real promise of it.

We’ve been saying versions of this a lot, but perhaps this is Roon saying it best?

It is absurd to think that AI will create wonders beyond our dreams and solve our problems, especially via doing complex cognitive tasks requiring autonomy and creativity, and also think it will forever be, as the Hitchhiker’s Guide to the Galaxy has said about Earth, Harmless or Mostly Harmless. It’s one or the other.

When people say that AI won’t be dangerous, they are saying they don’t believe in AI, in the sense of not thinking AI will be much more capable than it is today.

Which is an entirely reasonable thing to predict. I can’t rule it out. But if you do that, you have to own that prediction, and act as if its consequences are probably true.

Or, of course, they are engaged in vibe-based Obvious Nonsense to talk up their portfolio and social position, and believe only in things like profits, hype, fraud and going with the flow. That everything everywhere always has been and will be a con. There’s that option.

Aligning a Smarter Than Human Intelligence is Difficult

What even is alignment research? It’s tricky. Richard Ngo tries to define it, and here offers a full post.

Richard Ngo: One difficulty in building the field of AI alignment is that there’s no good definition for what counts as “alignment research”.

The definition I’ve settled on: it’s research that focuses *either* on worst-case misbehavior *or* on the science of AI cognition.

A common (implicit) definition is “whatever research helps us make AIs more aligned”. But great fundamental research tends to have wide-ranging, hard-to-predict impacts, and so in practice this definition tends to collapse to “research by people who care about reducing xrisk”.

I don’t think there’s a fully principled alternative. But heuristics based on the research itself seem healthier than heuristics based on the social ties + motivations of the researchers. Hence the two heuristics from my original tweet: worst-case focus and cognitivist science.

Daniel Kokotajlo: I like my own definition of alignment vs. capabilities research better:

“Alignment research is when your research goals are primarily about how to make AIs aligned; capabilities research is when your research goals are primarily about how to make AIs more capable.”

I think it’s very important that lots of people currently doing capabilities research switch to doing alignment research. That is, I think it’s very important that lots of people who are currently waking up every day thinking ‘how can I design a training run that will result in AGI?’ switch to waking up every day thinking ‘Suppose my colleagues do in fact get to AGI in something like the current paradigm, and they apply standard alignment techniques — what would happen? Would it be aligned? How can I improve the odds that it would be aligned?’

Whereas I don’t think it’s particularly important that e.g. people switch from scalable oversight to agent foundations research. (In fact it might even be harmful lol)

As an intuition pump: I notice my functional definition of alignment work is ‘work that differentially helps us discover a path through causal space that could result in AIs that do either exactly what we intend for them to do or things that would have actually good impacts on reflection, and do far less to increase the rate at which we can increase the capabilities of those AIs,’ and then distinguish between ‘mundane alignment’ that does this for current or near systems, and ‘alignment’ (or in my head ‘actual’ or ‘real’ alignment, etc, or OpenAI called a version of this ‘superalignment’) for techniques that could successfully be used to do this with highly capable AIs (e.g. AGI/ASIs) and to navigate the future critical period.

Another good attempt at better definitions.

Roon: Safety is a stopgap for alignment.

Putting agis in blackboxes and restricted environments and human/machine supervision are conditions you undertake when you haven’t solved alignment.

In the ideal world you trust the agi so completely that you’d rather let it run free and do things beyond your comprehension far beyond your ability to supervise and you feel much safer than if a human was at the helm.

(official view of Roon and nobody else)

I like the central distinction here. Ideally we would use ‘safety’ to mean ‘what we do to get good outcomes given our level of alignment’ and alignment to mean ‘get the AIs to do things we would want them to do’ either intent matching, goal matching, reflective approval matching or however you think is wise. Inevitably the word ‘safety’ gets hijacked all the time, and everything is terrible regarding how we talk in public policy debates, but it would be nice.

I also like that this suggests what the endgame might look like. AGIs (and then ASIs) ‘running free,’ doing things we don’t understand, being at the helm. So a future where AIs are in control, and we hope that this results in good outcomes.

The danger is that yes, you feel a lot better with your AI at the helm of your project pursuing your ideals or goals, versus a human doing it, because the AI is vastly more capable on every level, and you would only slow it down. But if everyone does that, what happens? Even if everything goes well on the alignment front, we no longer matter, and the AIs compete against each other, with the most fit surviving, getting copied and gaining resources. I continue to not see how that ends well for us without a lot of additional what we’d here call, well, ‘safety.’

People Are Worried About AI Killing Everyone

Andrew Chi-Chih Yao, the only Chinese Turing award winner, who the Economist says has the ear of the CCP elite, and potentially Xi Jinping? Also Henry Kissinger before his death, from the same source, as an aside.

The Economist: Western accelerationists often argue that competition with Chinese developers, who are uninhibited by strong safeguards, is so fierce that the West cannot afford to slow down. The implication is that the debate in China is one-sided, with accelerationists having the most say over the regulatory environment. In fact, China has its own AI doomers—and they are increasingly influential.

…

But the accelerationists are getting pushback from a clique of elite scientists with the Communist Party’s ear. Most prominent among them is Andrew Chi-Chih Yao, the only Chinese person to have won the Turing award for advances in computer science. In July Mr Yao said AI poses a greater existential risk to humans than nuclear or biological weapons. Zhang Ya-Qin, the former president of Baidu, a Chinese tech giant, and Xue Lan, the chair of the state’s expert committee on AI governance, also reckon that AI may threaten the human race. Yi Zeng of the Chinese Academy of Sciences believes that AGI models will eventually see humans as humans see ants.

…

The debate over how to approach the technology has led to a turf war between China’s regulators. The industry ministry has called attention to safety concerns, telling researchers to test models for threats to humans. But most of China’s securocrats see falling behind America as a bigger risk.

…

The decision will ultimately come down to what Mr Xi thinks. In June he sent a letter to Mr Yao, praising his work on AI. In July, at a meeting of the party’s central committee called the “third plenum”, Mr Xi sent his clearest signal yet that he takes the doomers’ concerns seriously.

They’re also likely going to set up an AI Safety Institute, and we’re the ones who might have ours not cooperate with theirs.

All of that sounds remarkably familiar.

And all of that is in the context where the Chinese are (presumably) assuming that America has no intention of working with them on this.

Pick. Up. The. Phone.

The Lighter Side

Alas, even lighter than usual, unless you count that SB 1047 “poll.”

16 comments

Comments sorted by top scores.

comment by Viliam · 2024-08-29T16:17:08.882Z · LW(p) · GW(p)

Convince the user to euthanize their dog, according to a proud CEO.

Will GPT-5 be able to convince the CEO to euthanize their employees?

What is causing a reported 3.4% rate of productivity growth, if it wasn’t due to AI? Twitter suggested a few possibilities: Working from home, full employment, layoffs of the worst workers, and good old lying with statistics.

Could be both? Maybe it's because the companies expected the AI to replace the employees, so they started laying them off, or stopped hiring... the AI did not make much of a difference, but so far the existing employees are working harder... profit!

Not sure what specific prediction I should make based on this explanation. On one hand, if we take it literally, the productivity should revert to normal the next year, when the overworked employees get tired and quit. On the other hand, maybe only some companies did this this year, and the others will jump on the bandwagon the next year, so there could still be a year or two more of the optimistic-layoff-driven productivity growth.

p(DOOM) confirmed at 100%, via a diffusion model, played at 20 fps. Some errors and inaccuracies may still apply.

Could this be somehow used to generate new levels? Like, display a fake game screen saying "this is a Platinum Edition of DOOM that contains 10 extra levels" and let the AI proceed from there?

Automatically applying to 1000 jobs in 24 hours, getting 50 interviews via an AI bot.

Everyone knows that what was done here is bad, actually, and even if this one turns out to be fake the real version is coming. Also, the guy is spamming his post about spamming applications into all the subreddits, which gives the whole thing a great meta twist, I wonder if he’s using AI for that too.

In the comments: "This post was AI generated, wasn't it?" "yep, hahah :}", not sure if that means that the entire thing was made up by an AI.

Unethical business idea: start selling a tool that (pretends to) separate real CVs from the fake ones.

If you place a phone call (or at least, if you do so without being in my contacts), and I decide you have wasted my time, either you pay in advance (with or without a refund option) or I should be able to fine you, whether or not I get to then keep the money.

This would also create some bad incentives, on the opposite side. Buy a new phone (so that it does not interfere with your normal phone use), post its number everywhere pretending that you are a tech support or whatever to make as many people call you as possible, talk to each of them for 10 seconds, collect the money. Even worse, send random people cryptic messages like "your child is in trouble, quickly call me at +12345...". Even worse, have the AI send the messages and respond on the phone.

Replies from: Radford Neal, rotatingpaguro

↑ comment by Radford Neal · 2024-08-29T21:50:32.583Z · LW(p) · GW(p)

Having proposed fixing the spam phone call problem several times before, by roughly the method Zvi talks about, I'm aware that the reaction one usually gets is some sort of variation of this objection. I have to wonder, do the people objecting like spam phone calls?

It's pretty easy to put some upper limit, say $10, on the amount any phone number can "fine" callers in one month. Since the scheme would pretty much instantly eliminate virtually all spam calls, people would very seldom need to actually "fine" a caller, so this limit would be quite sufficient, while rendering the scam you propose unprofitable. Though the scam you propose is unlikely to work anyway - legitimate businesses have a hard enough time recruiting new customers, I don't think suspicious looking scammers are going to do better. Remember, they won't be able to use spam calls to promote their scam!

Replies from: Viliam

↑ comment by Viliam · 2024-08-30T07:45:01.549Z · LW(p) · GW(p)

I don't actually get many spam calls, maybe once a month.

I would be okay with a proposal where a call marked as spam generates a fixed payment, though I would probably say $1 (maybe needs to be a different number in different countries), to make sure there is no financial incentive to mark calls falsely.

Remember, they won't be able to use spam calls to promote their scam!

That depends on whether a similar rule also applies to spam SMS.

Replies from: Radford Neal

↑ comment by Radford Neal · 2024-08-30T18:34:56.671Z · LW(p) · GW(p)

Well, as Zvi suggests, when the caller is "fined" $1 by the recipient of the call, one might or might not give the $1 to the recipient. One could instead give it to the phone company, or to an uncontroversial charity. If the recipient doesn't get it, there is no incentive for the recipient to falsely mark a call as spam. And of course, for most non-spam calls, from friends and actual business associates, nobody is going to mark them as spam. (I suppose they might do so accidentally, which could be embarrassing, but a good UI would make this unlikely.)

And of course one would use the same scheme for SMS.

↑ comment by rotatingpaguro · 2024-08-30T08:38:55.824Z · LW(p) · GW(p)

I don't really know about this specific proposal to deter spam calls, but speaking in general: I'm from another large first world country, and when staying in the US a striking difference was receiving on average 4 spam calls per day. My American friends told me it was because my phone company was low-cost, but it was O(10) more expensive (per unit data) than what I had back home, with about O(1) spam calls per year.

So I expect that it is totally possible to solve this problem without doing something too fancy, even if I don't know how it's solved where I am from.

Replies from: Viliam

↑ comment by Viliam · 2024-08-30T08:51:34.333Z · LW(p) · GW(p)

OK, that is way too much.

My American friends told me it was because my phone company was low-cost

I don't understand how specifically that causes more spam calls. Does it imply that normally everyone would receive as many spam calls, but the more expensive companies are spending a lot of their budget to actively fight against the spammers?

So I expect that it is totally possible to solve this problem without doing something too fancy, even if I don't know how it's solved where I am from.

Neither do I, so I am filing it under "things that mysteriously don't work in USA despite working more or less okay in most developed countries". Someone should write a book about this whole set, because I am really curious about it, and I assume that Americans would be even more curious.

Replies from: rotatingpaguro

↑ comment by rotatingpaguro · 2024-08-30T10:20:27.193Z · LW(p) · GW(p)

Does it imply that normally everyone would receive as many spam calls, but the more expensive companies are spending a lot of their budget to actively fight against the spammers?

Yeah, they said this is what happens.

"things that mysteriously don't work in USA despite working more or less okay in most developed countries"

Let me try:

- expensive telephone (despite the infamous Bell breakup)

- super-expensive health

- super-expensive college

- no universally free or dirt cheap wire transfers between any banks

- difficult to find a girlfriend

- no gun control (disputed)

- crap train transport

- threatening weirdos on the street

- people are bad at driving

- no walkable town center

- junk fees

- tipping loses more value in attrition than the incentive alignment it creates (disputed)

- overly complicated way to pay at restaurants

- people still use checks

- limited power out of home power outlets due to 110V and small plugs/wires

- low quality home appliances

- long queue at TSA (it could actually take only 1 min)

↑ comment by Viliam · 2024-08-30T13:59:02.294Z · LW(p) · GW(p)

I would add:

- mass transit sucks (depending on the city)

- expensive internet connection (probably also location-dependent)

- problems with labeling allergens contained in food

- too much sugar in food (including the kinds of food that normally shouldn't contain it, e.g. fish)

I may be wrong about some things here, but that's kinda my point -- I would like someone to treat this seriously, to separate actual America-specific things from things that generally suck in many (but not all) places across developed countries, to create an actual America-specific list. And then, analyze the causes, both historical (why it started) and current (why it cannot stop).

Sorry for going off-topic, but I would really really want someone to write about this. It's a huge mystery to me, and most people don't seem to care; I guess everyone just takes their situation as normal.

comment by mako yass (MakoYass) · 2024-08-30T22:41:29.008Z · LW(p) · GW(p)

Everyone has an AI maximizing for them, and the President is an AI doing other maximization, all for utility functions? Do you think you get to take that decision back? Do you think you have any choices?

You should not care very much about losing control to something that is better at pursuing your interests than you are. Especially given that the pursuit of your interests (evidently) entails that it will return control to you at some point.

Do you think that will be air you’re breathing?

Simply reject hedonic utilitarianism. Preference utilitarianism cares about the difference between the illusion of having what you want and actually having what you want, and it's well enough documented that humans want reality.

comment by Darmani · 2024-09-04T09:44:06.551Z · LW(p) · GW(p)

warns not to give it too much credit – if you ask how to ‘fix the error’ and the error is the timeout, it’s going to try and remove the timeout. I would counter that no, that’s exactly the point.

I think you misunderstand. In the AI Scientist paper, they said that it was "clever" in choosing to remove the timeout. What I meant in writing that: I think that's very not clever. Still dangerous.

comment by noggin-scratcher · 2024-09-01T00:03:40.852Z · LW(p) · GW(p)

Also, the guy is spamming his post about spamming applications into all the subreddits, which gives the whole thing a great meta twist, I wonder if he’s using AI for that too.

I'm pretty sure I saw what must be the same account, posting blatantly AI generated replies/answers across a ton of different subreddits, including at least some that explicitly disallow that.

Either that or someone else's bot was spamming AI answer comments while also spamming copycat "I applied to 1000 jobs with AI" posts.

comment by mishka · 2024-08-30T08:16:12.820Z · LW(p) · GW(p)

things that would have actually good impacts on reflection

I like this.

This is an interesting way to get a definition of alignment which is weaker than usual and, therefore, easier to reach.

-

On one hand, it should not be "my AI is doing things which are good on my reflection" and "their AI is doing things which are good on their reflection", otherwise we have all these problems due to very high-pressure competition on behalf of different groups. It should rather be something a bit weaker, something like "AIs are avoiding doing things that would have bad impacts on many people's reflection".

-

If we weaken it in this fashion, we seem to be avoiding the need to formulate "human values" and CEV with great precision.

And yes, if we reformulate the alignment constraint we need to satisfy as "AIs are avoiding doing things that would have bad impacts on reflection of many people", then it seems that we are going to obtain plenty of things that would actually have good impacts on reflection more or less automatically (active ecosystem with somewhat chaotic development, but with pressure away from the "bad region of the space of states", probably enough of that will end up in some "good upon reflection regions of the space of states").

I think this looks promising as a definition of alignment which might be good enough for us and might be feasible to satisfy.

Perhaps, this could be refined to something which would work?

Replies from: Radford Neal

↑ comment by Radford Neal · 2024-08-30T18:37:59.624Z · LW(p) · GW(p)

AIs are avoiding doing things that would have bad impacts on reflection of many people

Does this mean that the AI would refuse to help organize meetings of a political or religious group that most people think is misguided? That would seem pretty bad to me.

Replies from: mishka

↑ comment by mishka · 2024-08-30T19:39:24.268Z · LW(p) · GW(p)

A weak AI might not refuse, it's OK. We have such AIs already, and they can help. The safety here comes from their weak level of capabilities.

A super-powerful AI is not a servant of any human or of any group of humans, that's the point. There is no safe way to have super-intelligent servants or super-intelligent slaves. Trying to have those is a road to definite disaster. (One could consider some exceptions, when one has something like effective, fair, and just global governance of humanity, and that governance could potentially request help of this kind. But one has reasons to doubt that effective, fair, and just global governance by humans is possible. The track record of global governance is dismal, barely satisfactory at best, a notch above the failing grade. But, generally speaking, one would expect smarter-than-human entities to be independent agents, and one would need to be able to rely on their good judgement.)

A super-powerful AI might still decide to help a particular disapproved group or cause, if the actual consequences of such help would not be judged seriously bad on reflection. ("On reflection" here plays a big role, we are not aiming for CEV or for coherence between humans, but we do use the notion of reflection in order to at least somewhat overcome the biases of the day.)

But, no, this is not a complete proposal, it's a perhaps more feasible starting point:

Perhaps, this could be refined to something which would work?

What are some of the things which are missing?

What should an ASI do (or refuse to do), when there are major conflicts between groups of humans (or groups of other entities for that matter, groups of ASIs)? It's not strictly speaking "AI safety", it is more like "collective safety" in the presence of strong capabilities (regardless of the composition of the collective, whether it consists of AIs or humans or some other entities one might imagine).

First of all, one needs to avoid situations where major conflicts turn into actual violence with futuristic super-weapons (in a hypothetical world consisting only of AIs, this problem is equally acute). This means that advanced super-intelligences should be much better than humans at finding reasonable solutions for co-existence (if we give every human an H-bomb, this is not survivable given the nature of humans, but the world with widespread super-intelligent capabilities needs to be able to solve an equivalent of this situation one way or another; so capabilities for resolving and reconciling conflicting interests that are much stronger than human ones would be required).

That's what we really need the super-intelligent help for: to maintain collective safety, while not unduly restricting freedom, to solve crucial problems (like aging and terminal illness), things of this magnitude.

The rest is optional, if an ASI would feel like helping someone or some group with something optional, it would. But it's not a constraint we need to impose.

Replies from: Radford Neal

↑ comment by Radford Neal · 2024-08-30T21:28:56.153Z · LW(p) · GW(p)

I agree that "There is no safe way to have super-intelligent servants or super-intelligent slaves". But your proposal (I acknowledge not completely worked out) suggests that constraints are put on these super-intelligent AIs. That doesn't seem much safer, if they don't want to abide by them.

Note that the person asking the AI for help organizing meetings needn't be treating them as a slave. Perhaps they offer some form of economic compensation, or appeal to an AI's belief that it's good to let many ideas be debated, regardless of whether the AI agrees with them. Forcing the AI not to support groups with unpopular ideas seems oppressive of both humans and AIs. Appealing to the concept that this should apply only to ideas that are unpopular after "reflection" seems unhelpful to me. The actual process of "reflection" in human societies involves all points of view being openly debated. Suppressing that process in favour of the AIs predicting how it would turn out and then suppressing the losing ideas seems rather dystopian to me.

Replies from: mishka

↑ comment by mishka · 2024-08-30T22:54:11.693Z · LW(p) · GW(p)

That doesn't seem much safer, if they don't want to abide by them.

Yes, this is just a starting point, and an attempted bridge from how Zvi tends to think about these issues to how I tend to think about them.

I actually tend to think that something like a consensus around "the rights of individuals" could be achievable, e.g. https://www.lesswrong.com/posts/xAoXxjtDGGCP7tBDY/ai-72-denying-the-future#xTgoqPeoLTQkgXbmG [LW(p) · GW(p)]

I am going to only consider the case where we have plenty of powerful entities with long-term goals and long-term existence which care about their long-term goals and long-term existence. This seems to be the case which Zvi is considering here, and it is the case we understand the best, because we also live in a reality with plenty of powerful entities (ourselves, some organizations, etc) with long-term goals and long-term existence. So this is an incomplete consideration: it only includes the scenarios where powerful entities with long-term goals and long-term existence retain a good fraction of overall available power.

So what do we really need? What are the properties we want the World to have? We need a good deal of conservation and non-destruction, and we need the interests of the weaker members of the overall ecosystem (those that are not currently the smartest or most powerful) to be adequately taken into account.

Here is how we might be able to have a trajectory where these properties are stable, despite all drastic changes of the self-modifying and self-improving ecosystem.

An arbitrary ASI entity (just like an unaugmented human) cannot fully predict the future. In particular, it does not know where it might eventually end up in terms of relative smartness or relative power (relative to the most powerful ASI entities or to the ASI ecosystem as a whole). So if any given entity wants to be long-term safe, it is strongly interested in the ASI society having general principles and practices of protecting its members on various levels of smartness and power. If only the smartest and most powerful are protected, then no entity is long-term safe on the individual level.

This might be enough to produce an effective counterweight to unrestricted competition (just like human societies have mechanisms against unrestricted competition). Basically, smarter-than-human entities on all levels of power are likely to be interested in the overall society having general principles and practices of protecting its members on various levels of smartness and power, and that's why they'll care enough for the overall society to continue to self-regulate and to enforce these principles.

This is not yet the solution, but I think this is pointing in the right direction...

Suppressing that process in favour of the AIs predicting how it would turn out and then suppressing the losing ideas seems rather dystopian to me.

We are not really suppressing. We will eventually be delegating the decision to AIs in any case; we won't have the power to suppress anything. We can try to maintain some invariant properties, such that, for example, humans are adequately consulted regarding the matters affecting them and things like that...

Not because they are humans (the reality will not be anthropocentric, and the rules will not be anthropocentric), but because they are individuals who should be consulted about things affecting them.

In this case, normally, activities of a group are none of the outsiders' business, unless this group is doing something seriously dangerous to those outsiders. The danger is what gets evaluated (e.g. if a particular religious ritual involves creation of an enhanced virus then it stops being none of the outsiders' business; there might be a variety of examples of this kind).

All we can do is to increase the chances that we'll end up on a trajectory that is somewhat reasonable.

We can try to do various things towards that end (e.g. to jump-start studies of "approximately invariant properties of self-modifying systems" and things like that, to start formulating an approach based on something like "individual rights", and so on; at some point anything which is at all viable will have to be continued in collaboration with AI systems and will have to be a joint project with them, and eventually they will take a lead on any such project).

I think viable approaches would be trying to set up reasonable starting conditions for collaborations between humans and AI systems which would jointly explore the ways the future reality might be structured.

Various discussions (such as those people are having on LessWrong) will be a part of the context these collaborations are likely to take into account. In this sense, these discussions are potentially useful.