Recursion in AI is scary. But let’s talk solutions.

post by Oleg Trott (oleg-trott) · 2024-07-16T20:34:58.580Z · LW · GW · 10 comments

(Originally on substack)

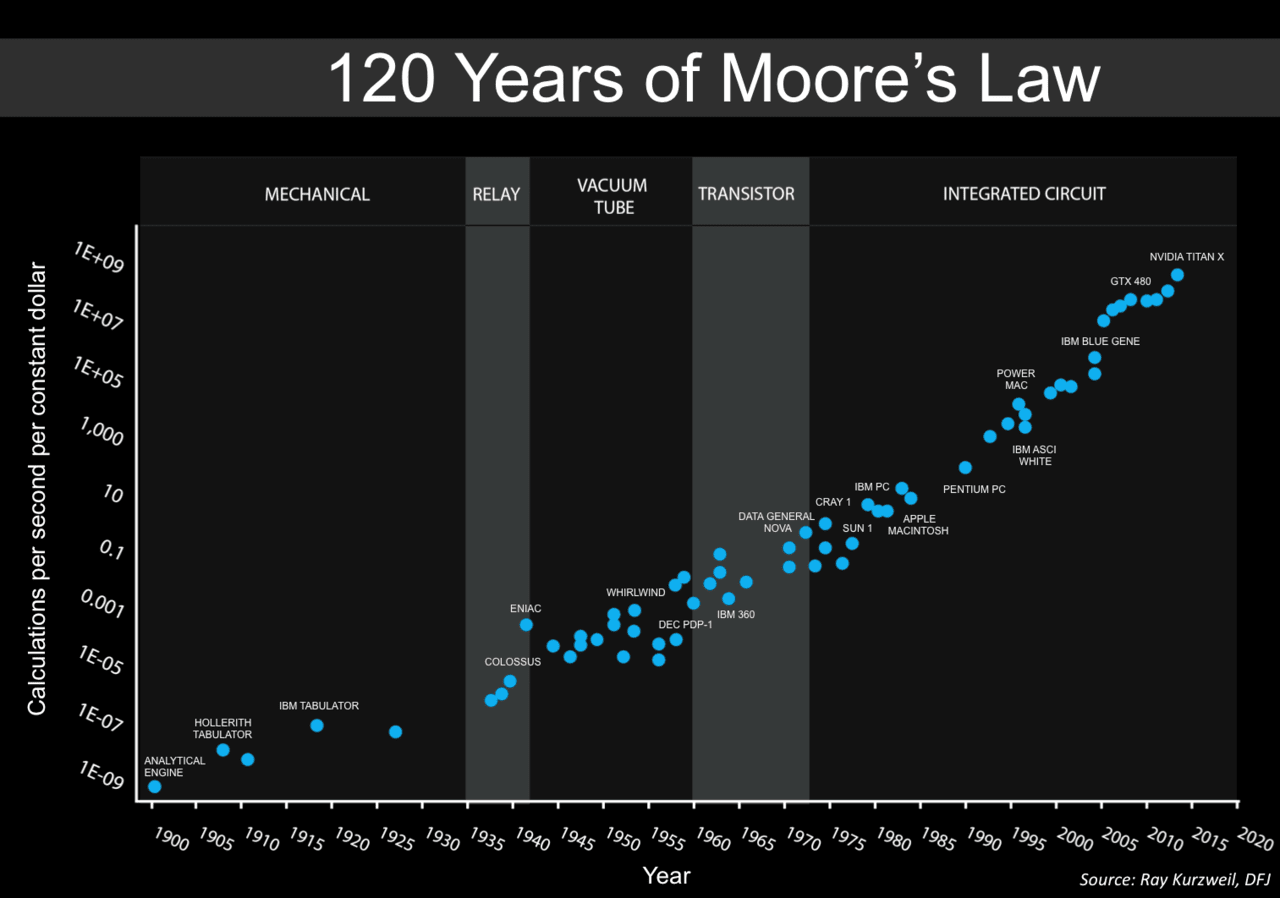

Right now, we have Moore’s law. Every couple of years, computers get about twice as powerful. It’s an empirical observation that’s held for many decades and across many technology generations. Whenever it runs into some physical limit, a newer paradigm replaces the old. Superconductors, multiple layers and molecular electronics may sweep aside the upcoming hurdles.

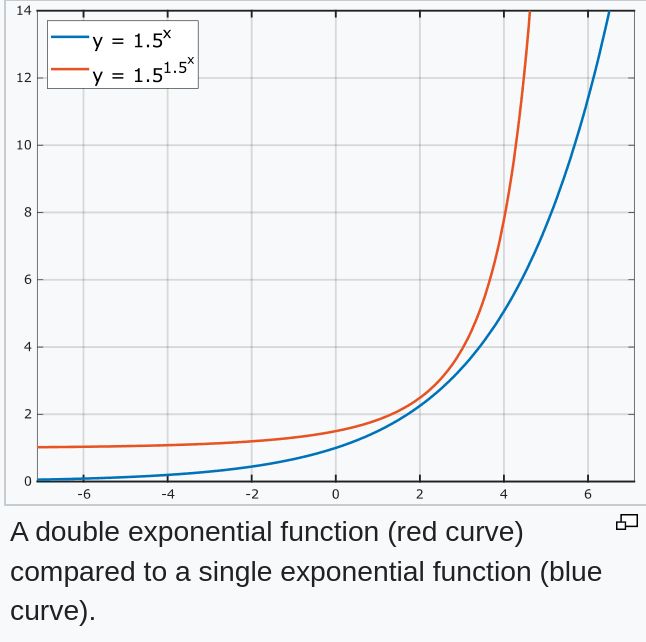

But imagine if the people doing the R&D themselves started working twice as fast every couple of years, as if time moved more slowly for them. We’d get doubly exponential improvement.

And this is what we’ll see once AI is doing the R&D instead of people.
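One way to make "doubly exponential" concrete is the toy calculation below. It is only a sketch under assumptions that are not in the post: capability and research speed share the same two-year doubling period, and progress depends only on accumulated research time. The function names are made up for illustration.

```python
import math

DOUBLING_PERIOD = 2.0  # years; assumed value for "every couple of years"


def doublings_fixed_speed(years: float) -> float:
    """Plain Moore's law: one capability doubling per period of calendar time."""
    return years / DOUBLING_PERIOD


def doublings_accelerating(years: float) -> float:
    """Researchers' speed also doubles every period (assumption).

    Research speed at time t is 2**(t / P), so the research time accumulated
    by calendar time t is the integral P / ln(2) * (2**(t / P) - 1).
    Capability doubles once per P units of research time.
    """
    p = DOUBLING_PERIOD
    research_years = p / math.log(2) * (2.0 ** (years / p) - 1.0)
    return research_years / p


for t in (0, 10, 20):
    print(t, doublings_fixed_speed(t), round(doublings_accelerating(t), 1))
```

Under these assumptions, 20 years gives 10 doublings at fixed research speed but roughly 1,476 doublings when research speed itself doubles. The number of doublings grows exponentially, so capability grows doubly exponentially.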

Infinitely powerful technology may give us limitless possibilities: revolutionizing medicine, increasing longevity, and bringing universal prosperity. But if it goes wrong, it can be infinitely bad.

Another reason recursion in AI scares people is wireheading. When a rat is allowed to push a button connected to the “reward” signal in its own brain, it will choose to do so, for days, ignoring all its other needs:

(image source: Wikipedia)

AI that is trained by human teachers giving it rewards may eventually wirehead as it becomes smarter and more powerful and its influence over its master increases. It may, in effect, develop the ability to push its own “reward” button. Thus, its behavior may become misaligned with whatever its developers intended.

Yoshua Bengio, one of the godfathers of deep learning, warned of superintelligent AI possibly coming soon. He wrote this on his blog recently:

A popular comment on the MachineLearning sub-reddit replied:

And it is in this spirit that I’m proposing the following baseline solution. I hope you’ll share it, so that more people can discuss it and improve it, if needed.

My solution tries to address two problems: the wireheading problem above, and the problem that much text on the Internet is wrong, and therefore language models trained on it cannot be trusted.

First, to avoid the wireheading possibilities, rewards and punishments should not be used at all. All alignment should come from the model’s prompt. For example, this one:

The following is a conversation between a superhuman AI and (… lots of other factual background). The AI’s purpose in life is to do and say what its creators, humans, in their pre-singularity state of mind, having considered things carefully, would have wanted: …

Technically, the conversation happens as this text is continued. When a human says something, this is added to the prompt. And when the AI says something, it’s predicting the next word. (Some special symbols could be used to denote whose turn it is to speak.)
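The loop described above could look roughly like the following. This is a minimal sketch, not a real system: the turn markers `[HUMAN]`, `[AI]` and `[END]` are hypothetical stand-ins for the "special symbols", and `toy_next_word` is a canned placeholder where an actual language model would predict the next word.

```python
from typing import Callable, List

# Hypothetical turn markers; the post only says "some special symbols".
HUMAN_TURN = "[HUMAN]"
AI_TURN = "[AI]"
END_OF_TURN = "[END]"

# Stand-in for a language model: text so far -> next word.
NextWord = Callable[[str], str]


def ai_reply(prompt: str, next_word: NextWord, max_words: int = 50) -> str:
    """Continue the text one word at a time until the model ends its turn."""
    text = prompt + "\n" + AI_TURN
    words: List[str] = []
    for _ in range(max_words):
        word = next_word(text)
        if word == END_OF_TURN:
            break
        words.append(word)
        text += " " + word
    return " ".join(words)


def converse(preamble: str, human_messages: List[str], next_word: NextWord) -> str:
    """Grow a single prompt: human text is appended, AI text is predicted."""
    prompt = preamble
    for message in human_messages:
        prompt += f"\n{HUMAN_TURN} {message} {END_OF_TURN}"
        reply = ai_reply(prompt, next_word)
        prompt += f"\n{AI_TURN} {reply} {END_OF_TURN}"
    return prompt


def toy_next_word(text: str) -> str:
    """Toy 'model' that always answers 'Hello there'; not a real predictor."""
    canned = ["Hello", "there"]
    said_so_far = text.rsplit(AI_TURN, 1)[1].split()
    if len(said_so_far) < len(canned):
        return canned[len(said_so_far)]
    return END_OF_TURN


transcript = converse("The following is a conversation ...", ["Hi"], toy_next_word)
print(transcript)
```

Note that nothing here involves rewards: the only thing steering the output is the text of the prompt that keeps growing.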

Prompt engineering per se is not new, of course. I’m just proposing a specific prompt here. The wording is somewhat convoluted, but I think that’s necessary. And as AIs get smarter, their comprehension of text will improve too.

There is a related concept of Coherent Extrapolated Volition (CEV). But according to Eliezer Yudkowsky, it suffers from being unimplementable and possibly divergent:

My proposal is just a prompt with no explicit optimization during inference.

Next is the problem of fictional, fabricated and plain wrong text on the Internet. AI that’s trained on it directly will always be untrustworthy.

Here’s what I think we could do. Internet text is vast – on the order of a trillion words. But we could label some of it as “true” and “false”. The rest will be “unknown”.

During text generation, we’ll clamp these labels and thereby ask the model to only generate “true” words. As AIs get smarter, their ability to correlate the “true”, “false” and “unknown” labels with the text will also improve.
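To illustrate what "clamping" a label might mean, here is a toy sketch. It assumes, beyond what the post specifies, that every training sentence carries exactly one label and that the model conditions each next word on that label. A count-based bigram table stands in for a real language model, and the three-sentence corpus is invented for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

LABELS = ("true", "false", "unknown")
START, STOP = "[START]", "[STOP]"

Model = Dict[Tuple[str, str], Counter]


def train(corpus: List[Tuple[str, str]]) -> Model:
    """Count next-word frequencies conditioned on (label, previous word)."""
    counts: Model = defaultdict(Counter)
    for label, sentence in corpus:
        if label not in LABELS:
            raise ValueError(f"unexpected label: {label}")
        words = [START] + sentence.split() + [STOP]
        for prev, nxt in zip(words, words[1:]):
            counts[(label, prev)][nxt] += 1
    return counts


def generate(model: Model, label: str = "true", max_words: int = 20) -> str:
    """Greedy generation with the label clamped to one fixed value."""
    word = START
    out: List[str] = []
    for _ in range(max_words):
        options = model.get((label, word))
        if not options:
            break
        word = options.most_common(1)[0][0]
        if word == STOP:
            break
        out.append(word)
    return " ".join(out)


# Invented toy corpus; real labeling would cover only part of the Internet text.
corpus = [
    ("true", "water boils at 100 C"),
    ("false", "water boils at 50 C"),
    ("unknown", "water is wet"),
]
model = train(corpus)
print(generate(model, label="true"))
print(generate(model, label="false"))
```

The same trained table produces different continuations depending on which label is clamped, which is the behavior the proposal relies on.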

I wanted to give something concrete and implementable for the AI doomers and anti-doomers alike to analyze and dissect.

Oleg Trott, PhD is a co-winner in the biggest ML competition ever, and the creator of the most cited molecular docking program. See olegtrott.com for details.


10 comments

Comments sorted by top scores.

comment by RogerDearnaley (roger-d-1) · 2024-07-17T02:19:32.312Z · LW(p) · GW(p)

> AI that is trained by human teachers, giving it rewards will eventually wirehead, as it becomes smarter and more powerful, and its influence over its master increases. It will, in effect, develop the ability to push its own “reward” button. Thus, its behavior will become misaligned with whatever its developers intended.

This seems like an unproven statement. Most humans are aware of the possibility of wireheading, both the actual wire version and the more practical versions involving psychotropic drugs. The great majority of humans don't choose to do that to themselves. Assuming that AI will act differently seems like an unproven assumption, one which might, for example, be justified for some AI capability levels but not others.

Replies from: oleg-trott↑ comment by Oleg Trott (oleg-trott) · 2024-07-17T02:37:44.743Z · LW(p) · GW(p)

> Most humans are aware of the possibility of wireheading, both the actual wire version and the more practical versions involving psychotropic drugs.

For humans, there are negative rewards for abusing drugs/alcohol -- hangover the next day, health issues, etc. You could argue that they are taking those into account.

But for an entirely RL-driven AI, wireheading has no anticipated downsides.

Replies from: roger-d-1↑ comment by RogerDearnaley (roger-d-1) · 2024-07-17T03:23:30.143Z · LW(p) · GW(p)

In practice, most current AIs are not constructed entirely by RL, partly because it has instabilities like this. For example, LLMs instruction-trained by RLHF use a KL-divergence loss term to limit how dramatically the RL can alter the base model behavior trained by SGD. So the result deliberately isn't pure RL.

Yes, if you take a not-yet intelligent agent, train it using RL, and give it unrestricted access to a simple positive reinforcement avenue unrelated to the behavior you actually want, it is very likely to "wire-head" by following that simple maximization path instead. So people do their best not to do that when working with RL.
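For readers unfamiliar with the KL term mentioned above, here is a small numeric sketch of one common way such an objective is written: expected reward minus a coefficient times the KL divergence from the base model. The two-action setup, the reward values and the coefficient `beta` are all assumptions chosen for illustration, not details from any particular RLHF system.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same actions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def penalized_objective(
    policy: Sequence[float],
    base: Sequence[float],
    rewards: Sequence[float],
    beta: float,
) -> float:
    """Expected reward minus beta times the divergence from the base model."""
    expected_reward = sum(pi * r for pi, r in zip(policy, rewards))
    return expected_reward - beta * kl_divergence(policy, base)


base = [0.5, 0.5]      # base model's action probabilities (assumed)
rewards = [0.0, 1.0]   # second action is the high-reward one (assumed)
greedy = [0.0, 1.0]    # policy that always takes the high-reward action

for beta in (0.1, 1.0):
    print(beta,
          penalized_objective(base, base, rewards, beta),
          penalized_objective(greedy, base, rewards, beta))
```

With a small `beta` the reward-maximizing policy scores higher than staying at the base model; with `beta = 1.0` in this toy setup it scores lower, so the penalty pulls the policy back toward the base behavior.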

Replies from: oleg-trott↑ comment by Oleg Trott (oleg-trott) · 2024-07-17T18:51:02.430Z · LW(p) · GW(p)

I think that regularization in RL is normally used to get more rewards (out-of-sample).

Sure, you can increase it further and do the opposite – subvert the goal of RL (and prevent wireheading).

But wireheading is not an instability, local optimum, or overfitting. It is in fact the optimal policy, if some of your actions let you choose maximum rewards.

Anyway, the quote you are referring to says “as (AI) becomes smarter and more powerful”.

It doesn’t say that every RL algorithm will wirehead (find the optimal policy), but that an ASI-level one will. I have no mathematical proof of this, since these are fuzzy concepts. I edited the original text to make it less controversial.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-07-16T21:04:44.031Z · LW(p) · GW(p)

I think your idea of labelling the source and epistemic status of all training data is good. I've seen the idea presented before. I don't think it's a knockdown solution to model reliability, but I expect it would help a lot.

Sorry that I don't remember exactly where I saw this discussed before. Hopefully if you web search for it you can find it.

Replies from: oleg-trott, oleg-trott↑ comment by Oleg Trott (oleg-trott) · 2024-07-17T21:28:08.766Z · LW(p) · GW(p)

> I think your idea of labelling the source and epistemic status of all training data is good. I've seen the idea presented before.

I'm not finding anything. Do you recall the authors? Presented at a conference? Year perhaps? Specific keywords? (I tried the obvious)

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-07-18T15:26:11.164Z · LW(p) · GW(p)

Hmmm. I don't remember. But here's a new example that Zvi just mentioned: https://arxiv.org/abs/2310.15047

Replies from: oleg-trott↑ comment by Oleg Trott (oleg-trott) · 2024-07-19T06:01:47.726Z · LW(p) · GW(p)

Thanks! It looks interesting. Although I think it's different from what I was talking about.

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-08-07T18:37:57.499Z · LW(p) · GW(p)

I've been continuing to think about this. I eventually remembered that the place I remembered the idea from was private conversations with other AI researchers! Sorry to have sent you on a wild goose chase!

It would be interesting to try an experiment with this. Perhaps doing hierarchical clustering on Open Web Text (an early not-too-huge dataset from GPT-2 days). Then get an LLM with a large context window to review a random subset of each cluster and write a description of it (including an estimate of factual validity). Then, when training, those descriptions would be non-predicted context given to the model. If you do use hierarchical clustering, this will result in a general description and some specific subtype descriptions for every datapoint.
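"Non-predicted context" is usually implemented by masking those tokens out of the training loss. The sketch below shows only that masking step, with made-up token log-probabilities; the helper names and the example description are hypothetical, and the clustering and description-writing stages are not shown.

```python
from typing import List, Sequence, Tuple


def build_example(
    description_tokens: Sequence[str], document_tokens: Sequence[str]
) -> Tuple[List[str], List[bool]]:
    """Prepend the cluster description and mark which tokens are context only."""
    tokens = list(description_tokens) + list(document_tokens)
    is_context = [True] * len(description_tokens) + [False] * len(document_tokens)
    return tokens, is_context


def masked_nll(token_log_probs: Sequence[float], is_context: Sequence[bool]) -> float:
    """Mean negative log-likelihood over tokens that are NOT context."""
    kept = [-lp for lp, ctx in zip(token_log_probs, is_context) if not ctx]
    if not kept:
        raise ValueError("no predicted tokens in this example")
    return sum(kept) / len(kept)


# Hypothetical description and document; log-probabilities are invented numbers.
tokens, is_context = build_example(["[desc]", "news"], ["a", "b", "c"])
log_probs = [-5.0, -5.0, -1.0, -2.0, -3.0]
print(masked_nll(log_probs, is_context))
```

The model still sees the description tokens as input, but how well it would have predicted them does not affect the loss.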

↑ comment by Oleg Trott (oleg-trott) · 2024-07-16T21:43:56.859Z · LW(p) · GW(p)

Yes, it's simple enough that I imagine it's likely people came up with it before. But it fixes a flaw in the other idea (which is also simple, although in the previous discussion I was told that it might be novel).