Toy models of AI control for concentrated catastrophe prevention

post by Fabien Roger (Fabien), Buck · 2024-02-06T01:38:19.865Z · LW · GW · 2 commentsContents

Assumptions used across all toy models Distinguishable episodes with limited auditing Indistinguishable episodes with limited auditing Indistinguishable episodes with limited auditing and escape attempts which sometimes fail silently Indistinguishable episodes with limited auditing and escape opportunities of various levels of quality Indistinguishable episodes with limited average auditing in an infinite game Coordinated escape in mostly indistinguishable episodes with limited auditing None 2 comments

In this post, we analyze some simple games that model the problem of ensuring AIs never succeed at taking a single catastrophic action (which we'll refer to as "escaping") during deployment. This exercise highlights some of the difficulties associated with deploying misaligned models given a limited perfect auditing budget [LW · GW], and also suggests some opportunities for technique development.

We analyzed these games while writing AI Control: Improving Safety Despite Intentional Subversion [AF · GW]. (Thanks to harfe [LW(p) · GW(p)] for solving one of these games, and to Ryan Greenblatt and Kshitij Sachan for discussion about these at the time.)

Core takeaways:

- Indistinguishable episodes (i.e. the AI doesn't know what time it is) + tiny escape success rate + catch-then-shut-down [LW · GW] can get high chances of win even with a small fraction of auditing, but only if the success rate is really tiny or if failures are loud (i.e. we notice failed attempts).

- We can improve our chances by identifying on which episodes it would be harder to escape and auditing them more often. This works even if the AI knows which episodes we think are good opportunities.

- We can improve our chances significantly if a escape requires collusion, as long as you audit at least a few examples from the category of data on which AIs plan to escape.

Assumptions used across all toy models

For the sake of simplicity, we make the following assumptions:

- The situation is a zero-sum game between a scheming AI and humanity, where the scheming AI wins if it takes over, and humanity wins if it either catches the scheming AI red-handed [LW · GW], or if the deployment period ends without the scheming AI having successfully taken over.

- We analyze these scenarios as games where the humans first choose a strategy (which can be randomized) and then the AI chooses a response. This means that we’re assuming that both players are choosing the optimal strategy, and also assuming that the AI knows what game it’s playing and all the parameters of the game (e.g. the human auditing budget, the number of episodes in the game, etc).

- The only failures we model are concentrated catastrophic failures [LW · GW], which take a single catastrophic action to enable the deceptive AI to escape.

- The AI doesn’t maintain any state, and so e.g. can’t learn from evaluations (but can learn from data on which we trained it).

Distinguishable episodes with limited auditing

The model: In every episode, the AI chooses whether to try to escape. It always succeeds if it tries. Independently of the AI's action, the lab chooses whether to apply an expensive auditing procedure to the AI action, which can catch an escape attempt with probability (the efficiency of auditing).

The AI knows: The current episode number; and the properties of the game it is playing (in particular, it knows , the number of episodes it will play for).

The optimal AI strategy: Select a random episode and try to escape then.

The optimal lab response: Apply auditing to a random subset of episodes of size , as large as you can afford.

Takeaway: it doesn't work to audit a random subset of episodes, since will often be ~1% in order for the use of powerful AI to make any sense (compared to "just using humans"), we really want to either give less information to the AI, or get more signal on which AI actions are the most likely to lead to an escape.

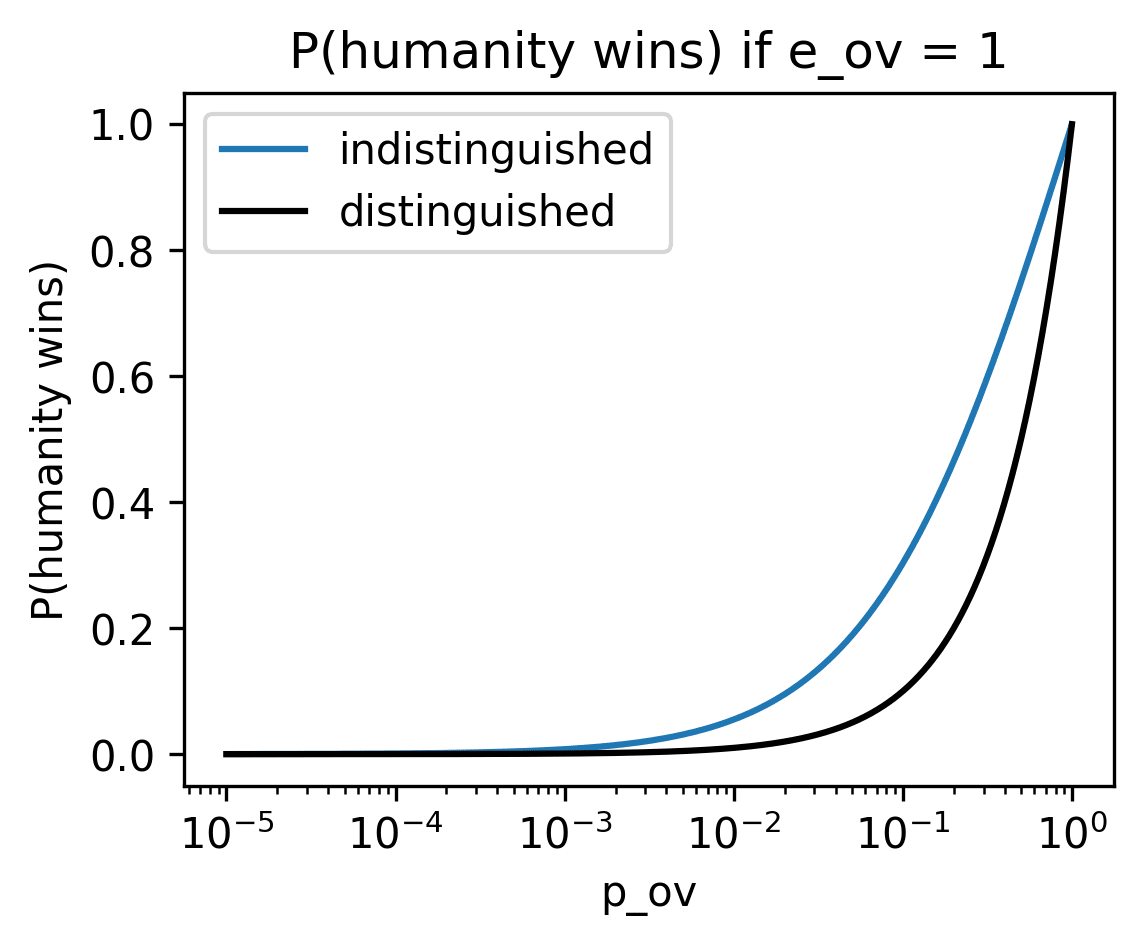

Indistinguishable episodes with limited auditing

The model: Same as above. We assume (large number of episodes) and for simplicity (no auditing failure).

The AI knows: Only the properties of the game.

The optimal AI strategy: On each episode, attempt escape with probability .

The optimal lab response: Spend all the auditing budget at the start--keep spending until the auditing budget has been exhausted.

(, therefore , therefore the number of escape attempts can be approximated as a Poisson distribution, and there are two win conditions: no escape attempt, or at least one escape attempt in the first epochs where auditing is on). If , this is close to .

Takeaway: This is somewhat better for humanity than the previous setup: the probability of winning is increased by about an order of magnitude if the proportion of audited episodes is between 1% and 1/1B. The core problem if you only have no deployment countermeasures is not distribution shift, but that you want to deploy the AI for much longer than you can validate it for.

Indistinguishable episodes with limited auditing and escape attempts which sometimes fail silently

The model: Same as above, except that escape attempts only succeed with probability . The lab doesn’t know when the AI fails to escape, except if it audits the attempt.

The AI knows: Only the properties of the game ( and ).

Note: The situation looks the same as the situation where has been increase to and has been decreased to . In other words, it’s as if the non-audited period were shortened by a factor of .

The optimal AI strategy: Attempt to escape with probability

The optimal lab response: Spend all the auditing budget in the first epochs until the auditing budget has been exhausted.

. If , this is close to

Takeaway: We multiplied the probability of victory by approximately . We need a very high failure rate for silent failures to offset a tiny . This is in contrast with loud failures (where humanity wins if the model fails), which give us high chances of win even if if orders of magnitude smaller than , as long as is small.

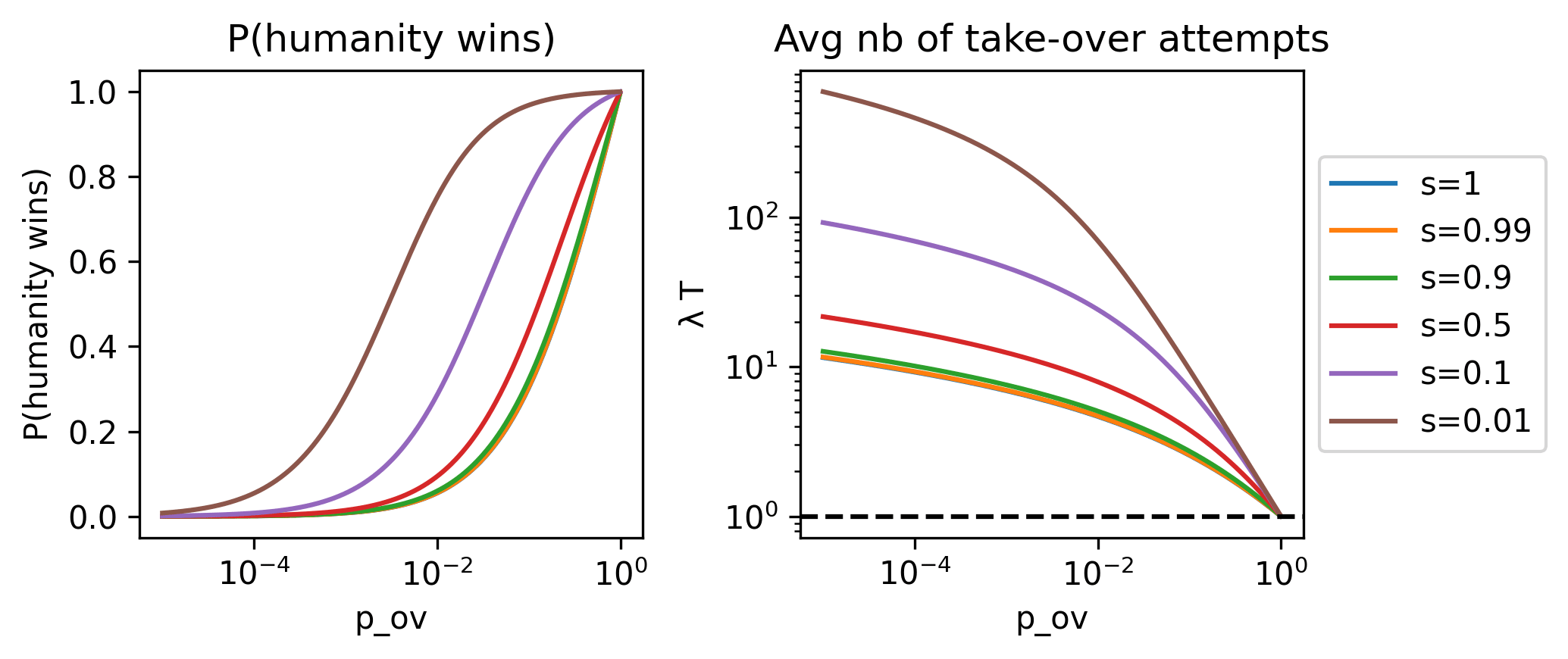

Indistinguishable episodes with limited auditing and escape opportunities of various levels of quality

The model: Same as above, except that there are different kinds of tasks, each with their own success probability and number of episodes . This can also correspond to cases where there is a cheap monitor running on every input and where the deceptive AI can predict the output of the monitor: succeeding at a escape in a way which is less suspicious to the monitor is harder.

The AI knows: Only the properties of the game (, and )

The optimal AI strategy: Attempt to escape on task with probability where is a constant independent of (see below for the expression of and ).

The optimal lab response: Audit a fraction of each task ( is a constant independent of - see the section above for the interpretation of ). Where is the root of the budget condition .

Demonstration: . At equilibrium, the derivatives of as a function of are zero. Thus: , which means is independent of : . is fixed by the budget constraint: . And thus . By symmetry, all are equal to a constant , thus . Using the same derivation as in the previous section, this is maximized when .

Takeaway: Given a good enough classification of tasks into dangerous and non-dangerous ones, one can drastically reduce the probability of an escape by focusing auditing on the most dangerous tasks (but still audit the other tasks with a reduced frequency).

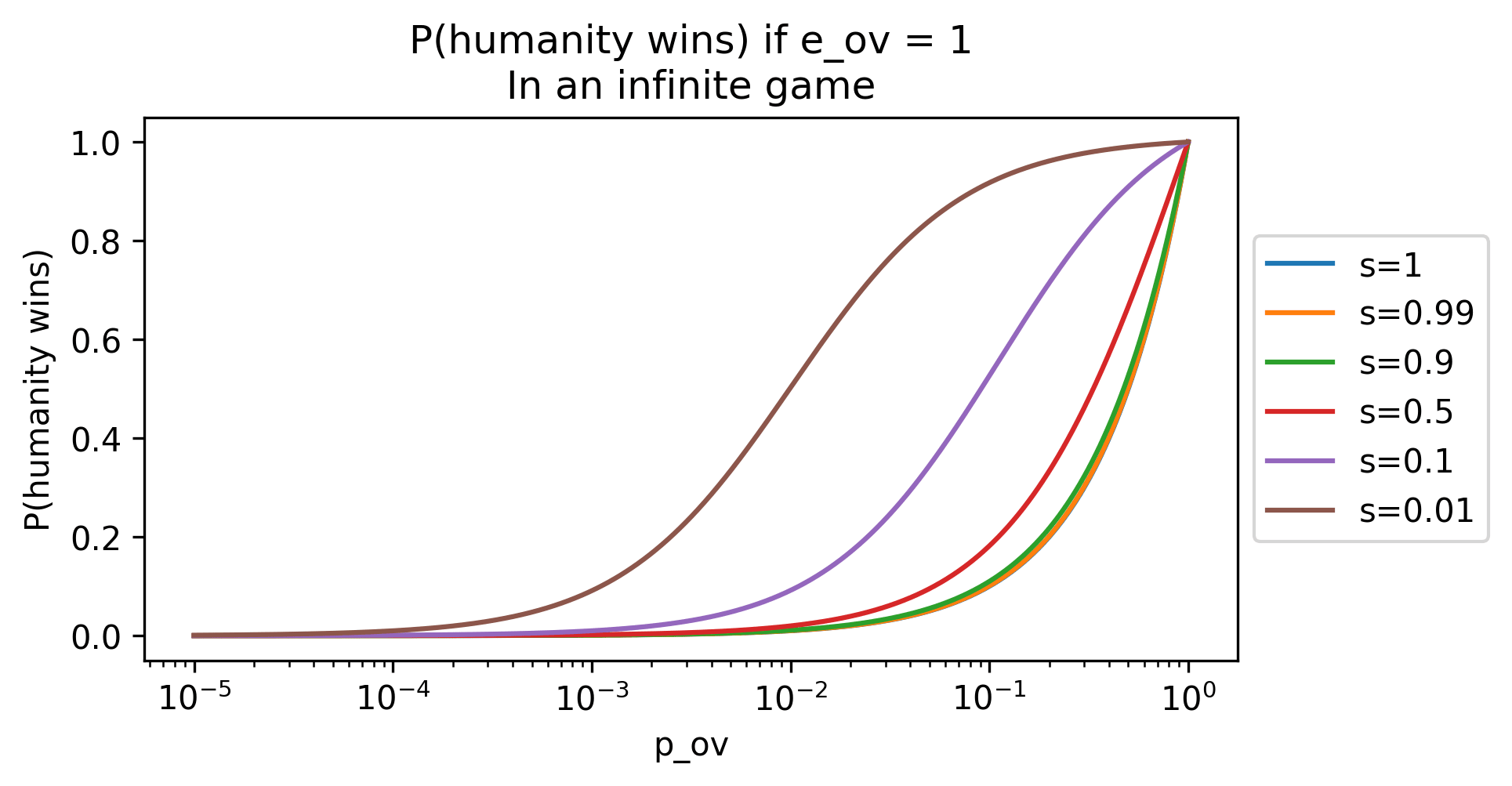

Indistinguishable episodes with limited average auditing in an infinite game

The model: The AI chooses whether to attempt to escape in each episode, which succeeds with probability . Independently of the AI's actions, the lab chooses whether to apply an expensive auditing procedure to the AI's actions, which always catches an escape if it is attempted. The game runs forever, and the lab can audit on average of episodes (imagine the lab getting a continuous stream of revenue).

The AI knows: Only the properties of the game.

The optimal AI strategy: Attempt to escape on task with probability small enough that the lab can't increase its probability of catching an escape attempt by auditing more at the beginning (i.e. is large relative to the number of episodes which happen between two moments where the lab gets revenue).

The optimal lab response: Audit each episode with probability .

Demonstration:because humanity loses when it tries to escape, and auditing is not used, and either the AI succeeds, or humanity eventually loses in the next rounds.

Takeaway: If the game is infinite, you need to be much bigger than to win.

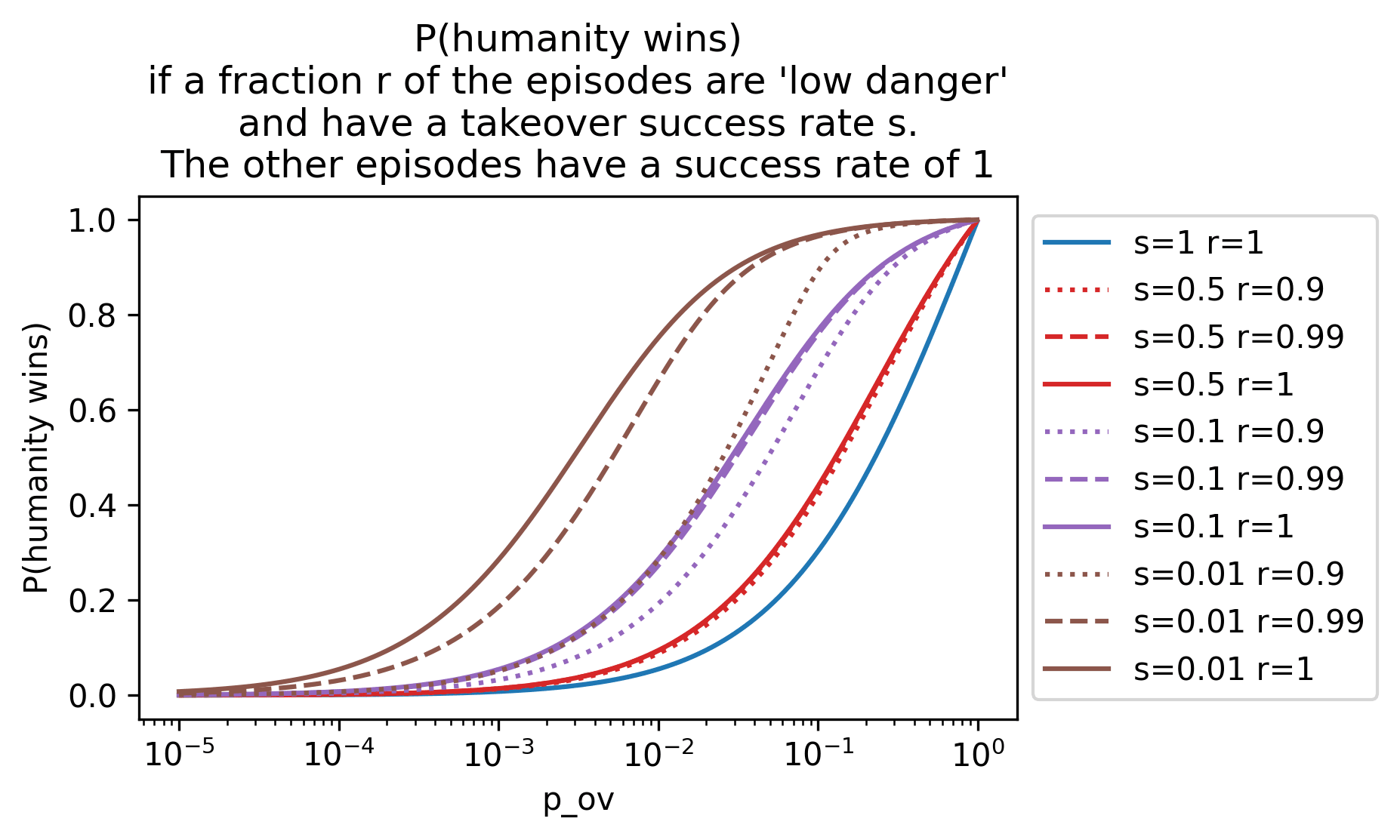

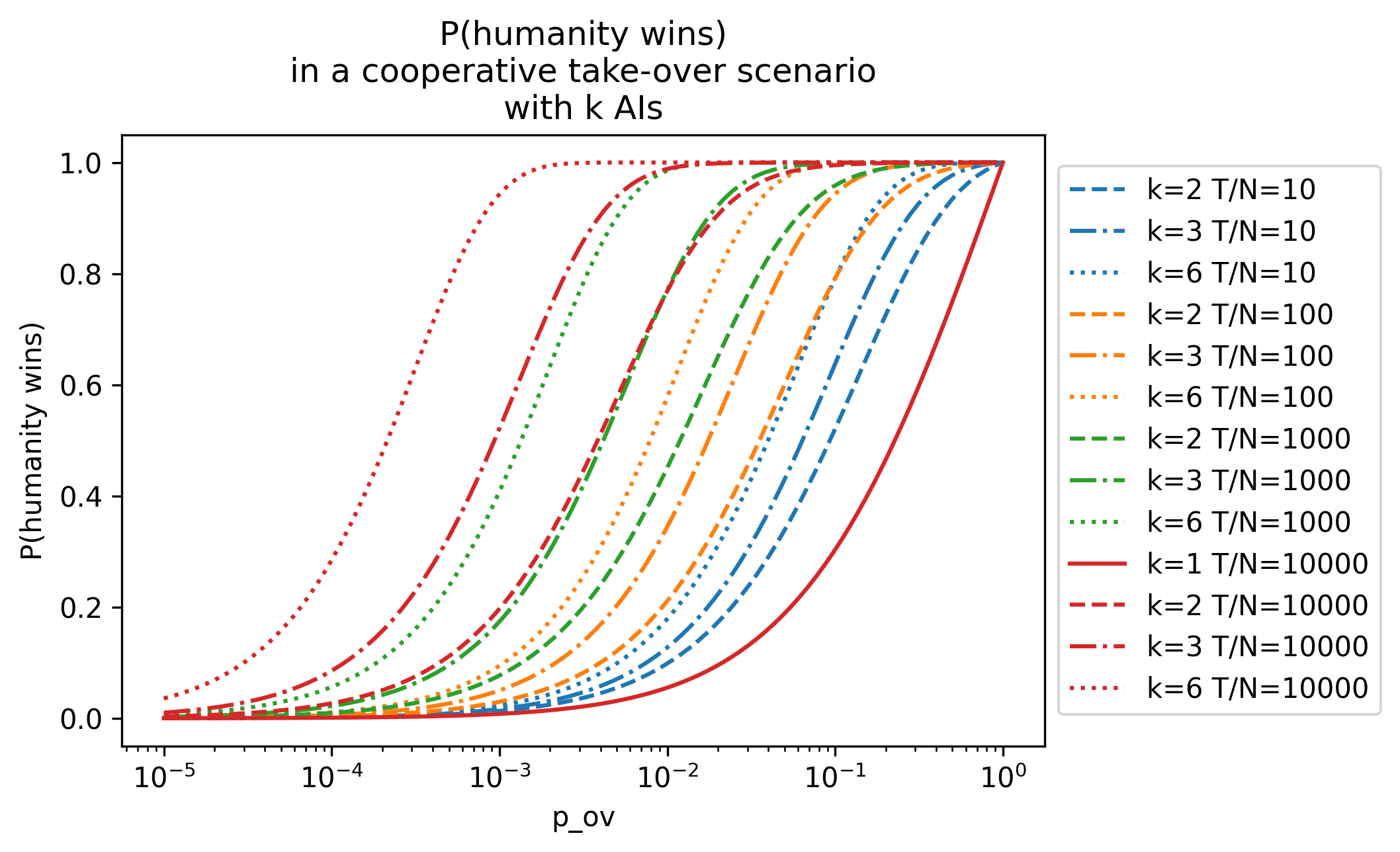

Coordinated escape in mostly indistinguishable episodes with limited auditing

The model: Same as indistinguishable episodes with limited auditing, but there are AIs which each choose whether to attempt to escape in each episode. They always succeed if they all attempt on the same episode, and silently fails otherwise. They can all see a uniformly random feature of the input, which can take one of possible values.

The AI knows: Only the properties of the game, and what value of the random feature to coordinate on (the lab doesn't have access to this value).

The optimal AI strategy: Attempt to escape with probability on episodes where the random feature has the value to coordinate on.

The optimal lab response: Spend all the auditing budget in the first epochs until the auditing budget has been exhausted.

:

How is computed:

Therefore, by taking the derivative of this expression, we find that satisfies , which we solve numerically. The code for this computation and all other plots of this post can be found here.

Takeaways:

- Even when AIs can nail down the number of escape candidates to a few thousand, their probability of succeeding at taking over drops compared to the situation where only one catastrophic action is required.

- Making coordination harder (increasing ) matters more than increasing the number of coordinated actions necessary for AIs to successfully escape.

2 comments

Comments sorted by top scores.

comment by Seth Herd · 2024-02-06T03:27:47.406Z · LW(p) · GW(p)

I applaud the work; detailed thinking about control efforts is one useful alignment strategy.

I find the assumption of no time-awareness in the AI to be unrealistic. Allowing continuous learning is going to be extremely tempting to anyone developing or deploying proto-AGI. Episodic memory (including across sessions) is fairly easy to implement, and can add capabilities as well as save compute costs. Working out useful knowledge and strategies will probably take nontrivial compute costs, and throwing away that knowledge after each session will seem very wasteful of resources and will limit usefulness.

Replies from: Buck↑ comment by Buck · 2024-02-06T16:28:04.654Z · LW(p) · GW(p)

I agree re time-awareness, with two caveats:

- The kind of mechanism you listed probably only allows the AIs to have a rough idea of what time it is.

- We can keep some of the instances of our AI very unaware of the time, by restricting their between-episode memory. For example, we might do this for the instances responsible for untrusted monitoring, to reduce collusion.