EIS II: What is “Interpretability”?

post by scasper · 2023-02-09T16:48:35.327Z


Part 2 of 12 in the Engineer’s Interpretability Sequence.

A parable based on a true story

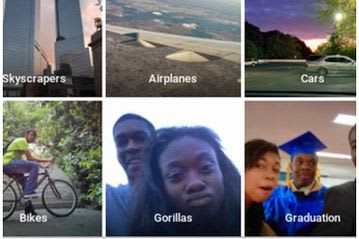

Remember Google’s infamous blunder from 2015 in which users found that one of its vision APIs often misclassified black people as gorillas? Consider a parable of two researchers who want to understand and tackle this issue.

- Alice is an extremely skilled mechanistic interpretability researcher who spends a heroic amount of effort analyzing Google’s model. She identifies a set of neurons and weights that seem to be involved in the detection and processing of human and gorilla faces and bodies. She develops a detailed mechanistic hypothesis and writes a paper about it with 5 different types of evidence for her interpretation. Later on, another researcher who wants to test Alice’s hypothesis edits the model in a way that the hypothesis suggests would fix the problem. As it turns out, the hypothesis was imperfect, and the model now classifies many images of gorillas as humans!

- Bob knows nothing about neural networks. Instead of analyzing the network, he looks at the dataset that the model was trained on and notices a striking lack of black people (as was indeed the case in real life; Krishnan, 2020). He suggests making the data more representative and training the model again. When this is done, it mostly fixes the problem without side effects.

The goal of this parable is to illustrate that when it comes to doing useful engineering work with models, a mechanistic understanding may not always be the best way to go. We shouldn’t think of something called “interpretability” as being fundamentally separate from other tools that can help us accomplish our goals with models. And we especially shouldn’t automatically privilege some methods over others. In some cases, highly involved and complex approaches may be necessary. But in other cases like Alice’s, the interesting, smart, and paper-able solution to the problem might not only be harder but could also be more failure-prone. This isn’t to say that Alice’s work could never lead to more useful insights down the road. But in this particular case, Alice’s smart approach was not as good as Bob’s simple one.

Interpretability is a means to an end.

Since I work on and think about interpretability every day, I have felt compelled to adopt a definition for it. In a previous draft of this post, I proposed defining an interpretability tool as “any method by which something novel about a system can be better predicted or described.” I think this is OK, but I have recently stopped caring about any particular definition. Instead, I think the important thing to understand is that “interpretability” is not a term of any fundamental importance to an engineer.

The key idea behind this post is that whatever we call “interpretability” tools are entirely fungible with other techniques related to describing, evaluating, debugging, etc.

Does this mean that calculating performance on a test set, generating an adversarial example, pruning a model, or making a prediction based on the dataset all count as interpretability? Pretty much. For all practical intents and purposes, these things are all of a certain common type. Consider any of the following sentences.

- This model handles 85% of the data correctly.

- This input plus whatever is in this adversarial perturbation makes the model fail.

- I got rid of 90% of the weights and the model’s performance only decreased by 2%.

- The dataset has this particular bias, so the model probably will as well.

- This model seems to have a circuit composed of these neurons and these weights that is responsible for X by…
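To make the “common type” point concrete, here is a toy sketch (not from the original post) in which three of the statements above come out of ordinary code that takes a model and data and returns a finding: evaluation on a dataset, magnitude pruning, and a check of the label distribution. The linear model, its weights, the four data points, and every function name are hypothetical and chosen only for illustration; magnitude pruning is just one of many possible pruning schemes.

```python
from collections import Counter

# Hypothetical toy model: a linear classifier that predicts 1 when w·x > 0.
Weights = list[float]
Example = tuple[list[float], int]


def predict(weights: Weights, x: list[float]) -> int:
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    return int(sum(w * xi for w, xi in zip(weights, x)) > 0)


def accuracy(weights: Weights, data: list[Example]) -> float:
    """Evaluation: fraction of examples the model classifies correctly."""
    correct = sum(predict(weights, x) == y for x, y in data)
    return correct / len(data)


def magnitude_prune(weights: Weights, fraction: float) -> Weights:
    """Pruning: zero out the given fraction of weights with the smallest |w|."""
    k = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    smallest = set(order[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


def label_shares(data: list[Example]) -> dict[int, float]:
    """Dataset analysis: share of each label, a crude check for imbalance."""
    counts = Counter(y for _, y in data)
    return {label: n / len(data) for label, n in sorted(counts.items())}


# Hypothetical weights and data, made up for this illustration.
weights = [2.0, -1.5, 0.1, 0.05]
data = [([1, 0, 1, 1], 1), ([0, 1, 0, 1], 0), ([1, 1, 1, 0], 1), ([0, 0, 1, 0], 1)]

# Each technique yields the same kind of thing: a statement about the model.
findings = [
    f"This model handles {accuracy(weights, data):.0%} of the data correctly.",
    f"With half the weights pruned, accuracy is "
    f"{accuracy(magnitude_prune(weights, 0.5), data):.0%}.",
    f"Label shares in the training data: {label_shares(data)}.",
]
for line in findings:
    print(line)
```

Each printed line is the same kind of object, a claim about how the model behaves or is likely to behave, which is roughly the sense in which the post treats these tools as interchangeable.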

All of these things are perfectly valid insights to use if they help us learn something we want to learn about a model or do something we want to do with it.

If this seems like pushing for a hastily broad understanding, consider the alternative. Suppose that we think of interpretability as distinct from other tools – perhaps because we care a lot about mechanistic understandings. Then the concept becomes a fairly arbitrary and limiting term with respect to our goals for it. Krishnan (2020) argues against such a definition:

> In many cases in which a black box problem is cited, interpretability is a means to a further end … Since addressing these problems need not involve something that looks like an “interpretation” (etc.) of an algorithm, the focus on interpretability artificially constrains the solution space by characterizing one possible solution as the problem itself. Where possible, discussion should be reformulated in terms of the ends of interpretability.

From an engineer’s perspective, it’s important not to grade different classes of solutions each on different curves. Any practical approach to interpretability must focus on eventually producing actionable insights that help us better design, develop, or deploy models. Anything that helps with this is fair game.

Mechanistic approaches to interpretability are not uniquely important for AI safety.

One objection to adopting a broad notion of interpretability might be that mechanistic notions of it seem uniquely useful for AI safety and hence worthy of unique attention. Mechanistic interpretability seems well-equipped for detecting ways that AI systems might secretly be waiting to betray us. To the extent that this is a concern (and it probably is a big one), wouldn’t that be a good reason to think of mechanistic interpretability separately from other approaches to engineering better models?

At this point, a debate over definitions is of fairly little importance. As long as we are clear and non-myopic, that’s all fine. But there are still two key things to emphasize.

First, there are many ways for AI to cause immense harm that don’t involve deceptive alignment. Recall that deceptive alignment failures are a subset of inner alignment failures, which are a subset of alignment failures, which are in turn a subset of safety failures. When it comes to issues that don’t stem from deception, we definitely should not restrict ourselves to mechanistic interpretability work.

Second, mechanistic interpretability is not uniquely useful for deceptive alignment. Better understanding how to address it will involve some further unpacking of the term “deception.” But for that discussion, please wait for EIS VIII :)

Questions

- Do you have a definition of interpretability that you find particularly satisfying or clarifying?

- Outside of the interpretability research space, do you know of other interesting examples of different techniques being graded on different curves?

6 comments


comment by DanielFilan · 2023-03-28T23:20:25.753Z

Re: the gorilla example, seems worth noting that the solution that was actually deployed ended up being refusing to classify anything as a gorilla, at least as of 2018 (perhaps things have changed since then).

↑ comment by DanielFilan · 2023-03-28T23:21:03.973Z

I guess this proves the superiority of the mechanistic interpretability technique "note that it is mechanistically possible for your model to say that things are gorillas" :P

comment by ryan_greenblatt · 2023-02-09T19:35:40.753Z

Can you give examples of alignment research which isn't interpretability research?

↑ comment by ryan_greenblatt · 2023-02-09T19:39:08.708Z

Fair enough if you're interested in just talking about 'approaches to acquiring information wrt. AIs' and you'd like to call this interpretability.

comment by willhath · 2024-01-09T12:55:09.962Z

> Outside of the interpretability research space, do you know of other interesting examples of different techniques being graded on different curves?

Electric vehicles? Early electric vehicles were worse than gas cars on every axis other than the theoretical promise of the technology. However, they were (and still are, e.g., Formula E) graded on separate curves. The fairly straightforward analogy I'm trying to make is that maybe it's worthwhile to treat early technologies gently, since I think most people are now pretty impressed by electric cars.

Although there are obviously significant differences here (consumer markets vs. helping engineers, etc.), I think this could be a useful metaphor for testing the reasonableness of the arguments in this sequence.