A Very Mathematical Explanation of Derivatives

post by Heighn · 2022-04-01T14:40:47.881Z · LW · GW

Contents: Linear functions · Polynomials (and more) · Product rule · Mathematical induction · Proof of the power rule for natural numbers · Chain rule · Constant multiple rule · Euler's number and the natural logarithm · General proof of the power rule · Local maxima, local minima and second derivatives · Multivariable functions · Partial derivatives

This post is meant for readers who are familiar with algebra and derivatives, but want to deepen their understanding and/or need a refresher.

Linear functions

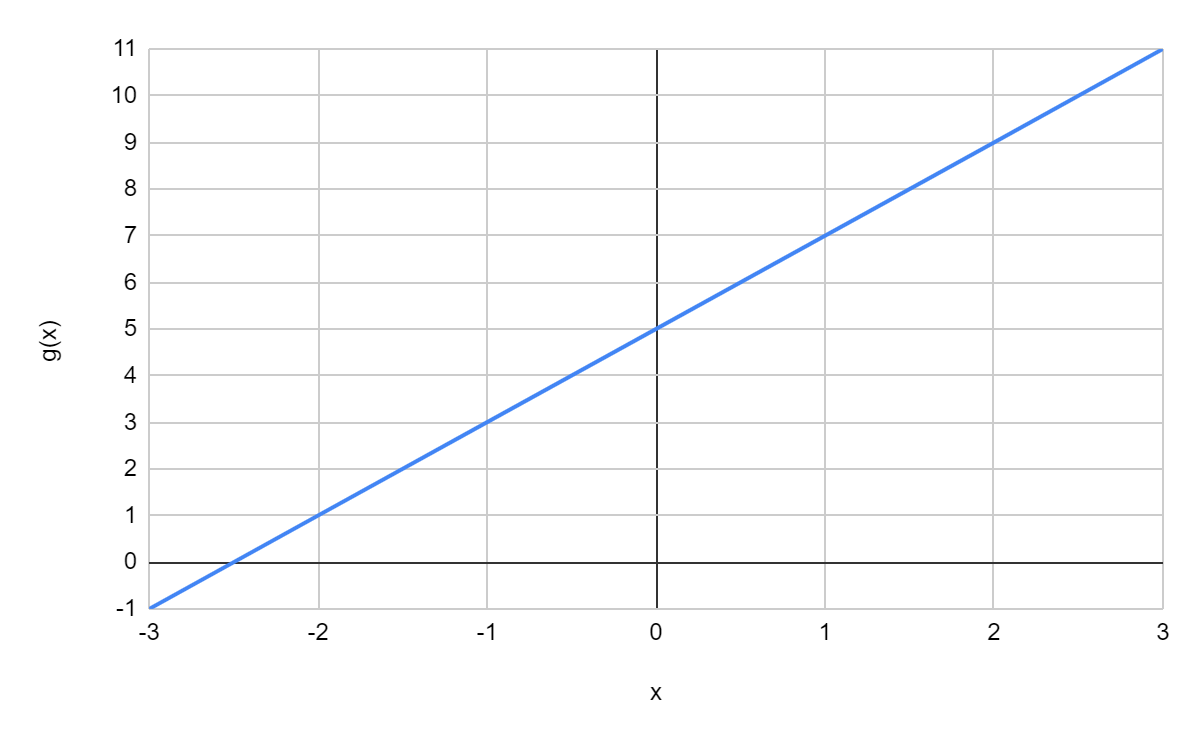

Let's start with a family of very basic functions: the linear functions, expressed as $f(x) = ax + b$. You might remember that the derivative is $f'(x) = a$, because $x$ is multiplied by $a$ and the constant $b$ "disappears" when taking the derivative. This is correct, but let's actually calculate the derivative. Since $f$ is a linear function, $f'(x)$ is the same for all $x$. That is, a linear function "goes up" with the same "speed" everywhere, as can be seen in the graph of such a function.

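For example, $f(x)$ increases by the same amount over every interval of length $1$, wherever that interval lies. Therefore, determining the average slope between two points $x$ and $x + h$ will do. The average slope between $x$ and $x + h$ is how much $f(x)$ increases between $x$ and $x + h$, divided by the difference between $x$ and $x + h$ (which is $h$). Let $h = 1$, in which case we don't have to do the division, as dividing by $1$ changes nothing. Filling in $ax + b$ for $f(x)$ and $a(x + 1) + b$ for $f(x + 1)$, we get:

$$f'(x) = f(x + 1) - f(x) = a(x + 1) + b - (ax + b) = a$$

There it is! $f'(x) = a$. So for any linear function, the derivative is simply the coefficient of $x$.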
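As a quick sanity check, here is a minimal Python sketch of the average-slope calculation for a linear function. The values of `a` and `b` and the sample points are hypothetical, picked only for illustration.

```python
def average_slope(f, x, h):
    """Average slope of f between x and x + h."""
    return (f(x + h) - f(x)) / h

# Hypothetical coefficients, chosen only for illustration.
a, b = 2.0, 1.0

def f(x):
    return a * x + b

# With h = 1 the division changes nothing, and the slope is a everywhere.
slopes = [average_slope(f, x, 1.0) for x in (-3.0, 0.0, 5.0, 40.0)]
print(slopes)
```

Whatever starting point is used, the average slope comes out as `a`.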

Polynomials (and more)

Polynomials are functions with the following form:

$$f(x) = a_n x^n + a_{n-1} x^{n-1} + \dots + a_1 x + a_0$$

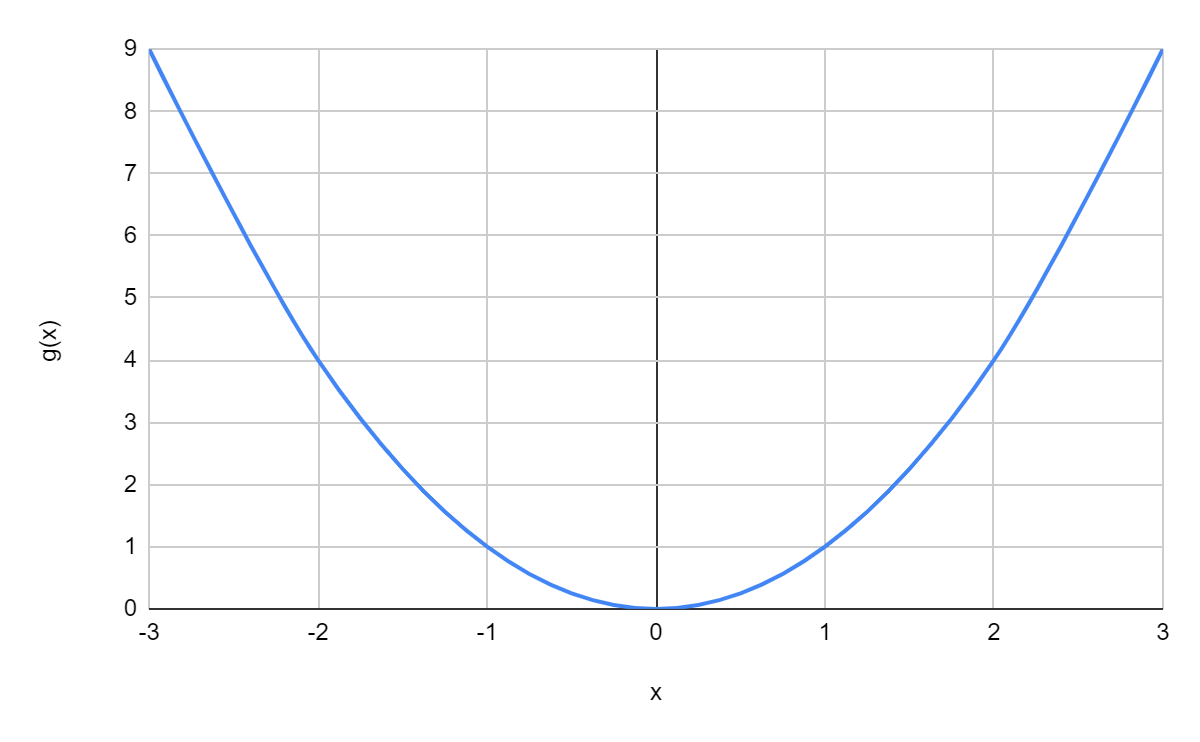

Determining their derivative is a bit trickier than determining the derivative of a linear function, because now, the derivative isn't necessarily the same everywhere. After all, take $f(x) = x^2$:

We can see this is a curved line, and so the derivative is constantly changing. We can still do something like the "trick" we did with linear functions, but we can't determine $f'(x)$ by looking at how $f(x)$ changes between $x$ and $x + 1$: that would assume $f'(x)$ is the same between $x$ and $x + 1$, which isn't true. For two points that are closer together, we would have a better estimate of $f'(x)$, but we'd still assume $f'(x)$ to be constant between these values. We need to determine how $f(x)$ changes between $x$ and some $x + h$, where $h$ needs to approach zero: the smaller $h$ gets, the more accurate our calculation for $f'(x)$ becomes. We can do this using limits:

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}$$

(Since $h$ isn't $1$ now, we need to do the division.) We can read this as follows: what value does $\frac{f(x + h) - f(x)}{h}$ approach when $h$ approaches $0$?

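Let's do this for the simple polynomial $f(x) = x^2$:

$$f'(x) = \lim_{h \to 0} \frac{(x + h)^2 - x^2}{h} = \lim_{h \to 0} \frac{x^2 + 2xh + h^2 - x^2}{h} = \lim_{h \to 0} \frac{2xh + h^2}{h} = \lim_{h \to 0} (2x + h)$$

When $h$ approaches $0$, $2x + h$ becomes $2x$:

$$f'(x) = \lim_{h \to 0} (2x + h) = 2x.$$

So for $f(x) = x^2$, $f'(x) = 2x$. You might have learned the general rule:

For $f(x) = ax^n$, $f'(x) = nax^{n-1}$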

This is known as the power rule, and it indeed works for $f(x) = x^2$, where $a = 1$ and $n = 2$ and $f'(x) = 2 \cdot 1 \cdot x^{2-1} = 2x$. It also works for the linear function $f(x) = ax$, where $n = 1$ and $x^0 = 1$: $f'(x) = 1 \cdot ax^0 = a$. But does it work in general? Yes, and we can prove it. Let's first prove it works for all natural numbers ($\mathbb{N}$): $n \in \mathbb{N}$. We need the product rule and mathematical induction for this proof though, so let's discuss those first.
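The limit definition can also be watched numerically. The sketch below is only an illustration, with a hypothetical sample point $x = 3$: it evaluates the difference quotient of $x^2$ for shrinking step sizes and shows it closing in on the value $2 \cdot 3 = 6$ that the power rule predicts.

```python
def difference_quotient(f, x, h):
    """Average slope of f between x and x + h."""
    return (f(x + h) - f(x)) / h

def square(x):
    return x ** 2

x = 3.0  # illustrative point; the power rule predicts f'(3) = 2 * 3 = 6
estimates = [(h, difference_quotient(square, x, h)) for h in (1.0, 0.1, 0.001, 1e-6)]
for h, slope in estimates:
    print(f"h = {h:g}: slope = {slope:.6f}")
```

Algebraically the quotient equals $2x + h$, so each estimate overshoots by roughly the step size, up to floating-point rounding.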

Product rule

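The product rule states that when $p(x) = f(x)g(x)$, $p'(x) = f'(x)g(x) + f(x)g'(x)$. We can show the product rule is correct by determining what $p'(x)$ should be using the original definition of the derivative:

$$p'(x) = \lim_{h \to 0} \frac{p(x + h) - p(x)}{h} = \lim_{h \to 0} \frac{f(x + h)g(x + h) - f(x)g(x)}{h}$$

Since we want to write $p'(x)$ as $f'(x)g(x) + f(x)g'(x)$, let's rewrite the numerator to include the terms $f(x + h) - f(x)$ and $g(x + h) - g(x)$:

$$p'(x) = \lim_{h \to 0} \frac{g(x)\,(f(x + h) - f(x)) + f(x + h)\,(g(x + h) - g(x))}{h}$$

and note that indeed,

$$g(x)\,(f(x + h) - f(x)) + f(x + h)\,(g(x + h) - g(x)) = f(x + h)g(x) - f(x)g(x) + f(x + h)g(x + h) - f(x + h)g(x) = f(x + h)g(x + h) - f(x)g(x),$$

which was our original numerator.

Simplifying $p'(x)$, we get

$$p'(x) = \lim_{h \to 0} g(x)\,\frac{f(x + h) - f(x)}{h} + \lim_{h \to 0} f(x + h)\,\frac{g(x + h) - g(x)}{h}$$

Since $g(x)$ doesn't contain $h$, we can take it outside the first limit term. We can also rewrite the second term:

$$p'(x) = g(x) \lim_{h \to 0} \frac{f(x + h) - f(x)}{h} + \lim_{h \to 0} f(x + h) \cdot \lim_{h \to 0} \frac{g(x + h) - g(x)}{h}$$

When $h$ approaches $0$, $f(x + h)$ becomes $f(x)$. Furthermore, by definition,

$$\lim_{h \to 0} \frac{f(x + h) - f(x)}{h} = f'(x)$$

and $\lim_{h \to 0} \frac{g(x + h) - g(x)}{h} = g'(x)$,

so we now have $p'(x) = g(x)f'(x) + f(x)g'(x) = f'(x)g(x) + f(x)g'(x)$,

which is the product rule!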
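To see the product rule at work on numbers, here is a small sketch. The factors $f(x) = x^2$ and $g(x) = 3x + 1$ and the point $x = 2$ are hypothetical choices for illustration, and the helper `derivative` uses a central difference, a slightly more accurate variant of the one-sided quotient in the definition above.

```python
def derivative(fn, x, h=1e-6):
    """Central-difference estimate of fn'(x) (an approximation, not an exact value)."""
    return (fn(x + h) - fn(x - h)) / (2 * h)

# Hypothetical factors, chosen only for illustration.
def f(x):
    return x ** 2

def g(x):
    return 3 * x + 1

def p(x):
    return f(x) * g(x)

x = 2.0
direct = derivative(p, x)                                       # differentiate the product itself
via_rule = derivative(f, x) * g(x) + f(x) * derivative(g, x)    # f'(x)g(x) + f(x)g'(x)
print(direct, via_rule)
```

Both numbers should agree with each other and with the exact value $2x(3x + 1) + 3x^2 = 40$ at $x = 2$, up to a small numerical error.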

Mathematical induction

Mathematical induction is a method for proving that something is true for all natural numbers $n$. Say we want to prove that some condition holds for every natural number $n$. We can do this by first showing the condition holds for $n = 0$. That's Step 1. Then, we show that if the condition holds for some $n$, it also holds for $n + 1$. That's Step 2: we assume the condition for $n$, and use that assumption to show the condition for $n + 1$.

So we now know that our condition holds for $n = 0$ and that if it holds for some $n$, it must also hold for $n + 1$. But then it holds for all natural numbers! Does our condition hold for $n = 3$? Yes! It holds for $n = 0$ by Step 1, so it holds for $n = 1$ by Step 2; but then, since it holds for $n = 1$, it also holds for $n = 2$, again by Step 2. Applying Step 2 one more time gives that the condition holds for $n = 3$ as well. And we can apply this process to every natural number!
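As a worked illustration of the two steps, here is a classic induction proof written out in LaTeX. The statement (the sum of the first $n$ natural numbers) is chosen here as an example and is not necessarily the one this post originally used.

```latex
\begin{align*}
\text{Claim: } & \sum_{i=0}^{n} i = \frac{n(n+1)}{2} \quad \text{for every natural number } n. \\
\text{Step 1 } (n = 0)\text{: } & \sum_{i=0}^{0} i = 0 = \frac{0 \cdot 1}{2}. \\
\text{Step 2: } & \text{assume } \sum_{i=0}^{n} i = \frac{n(n+1)}{2}. \text{ Then} \\
& \sum_{i=0}^{n+1} i = \frac{n(n+1)}{2} + (n+1) = \frac{n(n+1) + 2(n+1)}{2} = \frac{(n+1)(n+2)}{2}.
\end{align*}
```

The last expression is exactly the claim with $n + 1$ in place of $n$, which is what Step 2 requires.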

Proof of the power rule for natural numbers

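Using the product rule and mathematical induction, we can show that the power rule (for $f(x) = ax^n$, $f'(x) = nax^{n-1}$) works for all $n \in \mathbb{N}$.

Step 1 is to show this is true for $n = 0$. Yes: then $f(x) = ax^0 = a$, and $f'(x) = 0 \cdot ax^{-1} = 0$. (Since $f(x)$ is constant ($a$), its derivative is indeed $0$.)

Step 2 is to show that if for some $n$ and $f(x) = ax^n$, $f'(x) = nax^{n-1}$, then for $n + 1$ and $g(x) = ax^{n+1}$, $g'(x) = (n + 1)ax^n$.

We can write $ax^{n+1}$ as $ax^n \cdot x$. Then, define $u(x) = x$. Then $g(x) = f(x)u(x)$, and then the product rule says $g'(x) = f'(x)u(x) + f(x)u'(x)$. But by the assumption of Step 2, $f'(x) = nax^{n-1}$. Furthermore, $u'(x) = 1$. So $g'(x) = nax^{n-1} \cdot x + ax^n \cdot 1 = nax^n + ax^n = (n + 1)ax^n$, which is what we wanted to prove!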
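The induction argument translates almost literally into a recursive function. The sketch below is an illustration under stated assumptions: polynomials are represented as coefficient lists (index = power of $x$), and all helper names (`poly_mul`, `poly_add`, `trim`, `monomial`, `d_monomial`) are hypothetical. It computes the derivative of $ax^n$ using only Step 1 and the product rule, never the power rule itself.

```python
def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists (index = power of x)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def poly_add(p, q):
    """Add two polynomials given as coefficient lists."""
    n = max(len(p), len(q))
    p = p + [0] * (n - len(p))
    q = q + [0] * (n - len(q))
    return [pi + qi for pi, qi in zip(p, q)]

def trim(p):
    """Drop trailing zero coefficients, keeping at least one entry."""
    while len(p) > 1 and p[-1] == 0:
        p = p[:-1]
    return p

X = [0, 1]  # the polynomial u(x) = x, whose derivative is 1

def monomial(a, n):
    """Coefficient list of a * x^n."""
    return [0] * n + [a]

def d_monomial(a, n):
    """Derivative of a * x^n, built only from Step 1 and the product rule."""
    if n == 0:
        return [0]                      # Step 1: a constant has derivative 0
    f = monomial(a, n - 1)              # f(x) = a * x^(n-1)
    df = d_monomial(a, n - 1)           # induction hypothesis: f'(x)
    # Product rule for g(x) = f(x) * x: g'(x) = f'(x) * x + f(x) * 1
    return trim(poly_add(poly_mul(df, X), f))

print(d_monomial(2, 3))  # coefficients of the derivative of 2x^3
```

For $2x^3$ the result is the coefficient list of $6x^2$, matching $nax^{n-1}$.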

So we have shown the power rule works for $n \in \mathbb{N}$. We could extend this proof to e.g. cover negative integers for $n$ as well. But I'd like to use a different method of proof, one that proves the power rule works for $n \in \mathbb{R}$. For this, we first need to know the chain rule, the constant multiple rule, Euler's number and how to take the derivative of the natural logarithm.

Chain rule

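Define $k(x) = (x^m)^n$ for natural numbers $m$ and $n$. (Note this is distinct from $x^{(m^n)}$.) We want to determine its derivative. We could say $k(x) = x^{mn}$, which would make $k'(x) = mnx^{mn-1}$. This is true, but let's take the opportunity to study the chain rule. Define $f(x) = x^n$ and $g(x) = x^m$. We can then write $k(x)$ as $f(g(x))$. Then:

$$k'(x) = \lim_{h \to 0} \frac{k(x + h) - k(x)}{h} = \lim_{h \to 0} \frac{f(g(x + h)) - f(g(x))}{h}$$

Multiplying by $\frac{g(x + h) - g(x)}{g(x + h) - g(x)}$, which equals $1$ and is allowed if $g(x + h) - g(x) \neq 0$ (otherwise we are dividing by $0$), gives:

$$k'(x) = \lim_{h \to 0} \frac{f(g(x + h)) - f(g(x))}{h} \cdot \frac{g(x + h) - g(x)}{g(x + h) - g(x)}$$

or

$$k'(x) = \lim_{h \to 0} \frac{f(g(x + h)) - f(g(x))}{g(x + h) - g(x)} \cdot \lim_{h \to 0} \frac{g(x + h) - g(x)}{h}$$

Note that $\lim_{h \to 0} \frac{f(g(x + h)) - f(g(x))}{g(x + h) - g(x)} = f'(g(x))$ (as $h$ approaches $0$, $g(x + h)$ approaches $g(x)$), and $\lim_{h \to 0} \frac{g(x + h) - g(x)}{h} = g'(x)$. So we now have $k'(x) = f'(g(x))g'(x)$. That's the chain rule, and it holds whenever we can write a function $k(x)$ as $f(g(x))$. Originally, we said $k(x) = (x^m)^n$, with $f(x) = x^n$ and $g(x) = x^m$. Then $f'(x) = nx^{n-1}$ and $g'(x) = mx^{m-1}$. According to the chain rule, then, $k'(x) = n(x^m)^{n-1} \cdot mx^{m-1} = mnx^{mn-m+m-1} = mnx^{mn-1}$, which is also what we got by applying the power rule to $x^{mn}$.

Before, we temporarily assumed $g(x + h) - g(x) \neq 0$. What if $g(x + h) = g(x)$ for all sufficiently small $h$? Well, then $g'(x) = 0$, and $f(g(x + h)) - f(g(x)) = 0$. Then $k'(x) = 0$, and $f'(g(x))g'(x) = f'(g(x)) \cdot 0 = 0$. So the chain rule would still apply, as $k'(x) = f'(g(x))g'(x) = 0$.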
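A numerical check makes the chain rule concrete. The sketch below assumes the specific, hypothetical choice $g(x) = x^2$ and $f(x) = x^3$, so that $k(x) = (x^2)^3 = x^6$, and compares three routes to $k'(x)$ at an illustrative point. The helper `derivative` is a central-difference approximation, not an exact derivative.

```python
def derivative(fn, x, h=1e-6):
    """Central-difference estimate of fn'(x)."""
    return (fn(x + h) - fn(x - h)) / (2 * h)

def g(x):
    return x ** 2          # inner function (hypothetical choice)

def f(x):
    return x ** 3          # outer function (hypothetical choice)

def k(x):
    return f(g(x))         # (x^2)^3 = x^6, which is not x^(2^3) = x^8

x = 1.5
direct = derivative(k, x)                               # differentiate k itself
via_chain = derivative(f, g(x)) * derivative(g, x)      # f'(g(x)) * g'(x)
via_power_rule = 6 * x ** 5                             # power rule applied to x^6
print(direct, via_chain, via_power_rule)
```

All three values should agree up to numerical error. Note that the outer derivative is evaluated at $g(x)$, not at $x$.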

Constant multiple rule

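If $g(x) = cf(x)$ for a constant $c$, $g'(x) = cf'(x)$. This might make intuitive sense, but it also follows from the chain rule: define $u(x) = cx$, so that $g(x) = u(f(x))$ and $u'(x) = c$. Then $g'(x) = u'(f(x))f'(x) = cf'(x)$, which is the constant multiple rule. Indeed, this same rule also follows from the product rule: if $g(x) = cf(x)$, define $u(x) = c$. Then $g(x) = u(x)f(x)$ and $g'(x) = u'(x)f(x) + u(x)f'(x) = 0 \cdot f(x) + cf'(x) = cf'(x)$.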

Euler's number and the natural logarithm

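You might know Euler's number $e \approx 2.718$, which is chosen so that if $f(x) = e^x$, $f'(x) = e^x$. You may also remember the natural logarithm $\ln(x)$, where $e^{\ln(x)} = x$. What's the derivative of $\ln(x)$? We can find it with the chain rule! Define $f(x) = e^x$ and $g(x) = \ln(x)$. Then $k(x) = f(g(x)) = e^{\ln(x)}$, and applying the chain rule gives $k'(x) = f'(g(x))g'(x) = e^{\ln(x)}g'(x)$. But also, $k(x) = x$, so $k'(x) = 1$. So we learn $e^{\ln(x)}g'(x) = 1$, or $xg'(x) = 1$, and so $g'(x) = \frac{1}{x}$. So for $g(x) = \ln(x)$, $g'(x) = \frac{1}{x}$.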
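Both facts from this section can be checked numerically with Python's standard `math` module. This is a rough sketch: the sample points are hypothetical, and `derivative` is a central-difference approximation.

```python
import math

def derivative(fn, x, h=1e-6):
    """Central-difference estimate of fn'(x)."""
    return (fn(x + h) - fn(x - h)) / (2 * h)

results = []
for x in (0.5, 1.0, 2.0):  # illustrative sample points (must be positive for ln)
    d_exp = derivative(math.exp, x)   # should be close to e^x itself
    d_ln = derivative(math.log, x)    # should be close to 1 / x
    results.append((x, d_exp, d_ln))
    print(f"x = {x}: (e^x)' = {d_exp:.6f} vs e^x = {math.exp(x):.6f}; "
          f"(ln x)' = {d_ln:.6f} vs 1/x = {1 / x:.6f}")
```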

General proof of the power rule

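Now we're ready to prove the power rule (for $f(x) = ax^n$, $f'(x) = nax^{n-1}$) works for $n \in \mathbb{R}$ (with $x > 0$, so that $\ln(x)$ is defined). Let's rewrite $x^n$ as $e^{n\ln(x)}$. Then $f(x) = ae^{n\ln(x)}$. Define $g(x) = ae^x$ and $u(x) = n\ln(x)$. Then $f(x) = g(u(x))$, and via the chain rule (and the constant multiple rule) $f'(x) = g'(u(x))u'(x) = ae^{n\ln(x)} \cdot \frac{n}{x}$. Remember $e^{n\ln(x)} = x^n$, so $f'(x) = ax^n \cdot \frac{n}{x} = nax^{n-1}$, which is what we need to prove the power rule for $n \in \mathbb{R}$.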
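The rewrite of $x^n$ as $e^{n\ln(x)}$ can be tried out for exponents that are not natural numbers. In this sketch the coefficient, the point $x$ and the exponents are hypothetical values chosen for illustration, and $x$ is kept positive so that the logarithm is defined.

```python
import math

def derivative(fn, x, h=1e-6):
    """Central-difference estimate of fn'(x)."""
    return (fn(x + h) - fn(x - h)) / (2 * h)

a, x = 2.0, 1.7  # hypothetical coefficient and (positive) sample point
checks = []
for n in (0.5, -1.5, math.pi):
    # f(x) = a * x^n, written as a * e^(n ln x) as in the proof
    f = lambda t, n=n: a * math.exp(n * math.log(t))
    numeric = derivative(f, x)
    predicted = n * a * x ** (n - 1)   # what the power rule predicts
    checks.append((n, numeric, predicted))
    print(f"n = {n:.4f}: numeric = {numeric:.6f}, power rule = {predicted:.6f}")
```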

Local maxima, local minima and second derivatives

As you might know, polynomials can have local maxima (or peaks, where the graph first goes up and then goes down) and local minima (or valleys, where the graph first goes down and then goes up). When a graph goes up, the derivative is positive; when it goes down, the derivative is negative. At a peak, the derivative must be $0$! It's similar for valleys: the derivative is $0$ there, too. That means we can find local maxima and local minima by setting the derivative to $0$! For a given $f(x)$, we determine $f'(x)$ and solve $f'(x) = 0$ for $x$. Each solution is a candidate for a local maximum or minimum. Which is it? Well, note that at a local maximum, the derivative must be decreasing (through $0$): otherwise, the graph wouldn't first go up and then go down. But if the derivative is decreasing, the derivative of the derivative, called the second derivative (written $f''(x)$), is negative! Conversely, at a local minimum the derivative is increasing, so the second derivative is positive. So a point where $f'(x) = 0$ and $f''(x) < 0$ is a local maximum! (When $f''(x) = 0$ as well, this test is inconclusive: $f(x) = x^3$ has $f'(0) = 0$, but $x = 0$ is neither a peak nor a valley.)

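Now consider a function whose derivative is $0$ at two points, say $x_1$ and $x_2$. We have a candidate for a local maximum or minimum at $x_1$ and another at $x_2$. The sign of the second derivative tells them apart: if $f''(x_1) > 0$ and $f''(x_2) < 0$, we have a local minimum at $x_1$ and a local maximum at $x_2$.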
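Here is a small sketch of the second-derivative test in code. The cubic $f(x) = x^3 - 3x$ is a hypothetical example chosen for illustration (not necessarily the polynomial this post originally used). Its derivative $3x^2 - 3$ is $0$ at $x = -1$ and $x = 1$, and its second derivative is $6x$.

```python
def classify(second_derivative, x):
    """Second-derivative test at a point where the first derivative is 0."""
    value = second_derivative(x)
    if value > 0:
        return "local minimum"
    if value < 0:
        return "local maximum"
    return "inconclusive"

# Hypothetical example: f(x) = x^3 - 3x
def f_prime(x):
    return 3 * x ** 2 - 3

def f_second(x):
    return 6 * x

critical_points = [-1.0, 1.0]  # the solutions of 3x^2 - 3 = 0
for x in critical_points:
    assert f_prime(x) == 0     # confirm these really are critical points
    print(x, classify(f_second, x))
```

For this cubic, $x = -1$ comes out as a local maximum and $x = 1$ as a local minimum. For $f(x) = x^3$, whose second derivative is also $6x$, the same function reports "inconclusive" at $x = 0$.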

Multivariable functions

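Multivariable functions are functions with, well, more than one variable. Take for example a function of two variables, $f(x, y)$: filling in a value for $x$ and a value for $y$ gives a single value $f(x, y)$. Or we can take a function of three variables, $f(x, y, z)$.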

Partial derivatives

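A partial derivative of a multivariable function is determined by treating all but one variable like constants and taking the derivative with respect to the one variable left. For example, for a function $f(x, y)$, we can differentiate with respect to $x$ (written $\frac{\partial f}{\partial x}$), treating $y$ as a constant, and with respect to $y$ (written $\frac{\partial f}{\partial y}$), treating $x$ as a constant. More generally, for any 2-variable function $f(x, y)$, $\frac{\partial f}{\partial x} = \lim_{h \to 0} \frac{f(x + h, y) - f(x, y)}{h}$ and $\frac{\partial f}{\partial y} = \lim_{h \to 0} \frac{f(x, y + h) - f(x, y)}{h}$. Filling in a concrete $f(x, y)$ and simplifying the limit gives the same result as treating the other variable as a constant.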
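As an illustration, the sketch below estimates both partial derivatives numerically by nudging one variable while holding the other fixed. The function $f(x, y) = x^2y + y^3$ and the point $(2, 3)$ are hypothetical choices, and the helpers use a central difference rather than the one-sided limit written above.

```python
def partial_x(f, x, y, h=1e-6):
    """Estimate of the partial derivative with respect to x: y is held constant."""
    return (f(x + h, y) - f(x - h, y)) / (2 * h)

def partial_y(f, x, y, h=1e-6):
    """Estimate of the partial derivative with respect to y: x is held constant."""
    return (f(x, y + h) - f(x, y - h)) / (2 * h)

# Hypothetical example function, chosen only for illustration.
def f(x, y):
    return x ** 2 * y + y ** 3

x, y = 2.0, 3.0
dx = partial_x(f, x, y)   # treating y as a constant gives 2xy
dy = partial_y(f, x, y)   # treating x as a constant gives x^2 + 3y^2
print(dx, dy)
```

At $(2, 3)$ the exact values are $2xy = 12$ and $x^2 + 3y^2 = 31$, so the printed estimates should be very close to those.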
