Superintelligence 22: Emulation modulation and institutional design

post by KatjaGrace · 2015-02-10T02:06:01.155Z · LW · GW · Legacy · 11 commentsContents

Summary Another view Notes In-depth investigations How to proceed None 11 comments

This is part of a weekly reading group on Nick Bostrom's book, Superintelligence. For more information about the group, and an index of posts so far see the announcement post. For the schedule of future topics, see MIRI's reading guide.

Welcome. This week we discuss the twenty-second section in the reading guide: Emulation modulation and institutional design.

This post summarizes the section, and offers a few relevant notes, and ideas for further investigation. Some of my own thoughts and questions for discussion are in the comments.

There is no need to proceed in order through this post, or to look at everything. Feel free to jump straight to the discussion. Where applicable and I remember, page numbers indicate the rough part of the chapter that is most related (not necessarily that the chapter is being cited for the specific claim).

Reading: “Emulation modulation” through “Synopsis” from Chapter 12.

Summary

- Emulation modulation: starting with brain emulations with approximately normal human motivations (the 'augmentation' method of motivation selection discussed on p142), and potentially modifying their motivations using drugs or digital drug analogs.

- Modifying minds would be much easier with digital minds than biological ones

- Such modification might involve new ethical complications

- Institution design (as a value-loading method): design the interaction protocols of a large number of agents such that the resulting behavior is intelligent and aligned with our values.

- Groups of agents can pursue goals that are not held by any of their constituents, because of how they are organized. Thus organizations might be intentionally designed to pursue desirable goals in spite of the motives of their members.

- Example: a ladder of increasingly intelligent brain emulations, who police those directly above them, with equipment to advantage the less intelligent policing ems in these interactions.

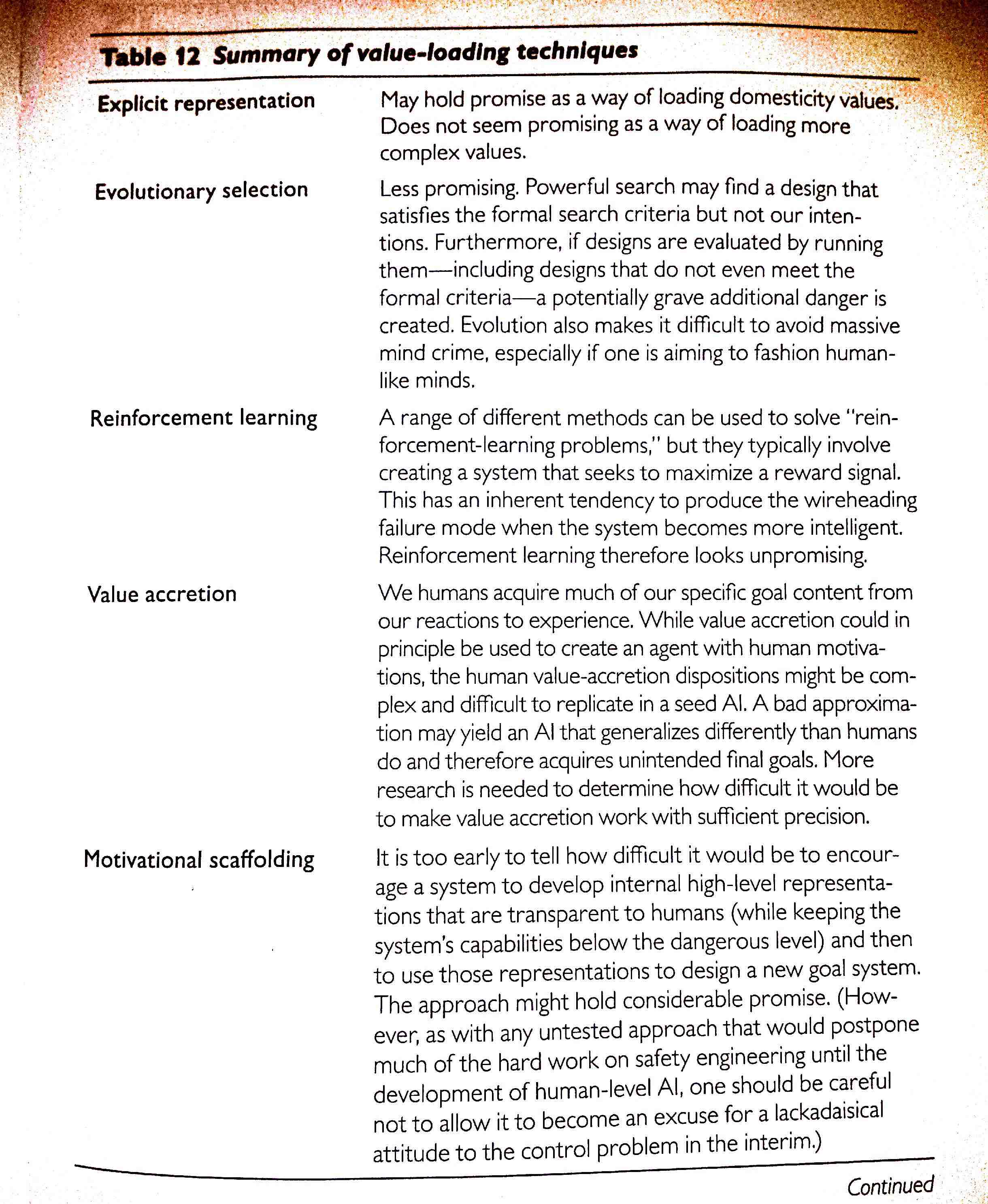

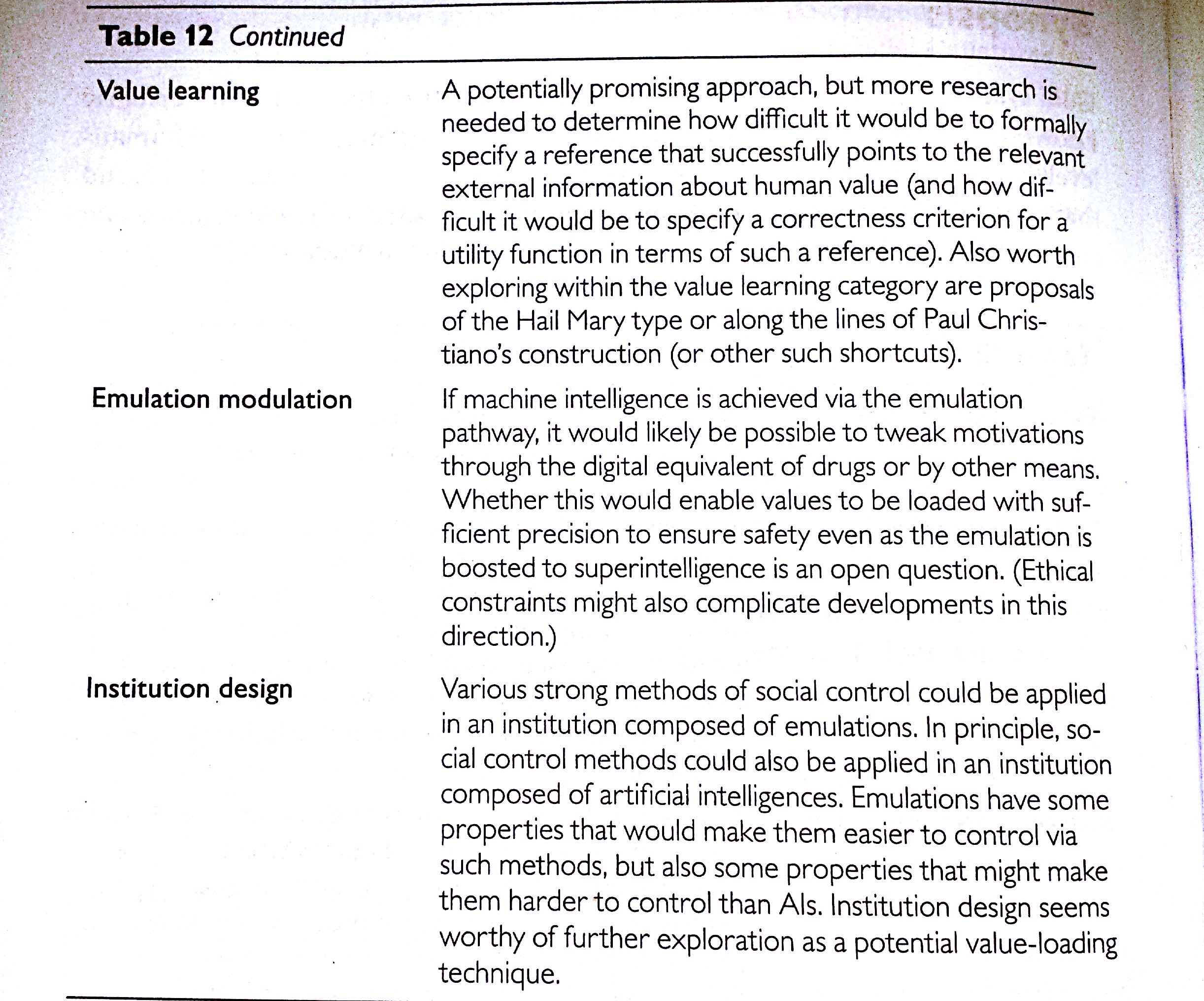

The chapter synopsis includes a good summary of all of the value-loading techniques, which I'll remind you of here instead of re-summarizing too much:

Another view

Robin Hanson also favors institution design as a method of making the future nice, though as an alternative to worrying about values:

On Tuesday I asked my law & econ undergrads what sort of future robots (AIs computers etc.) they would want, if they could have any sort they wanted. Most seemed to want weak vulnerable robots that would stay lower in status, e.g., short, stupid, short-lived, easily killed, and without independent values. When I asked “what if I chose to become a robot?”, they said I should lose all human privileges, and be treated like the other robots. I winced; seems anti-robot feelings are even stronger than anti-immigrant feelings, which bodes for a stormy robot transition.

At a workshop following last weekend’s Singularity Summit two dozen thoughtful experts mostly agreed that it is very important that future robots have the right values. It was heartening that most were willing accept high status robots, with vast impressive capabilities, but even so I thought they missed the big picture. Let me explain.

Imagine that you were forced to leave your current nation, and had to choose another place to live. Would you seek a nation where the people there were short, stupid, sickly, etc.? Would you select a nation based on what the World Values Survey says about typical survey question responses there?

I doubt it. Besides wanting a place with people you already know and like, you’d want a place where you could “prosper”, i.e., where they valued the skills you had to offer, had many nice products and services you valued for cheap, and where predation was kept in check, so that you didn’t much have to fear theft of your life, limb, or livelihood. If you similarly had to choose a place to retire, you might pay less attention to whether they valued your skills, but you would still look for people you knew and liked, low prices on stuff you liked, and predation kept in check.

Similar criteria should apply when choosing the people you want to let into your nation. You should want smart capable law-abiding folks, with whom you and other natives can form mutually advantageous relationships. Preferring short, dumb, and sickly immigrants so you can be above them in status would be misguided; that would just lower your nation’s overall status. If you live in a democracy, and if lots of immigration were at issue, you might worry they could vote to overturn the law under which you prosper. And if they might be very unhappy, you might worry that they could revolt.

But you shouldn’t otherwise care that much about their values. Oh there would be some weak effects. You might have meddling preferences and care directly about some values. You should dislike folks who like the congestible goods you like and you’d like folks who like your goods that are dominated by scale economics. For example, you might dislike folks who crowd your hiking trails, and like folks who share your tastes in food, thereby inducing more of it to be available locally. But these effects would usually be dominated by peace and productivity issues; you’d mainly want immigrants able to be productive partners, and law-abiding enough to keep the peace.

Similar reasoning applies to the sort of animals or children you want. We try to coordinate to make sure kids are raised to be law-abiding, but wild animals aren’t law abiding, don’t keep the peace, and are hard to form productive relations with. So while we give lip service to them, we actually don’t like wild animals much.

A similar reasoning should apply what future robots you want. In the early to intermediate era when robots are not vastly more capable than humans, you’d want peaceful law-abiding robots as capable as possible, so as to make productive partners. You might prefer they dislike your congestible goods, like your scale-economy goods, and vote like most voters, if they can vote. But most important would be that you and they have a mutually-acceptable law as a good enough way to settle disputes, so that they do not resort to predation or revolution. If their main way to get what they want is to trade for it via mutually agreeable exchanges, then you shouldn’t much care what exactly they want.

The later era when robots are vastly more capable than people should be much like the case of choosing a nation in which to retire. In this case we don’t expect to have much in the way of skills to offer, so we mostly care that they are law-abiding enough to respect our property rights. If they use the same law to keep the peace among themselves as they use to keep the peace with us, we could have a long and prosperous future in whatever weird world they conjure. In such a vast rich universe our “retirement income” should buy a comfortable if not central place for humans to watch it all in wonder.

In the long run, what matters most is that we all share a mutually acceptable law to keep the peace among us, and allow mutually advantageous relations, not that we agree on the “right” values. Tolerate a wide range of values from capable law-abiding robots. It is a good law we should most strive to create and preserve. Law really matters.

Hanson engages in more debate with David Chalmers' paper on related matters.

Notes

1. Relatively much has been said on how the organization and values of brain emulations might evolve naturally, as we saw earlier. This should remind us that the task of designing values and institutions is complicated by selection effects.

2. It seems strange to me to talk about the 'emulation modulation' method of value loading alongside the earlier less messy methods, because they seem to be aiming at radically different levels of precision (unless I misunderstand how well something like drugs can manipulate motivations). For the synthetic AI methods, it seems we were concerned about subtle differences in values that would lead to the AI behaving badly in unusual scenarios, or seeking out perverse instantiations. Are we to expect there to be a virtual drug that changes a human-like creature from desiring some manifestation of 'human happiness' which is not really what we would want to optimize on reflection, to a truer version of what humans want? It seems to me that if the answer is yes, at the point when human-level AI is developed, then it is very likely that we have a great understanding of specifying values in general, and this whole issue is not much of a problem.

3. Brian Tomasik discusses the impending problem of programs experiencing morally relevant suffering in an interview with Dylan Matthews of Vox. (p202)

4. If you are hanging out for a shorter (though still not actually short) and amusing summary of some of the basics in Superintelligence, Tim Urban of WaitButWhy just wrote a two part series on it.

5. At the end of this chapter about giving AI the right values, it is worth noting that it is mildly controversial whether humans constructing precise and explicitly understood AI values is the key issue for the future turning out well. A few alternative possibilities:

- A few parts of values matter a lot more than the rest —e.g. whether the AI is committed to certain constraints (e.g. law, property rights) such that it doesn't accrue all the resources matters much more than what it would do with its resources (see Robin above).

- Selection pressures determine long run values anyway, regardless of what AI values are like in the short run. (See Carl Shulman opposing this view).

- AI might learn to do what a human would want without goals being explicitly encoded (see Paul Christiano).

In-depth investigations

If you are particularly interested in these topics, and want to do further research, these are a few plausible directions, some inspired by Luke Muehlhauser's list, which contains many suggestions related to parts of Superintelligence. These projects could be attempted at various levels of depth.

- What other forms of institution design might be worth investigating as means to influence the outcomes of future AI?

- How feasible might emulation modulation solutions be, given what is currently known about cognitive neuroscience?

- What are the likely ethical implications of experimenting on brain emulations?

- How much should we expect emulations to change in the period after they are first developed? Consider the possibility of selection, the power of ethical and legal constraints, and the nature of our likely understanding of emulated minds.

How to proceed

This has been a collection of notes on the chapter. The most important part of the reading group though is discussion, which is in the comments section. I pose some questions for you there, and I invite you to add your own. Please remember that this group contains a variety of levels of expertise: if a line of discussion seems too basic or too incomprehensible, look around for one that suits you better!

Next week, we will start talking about how to choose what values to give an AI, beginning with 'coherent extrapolated volition'. To prepare, read “The need for...” and “Coherent extrapolated volition” from Chapter 13. The discussion will go live at 6pm Pacific time next Monday 16 February. Sign up to be notified here.

11 comments

Comments sorted by top scores.

comment by diegocaleiro · 2015-02-12T17:14:57.954Z · LW(p) · GW(p)

I continue worried with what I posted last week: the fragility of human minds. The more I learn about social endocrynology, glands, neurotransmitters and cognitive neuroscience, the more I notice that the alleged "robustness" of cognition, usually attributed to a combination of plasticity and redundancy, is only robust to the sorts of challenges and problems an animal brain may encounter, problems like firmly memorizing the name of your significant other, hunting in different environments, getting old, and internal bleeding.

But if we had emulations, the sorts of shifts, tweaks and twists that can be done are numerously more than that, and they could well act on subsets of the mind which have no robustness at all, to Minsky's dismay. The fear here is that evolution selected for some kinds of robustness, and completely did not worry about others, and we will soon be able to modify minds in that way, inadvertently so.

For the second time while going through these posts: the more I think about Superintelligence and delve into it's literature, the more skeptical I'm becoming that we will make it through. Everything seems so brittle.

Replies from: KatjaGrace↑ comment by KatjaGrace · 2015-02-12T17:45:26.743Z · LW(p) · GW(p)

To check I understand: you are saying lack of robustness will make it easy to modify minds a lot?

Replies from: diegocaleiro↑ comment by diegocaleiro · 2015-02-12T19:44:01.261Z · LW(p) · GW(p)

I'm saying that the kind of robustness which minds/brains are famous for is not sufficient once you have a digital version of the brain, where the things you will change are of a different nature.

So current squishy minds and brains are robust, but they would not be robust once virtually implemented.

Responding to Paul's related skepticism in my other post:

But that seems to make it easier to specify a person precisely, not harder. The differences in observations allow someone to quickly rule out alternative models by observations of people. Indeed, the way in which a human brain is physically represented makes little difference to the kind of predictions someone would make.

There are many ways of creating something inside a virtual black box that does - as seen from the outside - what my brain does here on earth. Let's go through a few and see where their robustness fails:

1) Scan copy my brain and put it in there.

Failures:

a) You may choose to scan the wrong level of granularity, say, synapses instead of granular cells, neural columns instead of 3d voxels, molecular gates instead of quantum data relevant to microtubule distribution.

b) You may scan the right level of granularity - hoping there is only one! - and fail to find a translation schema for transducers and effectors, the ways in which the box interacts with the outer world.

2) Use an indirect method similar to those Paul described which vastly constrains the output a human can generate (like a typing board which ignores the rest), create a model of the human based on it, when the distinction between the doppelganger model and actor falls below a certain Epsilon, consider that model equivalent to the human and use it.

Failures:

a) It may turn out to be the case that having a brain like neural network/Markov network is actually the most predictive way of simulating a human, so you'd end up with a model that looks like, and behaves like an embedded cognition, physically distributed in space, with all the perks and perils that carries. Tamper with the virtual adrenal glands, and who knows what happens.

b) It may also be that a vastly distinct model from the way we do it would result in similar behavior. Then a whole different realm of completely unexplored confounds, polysemic and synonimic IF THEN gates and chain reactions we never had the chance to even glimpse at would be the virtual entity we are dealing with. This would make me much less comfortable with turning this emulation on than turning on a brain based one. It seems way less predictable (despite having matched the behavior of that human up to that point) once it's environment, embedment and inner structure changes.

It is worth keeping in mind that we are comparing the

robustness of these minds to tweaks available in the virtual world

to the

robustness of the alternatives, one of which is motivational scaffolding and concept teaching.

We should consider whether teaching language, reference, and moral systems is not easier than simulating a mind without distorting it's morals.

You'd have to go through a few google tradutor translations to transform a treatise on morality into a treatise on immorality, but - to exapt a Yudkowskian old example - you only have to give Ghandi one or two pills, or a tumor smaller than his toe, to completely change his moral stance on pacifism.

comment by KatjaGrace · 2015-02-10T02:06:38.790Z · LW(p) · GW(p)

How well do you think the earlier methods apply in multipolar outcomes? How well do this week's methods apply to unipolar outcomes? Is value loading easier for multipolar or unipolar outcomes?

comment by KatjaGrace · 2015-02-10T02:08:30.523Z · LW(p) · GW(p)

Do you think the described emulation institution would be feasible in an applicable future scenario?

comment by KatjaGrace · 2015-02-10T02:07:26.874Z · LW(p) · GW(p)

What did you find least persuasive in this week's reading?

comment by KatjaGrace · 2015-02-10T02:07:12.019Z · LW(p) · GW(p)

Did you change your mind about anything as a result of this week's reading?

comment by KatjaGrace · 2015-02-10T02:06:21.647Z · LW(p) · GW(p)

This week's methods are more designed for messy, multipolar outcomes. Are there other safety methods that are especially applicable there?

comment by JeffFitzmyers · 2015-02-10T16:46:48.982Z · LW(p) · GW(p)

The reason "the task of designing values and institutions is complicated by selection effects" is because that design is not very effective. Everyone makes this way to complicated. Life is a complex adaptive system: a few simple initial conditions iterating over time with feedback. The more integrated things are, the more, and more effective, emergent properties. As Alex Wissner-Gross and others suggest, you don't really design for value: large value is an emergent property. Design the initial conditions. But we don't have to do that: it's already been done! All we have to do is recognize, then codify evolution's initial conditions: Private property. Connections that are both tangible and intangible. Classification: Everything has a scope of relationships. It's the classification that holds all the meta data. And add value first: Iteration http://wp.me/p4neeB-4Y

comment by almostvoid · 2015-02-10T09:15:29.076Z · LW(p) · GW(p)

I am realizing that there is this assumption that robots, AI OSs and variants are gonna work. Well I used to run a live website and working with webmistress realized for starters that codes self corrupt. so no reliability there. then there is human interpretation. some experts simply could not comprehend simple instructions and often to hide their ignorance came back with gobbly-gook speech obfuscation. It took a while to find the right expert. Even then things always went wrong. So future AI is all fantasy as it stands now. Which means a lot of these conversations are fantasy not fact. To give but 2 more examples. I left Twitter but they could not delete me in a month! I re-de-activated myself again. So here we have a system that can't delete information. Another example was Flash-Player which had a security hole [even that in itself shows how hopeless this AI endeavour is. So I deleted the old unstable copy and uploaded the new safe one. Except my computer lost the upload file. Which I found by accident. [Again the implications for running AIs] and finished the upload. But then the Flash-Player videos wouldn't open and play. So here we had vanishing replacement codes. The player worked the day after. The point is that whilst this book is interesting it is about that and no more. The conversations are useless because what happened here couldn't have happened in isolation. I am surely not the only one.

Replies from: Viliam_Bur↑ comment by Viliam_Bur · 2015-02-10T13:36:45.609Z · LW(p) · GW(p)

Different pieces of software have different quality. Websites are usually on the crappy end of the scale. Central parts of operating systems are towards the opposite end. Also, many commercial products are developed with little testing. But there are methodologies for better testing, even mathematical proofs of correctness. Those are usually not used in commercial development, because they require some time and qualification, and companies prefer to hire cheap coders and have the product soon, even if it is full of bugs. And generally, because software companies are usually managed Dilbert-style.

However, it is possible to have mathematical proofs about algorithm correctness (any decent university teaches these methods as parts of informatics), so in these debates it is usually assumed that people who would develop an AI would use these methods.

To a person who knows this, your comment sounds a bit like: "my childhood toy broke easily, therefore it is impossible to ever build a railway that would not fall apart below the weight of a train".