MIRI's 2015 Winter Fundraiser!

post by So8res · 2015-12-09T19:00:32.567Z · LW · GW · Legacy · 23 comments

MIRI's Winter Fundraising Drive has begun!

Like our last fundraiser, this will be a non-matching fundraiser with multiple funding targets our donors can choose between to help shape MIRI’s trajectory. The drive will run until December 31st, and will help support MIRI's research efforts aimed at ensuring that smarter-than-human AI systems have a positive impact.

Our successful summer fundraiser has helped determine how ambitious we’re making our plans. Although we may still slow down or accelerate our growth based on our fundraising performance, our current plans assume a $1,825,000 annual budget.

About $100,000 of our 2016 budget is being paid for via Future of Life Institute (FLI) grants, funded by Elon Musk and the Open Philanthropy Project. The rest depends on our fundraiser and future grants. We have a twelve-month runway as of January 1, which we would ideally like to extend. Taking all of this into account, our winter funding targets are:

Target 1 — $150k: Holding steady. At this level, we would have enough funds to maintain our runway in early 2016 while continuing all current operations, including running workshops, writing papers, and attending conferences.

Target 2 — $450k: Maintaining MIRI’s growth rate. At this funding level, we would be much more confident that our new growth plans are sustainable, and we would be able to devote more attention to academic outreach. We would be able to spend less staff time on fundraising in the coming year, and might skip our summer fundraiser.

Target 3 — $1M: Bigger plans, faster growth. At this level, we would be able to substantially increase our recruiting efforts and take on new research projects. It would be evident that our donors' support is stronger than we thought, and we would move to scale up our plans and growth rate accordingly.

Target 4 — $6M: A new MIRI. At this point, MIRI would become a qualitatively different organization. With this level of funding, we would be able to diversify our research initiatives and begin branching out from our current agenda into alternative angles of attack on the AI alignment problem.

The rest of this post goes into more detail about our mission, what we've been up to over the past year, and our plans for the future.

Why MIRI?

The field of AI has a goal of automating perception, reasoning, and decision-making — the many abilities we lump under the label "intelligence." Most leading researchers in AI expect our best AI algorithms to begin strongly outperforming humans this century in most cognitive tasks. In spite of this, relatively little time and effort has gone into trying to identify the technical prerequisites for making smarter-than-human AI systems safe and useful.

We believe that several basic theoretical questions will need to be answered in order to make advanced AI systems stable, transparent, and error-tolerant, and in order to specify correct goals for such systems. Our technical agenda describes what we think are the most important and tractable of these questions. Smarter-than-human AI may be 50+ years away, but there are a number of reasons we consider it important to begin work on these problems early:

- High capability ceilings — Humans appear to be nowhere near physical limits for cognitive ability, and even modest advantages in intelligence may yield decisive strategic advantages for AI systems.

- "Sorcerer’s Apprentice" scenarios — Smarter AI systems can come up with increasingly creative ways to meet programmed goals. The harder it is to anticipate how a goal will be achieved, the harder it is to specify the correct goal.

- Convergent instrumental goals — By default, highly capable decision-makers are likely to have incentives to treat human operators adversarially.

- AI speedup effects — Progress in AI is likely to accelerate as AI systems approach human-level proficiency in skills like software engineering.

We think MIRI is well-positioned to make progress on these problems for four reasons: our initial technical results have been promising (see our publications), our methodology has a good track record (see MIRI’s Approach), we have already had a significant influence on the debate about long-run AI outcomes (see Assessing Our Past and Potential Impact), and we have an exclusive focus on these issues (see What Sets MIRI Apart?). MIRI is currently the only organization specializing in long-term technical AI safety research, and our independence from industry and academia allows us to effectively address gaps in other institutions’ research efforts.

General Progress in 2015

The big news from the start of 2015 was FLI's "Future of AI" conference in San Juan, Puerto Rico, which brought together the leading organizations studying long-term AI risk and top AI researchers in academia and industry. Out of the FLI event came a widely endorsed open letter, accompanied by a research priorities document drawing heavily on MIRI’s work. Two prominent AI scientists who helped organize the event, Stuart Russell and Bart Selman, have since become MIRI research advisors (in June and July, respectively). The conference also resulted in an AI safety grants program, with MIRI receiving some of the largest grants. (Details: An Astounding Year, Grants and Fundraisers.)

In March, we released Eliezer Yudkowsky's Rationality: From AI to Zombies; we hired a new full-time research fellow, Patrick LaVictoire; and we launched the Intelligent Agent Foundations Forum, a discussion forum for AI alignment research. Many of our subsequent publications have been developed from material on the forum, beginning with LaVictoire's "An introduction to Löb’s theorem in MIRI’s research."

In May, we co-organized a decision theory conference at Cambridge University and began a summer workshop series aimed at introducing a wider community of scientists and mathematicians to our work. Luke Muehlhauser left MIRI for a research position at the Open Philanthropy Project in June, and I replaced Luke as MIRI’s Executive Director. Shortly thereafter, we ran a three-week summer fellows program with CFAR.

Our July/August funding drive ended up raising $631,957 total from 263 distinct donors, topping our previous funding drive record by over $200,000. Mid-sized donors stepped up their game in a big way to help us hit our first two funding targets: many more donors gave between $5k and $50k than in past fundraisers. During the fundraiser, we also wrote up explanations of our plans and hired two new research fellows: Jessica Taylor and Andrew Critch.

Our fundraiser, summer fellows program, and summer workshop series all went extremely well, directly enabling us to make some of our newest researcher hires. Our sixth research fellow, Scott Garrabrant, will be joining this month, after having made major contributions as a workshop attendee and research associate. Meanwhile, our two new research interns, Kaya Stechly and Rafael Cosman, have been going through old results and consolidating and polishing material into new papers; and three of our new research associates, Vadim Kosoy, Abram Demski, and Tsvi Benson-Tilsen, have been producing a string of promising results on the research forum. (Details: Announcing Our New Team.)

We also spoke at AAAI-15, the American Physical Society, AGI-15, EA Global, LORI 2015, and ITIF, and ran a ten-week seminar series at UC Berkeley. This month, we'll be moving into a new office space to accommodate our growing team. On the whole, I'm very pleased with our new academic collaborations, outreach efforts, and growth.

Research Progress in 2015

Our increased research capacity means that we've been able to generate a number of new technical insights. Breaking these down by topic:

We've been exploring new approaches to the problems of naturalized induction and logical uncertainty, with early results published in various venues, including Fallenstein et al.’s “Reflective oracles” (presented in abridged form at LORI 2015) and “Reflective variants of Solomonoff induction and AIXI” (presented at AGI-15), and Garrabrant et al.’s “Asymptotic logical uncertainty and the Benford test” (available on arXiv). We also published the overview papers “Formalizing two problems of realistic world-models” and “Questions of reasoning under logical uncertainty.”

In decision theory, Patrick LaVictoire and others have developed new results pertaining to bargaining and division of trade gains, using the proof-based decision theory framework (example). Meanwhile, the team has been developing a better understanding of the strengths and limitations of different approaches to decision theory, an effort spearheaded by Eliezer Yudkowsky, Benya Fallenstein, and me, culminating in some insights that will appear in a paper next year. Andrew Critch has proved some promising results about bounded versions of proof-based decision-makers, which will also appear in an upcoming paper. Additionally, we presented a shortened version of our overview paper at AGI-15.

In Vingean reflection, Benya Fallenstein and Research Associate Ramana Kumar collaborated on “Proof-producing reflection for HOL” (presented at ITP 2015) and have been working on an FLI-funded implementation of reflective reasoning in the HOL theorem prover. Separately, the reflective oracle framework has helped us gain a better understanding of what kinds of reflection are and are not possible, yielding some nice technical results and a few insights that seem promising. We also published the overview paper “Vingean reflection.”

Jessica Taylor, Benya Fallenstein, and Eliezer Yudkowsky have focused on error tolerance on and off throughout the year. We released Taylor’s “Quantilizers” (accepted to a workshop at AAAI-16) and presented “Corrigibility” at a AAAI-15 workshop.

In value specification, we published the AAAI-15 workshop paper “Concept learning for safe autonomous AI” and the overview paper “The value learning problem.” With support from an FLI grant, Jessica Taylor is working on better formalizing subproblems in this area, and has recently begun writing up her thoughts on this subject on the research forum.

Lastly, in forecasting and strategy, we published “Formalizing convergent instrumental goals” (accepted to a AAAI-16 workshop) and two historical case studies: “The Asilomar Conference” and “Leó Szilárd and the Danger of Nuclear Weapons.”

We additionally revised our primary technical agenda paper for 2016 publication.

Future Plans

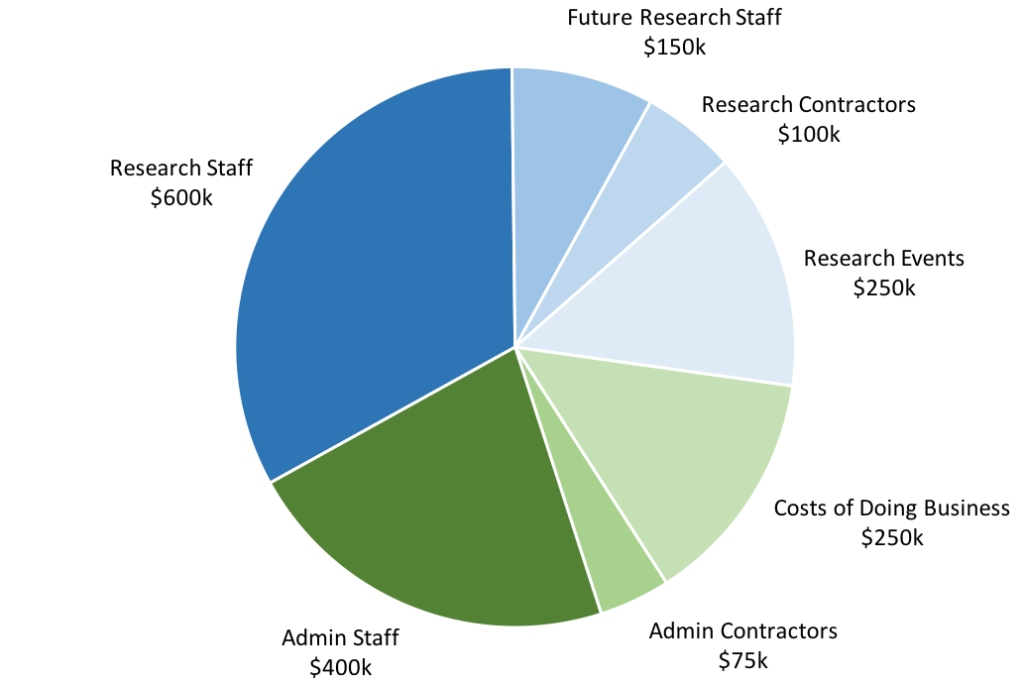

Our projected spending over the next twelve months, excluding earmarked funds for the independent AI Impacts project, breaks down as follows:

Our largest cost ($700,000) is in wages and benefits for existing research staff and contracted researchers, including research associates. Our current priority is to further expand the team. We expect to spend an additional $150,000 on salaries and benefits for new research staff in 2016, but that number could go up or down significantly depending on when new research fellows begin work:

- Mihály Bárász, who was originally slated to begin in November 2015, has delayed his start date due to unexpected personal circumstances. He plans to join the team in 2016.

- We are recruiting a specialist for our type theory in type theory project, which is aimed at developing simple programmatic models of reflective reasoners. Interest in this topic has been increasing recently, which is exciting; but the basic tools needed for our work are still missing. If you have programmer or mathematician friends who are interested in dependently typed programming languages and MIRI’s work, you can send them our application form.

- We are considering several other possible additions to the research team.

Much of the rest of our budget goes into fixed costs that will not need to grow much as we expand the research team. This includes $475,000 for administrator wages and benefits and $250,000 for costs of doing business. Our main cost here is renting office space (slightly over $100,000).

Note that the boundaries between these categories are sometimes fuzzy. For example, my salary is included in the admin staff category, despite the fact that I spend some of my time on technical research (and hope to increase that amount in 2016).

Our remaining budget goes into organizing or sponsoring research events, such as fellows programs, MIRIx events, or workshops ($250,000). Some activities (e.g., traveling to conferences) are aimed at sharing our work with the larger academic community. Others, such as researcher retreats, are focused on solving open problems in our research agenda. After experimenting with different types of research staff retreat in 2015, we’re beginning to settle on a model that works well, and we’ll be running a number of retreats throughout 2016.
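As a rough cross-check, the line items quoted above can be tallied in a few lines of Python. This is only a sketch: the dollar figures are the ones stated in this post, the dictionary keys are shorthand labels chosen for illustration rather than official budget categories, and the new-hire figure is the estimate that could go up or down depending on start dates.

```python
# Line items as quoted in this post (USD). The keys are illustrative
# shorthand, not official budget category names.
budget = {
    "existing research staff and contractors": 700_000,
    "new research staff (estimate)": 150_000,
    "administrator wages and benefits": 475_000,
    "costs of doing business": 250_000,
    "research events": 250_000,
}

total = sum(budget.values())

# Print each item with its dollar amount and its share of the total.
for item, amount in budget.items():
    print(f"{item:42s} ${amount:>9,}  {amount / total:6.1%}")
print(f"{'total':42s} ${total:>9,}")
```

Under these assumptions the items sum to $1,825,000, which matches the annual budget our current plans assume.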

In past years, we’ve generally raised $1M per year, and spent a similar amount. However, thanks to substantial recent increases in donor support, we’re in a position to scale up significantly.

Our donors blew us away with their support in our last fundraiser. If we can continue our fundraising and grant successes, we will be able to sustain our new budget and act on the unique opportunities outlined in Why Now Matters, helping set the agenda and build the formal tools for the young field of AI safety engineering. And if our donors keep stepping up their game, we believe we have the capacity to scale up our program even faster. We’re thrilled at this prospect, and we’re enormously grateful for your support.

23 comments

comment by Ben Pace (Benito) · 2015-12-07T23:23:24.846Z · LW(p) · GW(p)

Positive reinforcement for being so open about your spending.

$89 donated.

My first donation to you, and it shall not be my last.

comment by iceman · 2015-12-11T21:31:35.034Z · LW(p) · GW(p)

$1000. (With an additional $1000 because of private, non-employer matching.)

comment by James_Miller · 2015-12-08T01:00:09.392Z · LW(p) · GW(p)

Donated $100.

comment by Rob Bensinger (RobbBB) · 2015-12-09T17:38:23.658Z · LW(p) · GW(p)

Announcement: Raising for Effective Giving just let us know that for the next two days, donations to MIRI through REG's donation page (under "Pick a specific charity: MIRI") will be matched dollar-for-dollar by poker player Ferruell! Fantastic.

↑ comment by Rob Bensinger (RobbBB) · 2015-12-10T01:06:05.097Z · LW(p) · GW(p)

Update from Ruairi Donnelly, the Executive Director at Raising for Effective Giving: "Ferruell's matching drive has been going on for a while behind the scenes, as he had a preference for doing it in the Russian poker community first. No limit was declared (but the implicit understanding was 'in the tens of thousands'). As donations were slowing down, our ambassador Liv Boeree shared it on Twitter. Ferruell has now confirmed that he'll match donations up to $50,000. [Roughly] $30,000 have already come in."

↑ comment by Rob Bensinger (RobbBB) · 2015-12-10T06:46:38.065Z · LW(p) · GW(p)

Update: The remaining $20,000 has been matched!!! Wow!

comment by Rob Bensinger (RobbBB) · 2015-12-18T06:12:43.542Z · LW(p) · GW(p)

Update: We've hit our first fundraising target! We're now nearing the $200k mark. Concretely, additional donations at this point will have several effects on MIRI's operations over the coming year:

- There are a half-dozen promising people we'd be hiring on a trial basis if we had the funds. Not everyone in this reference class can be sent to e.g. the Oxford and Cambridge groups working on AI risk (CSER, FHI, Leverhulme CFI), because those organizations have different hiring criteria and aren't primarily focused on our kind of research. This is one of the main ways a more successful fundraiser this month translates into additional AI alignment research on the margin.

This includes several research fellows we're considering hiring (with varying levels of confidence) in the next year. Being less funding-constrained would make us more confident that our growth is sustainable, causing us to move significantly faster on these hires.

- Secondarily, additional funds would allow us to run additional workshops and run longer and meatier fellows/scholars programs.

Larger donations would allow us to expand the research team size we're shooting for, and also spend a lot more time on academic outreach.

comment by Dr_Manhattan · 2015-12-15T11:05:37.062Z · LW(p) · GW(p)

$250 plus a vote to have winter fundraiser right after the bonus season :)

comment by BrandonReinhart · 2015-12-14T23:58:00.361Z · LW(p) · GW(p)

Donation sent.

I've been very impressed with MIRI's output this year, to the extent I am able to be a judge. I don't have the domain specific ability to evaluate the papers, but there is a sustained frequency of material being produced. I've also read much of the thinking around VAT, related open problems, definition of concepts like foreseen difficulties... the language and framework for carving up the AI safety problem has really moved forward.
