AI Summer Harvest

post by Cleo Nardo (strawberry calm) · 2023-04-04T03:35:58.473Z · LW · GW · 10 comments

Trending metaphor

I've noticed that AI safety advocates have recently been appealing to a new metaphor: the AI summer harvest.

(1) Here's the FLI open letter Pause Giant AI Experiments:

Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an "AI summer" in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt. Society has hit pause on other technologies with potentially catastrophic effects on society.[5] We can do so here. Let's enjoy a long AI summer, not rush unprepared into a fall.

(2) And here's Yudkowsky talking on the Lex Fridman podcast:

If it were up to me I would be like — okay, like this far and no further.

Time for the Summer of AI, where we have planted our seeds and now we wait and reap the rewards of the technology we've already developed, and don't do any larger training runs than that.

Maybe I'm out-of-the-loop, but I haven't seen this metaphor until recently.

Brief thoughts

- I like the metaphor and I wrote this article to signal-boost it. It's concise, accessible, and intuitive. It represents a compromise (Hegelian synthesis?) between AI decelerationism and AI accelerationism.

- The metaphor points at the reason why slowing down AI progress won't (imo) impose significant economic costs — i.e. just as a farmer gains nothing by sowing seeds faster than they can harvest crops, so too will a lab gain nothing by scaling AI models faster than the economy can productise them.

- Instead of "AI summer", we should call it an "AI summer harvest" because "AI summer" is an existing phrase referring to fast AI development; trying to capture an existing phrase is difficult, confusing, and impolite. Moreover, "AI summer harvest" does a better job at expressing the key idea.

- I think that buying time [LW · GW] is probably more important than anything else at the moment. (Personally, I'd endorse a 0.2 OOMs/year target [LW · GW].) And I think that "AI summer harvest" should be the primary angle by which we communicate buying-time to policy-makers and the general public.

Origins

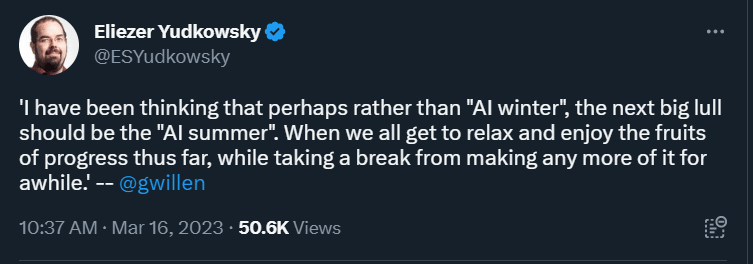

The metaphor was credited to @gwillen [LW · GW] on March 16th 2023.

10 comments


comment by DragonGod · 2023-04-04T11:19:34.693Z · LW(p) · GW(p)

Very sceptical that buying time is more important than anything else. It's not even clear to me that it's a robustly positive intervention.

From my perspective most of orthodox alignment research developed before the deep learning revolution ended up being mostly useless and some was net negative as it enshrined inadequate ontologies. Some alignment researchers seem to me to be stuck in a frame that just doesn't apply well to artifacts produced by contemporary ML.

I would not recommend new aspiring alignment researchers to read the Sequences, Superintelligence, some of MIRI's earlier work or trawl through the alignment content on Arbital despite reading a lot of that myself.

The LW content that seems valuable re large language models was written in the last one to three years, most in the last year.

@janus' [LW · GW] "Simulators [LW · GW]" is less than a year old.

It's not particularly clear that buying time would be very helpful. The history of AI Safety research has largely failed to transfer across paradigms/frames. If we "buy time" by freezing the current paradigm, we'd get research in the current paradigm. But if the current paradigm is not the final form of existentially dangerous AI, such research may not be particularly valuable.

Something else that complicates buying time even if the current paradigm is the final form of existentially dangerous AI is phenomena that emerge at greater scale [LW · GW]. We're just fundamentally unable to empirically study such phenomena until we have models at the appropriate scale.

Rather than lengthening timelines to AGI/strong AI, it seems to me that most of the existential safety comes from having a slow/continuous takeoff that allows us to implement iterative alignment and governance approaches.

Freezing AI development at a particular stage does not seem all that helpful to me.

That could lead to a hardware overhang and move us out of the regime where only a handful of companies can train strong AI systems (with training runs soon reaching billions of dollars) to a world where hundreds or thousands of actors can do so.

Longer timelines to strong AGI plus faster takeoff (as a result of a hardware overhang) seems less safe than shorter timelines to strong AGI plus slower takeoff.

Replies from: strawberry calm, TinkerBird, dan-4, dan-4

↑ comment by Cleo Nardo (strawberry calm) · 2023-04-04T12:45:50.446Z · LW(p) · GW(p)

I believe we should limit AI development to below 0.2 OOMs/year [LW · GW] which is slow continuous takeoff.

Replies from: DragonGod

↑ comment by DragonGod · 2023-04-04T13:12:59.670Z · LW(p) · GW(p)

Something like that sounds like a sensible proposal to me.

I'm not sure I endorse that as stated (I believe returns to intelligence are strongly sublinear, so a fixed slow rate of scaling may end up taking too long to get to transformative AI for my taste), but I endorse the general idea of deliberate attempts to control AI takeoff at a pace we can deal with (both for technical AI safety and governance/societal response approaches).

I was pushing back against the idea of an indefinite moratorium now while we harvest the gains from developments to date.

That could lead to a hardware overhang and move us out of the regime where only a handful of companies can train strong AI systems to a world where hundreds or thousands of actors can do so.

↑ comment by TinkerBird · 2023-04-04T11:29:51.296Z · LW(p) · GW(p)

But if the current paradigm is not the final form of existentially dangerous AI, such research may not be particularly valuable.

I think we should figure out how to train puppies before we try to train wolves. It might turn out that very few principles carry over, but if they do, we'll wish we delayed.

The only drawback I see to delaying is that it might cause people to take the issue less seriously than if powerful AIs appear in their lives very suddenly.

Replies from: DragonGod

↑ comment by Dan (dan-4) · 2023-04-05T22:27:27.563Z · LW(p) · GW(p)

A pause, followed by few immediate social effects and slower AGI development than expected, may make things worse in the long run. Voices of caution may be seen to have 'cried wolf'.

I agree that humanity doesn't seem prepared to do anything very important in 6 months, AI safety wise.

Edited: Clarity.

↑ comment by Dan (dan-4) · 2023-04-05T19:11:41.777Z · LW(p) · GW(p)

I would not recommend new aspiring alignment researchers to read the Sequences, Superintelligence, some of MIRI's earlier work or trawl through the alignment content on Arbital despite reading a lot of that myself.

I think aspiring alignment researchers should read all these things you mention. This all feels extremely premature. We risk throwing out and having to rediscover concepts at every turn. I think Superintelligence, for example, would still be very important to read even if dated in some respects!

We shouldn't assume too much based on our current extrapolations inspired by the systems making headlines today.

GPT-4's creators already want to take things in a very agentic direction, which may yet negate some of the apparent dated-ness.

"Equipping language models with agency and intrinsic motivation is a fascinating and important direction for future research" - OpenAI in Sparks of Artificial General Intelligence: Early experiments with GPT-4.

Replies from: DragonGod

comment by gwillen · 2023-04-05T07:19:35.415Z · LW(p) · GW(p)

The metaphor originated here:

https://twitter.com/ESYudkowsky/status/1636315864596385792

(He was quoting, with permission, an off-the-cuff remark I had made in a private chat. I didn't expect it to take off the way it did!)