Societal Self-control

post by Aaron Bergman (aaronb50) · 2021-02-20T03:05:21.271Z · LW · GW

This is a link post for https://aaronbergman.substack.com/p/societal-self-control

Contents

Intro · They’re already here · My concern · The parallel · A neat neoclassical model · Back to society · The Problem · The solution · Let not the perfect be the enemy of the slightly-better-than-before · What’s going on · What’s changed · Conclusion

Intro

At the individual level, we humans often intentionally reduce our freedom so that our future selves will make some particular decision. Want to eat healthier? Much easier to forgo buying chips and candy at the supermarket than it is to leave them in the bag at home. Want to stay focused on work? Much easier to block your favorite websites than it is to actively resist the siren’s call of Reddit.

Most of the time, these “prospective self control devices,” or PSCDs, as I’ll call them, don’t entirely remove your future freedom; you can always drive to the store for a candy bar or manually disable the Chrome extension. Still, if done right, the temptations won’t be strong enough to warrant this degree of effort. The mere existence of the barrier also serves as a reminder of your higher-level goal.

Here, I want to explore whether PSCDs are underused at the societal level and, if so, how we might use them to improve collective decision-making.

They’re already here

Unsurprisingly, there are already plenty of institutions/norms/rules/practices that seem to count as societal PSCDs. Literally any law is one banal example; “we” (in a very broad sense) decide to make certain activities difficult to do. This is true both in a physical sense (if you try to rob a bank, someone with a gun will physically come and stop you) and a psychological sense (it is often unpleasant to do something illegal due to fear of punishment and general social stigma).

Just like the individual examples, laws don’t entirely remove our freedom to break them. In some cases, as with murder, this is mostly because our society lacks the capability to physically prevent people from doing so. Often, though, we affirmatively want to be able to break the law in extraordinary circumstances. Most of the time, doing 120 in a 55 is very bad and wrong, but if your dad is having a heart attack in the passenger seat and the hospital is 5 minutes away, the ability to physically break the law (and possibly incur the consequences) is a good thing to have.

However, it seems to me that most of these societal PSCDs are “selfish,” at least in a very loose sense. I hesitate to use the word because of its moral connotation, but I’m using it solely to indicate something that promotes our individual well-being, even if it imposes no cost on others. Both speed limits and a social media website blocker are selfish in this way; after all, the “speed limits for everyone but me” option isn’t tenable.

There’s nothing wrong with these, and I’m very glad that they exist.

My concern

While we as a society face plenty of challenges from a selfish perspective (say, finding a cure for cancer), I am much more concerned about those challenges only presently-living humans can solve, but which predominantly affect beings other than us and those we naturally have empathy for.

The two most obvious examples of such beings are distant future people and certain animals. We generally empathize with our children and grandchildren - even if they have not yet been conceived - as well as certain animals like cats and dogs. Since we literally share in their suffering and happiness, it is selfishly rational to care about and protect these groups. To reiterate, the term “selfish” does not negate any moral value associated with protecting puppies or children, which are indeed good things to do.

On the other hand, folks (including myself) generally don’t have a strong emotional connection to people who will be alive in the distant future and most animals, particularly non-mammals like lobsters and insects. Of course, this comes in degrees. You might care a lot about your son, somewhat about your great grandson, but not at all (emotionally, I mean) about your descendant who might be alive in 4876. You might have a strong emotional inclination towards dogs, a modest one towards chickens, and none at all towards fruit flies.

That said, our lack of emotional empathy doesn’t condemn us to disregard the well-being of those we don’t naturally empathize with. If we recognize, at an intellectual level, that future people and sentient animals have moral value, then we can choose to act in their interest anyway.

The parallel

This predicament strikes me as parallel to something we often face at the individual level: a conflict between our long-term/higher-order goals and short-term/emotional interests. Just as most of the 47% of Americans who (profess to) support banning factory farms regularly buy factory-farmed animal products, for example, a lot of people who genuinely want to exercise have trouble actually going to the gym.

One option is to argue that eating McNuggets or staying sedentary simply reveal our true preferences, and so people don’t genuinely want to end factory farming and exercise. There’s some truth to this, but it’s not the whole story. It can be legitimately difficult to force oneself to suffer in pursuit of some higher-level goal.

A neat neoclassical model

This is clearest in the case of substance addiction (is a heroin addict who no longer enjoys the drug but cannot quit just “revealing his preferences”?), but is probably a universal consequence of “time-preference.” From Wikipedia:

We use the term time discounting broadly to encompass any reason for caring less about a future consequence, including factors that diminish the expected utility generated by a future consequence, such as uncertainty or changing tastes. We use the term time preference to refer, more specifically, to the preference for immediate utility over delayed utility.

In other words, time preference means caring less about something purely because it will happen in the future - not because your tastes or goals might change between now and then. And an example:

a nicotine deprived smoker may highly value a cigarette available any time in the next 6 hours but assign little or no value to a cigarette available in 6 months.

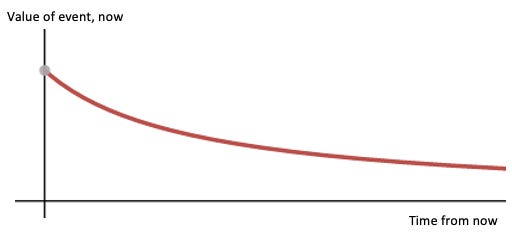

Sure, add it to the list of cognitive biases, but there is an opportunity here! While I haven’t done a comprehensive literature review, I’m almost positive that our time preferences are convex, like this:

For example, I instinctively care much more about a homework assignment I have to complete right now than one I have to complete in a month, even if I could somehow be assured that my actual conscious experiences of completing the work would be identical. However, the difference between how much I value assignments due in one month and two months respectively is much smaller, if it exists at all.

This is why, it seems to me, we intentionally limit our future freedom in the form of personal PSCDs. Though I might value the taste of ice cream right now more than I value its negative health impact spread over the rest of my life, I also might value this total detriment to my health more than I do the taste of ice cream next week.

You can see where this is going. I know that, if I wait until next week to decide, I will choose the ice cream. However, today I will choose to avoid buying a second carton so that my future self has no such option.
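Here is a minimal sketch of that reversal, assuming a hyperbolic discount function (one common convex shape; nothing above commits to a specific formula). The payoff numbers, the four-week lag before the health cost lands, and all function names are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
from typing import Callable

# Hypothetical discount curves: each returns the weight placed today
# on a payoff that arrives after `delay` weeks.

def hyperbolic_weight(delay: float, k: float = 1.0) -> float:
    """Hyperbolic form 1 / (1 + k * delay); k is an assumed steepness."""
    return 1.0 / (1.0 + k * delay)

def exponential_weight(delay: float, delta: float = 0.6) -> float:
    """Exponential form delta ** delay, included only for comparison."""
    return delta ** delay

def net_value_of_ice_cream(
    weeks_until_eating: float,
    weight: Callable[[float], float],
    taste: float = 10.0,        # assumed pleasure at the moment of eating
    health_cost: float = 40.0,  # assumed total health cost, as one lump sum
    health_lag: float = 4.0,    # assumed weeks between eating and the cost
) -> float:
    """Value, judged from today, of eating ice cream some weeks from now."""
    pleasure = taste * weight(weeks_until_eating)
    harm = health_cost * weight(weeks_until_eating + health_lag)
    return pleasure - harm

# Judged in the moment: 10 - 40/5 = 2, so I eat the ice cream.
now = net_value_of_ice_cream(0, hyperbolic_weight)

# Judged a week ahead: 10/2 - 40/6 is negative, so I would rather not.
next_week = net_value_of_ice_cream(1, hyperbolic_weight)

# With exponential weights the sign is the same from both vantage points.
exp_now = net_value_of_ice_cream(0, exponential_weight)
exp_next_week = net_value_of_ice_cream(1, exponential_weight)

print(f"hyperbolic:  now={now:+.2f}, next week={next_week:+.2f}")
print(f"exponential: now={exp_now:+.2f}, next week={exp_next_week:+.2f}")
```

An exponential curve is also convex, yet it never produces the flip, because pushing the whole choice one week further away scales the pleasure and the harm by the same factor. The reversal depends on the curve dropping faster near the present than an exponential would, which is what the hyperbolic form supplies. That gap between the "next week" verdict and the "right now" verdict is the window in which a PSCD can be installed.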

Back to society

At the societal level, we see something analogous. If the ice cream is cheap power and my health is the climate, the demos might collectively value cheap oil and gas now more than it does the associated impact on the climate. It also might value the climate more than it values tax-free carbon emissions ten years from now.

Just as an individual avoids buying ice cream at the store, the political system can respond by imposing a carbon tax to be implemented in ten years’ time.

Something similar is already common: new laws often “phase in” over the course of a few years. For instance, several states are taking several years to gradually increase their minimum wage. While phase-ins are (I assume?) intended to ease the burden imposed by a new law, they happen to smuggle in a built-in time-preference arbitrage device. That is, they make it easier to enact new laws which impose short-term costs (on certain constituencies, that is) but accord with society’s higher-order goals.

The Problem

Even still, I am not aware of any law that self-consciously and explicitly delays enactment solely as a means of satisfying our time-preferences. At least in part, I’d bet this is because doing so can seem like a sleazy, underhanded move. For one thing, doing so means that the law will be imposed on a different set of people than those who were of voting age when it was enacted. For another, it might reduce the salience of corrupt or harmful legislation.

These are very legitimate concerns. Would the 2017 GOP effort to repeal the ACA have failed as it (thankfully) did if they had set it to expire in 2030? Likewise, delayed enactment could just as easily shift the burden of some short-termist policy onto a future constituency.

Fundamentally, the problem with society-level PSCDs directly analogous to those we create for our future selves is that the fruits and consequences of such devices are not internalized by a single, psychologically-continuous individual.

The solution

Instead, we need something to serve as a proxy for those we care about at an intellectual level (future people, many animals), but who don’t elicit much direct emotional empathy.

The most obvious example would be an institution, with genuine legislative power, entrusted with the mandate to act in the interests of one of these groups. For instance, we can imagine a committee of benevolent philosopher-kings with 30 votes in the U.S. Senate, tasked with representing the interests of farm animals or future people.

Such an idea quickly runs headfirst into reality. The council would be subject to all of the perverse or distortionary incentives that influence existing bureaucracies and democracies. For example, people in power sometimes make decisions they know to be sub-optimal in order to ensure that they are seen as behaving for the right reasons.

More fundamentally, these institutions wouldn’t be directly accountable to those they’re tasked to serve. Say what you will about democracy, but there is at least some feedback loop between politician performance and electoral outcome. If the body continually makes bad decisions, the animals or people or whatever have no way of expressing their discontent.

Let not the perfect be the enemy of the slightly-better-than-before

All this said, the relevant question is whether these institutions would be an improvement over the status quo. Right now, those without political power are at the whims of the electorate’s fleeting and arbitrary empathy. It is currently legal to torture tens of billions of chickens every year, recklessly endanger our descendants via climate change, and turn away migrants fleeing violence and poverty merely because they chose the wrong country in which to be born.

I have no doubt that any institution tasked with serving the un-enfranchised would be really imperfect, but it does seem plausible that, in expectation, they’d do better than we’re doing right now.

What’s going on

Any proposal for institutional political change should be met with skepticism. If the proposal is feasible and a good idea, why hasn’t it already been done? If it really seems like a good idea, it’s probably not politically feasible.

So, is there any reason to think that a new “council of the future” or “council for the animals” might be both good and feasible?

In the short term and at a large scale, of course not. Congress isn’t going to create a shadow-senate to represent chickens and lobsters any time soon. Even still, I think the general concept of an institution to represent those without political power has promise.

It took a pretty long time for democracy to become the standard, currently dominant, style of political organization (though it does have a pretty long historical legacy). To hypersimplify, societies have gradually given political power to more and more people; authoritarianism gave way to enfranchisement of (in the West) wealthy white men, and then all white men, and then women and people of all races. At least, I’ll take this standard narrative as basically true until someone who knows what they’re talking about convinces me otherwise.

Lest this come across as triumphalism, it is undoubtedly the case that billions of people live under authoritarian rule, and even “democratic” nations like the U.S. continually disenfranchise felons and suppress the minority vote. Likewise, lots of political power comes in the form of wealth, education, and status. Charles Koch and I do not have the same amount of power simply because we have the same number of votes.

Under a humanist worldview, all is well and good. Sure, there are important fights to be had against voter disenfranchisement and suppression, gerrymandering, and the influence of wealth, but these are tweaks around the margin. True, children cannot vote, but we can generally trust adults to account for their interests, at least to a significant degree.

What’s changed

Two fundamental changes have taken place to modify this humanist worldview.

First, a growing proportion of society is recognizing that non-human animals have moral value. While we continue to brutally raise and kill billions of animals annually, the fact that so many go out of their way to avoid confronting this reality (see: ag-gag laws) is evidence that many at least in principle recognize that animals can suffer, and that animal suffering is bad. If not, why would nearly half the country express support for a ban on factory farming?

Though I’m extremely agnostic about whether computer systems might be (or already are, for that matter) sentient, I can imagine a similar trend taking place with regards to AI in the future.

Second, we have a novel capacity to influence the long-term future. Until the industrial revolution, there wasn’t much that humans could do on this front. Though greenhouse gas emission as a cause of climate change is the most salient example, we now possess several technologies with the potential to cause human extinction: nuclear weapons, artificial intelligence, various forms of biotechnology. Killing all humans isn’t just bad for those who die. It means that everyone who would have come after does not get to be born.

In the timeline of institutional political change (new state formation, new constitutions, significant changes in political power), these developments are quite recent. Most Western democracies are generally run on institutions (say, the U.S. Congress and presidency) that have been around for quite a while. Likewise, near-universal adult enfranchisement is a relatively new phenomenon.

My point is that we shouldn’t expect our governance systems to have fully accommodated these dual changes in our society. There are still important fruits to be picked.

Fine, but why should we think people will voluntarily relinquish political power?

For the same reason that you might install a social-media blocker on your browser. Humans have higher-level goals, such as reducing animal suffering and preventing our species’ extinction, which often come into conflict with our short-term interests. So, we should expect there to be some willingness to create institutions that might limit our own political power or freedom in the future, because we know that doing so preemptively serves our long-term or more fundamental values.

We already do this. Sort of. In the form of laws that restrict our freedom, and through bureaucracies that relieve civil servants of the omnipresent influence of an impending election campaign.

As with laws and bureaucracies, institutions mandated to advocate for and serve those who cannot vote will remain under the ultimate control of presently-alive, enfranchised humans, just as your future self can always drive to the store for another carton of ice cream. But will these institutions be abolished as soon as they begin (if we somehow manage to get these societal PSCDs implemented, that is)?

I don’t think so. The sluggish speed of political action in Western democracies, particularly the United States, is both a bug and a feature. On one hand, virtually nothing ever happens, so politics devolves into a symbolic war over cultural power. On the other, agencies like the IRS and programs like the Affordable Care Act don’t get abolished at the GOP’s earliest convenience. In fact, the friction between political will and actual policy change is the very mechanism that enables societal PSCDs to function.

On the margin, I would much prefer that things actually happen (end the filibuster!) but we have a long way to go until an institution like those I’ve described becomes too easy to dissolve.

Conclusion

While I stand by my assertions that formal political representation for morally-valuable-but-disenfranchised people would be a good thing, I’ll reiterate that I understand how intractable this is at the moment.

That said, it seems that Prospective Self-Control Devices might be generally underused at the societal level, and there are marginal improvements that could get the ball rolling in the right direction.

For example, it doesn’t seem crazy to imagine a non-voting representative of future people in Congress, especially given the salient threat of climate change. After all, there are several non-voting representatives already. Likewise, mightn’t some particularly progressive city be willing to appoint a farm animal representative to the city council, even if it begins as a mere symbolic gesture?

I don’t know. These things seem a long ways off too, but it’s never too early to start trying.
