Roundabout Strategy

post by Nicholas / Heather Kross (NicholasKross) · 2021-01-28T00:44:00.743Z · LW · GW · 1 comment

This is a link post for https://www.thinkingmuchbetter.com/main/roundabout-strategy/

Contents

Roundabout
The Really Good Example of Henry Ford
A Big List of Examples
When Roundabout Goes Wrong-about
Wait, isn’t this just X?
Conclusion

Epistemic status: shilling for Big Concept

In the middle of the forest was a library. In the middle of the library was a book. The book’s ideas were usually not very good. At least, they were pretty debatable.

In the middle of the book was a gem.

Roundabout

The book’s author, Mark Spitznagel, synthesized a bunch of ideas from the history of strategy. He coined a newer name for an ancient concept: roundabout. No, not the polite word for "convoluted" or "that traffic thing". Here, this adjective means "pursuing indirect means, to better achieve your goals." A roundabout strategy is one using those means, rather than directly reaching for the ends.

If you’re inclined towards the ancient ways of Eastern philosophy, Spitznagel helpfully references more words for the concept:

"In the Dao, yin (unseen, hidden, passive) balances and is balanced by yang (seen, light, active). The antithesis to shi, the intermediate and circuitous, is li, the immediate and direct. As the "all or nothing" of a pitched battle, li seeks decisive victory in each and every engagement (the "false shortcuts" denounced by the Laozi)…"

"The forward-looking shi strategist takes the roundabout path toward subtle and even intangible intermediate steps, while the li strategist concentrates on the current step, the visible power, the direct, obvious route toward a tangible desired end, relying on force to decide the outcome of every battle. Simply stated, li goes for the immediate hit, while shi seeks first the positional advantage of the setup."

If this sounds hokey or weirdly trivial, an example will make the point clearer. Spitznagel’s canonical roundabout story is that of Henry Ford.

The Really Good Example of Henry Ford

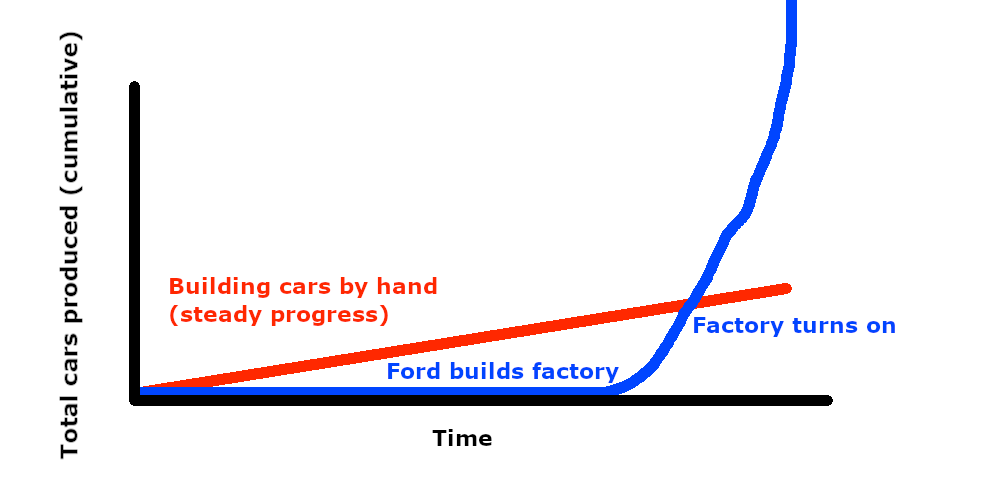

In the early days of automobiles, a workshop of skilled workers would build each car one at a time. This is the direct, or li, way to get cars. To become the largest auto manufacturer, Ford picked a different route.

He built the River Rouge factory, optimized to build consistent-quality cars at a high speed. An assembly line took the task of car-building, split it into microsteps, and optimized each one. Cars were not merely assembled, but their component parts were built in-house, and the components of those components. This extended to the raw materials when Ford bought rubber farms and iron mines. The goal: produce cars faster (in the long run) and make them cheaper (on average). The factory started by producing military equipment and parts, before eventually helping build the Model T and Model A cars.

Now, this was a very roundabout way to build cars, compared to just buying some parts and assembling them by hand. The key is the pursuit of indirect means. At first, the small workshops made more cars than River Rouge, because they had a head start. For several years, River Rouge built no cars (and in fact cost tons of money). Right until it opened, that is. After that, the direct path’s "head start" was obliterated.
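The head-start dynamic can be sketched as a toy model. This is only an illustration: every number below is hypothetical (not Ford's actual figures), the function names are made up for this sketch, and it ignores cost entirely, looking only at cumulative output.

```python
def cumulative_output(months, rate_per_month, setup_months=0):
    """Cumulative units built by the end of each month.

    Nothing is produced during the first `setup_months` months.
    """
    totals = []
    built = 0
    for month in range(1, months + 1):
        if month > setup_months:
            built += rate_per_month
        totals.append(built)
    return totals


def crossover_month(direct, roundabout):
    """First month (1-indexed) where the roundabout total exceeds the direct total."""
    for month, (d, r) in enumerate(zip(direct, roundabout), start=1):
        if r > d:
            return month
    return None  # the roundabout path never caught up in this window


# Hypothetical numbers, chosen only to show the shape of the two curves.
MONTHS = 60
# Direct path: a workshop builds 10 cars a month from day one.
workshop = cumulative_output(MONTHS, rate_per_month=10)
# Roundabout path: 36 months of zero output, then 100 cars a month.
factory = cumulative_output(MONTHS, rate_per_month=100, setup_months=36)

print("Workshop lead when the factory opens:", workshop[35] - factory[35])
print("Factory overtakes the workshop in month:", crossover_month(workshop, factory))
print("Totals after 60 months:", workshop[-1], "vs", factory[-1])
```

With these made-up rates, the workshop's three-year lead is gone within a few months of the factory opening. Shrink the rate gap or lengthen the setup period, and the crossover moves later or never arrives within the window, which is the risk discussed further down.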

A Big List of Examples

-

Google needs lots of computing power. When renting colocation servers got inefficient, they built their own infrastructure. The direct path of "rent some servers" was usurped by the roundabout path of "do it in-house", which was further optimized with further roundabout tactics. Giant fiber-optic investments, billion-dollar data centers, and custom hardware are the tools here. Cooling the computers could be done on boats, despite the added cost of setting them up. After all, small-percent savings at Google’s scale are worth millions of dollars. Cooling is so important and expensive, they went even more roundabout and built an AI to handle it.

Actually, Google is a fractally good example of roundabout strategy. They hire and pamper as many smart computer scientists and programmers as possible. Those employees optimize the entire company, and their problem domains. Paul Graham noticed this: "[Larry and Sergey’s] hypothesis seems to have been that, in the initial stages at least, all you need is good hackers: if you hire all the smartest people and put them to work on a problem where their success can be measured, you win." When you have enough money and apply enough intelligence, you can afford to pursue increasingly indirect means to your ends.

-

Spitznagel’s home turf is finance, and he at least has a non-garbage track record to show for it. And many examples of roundabout strategy come from business and finance, just like everything else. But the most roundabout company in finance is probably not his own.

Renaissance Technologies is legendary for their indirect means to the ends of profit. The direct path in the stock market is through typical trading techniques. Instead of doing that, RenTec gathered tons of data, built and tested proto-machine-learning-type models, built in-house infrastructure, optimized their taxes, and created the right incentives and culture and secrecy to hold onto their edges. RenTec could be called "the Google of quant finance", in this regard. [1]

-

You want more money. You could work a job to get money, or perhaps start a business. Starting a business is a less direct way to get more money, as you must prepare more before you make any money. (You also assume more risk, since your indirect plan might not actually make money.) But if you succeed, the business makes money more efficiently/effectively than you could at a job.

-

Many examples of roundabout strategy come from warfare, just like everything else.

Sun Tzu emphasized many ideas linked to roundabout strategy. Instead of rushing into war, you should try to maneuver politics to your advantage. Through the indirect means, you can "win without fighting", since the goal is about your nation (not the battles). On the tactical level, you shouldn’t just throw troops at the other side, hoping for a decisive victory. Instead, spy on the enemy and use that knowledge to win. The spies don’t fight on their own, and their work isn’t flashy or direct. Yet they are key to victory in the long run.

In the U.S. Civil War, the South had a large economy (through slavery) and bargaining power with Europe (through cotton). But the North had already made the investments of an industrial economy: railroads, telegraph lines, factories, steel, and almost everything besides cotton. The North had the indirect means, which could be deployed to the end of stopping the Confederacy. The South did not.

The Manhattan Project was an incredibly roundabout operation. It required years and years of work, mass-scale logistics, billions of dollars, and entire secret cities. All to build a weird weapon for use in the future, if it even got done before the enemies built theirs. Yet, the patience paid off, at least in the sense of shortening the war. "Mooooom, why can’t we just attack them with our troops noooooow?"

-

You want to do X. You could directly do X, but you’re not skilled enough for that yet. The roundabout strategy is to work on your skills first, and later use them to do X more efficiently. This is part of the idea behind education, especially in technical, trade, and high-skill fields.

-

In A.I. research, "The Bitter Lesson" tells us to favor a roundabout approach to computer intelligence.

The direct path for a computer to solve a human task is basic automation. Build what you can, and use the "sweet shortcut" of expert knowledge and strong priors (from prior knowledge). In the long run, as computing power becomes cheaper, the roundabout path works better. Build up a giant compute infrastructure, gather giant datasets, run giant arrays of GPUs for giant amounts of time, and train giant neural network models.

This approach takes longer to get running, compared to an expert system. It takes more money, data, compute, and bandwidth. If you don’t already have a hardware overhang, you would literally be waiting on Moore’s law to put your plan into action. But once you finally have the system up and running, it easily beats the competition.

-

The Effective Altruism movement runs into a trade-off between immediacy and roundaboutness. Some causes clearly save lives now, while some may build the infrastructure to save even more lives later.

One of the key ideas in (some of) EA is "longtermist" ethics. The idea is to seriously weigh the needs of potential future people [2]. This view could require roundabout strategies, like mitigating extinction risks to keep the future open. An extreme roundabout tactic is the long reflection, where humanity just sits and thinks about morality for millennia, "perhaps tens of thousands of years". That’s a long time for thinking, but if it helps us figure out better answers to moral questions, it could make the rest of the future much better.

When Roundabout Goes Wrong-about

The indirect route is not always best. You can’t prepare forever; you must eventually attack. And you still have to keep track of the short term, lest something closer in time block you from moving further.

-

Tesla builds cars. They optimize their cars like machines, they use machines to build the cars, and they treat their factories like machines. "The machine builds the machine which builds the machine." If you improve a second- or third-order process (the factory), you can achieve huge gains in the first-order process (the car output) [3]. This is the strategy of Ford’s factory, to the Nth power.

In the long term, more automation is probably the way to go. In the short term, Tesla needed to ship some damn cars. Neglecting the short term created urgent, important problems, which had to be dealt with immediately.

-

You want more money. You could work a job to get money, or perhaps start a business. However, you still need to eat and make rent. Your business will take a while to make money, if it ever does. You could work a side job, keep your expenses low, build a runway of savings, seek venture capital funds, and/or launch your product faster. But if you don’t have some short-term source of money, you’ll run out of money and possibly end up homeless. That would put a helluva dent in your long-term plan to get money.

-

You want to do X. You could directly do X, but you’re not skilled enough for that yet. You work on your skills first, but get sidetracked by that whole process. You forget why you were developing the skills at all, you move too slowly, and eventually you might miss the boat on X. [4]

-

Humanity does the long reflection, but also fails to defend against extinction risks. An asteroid strikes and wipes us out. We literally die by analysis paralysis.

Wait, isn’t this just X?

The point of using the word "roundabout" is to give a quick name to a specific concept. The concept is linked to other key ideas in the rationalist space, but it is not identical to them.

Is "roundabout" a fancy rewording of instrumental goals, as opposed to terminal goals? Well, no. If your terminal goal is personal happiness, one instrumental goal might be making money. Recall the example above: you could work a job or start a business to make money. Starting a business is a more roundabout strategy than working a job, but they both work towards an instrumental goal (money). An instrumental goal could be more or less roundabout than another, but making something more roundabout won’t always make something more or less instrumental.

Is "roundabout" another word for long-term thinking, as opposed to focusing on the short term? Well, no. Roundabout strategy is a strategy, not a goal. You can certainly have long-term goals and work towards them, but that isn’t roundabout on its own. A relevant example is the field of longtermist ethics. You could directly work on long-term threats to humanity, or write about the philosophical ideas needed to guide the far future. These are both obviously longtermist in their final goals, and one might be more effective than the other. But the second method is more indirect, and thus more roundabout.

Is "roundabout" simply the delayed gratification thing all over again? Well, no. At some point, you have to turn the factory on, make the profit, solve the riddle, and cash out. And, as with the entrepreneurship example, there are risks with longer time horizons and risky plans. Perhaps the one-marshmallow children, in the classic experiment, didn’t think waiting was worth the risk.

Conclusion

The roundabout concept is a useful abstraction for discourse. It intuitively combines planning, risk, and time, and creates a focal point of discussion. Just having the concept on the table suggests more ideas to be thought of. Think back to the Effective Altruism examples. Maybe some EA projects are so capital-intensive that, like Ford’s factory, you need to take on risk and sink lots of capital and time. Otherwise, the goal wouldn’t even be possible. With the "roundabout" concept, these discussions can be more explicit, and people’s stances clarified. "I think your approach is too roundabout, because you are underestimating the risks of your long-term plan."

Naming a concept creates a chunk of the concept. "Roundabout" is quicker to remember than "let’s build this thing to help us do the other thing better". It becomes a tool in your thinking, a lens for judging ideas, and a building block of larger plans. My hope is for the roundabout concept to take a seat at the table of decision-making. And, unlike some concepts, roundabout strategy offers ways to create new strategies, instead of "merely" ruling out the bad ones. "How do we trade stocks faster? Well, what’s a roundabout way to get the orders in… maybe a light-speed cable?"

[1] The entire field of quant finance is known for being cartoonishly roundabout. If you want to make money trading stocks, a real roundabout strategy is to have a fiber-optic cable running to the exchange. It costs 9 figures in the short term, but gives a nice speed buff later. The game of finance certainly has the best example of power creep in history.

[2] And by "people", I really mean "all potential future morally relevant entities". So animals and ems would be weighed.

[3] I saw a diagram showing this link between optimization and abstraction. It was in an article explaining this: small gains at the "top" of the abstraction pyramid cascade into big gains at the bottom. I don’t remember where I saw this, but I will edit this article to link it, if someone can find it.

[4] I’m afraid this will happen with me, in learning the skills for A.I. safety. What if I don’t have a good way to contribute? What if I do, but I don’t contribute in time? The good news is that I haven’t gotten distracted by a large roundabout plan. "That’s my secret, captain: I’m always distracted..."

1 comment


comment by Nicholas / Heather Kross (NicholasKross) · 2023-07-12T23:37:29.326Z

To clarify the "Bitter Lesson" example: the non-roundabout "direct" AI strategy is to use the "sweet shortcut" (h/t Gwern), by using existing human expert knowledge and trying to encode that into a computer. The roundabout strategy is to build a massive computing infrastructure first, which scaling requires. Even if no single group actually executed a strategy of "invent better computers and then do ML on them", society as a whole did, via the compute overhang.