Understanding Gödel's Incompleteness Theorem

post by Rafael Harth (sil-ver) · 2022-04-06T19:31:19.711Z · LW · GW · 1 commentsContents

1. Languages and Interpretations 1.1 Languages 1.2 Interpretations 1.3 Validity 2. Increasing Power 2.1 Clarifying 'proofs' 2.2 Axioms 2.3 Complete Theories 3. Decidable and semi-decidable problems 4. That's basically it, but let's also look at set theory a little... None 1 comment

EDIT 2023/07: I recommend reading part one of this book review [LW · GW] instead (or at least before) this post; it does a better job explaining formal systems.

This is a post I wrote back in September of 2020 and am now publishing due to the changed incentives [LW · GW]. The idea was something like "create a short document that everyone who knows math but has never studied meta logic can read to obtain a complete understanding of what Gödel's (first) Incompleteness Theorem means". I thought this was worthwhile because said theorem may just be the most criminally misunderstood result in all of mathematics. (And also because writing tutorials like this is a great way to make sure you get it yourself!) I gave up on it at the time because it was getting too long. I've now taken out the part where we prove the theorem, and the post still needs 5000+ words, just for explaining what the heck the theorem even says.

... so I don't really expect many people to be interested, but hey, it's still easier than reading a textbook, so if for some reason you always wanted to know what the incompleteness theorem really means, or you already do know but want to test/refresh your understanding, perhaps this post is for you?

As an entry test, see if you can look at the statement below and understand why it is true, regardless of what and and mean. If you can, you probably know enough math to proceed. (If not, you most likely don't, alas.)

1. Languages and Interpretations

The most important step to approach any of this Metalogic stuff is to understand that there are two fundamentally different things: languages and interpretations.

1.1 Languages

The language world is where formulas like the one above are living. Formally, one starts with a set of symbols and defines specific rules that determine which combinations of symbols constitute proper formulas.[1] The following symbols (plus arbitrarily many variable names) are always present:[2]

(The post assumes you know what they all mean.)

These are enough for some formulas like , but there are also richer formulas such as the following:

This formula will be our running example, so we call it . It clearly contains things that are not in our list of symbols above. We call these new things constants, predicates, and function symbols.

- Constants denote specific elements ((from what set? that depends on the interpretation, see upcoming section)), like 0.

- Predicates are relations that can be true or false, like .

- Function symbols are things that take elements and turn them into other elements, like and and .

Formally, a language is the set of all such additional symbols one wants to allow. Thus, a possible language for would be

The language does not know what the constants refer to or what the predicates and function symbols do, but it does know how many places they have, i.e., how many elements they are applied to; this is the number in the index, so 2 for and 1 for . From this alone, it follows that the above is a proper formula, whereas and are not.[3] Thus, the language fully describes the syntax: it defines exactly what strings do and do not count as formulas.

1.2 Interpretations

Given that languages cover syntax, interpretations are about semantics. Let's begin with an example: in the case of the language above, a possible interpretation would be

Let's unpack this. First, notice that this is a tuple with two elements, and the rest. This corresponds to the fact that an interpretation always does two things, namely

- define a domain, which is the set of all elements the formulas talk about; and

- define every constant, function symbol, and predicate (in the above, this is done via the symbol).

Let's call this interpretation . Interpretation says that

- The domain is the set of natural numbers, .

- The constants, function symbols, and predicates are assigned as follows:

- the constant denotes the number .

- the predicate denotes the less-than relation on .

- the function symbol denotes the successor function on .

- the function symbol denotes addition on .

- the function symbol denotes multiplication on .

In this case, I've cheated a bit by making the constant and predicate and two of the function symbols (i.e., and and , ) look almost the same as the element/relations in/over (i.e., and and ) that already have precise meanings. Formally, they could be assigned any elements/relations whatsoever. For example, we could assign the number 421337 and the relation and end up with an equally valid (though incredibly confusing) interpretation. (But we could not assign to since is not an element in the domain .)

Given interpretation , our formula

says that, 'for any natural number , there exists a natural number such that [if is greater than 1, then is greater than ]'. This happens to be true.[4]

In general, given an interpretation, every closed formula in a language is either true or false. 'Closed' meant that every variable is 'bound', i.e., belongs to a or quantor, as in the formula above. There are also formulas like where some variables are completely undefined; those are called 'open' formulas. Open formulas don't have truth values since they depend on what the unbound variables refer to. Closed formulas are also called sentences, and that's the word we'll use from now on.

This point is good to internalize, so I'll repeat it: given an interpretation, every sentence is either true or false. There is no ambiguity; one can't write down a sentence and specify an interpretation such that the truth value is undefined.

1.3 Validity

A formula usually has a gazillion different interpretations, and in general, the truth value of a sentence depends on the interpretation. For example, our example formula happens to be true under interpretation , but it is false under the interpretation that is like except that denotes the greater-than relationship on .

So in general, the value of a sentence depends on the interpretation. However, there are some sentences which are true under every interpretation, such as . Such formulas are called valid. This concept is really important!

In Highly Advanced Epistemology 101 for Beginners [? · GW], Eliezer asks what logic is all about and answers that it is about proving which conclusions follow from which premises. This is subsumed by the notion of validity:[5] if some sentence always implies some other sentence , then the sentence is valid. In general, since every implication is just another sentence, understanding which sentences are valid is enough to understand which implications always hold.

A nontrivial example using one-place predicate symbols and and is the one from the intro:

If you read these three predicates as saying that something is a kitten, is little, and is innocent, respectively (note that this doesn't assume anything at all; we're only giving cute names), then the above sentence says that

Eliezer's point was that the above is always true, and this is the power of logic. You can find plenty of interpretations that make the two premises false, but no-one can find an interpretation where the premises are true but the conclusion is false. The corresponding formal statement is that the above formula is valid; it is true under every interpretation.

Thus, logic is all about finding valid sentences. And because of this, logic offers an in-built tool to do this. This tool is called a proof system.

(The way it works is roughly that there is a set of precisely specified steps: one begins with a valid sentence like and modifies it bit by bit, using only steps that preserve validity. If one has transformed it into a sentence , this constitutes a proof for .)

The crucial property here is that a proof implies validity. This property is called soundness. Note that, if soundness did not hold, there would be at least one not-valid sentence one could prove, i.e., one could prove a sentence that is false under at least one interpretation. This would be a poor state of affairs, and we probably wouldn't want to call the thing a proof in the first place.

Fortunately, soundness does hold: whenever one can prove a sentence, it is valid.

This begs the question of whether the converse holds as well. Is every valid sentence provable?

Given all of the fuss about incompleteness, you might guess that the answer is no, that every provable sentence is valid, but not every valid sentence is provable. Wrong! Completeness holds as well! Every valid sentence is provable!

Taking soundness and completeness together, we can phrase this as 'a sentence is valid if and only if it is provable'. In particular, holding everything else constant, the fact that a sentence is not provable is always a feature.

The theorem stating that completeness holds is called Gödel's Completeness Theorem. Yes, really. This is why I've called the theorem misunderstood; people who don't know math and talk about it usually think the incompleteness theorem says the opposite of the completeness theorem. In fact, it says something else & more specific; we'll get to that now.

2. Increasing Power

If logic can only prove valid sentences, its utility seems limited. As we have seen, our example sentence

is true under interpretation but false under many other interpretations. This is an apt time to mention that and are not arbitrary examples:

- is called the language of arithmetic (for the obvious reasons)

- is called the standard interpretation of the language of arithmetic (again, for obvious reasons)

(If you've forgotten what they look like, go back and look it up.)

So, what if we decide that is special and we care about proving that a sentence is true under in particular, even if it is not valid? Can we do that?

2.1 Clarifying 'proofs'

In some sense, the answer is obviously yes.

- We can look at the first part, , and conclude that has to be at least 2 (otherwise the full sentence always comes out true)

- We can choose , set (note that ), and argue that

Thus, the sentence is always true. No serious mathematician will doubt this proof.

However, we come up with a 'proof' only because the concept of a 'proof' is overloaded. We use the term 'proof' to refer to the following two things:

- The general, not entirely well-defined notion of a 'proof', something like 'anything that conclusively argues for a proposition' (e.g., the above)

- The rigorously specified notion of proof within a formal language

The proposition that our sentence is true in is provable in the first sense, but (as of now) it's unclear how we would prove it in the second. To avoid misunderstandings, we will henceforth talk about internal proofs whenever we refer to the second kind.

The results about provability (the soundness theorem and Gödel's completeness theorem) are about internal proofs. Thus, the practical implication of these two theorems is that they allow us to talk about internal proofs without knowing anything about how the internal proof system is set up (by arguing 'valid' rather than 'internally provable'). For example, if we look at the kitten sentence:

all we need is to verify that it is valid. Any sentence is internally provable if and only if it is valid; therefore, the kitten sentence is also internally provable. This trick always works. Throughout the post, I will phrase everything in terms of 'valid' or 'not valid', but always keep in mind that this concept is equivalent to 'internally provable' and 'not internally provable'.

2.2 Axioms

Having gotten this distinction out the way, let's rephrase the question: can we internally prove that our sentence is true under the standard interpretation ?

As of now, the answer is negative since the sentence is not valid. Our goal is thus to introduce a mechanism to make it so that more sentences become valid.

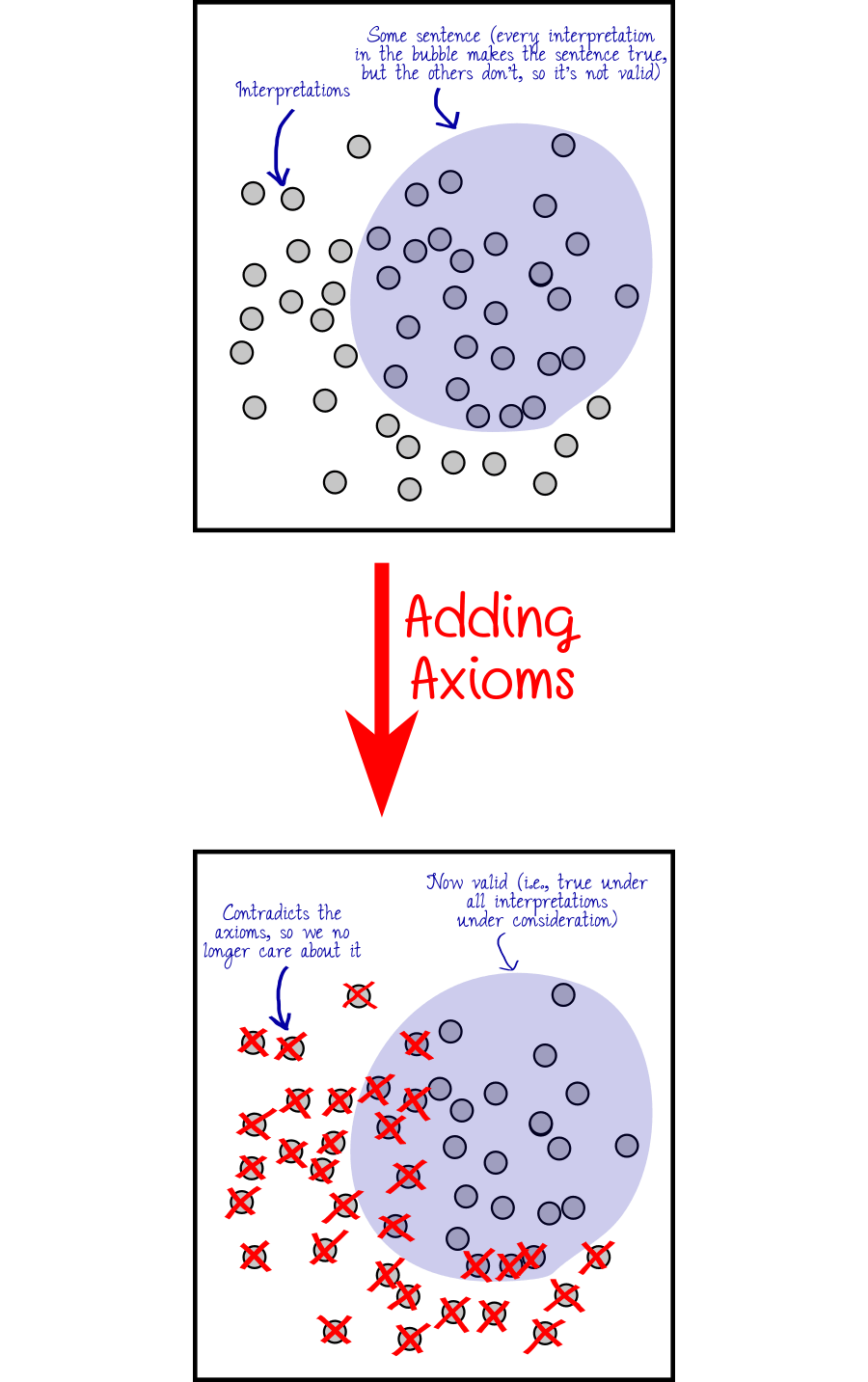

This mechanism is to restrict the class of interpretations by introducing a set of axioms. That is, we write down some sentences, say

and subsequently, only consider the interpretations that make all true. Since there are now fewer interpretations we care about, it follows that it has become easier to be true under every interpretation, and thus more sentences are valid. Here is a graphical illustration of this mechanism:

It's worth pointing out that calling a sentence that is [true under every interpretation that agrees with the axioms] 'valid' is non-standard in the literature (whereas everything else in this post only diverges from the literature if I've made a mistake). A possible alternative approach is to, for every sentence , ask whether the sentence is valid, where is the conjunction of all the axioms . This yields the same results (as in, for every sentence that we call valid given the axioms, the sentence is valid in the classical sense). But, I mean, yuck.

Still, this alternate view is useful to see the following: due to completeness and soundness, we still have the property that every valid sentence is internally provable and vice versa. However, 'internally provable' now entails internally proving rather than .

Given a set of axioms , we call the set of all sentences that are valid given (i.e., the set of all sentences that are made true by every interpretation that makes all of true) the theory of . Thus, a theory always contains the 'classically valid' sentences but may include a lot more, given how strong the axioms are.

Let's go back to our two axioms above:

These sentences certainly rule out many interpretations. For example, now has to behave as we expect concerning , and there is at least some of the intuitive connection between and . Are these enough such that our sample sentence

is part of their theory? Sadly, no. If you're up to do an exercise, find an interpretation that agrees with both axioms yet makes false.

One possibility is the interpretation that is like except that , i.e., the function symbol simply also implements multiplication. This certainly fulfills and since neither of them talks about , but doesn't fulfill since the right side is now never true ( is always false in the standard interpretation).

Since such an interpretation exists, is still not valid. By soundness, we know that it is not internally provable, either.

2.3 Complete Theories

Given a set of axioms, we call their theory consistent if at least one interpretation makes the axioms true.[6] It is called complete if at most one interpretation makes the axioms true. Thus, it is consistent and complete if the axioms precisely nail down one interpretation.[7] As we have seen, the theory based on the axioms and was not complete since makes true, and we have found at least one interpretation that makes false yet also agrees with the axioms (in the exercise).

Recall that our initial problem was that

- Logic can only internally prove things that are true under every interpretation

- Therefore, considering a specific interpretation (like the standard interpretation ), lots of sentences will be true under that interpretation but not true under every interpretation, thus won't be valid, thus not provable.

Our solution was to narrow the space of interpretations through axioms. If we do this so successfully that only one interpretation remains, it immediately follows that the notion of [truth under that interpretation] and [validity] coincide.

Thus, saying that a theory is complete is equivalent to saying that, for every sentence , either or is valid and hence internally provable. This would be the ideal case (provided the theory is also consistent so that we can't just prove everything) since now internal proofs are maximally powerful.

Can we achieve this for ? Is there a set of axioms that makes true such that their theory is complete (so that no other interpretation makes them true)?

As stated, the answer is trivially yes; just take all sentences true under as axioms.[8] But, of course, that's silly; we think of axioms as just a few statements that can prove a lot of things. We can sharpen the requirement by demanding that the set of axioms be finite. In fact, we are a bit more generous and merely ask that the set of axioms be Turing-computable, meaning that for any sentence, we can deterministically check whether or not it is an axiom. This allows an infinite set of axioms, as long as it's easy to describe what the set looks like. (We'll see why this captures what we care about in the next section.)

Thus, let us rephrase the question: is there a Turing-computable set of axioms made true by such that their theory is complete?

Sadly, the answer is no. This, at last, is where the impossibility results come in. To formulate it precisely, we need one more ingredient. One defines a set of axioms called the Robinson Arithmetic, also denoted , which includes and along with a bunch of other common-sense rules (basically the rules about "how numbers should work"). All of the axioms in are true under the standard interpretation , so any attempt to nail down also has to include .[9] And now...

Theorem. (Gödel's first incompleteness theorem.) There is no Turing-computable set of axioms whose theory includes and is both consistent and complete.

So Gödel's (first) incompleteness theorem is about the difficulty of nailing down the standard interpretation. (Or, to be specific, nailing down any interpretation; it's equally impossible to nail down non-standard interpretations of the language of arithmetic, assuming the set of axioms you use to do this include .) That means it's specific to arithmetic; it only applies to theories that include . It may still be possible to nail down a single interpretation for sets of axioms that don't include numbers, at least as far as I know.

To get more into the details of the axioms and what the non-standard interpretations look like, I refer you to the aforementioned sequence [? · GW] from Eliezer.

There's also a second incompleteness theorem, but it's much less interesting, so we won't talk about it. Instead, some more on what the first one means. People sometimes say things like "arithmetic is not decidable". We'll get to that now.

3. Decidable and semi-decidable problems

When mathematicians talk about a 'problem' in this context, they always mean "an infinite set of problems such that (a) the answer to each problem is either 'yes' or 'no', and (2) the set contains arbitrarily large instances of problems". Here are two examples:

- Given an arbitrarily large natural number, decide if it is a prime number.

- Given an arbitrarily long program code, decide if the program, if run, eventually halts.

In this setting, we call a problem decidable iff there exists a single, fixed procedure that can take arbitrary instances of this problem and always behaves like this:

- If the correct answer is 'yes', it outputs 'yes'.

- If the correct answer is 'no', it outputs 'no'.

For example, the first problem is decidable because we can write a python routine that takes some number as input, checks whether divides for some , outputs NO if so, and YES if not. Note that this is just one routine, one short block of code, that can handle arbitrary instances of this problem.

The second problem is not decidable. The obvious way one would try to solve it is to write a routine that always just executes the input program and outputs YES if it halts. This is great if it halts -- but if it doesn't, we have the issue that we can never say 'ah, it will never halt'; maybe it just takes a few more steps. Thus, such a routine would not behave as described above: if the correct answer is 'no', it would run forever and never output 'no'. Or, if we wrote a routine that just gives up and outputs 'NO' eventually, there would be some input program that does halt for which it gives up too soon, and then it would not behave as described above for that input.

(If one restricted the set of problems to only consider a finite number, it would immediately become decidable; just write a procedure that outputs 'NO' if the program hasn't halted after 3^^^3 steps. This is why such problems always consider sets with arbitrarily large instances.)

Furthermore, we call a problem semi-decidable iff there exists a single, fixed procedure that can take arbitrary instances of this problem and always behaves like this:

- If the correct answer is 'yes', it outputs 'YES'.

- If the correct answer is 'no', it does [anything but output 'YES'].

In particular, the second problem is semi-decidable because we can write a routine that takes the input program, runs it (not easy -- you have to implement an entire compiler -- but doable), outputs 'YES' if it halts, and otherwise just keeps running. Note that this is just one routine (albeit a lengthy one) that can handle arbitrary instances of this problem.

Interesting stuff, right? But now, let's apply it to logic. Here's the problem we care about:

- Given an arbitrarily long sentence and Turing-computable set of axioms , decide whether is valid given .

Is this 'problem' decidable? Is it semi-decidable?

The answer to the second question is "yes": the problem is always semi-decidable.[10] This is so because all now-valid sentences are internally provable (Gödel's completeness theorem), we can tell whether something is an internal proof or not (this just requires knowing what our axioms are, which we do because is Turing-computable -- this is why we chose that requirement!), and all internal proofs have finite length. Thus, we can just write one procedure that searches through the set of all possible proofs, ordered by length, and outputs YES if it finds one for our input sentence. If there is such an internal proof, then the proof has some length, so it must be found at the point where the procedure checks all internal proofs of that length.

However, we can never confidently say 'it's not internally provable' because, again, we don't know how long the shortest internal proof is, even if we know one exists. They can get very, very long for large sentences, and we just don't know how long.

So, in general, this problem is semi-decidable but not decidable. However, if the theory of is complete, then it is also decidable! This is so because:

- If is complete, for each sentence , either or is valid.

- Thus, either or is internally provable. (Gödel's completeness theorem.)

- Therefore, we can write a procedure that, given any sentence , searches for internal proofs for and in parallel and outputs 'YES' and 'NO', respectively, if it finds one. Since one of them is always internally provable, this always works.

Since no theory of a Turing-computable set of axioms (that includes ) is consistent and complete (Gödel's first incompleteness theorem), it follows that there is always at least one sentence such that neither nor is valid. Therefore, the problem "given an arbitrary sentence, is this sentence true under the standard interpretation?" is only semi-decidable. (This is the important computational consequence of the incompleteness theorem.)

4. That's basically it, but let's also look at set theory a little...

You may have heard claims like 'set theory provides a formalization for all of mathematics' or something; let's see what's up with that. We will consider Zermelo–Fraenkel set theory with the axiom of choice (ZFC). Set theory is simply a language (as defined in this post), ZFC is a set of axioms (as defined in this post), and the possible 'different versions of mathematics' are the different interpretations (as defined in this post) that make the axioms true.

Concretely, the language of set theory is as follows:

It contains no constants, no function symbols, and a single two-place predicate. (Which we will henceforth call rather than , so it looks more familiar.)

(You may now object, "but we use other symbols in math??!". True, but it's possible to write every statement without those symbols. You can take a statement like and translate it into a statement entirely made of the standard logical symbols plus .)

Ideally, the interpretation of this language would be such that

- its domain consists of all sets; and

- the relation describes what sets contain each other.

Absent any axioms, however, there are countless different interpretations, among them ones that look entirely absurd. Here is one:

In this interpretation, there are exactly two sets (the fact that we call them 'sets' doesn't change anything, it's just what one does when discussing the language of set theory) called and , and the element-of relation is always true, i.e., we have and and and .

This is not what we imagine the universe of all sets to look like, so we need axioms. ZFC has ten (or nine[11]). Here are two of them:

This says that there exists an 'empty set', a set such that is false for every set . It's called the axiom of existence.

This says that if two sets behave the same with regard to set membership (i.e., for any third set, either they both contain it or they both don't contain it), then they're the same set. This is called the axiom of extensionality.

If you're up for another exercise, you can answer the following question: does either of the 2 axioms rule out the interpretation I've postulated above?

Both of them do! For the first, it's because neither nor is the empty set ( isn't since , whereas isn't since ). For the second, it's because and behave identically with regard to set membership (because and ), yet in the interpretation.

However, there are still plenty of absurd interpretations left. For example, if we change to be the relation such that and but and , the interpretation agrees with the axioms again, even though it still only has two sets rather than infinitely many, and still has a set containing itself.

By themselves, these two axioms don't seem very impressive, so let's do just one more:

This axiom is a bit harder to read, but it says that, given any two sets and , there exists a set that contains and but no other set. This is called the axiom of unordered pairs.

These three axioms are certainly not enough, in that there are still plenty of nonsense interpretations left. For example, none of the axioms say that a set can't contain itself, and for another example, none of the axioms imply the existence of any set with more than two members. However, they are already enough to rule out interpretations with finite domains; any interpretation that satisfies the axioms must have infinitely many elements. If you want, you can think about why and look it up in the footnote,[12] but this is optional.

Okay, enough with the examples, let's get to the interesting question. If we include all ten axioms, is ZFC complete? Does it nail down one particular interpretation?

The answer is no, and this is what people usually mean when they say things like 'there are several possible versions of mathematics'. The reason is that the ZFC axioms are enough to prove (even though they don't have the symbols , etc.). Thus, Gödel's incompleteness theorem also extends to "standard math", and it says that there will always be sentences that we can't prove true or false because haven't nailed down a single interpretation.

An example for such a sentence is the claim that there exists a set whose size is in between and (or more generally, in between and for any infinite set ). If this sentence is , then neither nor is valid given ZFC, which is to say, there exists an interpretation that makes true, and another one that makes true. See also Wikipedia's list of statements independent of ZFC.

One could, of course, add additional axioms such that either or becomes valid. There are many such suggestions out there. But (due to Gödel's first incompleteness theorem), there will always be another sentence such that neither nor is valid; one can never get them all.

One particular interpretation is the one under which a set exists if one can internally prove that it exists, i.e., if it exists across all interpretations. This is the 'minimum' interpretation. Unfortunately, there is again no way to nail down the minimum interpretation... nor any other one.

This is the kind of thing I won't do in detail in this post. Instead, I'm assuming you either know the details or have an intuitive grasp on what does and doesn't constitute a formula. To nonetheless provide an example as to what is meant, the strings and are not proper formulas, whereas is. ↩︎

If one wants (but this is not even always done in the literature), one can drop many of these symbols as they can be substituted by other symbols. The obvious one is since one can write instead of . Furthermore, one can write instead of , and even instead of and instead of for some sentence . A minimal set of symbols looks like this:

Restricting the set of symbols is useful when one makes proofs that involve looking at all available symbols separately. (One can then still use the other symbols but consider them abbreviations rather than formal parts of the system.) However, we won't do any such proofs in this post. ↩︎

Note that writing the function symbol in between two terms is a simplification; formally speaking, should be , and similarly with . ↩︎

In fact, there exist many such -- any number greater than 2 does the job. ↩︎

Well, almost. Things get complicated if we're allowing infinite sets of sentences, but this is an issue we can and will just ignore for this post.

The reverse is also true: the concept of implication subsumes that of validity. In particular, the validity of reduces to the implication . ↩︎

The case where no interpretation makes all axioms true is uninteresting; now every sentence vacuously has the property that it is true under every interpretation that agrees with the axioms, which means that the theory of that set of axioms is just the set of all sentences.

Saying that at least one interpretation makes all axioms true is equivalent to saying that the axioms don't contain a contradiction, hence the term 'consistency'. ↩︎

This property is also called 'categorical', but we don't need the extra term. Note also that it's not literally just one interpretation, but one under a boring equivalence relation that takes care of interpretations that 'do the same thing'. ↩︎

This theory is called 'true arithmetic'. ↩︎

Technically, it doesn't need to include , it just has to be able to prove all axioms in so that its theory includes . But this is no meaningful difference; if it can prove all of , it may as well include it. ↩︎

This has interesting consequences. Since set theory is simply a formal language (last section), one can write down a single routine that goes through all possible proofs and spits out the (formula, proof) pair whenever it has found one. If we keep running this procedure, then, for any valid sentence, it will eventually find a proof of that sentence. (It will even find the proof of minimal length.) This means that this one procedure can, in some sense, 'solve' all of mathematics.

It would, however, take quite a long time. ↩︎

The reason why it's not clear is that the axiom of existence is technically redundant; it can be proved from the remaining axioms. Thus, one may or may not include it; the resulting theory is the same. ↩︎

Here is one way to prove the claim. Suppose we have a sequence of sets such that

- all sets are different

- is the empty set

- each set for contains precisely set and nothing else

Then, using the axiom of unordered pairs, we can construct a new set, let's call it , that contains precisely and nothing else. (Note that the and in the axiom are certainly allowed to refer to the same element.) Now is different from all the previous sets because it contains precisely , whereas each set in the sequence contains precisely , and by assumption. Thus, we can extend the sequence to .

Furthermore, we can get this process of the ground using the fact that there is an empty set, leading to the initial sequence .

In regular notation, this proves that there is an infinite sequence of sets of the form , , , , , etc. Note that these all contain precisely 1 element (except for which contains 0).

Of course, this is not an internal proof; it's a regular proof done while looking at the interpretation from the outside. ↩︎

1 comments

Comments sorted by top scores.