Some ML-Related Math I Now Understand Better

post by Fabien Roger (Fabien) · 2023-03-09T16:35:44.797Z · LW · GW · 4 commentsContents

SVD Is Decomposing a Matrix Into a Sum of Simple Read and Write Operations There Is Exponentially Much Room for Almost Orthogonal Vectors in High Dimensional Space Layer Normalization Is a Projection Appendix: Solutions to the Simple Questions Using the Geometric Interpretation of SVD None 4 comments

Here are some simple Math facts rarely taught in ML & Math lectures:

- SVD is decomposing a matrix into a sum of simple read-and-write operations

- There is exponentially much room for close vectors in high dimensional space

- Layer Normalization is a projection

SVD Is Decomposing a Matrix Into a Sum of Simple Read and Write Operations

Thanks to zfurman, on EleutherAI, which told me about the core idea.

Let's say I want to understand what a weight matrix does. A large table of numbers isn't really helpful, I want something better.

Here is a linear transformation which is much easier to understand than a table of numbers: (where and are unit vectors, is a non-negative scalar, and is the dot product between and ). is reading in the direction, and writing in the direction with a magnitude scaled by . I can understand this much more clearly and do nice interpretability with it. Therefore, if I know things about my embedding space (for example, using the logit lens), I can tell what is doing.

Sadly, not all linear transformations can be expressed in this way: it's just a rank-1 transformation, so there is no hope of capturing something as complex as a usual linear transformation.

But what if I allow myself to sum many simple transformations? Then I could just look at each operation independently. More precisely, here is a wishlist to understand the big matrix of the transformation :

- where and are unit vectors, and is a positive scalar - it's a sum of simple transformations;

- for all - no double read, always read in orthogonal directions;

- for all - no double write, always write in orthogonal directions;

- - the number of simple transformation summed should be as small as possible, because that would mean less individual transformation to inspect (and I can't hope for fewer than operations, since the rank of the sum of rank-1 linear transformations is at most ).

In terms of matrices, this is where and are semi-unitary matrices (orthogonal matrices when they are square matrices). This is exactly SVD.

Understanding matrices & SVD this way helped me find a geometric intuition behind some basic properties of matrices. The questions below can also be quickly answered by "doing the math", but I think it's interesting to have a geometric understanding of why these are true.

- What is a geometric interpretation of a symmetric matrix? Why is the inverse of an invertible symmetric matrix always symmetric?

- What is the geometric interpretation of transposing a linear transformation ?

- Why are the eigenvalues of and why are its eigenvectors ? Why are the eigenvalues of and why are its eigenvectors ?

Feel free to share yours in the comments!

There Is Exponentially Much Room for Almost Orthogonal Vectors in High Dimensional Space

According to this paper, the number of almost orthogonal vectors you can put in dimensional space is at least where all pairs of vectors have an angle of at least between them (and ).[1]

Ignoring the , and using (the number of dimensions of the embedding space of GPT-2 XL) it means you can fit at least 3000 vectors with pairwise cosine similarities smaller than 0.1 and vectors with cosine similarities smaller than 0.2. Using (the number of dimensions of the embedding space of GPT-3), you can fit at least vectors with pairwise cosine similarities smaller than 0.05 and vectors with cosine similarities smaller than 0.1.

This means you can get a lot of vectors in your embedding space if you allow for relatively small cosine similarities. But this does not hold for tiny cosine similarities (e.g. 0.01 for , which gives a lower bound of 2 using the formula above). I'm not aware of a lower bound better than for tiny angles. Therefore, for a neural network to use this space to fit independent concepts, it needs to be resilient to many small-but-not-that-small conflicts.

Layer Normalization Is a Projection

Thanks to Lawrence Chan for telling me about this.

Layer Normalization is a normalization layer often found in LLMs. People usually write it as (followed by a scaling and a bias term[2]), which is confusing because we are taking the expected value and the variance of a vector, which is not a common geometric operation.

But it can easily be expressed in terms of two successive projection: is simply , the projection on the hyperplane orthogonal to the vector , .

Then, where is the projection on the unit sphere.

This gives us .

Therefore, LayerNorm is just projecting on the hyperplane orthogonal to , and then projecting again on the unit sphere (of the hyperplane orthogonal to ), times . These two projections are the same as projecting a point to its closest point on the unit sphere of the hyperplane orthogonal to [3].

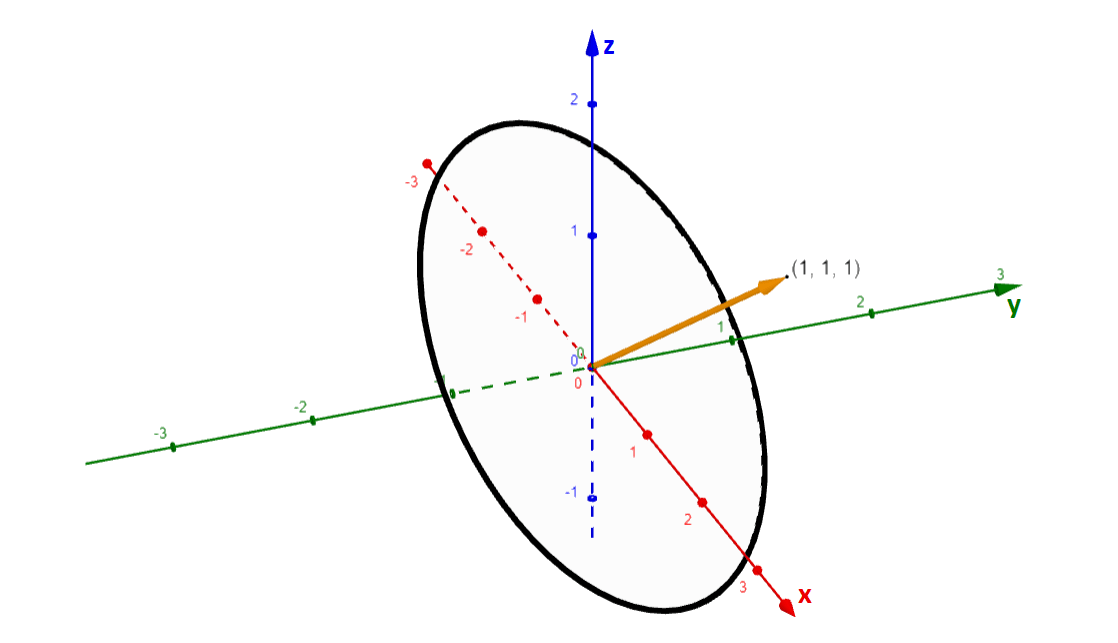

For example, in dimension 3, LayerNorm is the same as projecting a point to the point of the black ring below closest to it.

This helps me see geometrically what is: it's the unit sphere of the hyperplane orthogonal to .

This also gives me a geometrical intuition of why it might help (though the usual formula works just as well), though it is quite speculative. First, projecting on the hypersphere clearly solves the problem of exploding or vanishing activations. Second, the projection on the vector pushes you away from the quadrants where ReLU is boring. Indeed, the vector points towards the quadrant where all coordinates are positive, where ReLU = id, and points away from the quadrant where all coordinates are negative, where ReLU = 0. In all other quadrants, ReLU does something a bit more interesting: it zeros out some coordinates and leaves others intact.

What is missing from this geometrical point of view: It's hard to understand high dimensions. In particular, it's not easy to see that the projection on the hyperplane does almost nothing to high dimensional vectors: it only changes one coordinate among thousands. Therefore, the cosine similarity between a vector and is almost always very close to 1. Indeed, if are the coordinates of in a basis which has the vector (normalized) as first vector,

Appendix: Solutions to the Simple Questions Using the Geometric Interpretation of SVD

- What is a geometric interpretation of a symmetric matrix? Why is the inverse of an invertible symmetric matrix always symmetric?

A symmetric matrix is, according to the spectral theorem, a matrix of the form where U is an orthogonal matrix. Therefore, a symmetric matrix is a matrix which can be decomposed as a sum of transformation of the form . A symmetric matrix is the matrix of a linear transformation, which writes where it reads! Therefore, inverting a symmetric matrix is easy: if the are all non-zero, just read where you just wrote (in the direction of ), scale by , and then write back along The transformation I just described to invert the action of a symmetric matrix also writes where it reads, so the inverse of a symmetric matrix is also symmetric. (You can check, that because there is no double read nor double write, what I described is legit, though the formal proof with usual linear algebra is much faster than the formal proof using geometry.)

- What is the geometric interpretation of transposing a linear transformation ?

, therefore M transposed is the same as M, except it reads where M writes, and writes where M reads (the simple rank-1 operations are "swapped").

- Why are the eigenvalues of and why are its eigenvectors ? Why are the eigenvalues of and why are its eigenvectors ?

is the composition of two linear transformations. A first one (the transposed of M, see above) which reads along scales by and writes along , and a second one which reads along , scales by , and writes along . Therefore, reads along , scales by and writes along . Therefore, it is symmetric (it reads where it writes), and the are eigenvectors with eigenvalues because if it read , it will write (there is no double read nor double writes). The same reasoning works for .

- ^

Toy Models of Superposition states something similar: "It's possible to have many "almost orthogonal" ( cosine similarity) vectors in high-dimensional spaces. See the Johnson–Lindenstrauss lemma." But this lemma does not directly state this property, and rather tells us that there is a linear map to a space with dimensions which almost preserves L2-distances between points. I prefer the theorem I present in this post which is directly about how many almost orthogonal vectors can fit in high-dimensional spaces.

- ^

For numerical stability reasons, people also usually add a small to the denominator.

- ^

Using the L2 distance, for a given point , if y is the closest point to on the unit sphere of the hyperplane orthogonal to the vector, and if is the orthogonal projection of on , then by the Pythagorean theorem, , which is minimal when y is the projection of on the unit sphere. This proves that projecting on the unit sphere of is the same as first projecting on and then projecting on the unit sphere.

4 comments

Comments sorted by top scores.

comment by ojorgensen · 2023-08-29T12:02:14.870Z · LW(p) · GW(p)

But this does not hold for tiny cosine similarities (e.g. 0.01 for , which gives a lower bound of 2 using the formula above). I'm not aware of a lower bound better than for tiny angles.

Unless I'm misunderstanding, a better lower bound for almost orthogonal vectors when cosine similarity is approximately is just , by taking an orthogonal basis for the space.

My guess for why the formula doesn't give this is because it is derived by covering a sphere with non-intersecting spherical caps, which is sufficient for almost orthogonality but not necessary. This is also why the lower bound of vectors makes sense when we require cosine similarity to be approximately , since then the only way you can fit two spherical caps onto the surface of a sphere is by dividing it into hemispheres.

This doesn't change the headline result (still exponentially much room for almost orthogonal vectors), but the actual numbers might be substantially larger thanks to almost orthogonal vectors being a weaker condition than spherical cap packing.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2023-08-30T13:07:11.358Z · LW(p) · GW(p)

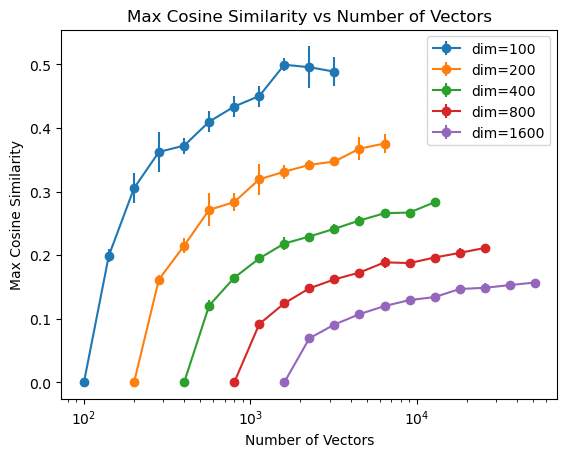

You made me curious, so I ran a small experiment. Using the sum of abs cos similarity as loss, initializing randomly on the unit sphere, and optimizing until convergence with LBGFS (with strong wolfe), here are the maximum cosine similarities I get (average and stds over 5 runs since there was a bit of variation between runs):

It seems consistent with the exponential trend, but it also looks like you would need dim>>1000 to have any significant boost of number of vectors you can fit with cosine similarity < 0.01, so I don't think this happens in practice.

My optimization might have failed to converge to the global optimum though, this is not a nicely convex optimization problem (but the fact that there is little variation between runs is reassuring).

comment by TheManxLoiner · 2024-04-18T19:17:25.816Z · LW(p) · GW(p)

Huge thanks for writing this! Particularly liked the SVD intuition and how it can be used to understand properties of . One small correction I think. You wrote:

is simply the projection along the vector

I think is projection along the vector , so is projection on hyperplane perpendicular to

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-04-22T13:35:41.983Z · LW(p) · GW(p)

Oops, that's what I meant, I'll make it more clear.