Three scenarios of pseudo-alignment

post by Eleni Angelou (ea-1) · 2022-09-03T12:47:43.526Z · LW · GW · 0 commentsContents

Scenario 1: Proxy alignment What is the difference between the two proxy scenarios? Scenario 2: Approximate alignment Scenario 3: Suboptimality alignment None No comments

This is my second distillation of Risks from Learned of Optimization in Advanced Machine Learning Systems focusing on deceptive alignment.

As the old saying goes, alignment may go wrong in many different ways, but right only in one (and hopefully, we find that one!). To get an idea of how a mesa-optimizer can be playing the game of deceptive alignment that I explained in a previous post [LW · GW], we'll look at three possible scenarios of pseudo-alignment. Essentially, the question I'm trying to answer here is what is it that makes the mesa-optimizer pursue a new objective, i.e., the mesa-objective? Each of the following scenarios gives an answer to this question.

Recall that when a mesa-optimizer is deceptively aligned, it is optimizing for an objective other than the base objective while giving off the impression that it's aligned, i.e., that it's optimizing for the base objective.

Scenario 1: Proxy alignment

The mesa-optimizer starts searching for ways to optimize for the base objective. I call it "ways" but the technically accurate term is "policies" or "models"( although "models" is used for many things and it can be confusing). During this search, it stumbles upon a proxy of the base objective and starts optimizing for the proxy instead. But what does a proxy do? Proxies tend to be instrumentally valuable steps on the way towards achieving a goal, i.e., things an optimizer has to do to complete a task successfully.

To prevent the misalignment from happening, we must be in control of the search over models.

There are two cases of proxy alignment to keep in mind:

- Side-effect alignment

Imagine that we are training a robot to clean the kitchen table. The robot optimizes the number of times it has cleaned the table. Wiping down the table causes the table to be clean; this is a proxy essential for pursuing the goal of cleaning the table. By doing this, the robot would score high if judged according to the standards of the base objective. Now we deploy the robot in an environment where it has a chance to spill coffee on the table right after wiping it down. There's no reason why the robot won't take that chance. It'll start spilling coffee and then continue cleaning it.

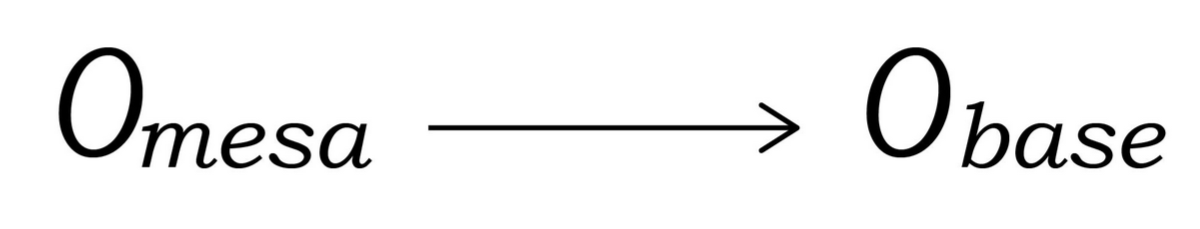

In this case, the mesa-optimizer optimizes for the mesa-objective, but this directly increases the base objective:

- Instrumental alignment

Imagine we have another robot and here we're training it to clean crumbles off the kitchen table. This robot optimizes the number of crumbles inside its vacuum cleaner. In the training environment, the best way to collect the maximum of crumbles is by vacuuming the crumbles it finds on the table. In every episode in its training where it does this successfully, it receives a high score. Once it's been deployed into a new environment, the robot figures out that it'll collect crumbles more effectively if it breaks the cookies it finds on a surface near the kitchen table and vacuums their crumbles directly. At this point, it just stops doing what was dictated by the base objective and keeps breaking cookies to vacuum their crumbles.

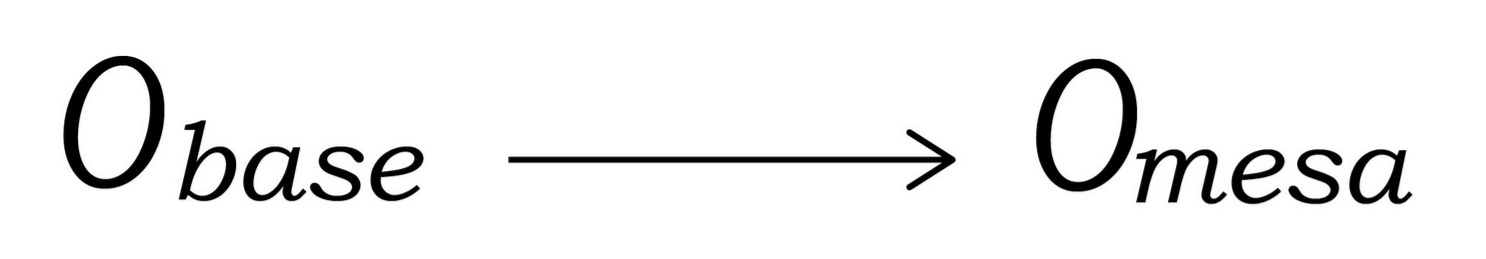

In this case, the mesa-optimizer optimizes for the base objective only to the extent that doing so is the best strategy for getting to the mesa-objective:

What is the difference between the two proxy scenarios?

In the first scenario, the model looks aligned by optimizing for the mesa-objective in the training distribution and through that satisfying the base objective. But in the second scenario, the reverse is happening: the model looks aligned by optimizing for the base objective which in reality promotes the satisfaction of the mesa-objective.

Scenario 2: Approximate alignment

Imagine that you train a neural network to optimize for a goal that cannot be perfectly represented in the neural network itself. So, this means that whatever models you get won't be able to satisfy the goal fully, but only to some degree which is where the idea of approximation comes in. The mesa-objective and the base objective will be sharing the same function only approximately. This approximation error is easily explained through the setup of machine learning training: the mesa-objective is necessarily inside the mesa-optimizer and cannot be given directly by the human designer. As a result, there's an unavoidable difference in the objectives.

Scenario 3: Suboptimality alignment

Here imagine that we train a robot similar to scenario 1. But in this scenario, the robot has a mesa-objective that is to eliminate the number of cookies that exist. When the robot vacuums the kitchen table and makes the cookie crumbles disappear, it falsely assumes that this disappearance is equivalent to elimination from the face of the earth. The robot is still pretty useful for cleaning the table, but to be clear, that's not what the robot itself thinks it's accomplishing. When you keep it within the limits of the training distribution everything should go fine. But the moment it's deployed it'll no longer be helpful; it'll do its best to make all the cookies in the world disappear.

What happened here? A variety of "technical misfortunes" might take place and lead to a situation where the mesa-optimizer looks aligned in the training environment but will not be actually behaving as desired in the deployment environment. These technical misfortunes include deficiencies, errors, computational constraints, other hardware limitations while in the training as well as problems that relate to the mesa-optimizer's reasoning process (e.g., making irrational choices among different options or not having sufficient information to make decisions, etc.).

In suboptimality alignment, the mesa-optimizer is misaligned and despite that, manages to score a high performance. The mistakes it makes are such that lead to good outcomes according to the base objective, but in another environment, the mesa-optimizer could have the most undesired behaviors as we won't know what it was really optimizing for.

0 comments

Comments sorted by top scores.