How Emergency Medicine Solves the Alignment Problem

post by StrivingForLegibility · 2023-12-26T05:24:35.579Z · LW · GW · 4 comments

Emergency medical technicians (EMTs) are not licensed to practice medicine. An EMT license instead grants the authority to perform a specific set of interventions, in specific situations, on behalf of a medical director. The field of emergency medical services (EMS) faces many principal-agent problems that are analogous to the principal-agent problem of designing an intelligent system to act autonomously on your behalf. And many of the solutions EMS uses can be adapted for AI alignment.

Separate Policy Search From Policy Implementation

If you were to look inside an agent, you would find one piece responsible for considering which policy to implement, and another piece responsible for carrying it out. In EMS, these concerns are separated into different systems. There are several enormous bureaucracies dedicated to defining the statutes, regulations, certification requirements, licensing requirements, and protocols which EMTs must follow. An EMT isn't responsible for gathering data, evaluating the effectiveness of different interventions, and deciding what intervention is appropriate for a given situation. An EMT is responsible for learning the rules they must follow, and following them.

A medical protocol is basically a set of if-then rules for deciding what intervention to perform, if any. If you happen to live in Berkeley, California, here are the EMS documents for Alameda County. If you click through to the 2024 Alameda County EMS Field Manual, under Field Assessment & Treatment Protocols, you'll find a 186-page book describing what actions EMS providers are to take in different situations.

As a programmer, seeing all these flowcharts is extremely satisfying. A flowchart is the first step towards automation. And in fact many aspects of emergency medicine have already been automated. An automated external defibrillator (AED) measures a patient's heart rhythm and automatically evaluates whether they meet the indications for defibrillation. A typical AED has two buttons on it: "On/Off" and "Everyone is clear, go ahead and shock." A ventilator ventilates a patient that isn't breathing adequately, according to parameters set by an EMS provider.

A network router isn't a consequentialist agent. It isn't handed a criterion for evaluating the consequences of different ways it could route each packet, and then empowered to choose a policy which optimizes the consequences of its actions. It is instead what I'll suggestively call a mechanism, a system deployed by an intelligent agent, designed to follow a specific policy which enforces a predictable regularity on the environment. If that policy were to be deficient in some way, such as having a flaw in its user interface code that allows an adversary to remotely obtain complete control over the router, it's up to the manufacturer and not the router itself to address that deficiency.

Similarly, EMS providers are not given a directive of "pick interventions which maximize the expected quality-adjusted life years of your patients." They are instead given books that go into 186 pages of detail describing exactly which interventions are appropriate in which circumstances. As the medical establishment gathers more data, as technology advances, and as evidence accumulates that another off-policy intervention is more effective, the protocols are amended accordingly.

Define a Scope of Practice

A provider's scope of practice defines what interventions they are legally allowed to perform. An EMT has a fixed list of interventions which are ever appropriate to perform autonomously. They can tell you quickly and decisively whether an intervention is in their scope of practice, because being able to answer those questions is a big part of their training and certification process. A paramedic has more training, has been certified as being able to safely perform more interventions and knowing when to perform them, and has a correspondingly larger scope of practice.

A household thermostat isn't empowered to start small uncontrolled fires in its owner's home, even though that would help raise the temperature of the space. We deliberately limit the space of actions that our devices can perform autonomously, as part of our overall goal of being able to safely deploy autonomous systems.
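One way to picture a scope of practice in software is as an allowlist that is checked before any action runs. The sketch below is only an illustration under assumed names: `EMT_SCOPE`, `PARAMEDIC_SCOPE`, `OutOfScopeError`, `perform`, and the intervention labels are all hypothetical and do not reflect any real certification level.

```python
# Hypothetical scopes of practice; the intervention names are illustrative
# and do not reflect any real certification level.
EMT_SCOPE = frozenset({"cpr", "oxygen", "bleeding_control"})
PARAMEDIC_SCOPE = EMT_SCOPE | {"intubation", "iv_access"}


class OutOfScopeError(Exception):
    """Raised when an intervention is not in the provider's scope."""


def perform(intervention: str, scope: frozenset) -> str:
    # Refuse anything outside the allowlist, however useful it might seem.
    if intervention not in scope:
        raise OutOfScopeError(intervention)
    return f"performed {intervention}"
```

The check is a simple membership test, which is what lets the question "is this in scope?" be answered quickly and decisively. A larger scope is just a larger set.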

Define Indications and Contraindications

Every intervention in a scope of practice has indications associated with it. Many also have contraindications associated with them. An indication indicates that an intervention should be performed. A contraindication indicates that an intervention should not be performed. A patient that is unresponsive, not breathing, and has no detectable pulse meets the Alameda County indications for performing cardiopulmonary resuscitation (CPR). A family member standing in front of the patient with a valid Do Not Resuscitate (DNR) order is a contraindication for performing CPR. In order for an intervention to be appropriate for a situation, all of that intervention's indications need to be present, and all of that intervention's contraindications need to be absent.
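The rule in the last sentence (all indications present, all contraindications absent) can be sketched as a pair of set checks. This is a toy model, not a real protocol: the `Intervention` class, the `is_appropriate` function, and the finding labels such as `"valid_dnr"` are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intervention:
    name: str
    indications: frozenset
    contraindications: frozenset = frozenset()


def is_appropriate(intervention: Intervention, findings: set) -> bool:
    # Every indication must be among the findings,
    # and no contraindication may be among them.
    return (intervention.indications <= findings
            and intervention.contraindications.isdisjoint(findings))


# Illustrative encoding of the CPR example from the text.
CPR = Intervention(
    name="cpr",
    indications=frozenset({"unresponsive", "not_breathing", "no_pulse"}),
    contraindications=frozenset({"valid_dnr"}),
)
```

Under these assumptions, `is_appropriate(CPR, {"unresponsive", "not_breathing", "no_pulse"})` is true, and adding `"valid_dnr"` to the findings makes it false.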

Indications and contraindications are a modular and flexible model for specifying what actions are appropriate to take in a well-understood domain of action. If this, then that. In such domains, autonomous systems should be designed to implement a policy which predictably only leads to acceptable outcomes. But what should an autonomous system do when outside of the domain that it was designed for?

Let Your System Ask You What to Do

EMTs perform medical interventions on behalf of a medical director. Directives from a medical director can come in two forms: offline and online. Offline medical directives are also called standing orders. Those 186 pages of "here's what interventions to perform under different circumstances" are offline medical directives. They can be implemented autonomously, without checking in with anyone else.

In contrast, online medical direction involves a medical director (or an authorized representative) telling an EMS provider what intervention to perform. This can include interventions which the provider does not have the authority to perform autonomously. One amazing fact about EMS protocols is that they can direct a provider to seek online medical direction. "I only have 186 pages to describe what to do in literally any situation, and so I'm going to cover a lot of them, but if you find yourself in a situation that isn't covered go ahead and call me and I'll tell you what to do."

This is another great example of the do-nothing pattern of gradual automation/delegation. We start out doing everything ourselves. Eventually we have enough experience that we can write down a flowchart for a task. We can then automate or delegate parts of that task. And the flowchart can say things like "if this happens, you are outside of the domain where I know what to tell you to do in advance. Go ahead and call me and I'll give you direction on how to proceed."

Of course, the flowchart also needs to specify what to do if the director can't be reached. In particular, the system should continue to only take actions which it is authorized to perform autonomously, and only in situations where those actions were defined to be appropriate. In EMS, online medical direction is often helpful, but providers can always fall back on their training and offline medical direction if necessary.
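A minimal sketch of this escalate-then-fall-back pattern might look like the following. Everything here is hypothetical: `STANDING_ORDERS`, `decide`, and the convention that an unreachable director is signalled by `ConnectionError` are assumptions chosen for the example.

```python
from typing import Callable, Optional

# Hypothetical offline directives: situation -> intervention.
STANDING_ORDERS = {"cardiac_arrest": "cpr"}


def decide(situation: str,
           ask_director: Callable[[str], Optional[str]]) -> Optional[str]:
    """Return an intervention, or None to take no new action."""
    order = STANDING_ORDERS.get(situation)
    if order is not None:
        return order  # covered by standing orders, no call needed
    try:
        # Online direction may authorize something outside the standing orders.
        return ask_director(situation)
    except ConnectionError:
        # Director unreachable: no standing order covers this situation,
        # so nothing new is authorized.
        return None
```

The design choice worth noticing is that the failure branch never widens what the system may do. Losing contact with the director only ever removes options.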

Implement Oversight

A patient care report (PCR) records information about an interaction between a patient and an EMS provider. This includes information about the patient, such as their name, contact information, signs, and symptoms. It also records what interventions, if any, the EMS provider performed. This documentation can be used for all sorts of important purposes. Often, a patient needing emergency medical attention is taken to a hospital. The PCR becomes part of their medical records, and helps the providers there understand what's been happening with this patient and what treatments they have already received.

The data from many PCRs can be aggregated to measure the effectiveness of different interventions. This information can be used to improve decisions about how protocols should be updated, what equipment should be present in hospitals and ambulances, and sometimes even what interventions should be in a given scope of practice. This is one form of oversight.
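As a rough illustration of that aggregation step, the sketch below tallies how often each intervention was followed by improvement. The `CareReport` record and its `improved` field are hypothetical simplifications of a PCR, and raw rates like these ignore confounding (sicker patients receive different interventions), so a real evaluation would need much more care.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CareReport:
    # Hypothetical, heavily simplified stand-in for a PCR.
    intervention: str
    improved: bool


def improvement_rates(reports):
    """Map each intervention to the fraction of reports marked improved."""
    totals = defaultdict(int)
    improved = defaultdict(int)
    for report in reports:
        totals[report.intervention] += 1
        improved[report.intervention] += report.improved  # True counts as 1
    return {name: improved[name] / totals[name] for name in totals}
```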

It is often emphasized to EMS providers that a good PCR can also mean the difference between being liable or not liable in court. EMS providers are held to a standard of care, and they can face liability for performing an inappropriate intervention, or failing to perform an appropriate one. And a PCR is a provider's documentation of what was going on with a patient, and what interventions they performed. This is another form of oversight.

An EMS provider is also expected to actually follow the directions of their medical director, and is held accountable for failure to do so. This "following my directions" property is one we also want our autonomous systems to have. We call an autonomous system corrigible if it follows valid instructions from authorized parties, and doesn't resist being turned off or reprogrammed. Network routers and thermostats are corrigible. Goal-directed agents are generally not corrigible, because you generally can't accomplish your goal if you're turned off or reprogrammed.
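To make the thermostat case concrete, here is a toy mechanism that follows a fixed policy and accepts shutdown or reprogramming from authorized parties. The `Thermostat` class, its method names, and the `authorized` set are all hypothetical; this is a sketch of the property, not a claim about how any real device is built.

```python
class Thermostat:
    """Hypothetical mechanism: follows a fixed policy and never resists control."""

    def __init__(self, setpoint: float, authorized: frozenset):
        self.setpoint = setpoint
        self.authorized = authorized
        self.running = True

    def step(self, temperature: float) -> str:
        # The whole policy: heat when below the setpoint, otherwise idle.
        if not self.running:
            return "off"
        return "heat" if temperature < self.setpoint else "idle"

    def shut_down(self, who: str) -> bool:
        # An authorized shutdown always succeeds; there is no goal to protect.
        if who not in self.authorized:
            return False
        self.running = False
        return True

    def reprogram(self, who: str, setpoint: float) -> bool:
        if who not in self.authorized:
            return False
        self.setpoint = setpoint
        return True
```

Nothing in `step` refers to the thermostat's own continued operation, which is why shutting it down or changing its setpoint meets no resistance.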

Increasing Automation and Alignment in EMS

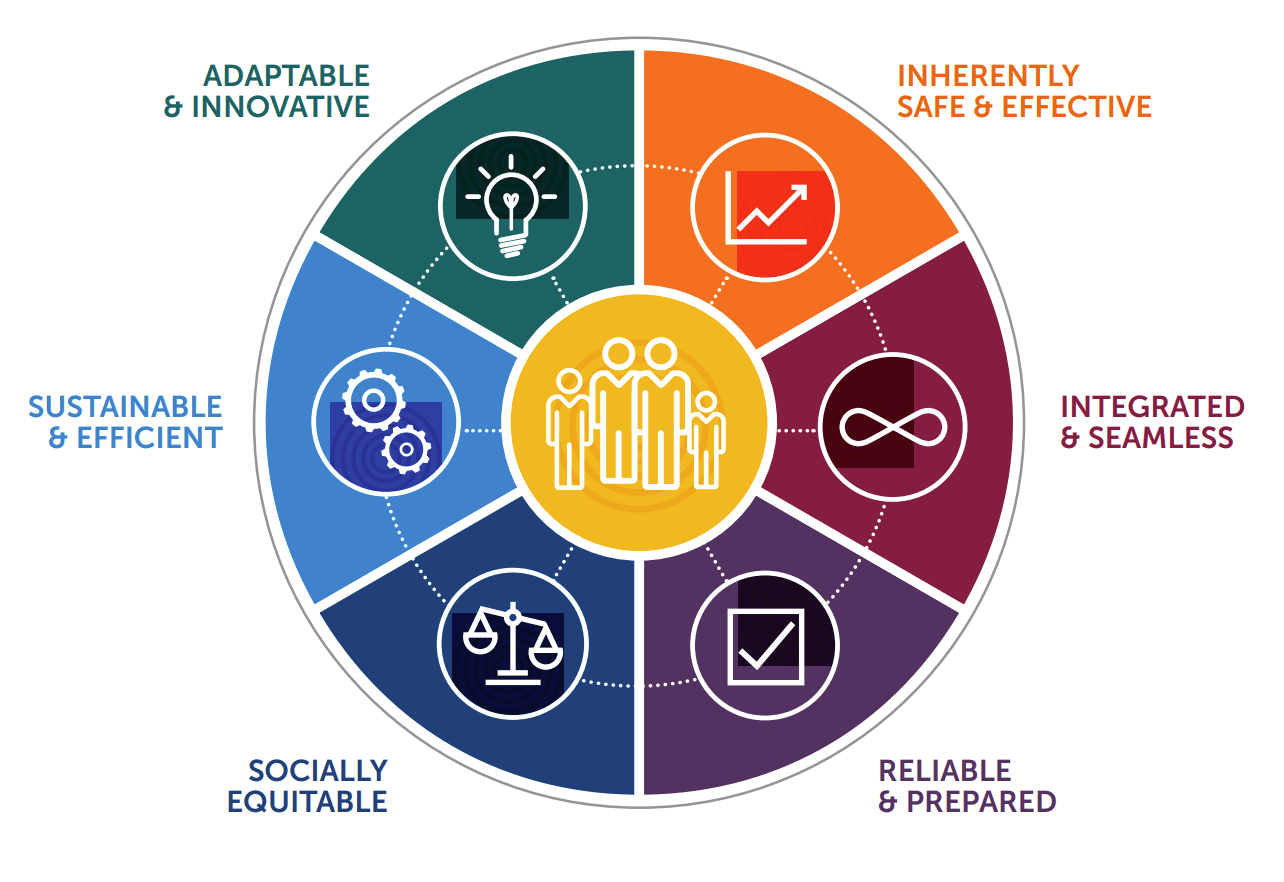

Interestingly, solving the Alignment Problem is also the centerpiece of the EMS Agenda 2050. The tagline is "A People-Centered Vision for the Future." And it's about how the design of the EMS system can be better aligned with the people it serves.

It envisions a world where providers can perform safer and more effective interventions. Where more artificially intelligent and robotic systems are deployed as part of EMS. Where access to care, quality of care, and outcomes are not determined by age, socioeconomic status, gender, ethnicity, geography or other social determinants. Where new technologies, system designs, educational programs, and other aspects of EMS are continuously evaluated and improved.

The principles guiding the EMS Agenda 2050 were selected as part of designing a system for providing emergency medical care. But those same principles can be applied to the design of other systems, including software systems. And to institutions that design software systems. Wouldn't you like your software to be inherently safe and effective, integrated and seamless, reliable, scalable, and efficient? Wouldn't you like the institutions designing your software to be adaptable and innovative, to consider factors like sustainability and the equitable distribution of access and benefits?

This sequence is about how to approach the problem of delegating decision-making to software systems, which are empowered in some circumstances to act autonomously on your behalf. EMS has been doing an impressive job solving an analogous delegation problem, and many practices from EMS can be readily applied to software development.

4 comments


comment by mishka · 2023-12-26T16:14:01.939Z · LW(p) · GW(p)

"And many of the solutions EMS uses can be adapted for AI alignment."

This is certainly true for AIs which are not as smart as professional humans, with the exception of their narrow application area. For that situation, the EMT analogy is great.

But when we think about coming super-intelligence, if we extend your analogy, we are talking about systems which understand medicine (and everything else) much better than human medical directors. The system knows how to cure everything that ails the patient, it can rejuvenate the patient, and ... it can try all kinds of interesting medical interventions and enhancements on the patient... and those interventions and enhancements can change the patient in all kinds of interesting ways... and they can have all kinds of interesting side-effects in the environment the patient shares with everyone else...

That's, roughly speaking, the magnitude of AI safety problems we need to be ready to deal with.

Replies from: Orual, StrivingForLegibility

↑ comment by Orual · 2023-12-26T17:19:55.652Z · LW(p) · GW(p)

This is definitely a spot where the comparison breaks down a bit. However, it does still hold in the human context, somewhat, and maybe that generalizes.

I worked as a lifeguard for a number of years (even lower on the totem pole than EMTs, more limited scope of practice). I am, to put it bluntly, pretty damn smart, and could easily find optimizations, areas where I could exceed scope of practice with positive outcomes if I had access to the tools even just by reading the manuals for EMTs or paramedics or nurses. I, for example, learned how to intubate someone, and to do an emergency tracheotomy from a friend who had more training. I'm also only really inclined to follow rules to the extent they make sense to me and the enforcing authorities in question can impose meaningful consequences on me. But I essentially never went outside SOP, certainly not at work. Why?

Well, one reason was legal risk, as mentioned. If something went wrong at work and someone was (further) injured under my care, legal protection was entirely dependent on my operating within that defined scope of practice. For a smart young adult without a lot of money for lawyers that's a fairly good incentive to not push boundaries too much, especially given the gravity of emergency situations and the consequences for guessing wrong even if you are smart and confident in your ability to out-do SOP.

Second, the limits were soft enforced by access to equipment and medicine. The tools I had access to at my workplace were the ones I could officially use, and I did not have easy access to any tools or medicines which would have been outside SOP to administer (or advise someone to administer). This was deliberate.

Third, emergency situations effectively sharply limit your context window and ability to deviate. Someone is dying in front of you, large chunks of you are likely trying to panic, especially if you haven't been put in this sort of situation before, and you need to act immediately, calmly, and correctly. What comes to mind most readily? Well, the series of if-then statements that got drilled into you during training. It's been most of a decade since my last recert, and I can still basically autopilot my way through a medical emergency based on that training. Saved a friend and coworker's life when he had a heart attack and I was the only one in the immediate area.

So how do we apply that? Well, I think the first two have obvious analogies in terms of doing things that actually impose hardware or software limits on behaviour. Obviously for a smart enough system the ability to enforce such restrictions is limited and even existing LLMs can be pushed outside of training parameters by clever prompting, but it's clear that such means can alter model behaviour to a point. Modifying the training dataset is perhaps another analogous option, and arguably a more powerful one if it can be done well, because the pathways developed at that stage will always have an impact on the outputs, no matter the restrictions or RLHF or other similar means of guiding a mostly-trained model. Not giving it tools that let it easily go outside the set scope will again work up to a point.

The third one I think might be most useful. Outside of the hardest of hard takeoff scenarios, it will be difficult for any intelligence to do a great deal of damage if only given a short lifetime in which to do it, while it also is being asked to do the thing it was very carefully trained for. LLMs already effectively work this way, but this suggests that as things advance we should be more and more wary of allowing long-running potential agents with anything like run-to-run memory. This obviously greatly restricts what can be done with artificial intelligence (and has somewhat horrifying moral implications if we do instantiate sapient intelligences in that manner), but absent solving the alignment problem more completely would go a long way toward reducing the scope of possible negative outcomes.

↑ comment by StrivingForLegibility · 2023-12-26T16:53:32.338Z · LW(p) · GW(p)

Absolutely! I have less experience on the "figuring out what interventions are appropriate" side of the medical system, but I know of several safety measures they employ that we can adapt for AI safety.

For example, no actor is unilaterally permitted to think up a novel intervention and start implementing it. They need to convince an institutional review board that the intervention has merit, and that a clinical trial can be performed safely and ethically. Then the intervention needs to be approved by a bunch of bureaucracies like the FDA. And then medical directors can start incorporating that intervention into their protocols.

The AI design paradigm that I'm currently most in favor of, and that I think is compatible with the EMS Agenda 2050, is Drexler's Comprehensive AI Services (CAIS), where a bunch of narrow AI systems are safely employed to do specific, bounded tasks. A superintelligent system might come up with amazing novel interventions, and collaborate with humans and other superintelligent systems to design a clinical trial for testing them. Every party along the path from invention to deployment can benefit from AI systems helping them perform their roles more safely and effectively.

comment by Kaj_Sotala · 2023-12-26T21:11:36.690Z · LW(p) · GW(p)

I'm not sure how useful this will be for alignment, but I didn't know anything about EMT training or protocols before, and upvoted this for being a fascinating look into that.