A dynamical systems primer for entropy and optimization

post by Alex_Altair · 2022-12-10T00:13:13.984Z · LW · GW · 3 commentsContents

Definition The set of states The evolution rule Phase space Examples Discrete time Discrete, finite space Discrete, infinite space Continuous, bounded space Continuous, unbounded space Continuous time Discrete, finite space Discrete, infinite space Continuous, bounded space Continuous, unbounded space Further resources None 3 comments

This is a reference post for my (in-progress) sequences on entropy and optimization. It is intended to be used by readers to catch up on this concept in case it's unfamiliar to them. It is absolutely not intended as a stand-alone introduction to the topic; that would take us far away from its relevance to entropy and optimization. As such, there are countless ideas central to the study of dynamical systems that are omitted.

Both entropy[1] and optimization take place within an extremely simple formalism called a dynamical system. It is simple in the sense that it has very few parts; often, when you start to work with a specific dynamical system, you'll find that its behavior is extremely interesting.

Definition

A dynamical system is any formally specified system that moves between states over time according to a deterministic[2] rule which we'll call the evolution rule. This is formalized as a function that takes every state in a set to another state in ; .

If you are treating time as discrete, then each application of the function is moving the system forward in time one unit. If you are treating time as continuous, then you'll need your function to be able to take another parameter t to tell it how far into the future to push the system; . In either case, we'll indicate the amount of time with a little exponent over the function, as in . So, in the discrete case, applying it twice would look like .

The set of states

The set of states represents all states that the system could[3] be in. There are no further constraints on the set; states do not need to have any properties whatsoever. That said, they almost always do; we'll go into more detail about this in the section about the phase space.

As sets, they can have any cardinality; they could be finite, they could be countably infinite, or they could be uncountably infinite.[4]

The evolution rule

The rule tells us how the system evolves over time. Any state that the system could be in must be able to evolve to some other state in the specified set; it's not valid to allow your system to simply stop evolving,[5] or to exit the set of states. In this way, a dynamical system is an abstraction that captures the idea of "laws of physics". (It also just happens to be how validly defined functions work.)

A sequence of states produced by repeated appliactions of the evolution rule is called a trajectory (by analogy with e.g. a cannonball having a ballistic trajectory through 3-dimensional space). Since every state must have a future state, trajectories cannot stop; they can either travel through infinitely many future states, or they can loop back to some previous state, becoming periodic. However it is possible for a state to have no preceding state, or to have infinitely many preceding states, or to have multiple preceding states. Another way to say this is that the evolution rule is not necessarily injective or surjective.

Phase space

Phase space simply refers to a way of organizing your set of states. As we alluded to above, it's not usually that useful for your system to have states that are just sterile elements in a set. Usually they have meaningful properties besides the trajectories they lie on.

Let's say your system is five particles bouncing around in a box. If we pause the evolution – that is, if we isolate a single state – what information is required to give a full specification of the state at that time? For starters, each particle has a position in each of the three (physical) dimensions. And they each have a velocity, which also has a component in each of the three dimensions. If our evolution rule is Newtonian billiards, where each point-particle travels in a straight line until it hits a wall, after which it bounces off at the angle of reflection, then this is enough information. Five particles with two sets of three pieces of information makes 5*(3 + 3) = 30 pieces of information.

You could consider each of these pieces of information to constitute a dimension of the set of states. In the same way that points on the Cartesian plane have an -dimension and a -dimension, this dimensionality gives the set of states a sort of structure, and thus we call it a space.[6]

Unfortunately, it is common for phase spaces to have a huge number of dimensions, potentially even infinitely many. If our box of particles was more realistic and had one mole of atoms in it, the system's phase space would have over dimensions. So, while visualizing the phase space can be helpful for many applications, it's of limited use past three dimensions.

Examples

Though I think the definition above is, in some sense, logically sufficient to know what I mean by a dynamical system, the real power of the concept comes from seeing the vast scope of what it captures. This list is my attempt to communicate some of that scope.

For the sake of having some categorization scheme, I separate the examples by the nature of their parameters, first by time, then by their phase space. Interestingly, "discrete vs continuous" is not even a true dichotomy here – one could construct a dynamical which has some continuous dimensions of phase space and some discrete dimensions. Or, one could have a single dimension which has some continuous segments and some discrete segments. Or something even crazier, like the Cantor set. And you could even have your time parameter work this way, as long as your evolution rule is capable of expressing how any state can evolve to any future point of that time parameter.

Note that in some of the below examples, what is being shown is an example trajectory visualized with physical dimensions, while in other examples we are seeing states as points evolving through the phase space.

Discrete time

Discrete, finite space

The simplest possible non-empty dynamical system would be one state that evolves to itself.

In this class we could also include all (finite) directed graphs where every node only has exactly one arrow coming out of it. Every such graph would represent both a set of states (the nodes) and an evolution rule (the arrows). I find this type of system to be one of the easiest to think about when trying to understand something about dynamical systems in general. However, the graphs do not, by themselves, have any inherent phase space dimensions.

A well-known discrete-time dynamical system is Arnold's cat map. Below is an animation of the trajectory of every point in the (discrete) phase space, which only has two dimensions. Each iteration shears and rearranges the pixels of the image in the same deterministic, reversible way. Every initial state (pixel) has a periodic trajectory, and by the 150th application of the evolution rule, each trajectory returns to its original state (which is to say, ).

Discrete, infinite space

Turing machines are a formalization of computation. To keep track of their computation, they have an infinitely long binary tape. This, plus the internals of the Turing machine, constitute a phase space. Each square on the tape is its own dimension in the phase space, which can only hold the values 0 or 1. Since the tape is infinitely long, this system has a (countably) infinite number of possible states. (The evolution rule is whatever rules dictate how the Turing machine decides to manipulate the tape and change its own internal state.)

Cellular automata are a similar class of system. They start out with a set of cells, possibly one-dimensional, possibly two, possibly in a hexagonal grid, et cetera. The rules of the cellular automata will determine how the values of the cells are updated.

Sometimes they all get update on each timestep, and sometimes they're only updated locally. Conway's Game of Life, shown below, is one such example. Like the tape of a Turing machine, it has a countably infinite number of cells, each of which can hold one of two values. Each of these cells is a dimension in the phase space.

Continuous, bounded space

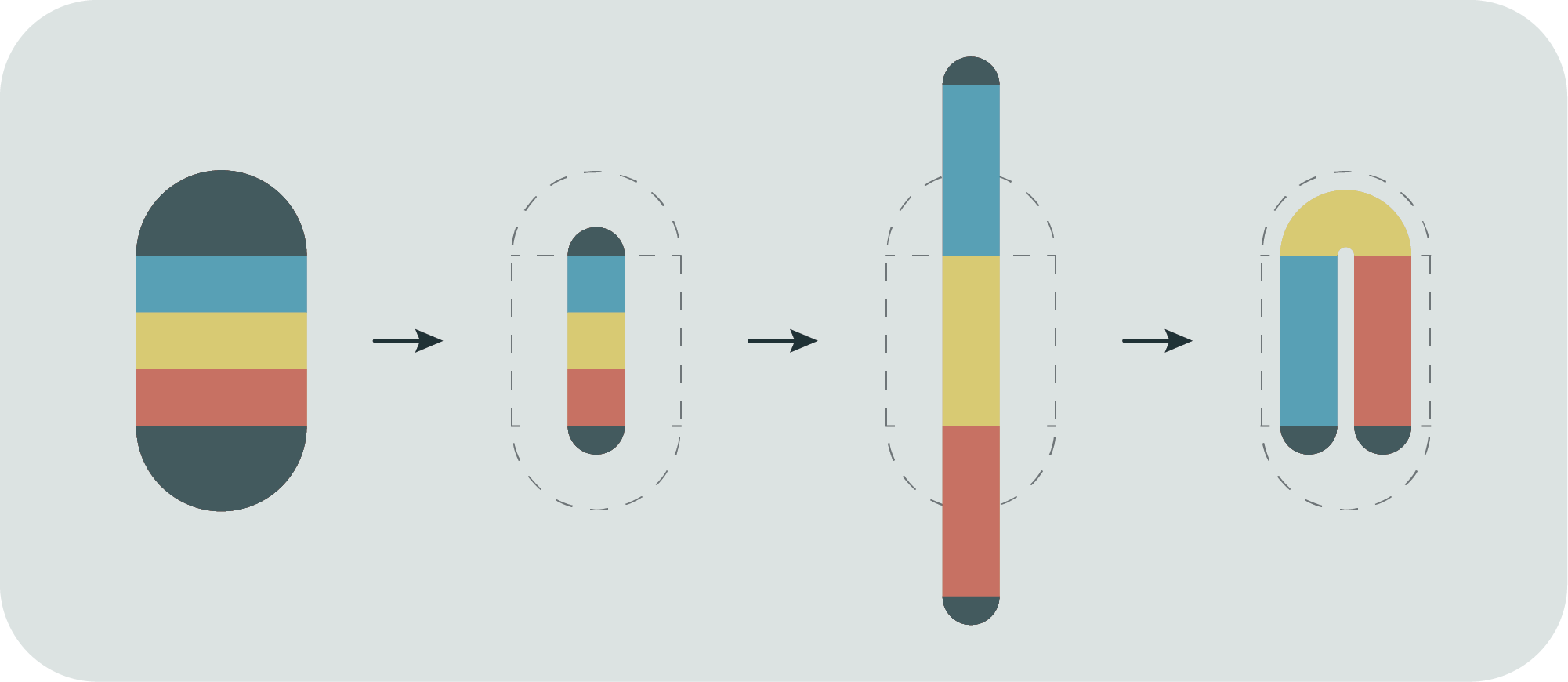

Another canonical dynamical system is Smale's horseshoe map. Like Arnold's cat map above, this illustration is depicting the entire phase space. Each iteration squishes, stretches and folds the phase space (video here). Some points have periodic trajectories, and some don't; they wander out of the square-shaped region, and stay outside.

Continuous, unbounded space

The Mandelbrot set is technically, well, a set. But it is generated by an evolution rule which is applied to the complex plane. The complex plane is the set of possible states. Each point is taken through a process, where it is iteratively squared and added to the original. Points for which this process does not diverge form the Mandelbrot set. In our dynamical systems terms, the Mandelbrot set is the set of initial states whose trajectory stays bounded.

The evolution rule here is slightly non-trivial, because it continues to use the initial complex number at each iteration. Therefore we have to consider the states to be pairs where the evolution rules maps the second value back to itself; .

Continuous time

Discrete, finite space

The combination of continuous time and discrete space is logically coherent, but seems somewhat unnatural. Models of this type might effectively be continuous-space models which are just "rounding off" the spatial coordinates into discrete buckets; this is called coarse-graining.

However there are at least some phenomena that act this way, like nuclear radiation. The nuclei of atoms exist across continuous time, but then decay at discrete moments. One generalization of this is Poisson point processes.

Discrete, infinite space

This category is here for the sake of completeness; there's nothing that in principle prevents a system from having continuous time and discrete-but-infinite space. But I wasn't able to find any named examples of named systems.

Continuous, bounded space

Similar to the particles in a box described above, billiards is a very simple evolution rule which can lead to very interesting results depending on which bounding shape you choose. Below are two systems which have somewhat unpredictable behavior.

Here, the phase space has three dimensions; two for the location of the ball, and one for its direction. Even though the system state is changing at every instant in time, you'll notice that the balls are going straight almost always. Things only seem to substantially change when they reflect off a wall. So the continuous time parameter is not exactly being "fully utilized"; one could express this system with discrete time, where the timestep advanced whenever the ball hit a wall, and then incorporate the shape of the boundary into the evolution rule, which would figure out what angle of wall the ball was going to hit next.

In contrast, the double pendulum below is smoothly changing in all of its parameters. The phase space has four dimensions; an angular position and speed for each of the two arms.

Continuous, unbounded space

Every differential equation of one independent variable can be interpreted as a dynamical system in continuous time. Thus, many classic differential equations – the wave equation, the heat equation, control processes – are dynamical systems of this class.

Further resources

If you're interested in digging into the mathematical meat of dynamical systems, here are two short textbooks I've used for reference.

- If you like concretes first, consider Dynamical Systems by Shlomo Sternberg (2010).

- If you like abstractions and philosophy, consider A Modern Introduction to Dynamical Systems by Richard J. Brown (2018).

- ^

Although, as mentioned in Introduction to abstract entropy [LW · GW], the concept of entropy is meaningful and highly useful when applied to sets of states with no evolution rule.

- ^

Probabilistic evolution rules seem logically coherent to me, though they would complicate the analysis a lot. They could also be made equivalent to deterministic ones by including the probability distribution in your state description. Or they could just be considered ways of dealing with subjective uncertainty over the (underlyingly deterministic) state.

- ^

This isn't really intended to be a philosophical "could", it's just whatever states you're deciding make up your system. However, the set needs to be closed under function application; applying the evolution rule to any element of the set should return another element of the set. It is emphatically not the case that every element in the set needs to be the result of evolving forward some other element.

- ^

I haven't yet encountered a dynamical system which had a set of states that was larger than , but I don't see why that wouldn't be just as valid.

- ^

However, it is valid to have a state evolve to itself; this is a way to model a system stopping.

- ^

The etymology of using the descriptor "phase" is unclear. My mnemonic is that, if the trajectory is periodic, then the set of all points on the trajectory is just the same as all the phases in the period.

3 comments

Comments sorted by top scores.

comment by Alex_Altair · 2022-12-10T00:16:33.965Z · LW(p) · GW(p)

(I hit "publish" on this post earlier than I am comfortable with, because I think I'm biased toward waiting too long, and because publishing is the fastest and most thorough way to get feedback.)

comment by Algon · 2022-12-10T01:11:08.857Z · LW(p) · GW(p)

Wouldn't an infinite Ising model, or really any infinite lattice, count as a discrete, infinite space dynamical system with continuous time? Am I going crazy because of a lack of sleep?

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2022-12-10T06:18:56.292Z · LW(p) · GW(p)

You are not going crazy.

EDIT: But Alex wants deterministic time evolution, which basically means either you have a quantum Ising model (which maybe should count as continuous state space even though the physical space is discrete), or you have a classical Ising model at T=0. Which is maybe not so interesting for the Ising model per se, but there are plenty of classical models of parametric phase transitions at T=0.