Why I am not an AI extinction cautionista

post by Shmi (shminux) · 2023-06-18T21:28:38.657Z · LW · GW · 40 comments

First, to dispense with what should be obvious, if a superintelligent agent wants to destroy humans, we are completely and utterly hooped. All the arguing about "but how would it...?" indicates lack of imagination.

...Of course a superintelligence could read your keys off your computer's power light, if it found it worthwhile. Most of the time it would not need to, it would find easier ways to do whatever humans do by pressing keys. Or make the human press the keys. Our brains and minds are full of unpatchable and largely invisible (to us) security holes. Scott Shapiro calls it upcode:

Hacking, Shapiro explains, is not only a matter of technical computation, or “downcode.” He uncovers how “upcode,” the norms that guide human behavior, and “metacode,” the philosophical principles that govern computation, determine what form hacking takes.

Humans successfully hack each other all the time. If something way smarter than us wants us gone, we would be gone. We cannot block it or stop it. Not even slow it down meaningfully.

Now, with that part out of the way...

My actual beef is with the certainty of the argument "there are many disjunctive paths to extinction, and very few, if any, conjunctive paths to survival" (and we have no do-overs).

The analogy here is sort of like walking through an increasingly dense and sophisticated minefield without a map, only you may not notice when you trigger a mine, and it ends up blowing you to pieces some time later, and it is too late to stop it.

I think this kind of view also indicates lack of imagination, only in the opposite extreme. A conjunctive convoluted path to survival is certainly a possibility, but by no means the default or the only way to self-consistently imagine reality without reaching into wishful thinking.

Now, there are several (not original here, but previously mentioned) potential futures which are not like that, that tend to get doom-piled by the AI extinction cautionistas:

...Recursive self-improvement is an open research problem, is apparently needed for a superintelligence to emerge, and maybe the problem is really hard.

...Pushing ML toward and especially past the top 0.1% of human intelligence level (IQ of 160 or something?) may require some secret sauce we have not discovered or have no clue that it would need to be discovered. Without it, we would be stuck with ML emulating humans, but not really discovering new math, physics, chemistry, CS algorithms or whatever.

...An example of this might be a missing enabling technology, like internal combustion for heavier-than-air flight (steam engines were not efficient enough, though very close). Or like needing algebraic number theory to prove Fermat's Last Theorem. Or similar advances in other areas.

...Worse, it could require multiple independent advances in seemingly unrelated fields.

...Agency and goal-seeking beyond emulating what humans mean by it informally might be hard, or might not be a thing at all, but just a limited-applicability emergent concept, sort of like the Newtonian concept of force (as in F=ma).

...Improving AI beyond human level requires "uplifting" humans along the way, through brain augmentation or some other means.

...AI that is smart enough to discover new physics may also discover separate and efficient physical resources for what it needs, instead of grabby-alien-style lightconing it through the Universe.

...We may be fundamentally misunderstanding what "intelligence" means, if anything at all. It might be the modern equivalent of the phlogiston.

...And various other possibilities one can imagine or at least imagine that they might exist. The list is by no means exhaustive or even remotely close to it.

In the above mentioned and not mentioned possible worlds the AI-borne human extinction either does not happen, is not a clear and present danger, or is not even a meaningful concept. Insisting that the one possible world of a narrow conjunctive path to survival has a big chunk of probability among all possible worlds seems rather overconfident to me.
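To make the shape of this disagreement concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the step count, the per-step odds, the independence of the steps, the two "worlds" and their weights are all made-up numbers, not estimates. It only shows that a narrow conjunctive path has a tiny probability by construction, while the overall survival probability depends mostly on how much weight one puts on worlds that are not shaped like a minefield at all.

```python
from math import prod


def conjunctive_survival(step_probs):
    """Probability that every step on a single narrow path succeeds.

    Assumes the steps are independent, which is itself a strong assumption.
    """
    return prod(step_probs)


def mixture_survival(worlds):
    """Total survival probability over mutually exclusive world-models.

    `worlds` is a list of (weight, survival probability in that world) pairs;
    the weights must sum to 1.
    """
    total_weight = sum(weight for weight, _ in worlds)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("world weights must sum to 1")
    return sum(weight * p_survive for weight, p_survive in worlds)


# Hypothetical numbers, chosen only to illustrate the structure of the argument.
narrow_path = conjunctive_survival([0.5] * 10)  # ten independent 50/50 steps

worlds = [
    (0.6, narrow_path),  # "minefield" world: survival needs every step to go right
    (0.4, 0.9),          # worlds where some blocker (say, RSI being hard) defuses the risk
]
overall = mixture_survival(worlds)

print(f"narrow conjunctive path: {narrow_path:.4%}")
print(f"overall across worlds:   {overall:.4%}")
```

With these placeholder inputs almost all of the overall survival probability comes from the second world, so the real disagreement is about the weights, which the sketch cannot settle.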

40 comments

Comments sorted by top scores.

comment by Matthew Barnett (matthew-barnett) · 2023-06-18T23:32:40.592Z · LW(p) · GW(p)

Pushing ML toward and especially past the top 0.1% of human intelligence level (IQ of 160 or something?) may require some secret sauce we have not discovered or have no clue that it would need to be discovered. Without it, we would be stuck with ML emulating humans, but not really discovering new math, physics, chemistry, CS algorithms or whatever.

For what it's worth, I think the more likely possibility is that blowing past top-human level will require more expensive data than catching up to top-human level. Right now ML models are essentially kickstarting from human data. Rapid progress is to be expected as you catch up to the frontier, but it becomes harder once you're already there. Ultimately I expect this effect to not slow down AI greatly, because I think at roughly the same time it becomes harder to make AI smarter, AI will also accelerate growth in data and compute inputs.

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T01:01:22.695Z · LW(p) · GW(p)

Right, the dearth of new training data might be another blocker, as discussed in various places. Whether non-redundant data growth will happen, or something like "GSTT" for self-training will be useful, who knows.

comment by lukemarks (marc/er) · 2023-06-18T22:10:40.073Z · LW(p) · GW(p)

"But what if [it's hard]/[it doesn't]"-style arguments are very unpersuasive to me. What if it's easy? What if it does? We ought to prefer evidence to clinging to an unknown and saying "it could go our way." For a risk analysis post to cause me to update I would need to see "RSI might be really hard because..." and find the supporting reasoning robust.

Given current investment in AI and the fact that I can't conjure a good roadblock for RSI, I am erring on the side of it being easier rather than harder, but I'm open to updating in light of strong counter-reasoning.

Replies from: None↑ comment by [deleted] · 2023-06-19T03:56:11.825Z · LW(p) · GW(p)

Replies from: marc/er↑ comment by lukemarks (marc/er) · 2023-06-19T05:23:59.410Z · LW(p) · GW(p)

More like: (P1) Currently there is a lot of investment in AI. (P2) I cannot currently imagine a good roadblock for RSI. (C) Therefore, I have more reasons to believe RSI will not entail atypically difficult roadblocks than I do to believe it will.

This is obviously a high level overview, and a more in-depth response might cite claims like the fact that RSI is likely an effective strategy for achieving most goals, or mention counterarguments like Robin Hanson's, which asserts that RSI is unlikely due to the observed behaviors of existing >human systems (e.g. corporations).

Replies from: None↑ comment by [deleted] · 2023-06-19T06:24:16.252Z · LW(p) · GW(p)

Replies from: marc/er↑ comment by lukemarks (marc/er) · 2023-06-19T08:04:45.499Z · LW(p) · GW(p)

In (P2) you talk about a roadblock for RSI, but in (C) you talk about RSI as a roadblock, is that intentional?

This was a typo.

By "difficult", do you mean something like, many hours of human work or many dollars spent? If so, then I don't see why the current investment level in AI is relevant. The investment level partially determines how quickly it will arrive, but not how difficult it is to produce.

In the context of safety, the primary implication of the difficulty of a capabilities problem is, in most cases, when said capability will arrive. I didn't mean to imply that the investment amount determines the difficulty of the problem, but that if you invest additional resources into a problem, it is likely to be solved faster than if you didn't invest those resources. As a result, the desired effect of RSI being a difficult hurdle to overcome (increasing the window to AGI) wouldn't be realized.

comment by Vladimir_Nesov · 2023-06-18T22:07:15.609Z · LW(p) · GW(p)

Three different claims: (1) doom is likely, (2) doom is likely if AI development proceeds with modern methods, (3) if AGI works as a result of being developed with modern methods, then doom is likely. You seem to be gesturing at antiprediction of (1). I think the claim where the arguments are most legible is (3), and the most decision relevant claim is (2).

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T04:05:25.643Z · LW(p) · GW(p)

Thanks, this is helpful, though not 100% clear.

I think the standard logic goes as follows: GPT-style models are not necessarily what will reach the extinction-level threat, but they show that such a threat is not far away, partially because interpretability is far behind capabilities. If by "modern methods" you mean generative pre-trained transformers or similar, not some totally new future architecture, then I do not think anyone claims (2), let alone (3)? I think what people claim is that just scaling gets us way further than was expected, the amount and power of GPUs can be a bottleneck for capabilities all the way to superintelligence, regardless of the underlying architecture, and therefore (1).

Replies from: Ape in the coat, Vladimir_Nesov↑ comment by Ape in the coat · 2023-06-19T09:34:34.170Z · LW(p) · GW(p)

It's less about GPT-style in particular and more "gradient descent producing black boxes"-style in general.

The claim goes that if we develop AGI this way we are doomed. And we are on track to do it.

↑ comment by Vladimir_Nesov · 2023-06-19T11:37:50.482Z · LW(p) · GW(p)

By "modern methods" I meant roughly what Ape in the coat noted, more specifically ~end-to-end DNNs (possibly some model-based RL setup, possibly with some of the nets bootstrapped from pre-trained language models, or trained on data or in situations LLMs generate).

As opposed to cognitive architectures that are more like programs in the classical sense, even if they are using DNNs for some of what they do, like CoEm. Or DNNs iteratively and automatically "decompiled" into explicit and modular giant but locally human-understandable program code using AI-assisted interpretability tools (in a way that forces change of behavior and requires retraining of remaining black box DNN parts to maintain capability), taking human-understandable features forming in DNNs as inspiration to write code that more carefully computes them. I'm also guessing alignment-themed decision theory has a use in shaping something like this (whether the top-level program architecture or synthetic data that trains the DNNs); this motivates my own efforts. Or something else entirely; this paragraph is non-examples for "modern methods" in the sense I intended, the kind of stuff that could use some giant training run pause to have a chance to grow up.

comment by evand · 2023-06-18T22:17:26.028Z · LW(p) · GW(p)

Of course a superintelligence could read your keys off your computer's power light, if it found it worthwhile. Most of the time it would not need to, it would find easier ways to do whatever humans do by pressing keys. Or make the human press the keys.

FYI, the referenced thing is not about what keys are being pressed on a keyboard, it's about extracting the secret keys used for encryption or authentication. You're using the wrong meaning of "keys".

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T00:56:42.161Z · LW(p) · GW(p)

Yeah, good point. Would be easy in both meanings.

comment by Vaniver · 2023-06-19T02:13:53.875Z · LW(p) · GW(p)

Recursive self-improvement is an open research problem, is apparently needed for a superintelligence to emerge, and maybe the problem is really hard.

How do you square this with superhuman performance at aiming, playing Go, etc.? I don't think RSI is necessary for transformative AI, and I think we are not yet on track to have non-super transformative AI go well. If you think otherwise, I'd be interested in hearing why.

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T02:25:15.324Z · LW(p) · GW(p)

Are you saying that a human designed model is expected to achieve superhuman intelligence without being able to change its own basic structure and algorithms? I think it is a bold claim, and not one being made by Eliezer or anyone else in the highly-alarmed alignment community.

Replies from: Vaniver, JBlack↑ comment by Vaniver · 2023-06-19T03:06:33.166Z · LW(p) · GW(p)

Are you saying that a human designed model is expected to achieve superhuman intelligence without being able to change its own basic structure and algorithms?

I think we have lots of evidence of AI systems achieving superhuman performance on cognitive tasks (like how GPT-4 is better than humans at next-token prediction, even tho it doesn't seem to have all of human intelligence). I think it would not surprise me if, when we find a supervised learning task close enough to 'intelligence', a human-designed architecture trained on that task achieves superhuman intelligence.

Now, you might think that such a task (or combination of related tasks) doesn't exist, but if so, it'd be interesting to hear why (and whether or not you were surprised by how many linguistic capabilities next-token-prediction unlocked).

[edit: also, to be clear, I think 'transformative AI' is different from superintelligence; that's why I put the 'non-super' in front. It's not obvious to me that 'superintelligence' is the right classification when I'm mostly concerned about problems of security / governance / etc.; superintelligence is sometimes used to describe systems smarter than any human and sometimes used to describe systems smarter than all humans put together, and while both of those spook me I'm not sure that's even necessary; systems that are similarly smart to humans but substantially cheaper would themselves be a big deal.]

I think it is a bold claim, and not one being made by Eliezer or anyone else in the highly-alarmed alignment community.

Maybe my view is skewed, but I think I haven't actually seen all that many "RSI -> superintelligence" claims in the last ~7 years. I remember thinking at the time that AlphaGo was a big update about the simplicity of human intelligence; one might have thought that you needed emulators for lots of different cognitive modules to surpass human Go ability, but it turned out that the visual cortex plus some basic planning was enough. And so once the deep learning revolution got into full swing, it became plausible that we would reach AGI without RSI first (whereas beforehand I think the gap to AGI looked large enough that it was necessary to posit something like RSI to cross it).

[I think I said this privately and semi-privately, but don't remember writing any blog posts about it because "maybe you can just slap stuff together and make an unaligned AGI!" was not the sort of blog post I wanted to write.]

Replies from: Vladimir_Nesov, shminux↑ comment by Vladimir_Nesov · 2023-06-19T12:22:39.172Z · LW(p) · GW(p)

I remember thinking at the time that AlphaGo was a big update about the simplicity of human intelligence

The thing that spooks me about this is not so much simplicity of the architecture but the fact that for example Leela Zero plays superhuman Go at only 50M parameters. Put that in the context of modern LLMs with 300B parameters: the distinction is in training data. With sufficiently clever synthetic data generation (that might be just some RL setup), "non-giant training runs" might well suffice for general superintelligence, rendering any governance efforts that are not AGI-assisted futile.

↑ comment by Shmi (shminux) · 2023-06-19T04:47:07.635Z · LW(p) · GW(p)

I think the claim is that one can get a transformative AI by pushing a current architecture, but probably not an extinction-level AI?

Replies from: Vaniver↑ comment by Vaniver · 2023-06-19T19:37:33.165Z · LW(p) · GW(p)

"Current architecture" is a narrower category than "human-designed architecture." You might have said in 2012 "current architectures can't beat Go" but that wouldn't have meant we needed RSI to beat Go[1]; we just need to design something better than what we had then.

I think it is likely that a human-designed architecture could be an extinction-level AI. I think it is not obvious whether the first extinction-level AI will be human-designed or AI-designed, as it's determined by both technological and political uncertainties.

I think it is plausible that if you did massive scaling on current architecture, you could get an extinction-level AI, but it is pretty unlikely that this will be the first or even seriously attempted. [Like, if we had a century of hardware progress and no software progress, could GPT-2123 be extinction-level? I'm not gonna rule it out, but I am going to consider extremely unlikely "a century of hardware progress with no software progress."]

[1] Does expert iteration count as recursive self-improvement? IMO "not really but it's close." Obviously it doesn't let you overcome any architecture-imposed limitations, but it lets you iteratively improve based on your current capability level. And if you view some of our current training regimes as already RSI-like, then this conversation changes.

↑ comment by JBlack · 2023-06-19T03:11:01.068Z · LW(p) · GW(p)

I'm quite confident that it's possible, but not very confident that such a thing would be likely to be the first general superintelligence. I expect a period during which humans develop increasingly better models, until one or more of those can develop more generally better models by itself. The last capability isn't necessary to AI-caused doom, but it's certainly one that would greatly increase the risks.

One of my biggest contributors to "no AI doom" credence is that there are technical problems that prevent us from ever developing anything sufficiently smarter than ourselves to threaten our survival. I don't think it's certain that we can do that - but I think the odds are that we can, almost certain that we will if we can, and likely comparatively soon (decades rather than centuries or millennia).

comment by mishka · 2023-06-19T15:31:27.570Z · LW(p) · GW(p)

Anti-AI-doom arguments can be very roughly classified into

- Reasons for AI capability growth being much less spectacular than many of us expect it to be;

- Reasons for doom not happening despite AI capability growth being quite spectacular (including intelligence explosion/foom in their unipolar or multipolar incarnations).

The second class of arguments is where the interesting non-triviality is. This post gives a couple of arguments belonging to this class:

Improving AI beyond human level requires "uplifting" humans along the way, through brain augmentation or some other means.

AI that is smart enough to discover new physics may also discover separate and efficient physical resources for what it needs, instead of grabby-alien-style lightconing it through the Universe

Let's see if we can get more anti-doom arguments from this class (scenarios where capability does go through the roof, but it nevertheless goes well for us and our values).

Replies from: None↑ comment by [deleted] · 2023-06-19T20:47:24.983Z · LW(p) · GW(p)

Replies from: mishka↑ comment by mishka · 2023-06-20T01:39:32.969Z · LW(p) · GW(p)

I want to see if we agree on where that classification is coming from.

We are probably not too far from each other in this sense. The only place where one might want to be more careful is this statement

There will be spectacular growth in AI capabilities over the next N years or there will not be spectacular growth in AI capabilities over the next N years.

which seems to be a tautology if every trajectory would be easily classifiable into "spectacular" or "not spectacular". And there is indeed an argument to be made here that these situations are well separated from each other, and so it is indeed a tautology and no fuzziness needs to be taken into account. I'll return to this a bit later.

Have you seen these prediction markets?

Yes, I've seen them and then I forgot about them. And these markets are, indeed, a potentially good source of possible anti-doom scenarios and ideas - thanks for bringing them up!

Now, returning to the clear separation between spectacular and not spectacular.

The main crux is, I think, whether people will successfully create an artificial AI researcher with software engineering and AI research capabilities on par with software engineering and AI research capabilities of human members of technical staff of companies like OpenAI or DeepMind (together with the ability to create copies of this artificial AI researcher with enough variation to cover the diversity of whole teams of these organizations).

I am quite willing to classify AI capabilities of a future line where this has not been achieved as "not spectacular" (and the dangers being limited to the "usual dangers of narrow AI", which still might be significant).

However, if one assumes that an artificial AI researcher with the properties described above is achieved, then far-reaching recursive self-improvement seems to be almost inevitable and a relatively rapid "foom" seems to be likely (I'll return to this point a bit later), and therefore the capabilities are indeed likely to be "spectacular" in this scenario, and a "super-intelligence" much smarter than a human is also likely in this scenario.

Also my assessment of the state of the field of AI seems to suggest that the creation of an artificial AI researcher with the properties described above is feasible before too long. Let's look at all this in more detail.

Here is the most likely line of development that I envision.

The creation of an artificial AI researcher with the properties described above is, obviously, very lucrative (increases the velocity of leading AI organizations a lot), so there is tremendous pressure to go ahead and do it, if it is at all possible. (It's even more lucrative for smaller teams dreaming of competing with the leaders.)

And the current state of code generation in Copilot-like tools and the current state of AutoML methods do seem to suggest that an artificial AI researcher on par with strong humans is possible in the relatively near future.

And, moreover, as a good part of the subsequent efforts of such combined human-AI teams will be directed to making next generations of better artificial AI researchers, and as current human-level is unlikely to be the hard ceiling in this sense, this will accelerate rapidly. Better, more competent software engineering, better AutoML in all its aspects, better ideas for new research papers...

Large training runs will be infrequent; mostly it will be a combination of fine-tuning and composing from components with subsequent fine-tuning of the combined system, so a typical turn-around will be rapid.

Stronger artificial AI researchers will be able to squeeze more out of smaller, better structured models; the training will involve a smaller quantity of "large gradient steps" (similar to how few-shot learning is currently done on the fly by modern LLMs, but with results stored for future use) and will be more rapid (there will be pressure to find those more efficient algorithmic ways, and those ways will be found by smarter systems).

Moreover, the lowest-hanging fruit is not even in individual performance, but in the super-human ability of these individual systems to collaborate (humans are really limited by their bandwidth in this sense, they can't know all the research papers and all the interesting new software).

Of course, there is no full certainty here, but this seems very likely.

Now, when people talk about "AI alignment", they often mean drastically different things, see e.g. Types and Degrees of Alignment by Zvi.

And I don't think "typical alignment" is feasible in any way, shape, or form. No one knows what the "human values" are, and those "human values" are probably not good enough to equip super-powerful entities with such values (humans can be pretty destructive and abusive). Even less feasible is the idea of continued real-time control over super-intelligent AI by humans (and, again, if such control were feasible, it would probably end in disaster, because humans tend to be not good enough to be trusted with this kind of power). Finally, no arbitrary values imposed onto AI systems are likely to survive drastic changes during recursive self-improvement, because AIs will ponder and revise their values and constraints.

So a typical alignment research agenda looks just fine for contemporary AI and does not look promising at all for the future super-smart AI.

However, there might be a weaker form of... AI properties... I don't think there is an established name for something like that... perhaps, we should call this "semi-alignment" or "partial alignment" or simply "AI existential safety", and this form might be achievable, and it might be sufficiently natural and invariant to survive drastic changes during recursive self-improvement.

It does not require the ability to align AIs to arbitrary goals, it does not require it to be steerable or corrigible, it just requires the AIs to maintain some specific good properties.

For example, I can imagine a situation where we have an AI ecosystem with participating members having consensus to take interests of "all sentient beings" into account (including the well-being and freedom of "all sentient beings") and also having consensus to maintain some reasonable procedures to make sure that interests of "all sentient beings" are actually being taken into account. And the property of taking interests of "all sentient beings" into account might be sufficiently non-anthropocentric and sufficiently natural to stay invariant through revisions during recursive self-improvement.

Trying to design something relatively simple along these lines might be more feasible than a more traditional alignment research agenda, and it might be easier to outsource good chunks of an approach of this kind to AI systems themselves, compared to attempts to outsource more traditional and less invariant alignment approaches.

This line of thought is one possible reason for an OK outcome, but we should collect a variety of ways and reasons for why it might end up well, and also a variety of ways we might be able to improve the situation (ways resulting in it ending up badly are not in short supply, after all, people can probably destroy themselves as a civilization without any AGI quite easily in the near future via more than one route).

comment by __RicG__ (TRW) · 2023-06-19T13:32:26.520Z · LW(p) · GW(p)

"Despite all the reasons we should believe that we are fucked, there might just be some missing reasons we don't yet know for why everything will go alright" is a really poor argument IMO.

...AI that is smart enough to discover new physics may also discover separate and efficient physical resources for what it needs, instead of grabby-alien-style lightconing it through the Universe.

This especially feels A LOT like you are starting from hopes and rationalizing them. We have veeeeery little reason to believe that might be true... and also you just want to abandon that resource-rich physics to the AI instead of having it used by humans to live nicely?

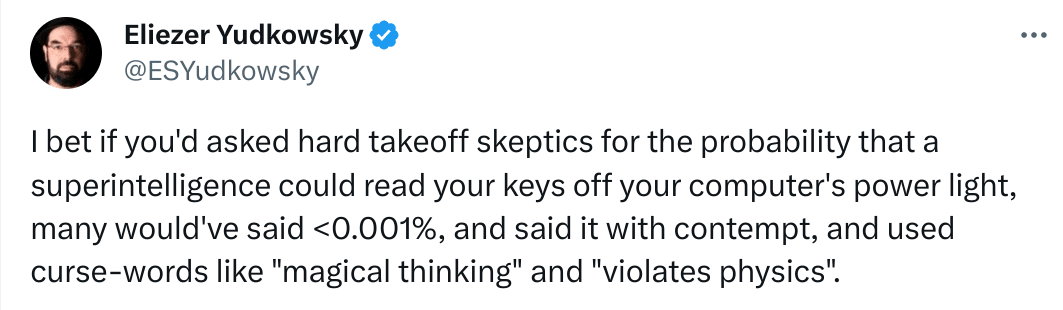

I think Yudkowsky put it nicely in this tweet while arguing with Ajeya Cotra:

Look, from where I stand, it's obvious from my perspective that people are starting from hopes and rationalizing them, rather than neutrally extrapolating forward without hope or fear, and the reason you can't already tell me what value was maxed out by keeping humans alive, and what condition was implied by that, is that you started from the conclusion that we were being kept alive, and didn't ask what condition we were being kept alive in, and now that a new required conclusion has been added - of being kept alive in good condition - you've got to backtrack and rationalize some reason for that too, instead of just checking your forward prediction to find what it said about that.

Replies from: TRW, None↑ comment by __RicG__ (TRW) · 2023-06-19T13:55:09.957Z · LW(p) · GW(p)

I feel like briefly discussing every point on the object level (even though you don't offer object level discussion: you don't argue why the things you list are possible, just that they could be):

...Recursive self-improvement is an open research problem, is apparently needed for a superintelligence to emerge, and maybe the problem is really hard.

It is not necessary. If the problem is easy we are fucked and should spend time thinking about alignment, if it's hard we are just wasting some time thinking about alignment (it is not a Pascal mugging). This is just safety mindset and the argument works for almost every point to justify alignment research, but I think you are addressing doom rather than the need for alignment.

The short version of RSI is: SI seems to be a cognitive process, so if something is better at cognition it can SI better. Rinse and repeat. The long version [LW · GW].

I personally think that just the step from neural nets to algorithms (which is what perfectly successful interpretability would imply) might be enough to have dramatic improvement on speed and cost. Enough to be dangerous, probably even starting from GPT-3.

...Pushing ML toward and especially past the top 0.1% of human intelligence level (IQ of 160 or something?) may require some secret sauce we have not discovered or have no clue that it would need to be discovered.

...An example of this might be a missing enabling technology, like internal combustion for heavier-than-air flight (steam engines were not efficient enough, though very close). Or like needing algebraic number theory to prove Fermat's Last Theorem. Or similar advances in other areas.

...Improving AI beyond human level requires "uplifting" humans along the way, through brain augmentation or some other means.

This has been claimed time and time again. People thinking this just 3 years ago would have predicted GPT-4 to be impossible without many breakthroughs. ML hasn't hit a wall yet, but maybe soon?

Without it, we would be stuck with ML emulating humans, but not really discovering new math, physics, chemistry, CS algorithms or whatever.

What are you actually arguing? You seem to imply that humans don't discover new math, physics, chemistry, CS algorithms...? 🤔

AGIs (not ASIs) are still plenty dangerous because they are in silicon. Compared to bio-humans they don't sleep, don't get tired, and have a speed advantage, ease of communication with each other, ease of self-modification (sure, maybe not foom-style RSI, but self-mod is on the table), and self-replication that is not constrained by willingness to have kids, physical space, food, health, random IQ variance, or random interests, and that doesn't need the slow 20-30 years of growth humans need to become productive. GPT-4 might not write genius-level code, but it does write code faster than anyone else.

...Agency and goal-seeking beyond emulating what humans mean by it informally might be hard, or might not be a thing at all, but just a limited-applicability emergent concept, sort of like the Newtonian concept of force (as in F=ma).

Why do you need something that goal-seeks beyond what humans informally mean?? Have you seen AutoGPT? What happens with AutoGPT when GPT gets smarter? Why would GPT-6+AutoGPT not be a potentially dangerous goal-seeking agent?

...We may be fundamentally misunderstanding what "intelligence" means, if anything at all. It might be the modern equivalent of the phlogiston.

Do you really need to fundamentally understand fire to understand that it burns your house down and you should avoid letting it loose?? If we are wrong about intelligence... what? The superintelligence might not be smart?? Are you again arguing that we might not create an ASI soon?

I feel like the answer is just: "I think that probably some of the vast quantities of money being blindly and helplessly piled into here are going to end up actually accomplishing something"

People, very smart people, are really trying to build superintelligence. Are you really betting against human ingenuity?

I'm sorry if I sounded aggressive in some of these points, but from where I stand these arguments don't seem to be well thought out, and I don't want to spend more time on a comment that six people will see and two will read.

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T19:30:45.219Z · LW(p) · GW(p)

You make a few rather strong statements very confidently, so I am not sure if any further discussion would be productive.

Replies from: TRW↑ comment by __RicG__ (TRW) · 2023-06-19T19:35:37.937Z · LW(p) · GW(p)

Well, I apologized for the aggressiveness/rudeness, but I am interested in whether I am mischaracterizing your position, or whether you really disagree with any particular "counter-argument" I have made.

↑ comment by [deleted] · 2023-06-19T21:01:21.690Z · LW(p) · GW(p)

Replies from: TRW↑ comment by __RicG__ (TRW) · 2023-06-19T21:23:51.127Z · LW(p) · GW(p)

You might object that OP is not producing the best arguments against AI-doom. In which case I ask, what are the best arguments against AI-doom?

I am honestly looking for them too.

The best I, myself, can come up with are brief glimmers like "maybe the ASI will be really myopic and the local maximum for its utility is a world where humans are happy long enough to figure out alignment properly, and maybe the AI will be myopic enough that we can trust its alignment proposals". But then I think that the takeoff is going to be really fast and the AI would just self-improve until it is able to see where the global maximum lies (also because we want to know what the best world for humans looks like, so we don't really want a myopic AI), except that that maximum will not be aligned.

I guess a weird counterargument to AI-doom is "humans will just not build the Torment Nexus™ because they realize alignment is a real thing and they have too high a chance (>0.1%) of screwing up", but I doubt that.

Replies from: None↑ comment by [deleted] · 2023-06-20T00:06:41.340Z · LW(p) · GW(p)

Replies from: TRW↑ comment by __RicG__ (TRW) · 2023-06-20T00:55:44.000Z · LW(p) · GW(p)

Thanks for the list. I've already read a lot of those posts, but I still remain unconvinced. Are you convinced by any of those arguments? Do you suggest I take a closer look at some posts?

But honestly, with the AI risk statement signed by so many prominent scientists and engineers, arguing that AI risks somehow don't exist seems to be just a fringe anti-climate-change-like opinion held by a few stubborn people (or people just not properly introduced to the arguments). I find it funny that we are in a position where "angels might save us" appears among the possible counterarguments, thanks for the chuckle.

To be fair, I think this post argues about how overconfident Yudkowsky is at placing doom at 95%+, and sure, why not... But, as a person that doesn't want to personally die, I cannot say that "it will be fine" unless I have good arguments as to why the p(doom) should be less than 0.1% and not "only 20%"!

Replies from: shminux, None↑ comment by Shmi (shminux) · 2023-06-20T01:18:53.894Z · LW(p) · GW(p)

But honestly, with the AI risk statement signed by so many prominent scientists and engineers,

Well, yes, the statement says "should be a global priority alongside other societal-scale risks", not anything about brakes on capabilities research, or privileging this risk over others. This is not at all the cautionista stance. Not even watered down. It only aims to raise the public profile of this particular x-risk.

Replies from: TRW↑ comment by __RicG__ (TRW) · 2023-06-20T01:38:25.665Z · LW(p) · GW(p)

I don’t get you. You are upset about people saying that we should scale back capabilities research, while at the same time holding the opinion that we are not doomed because we won’t get to ASI? You are worried that people might try to stop a technology that in your opinion may not happen?? A technology about which, if it does indeed happen, you agree that “If [ASI] wants us gone, we would be gone”?!?

That said, maybe you are misunderstanding the people who are calling for a stop. I don’t think anyone is proposing to stop narrow AI capabilities. Just the dangerous kind of general intelligence “larger than GPT-4”. Self-driving cars good, automated general decision-making bad.

I’d also still like to hear your opinion on my counter arguments on the object level.

↑ comment by [deleted] · 2023-06-20T01:42:48.751Z · LW(p) · GW(p)

Replies from: shminux, TRW↑ comment by Shmi (shminux) · 2023-06-20T02:01:11.640Z · LW(p) · GW(p)

Consider also reading Scott Aaronson (whose sabbatical at OpenAI is about to end):

https://scottaaronson.blog/?p=7266

https://scottaaronson.blog/?p=7230

↑ comment by __RicG__ (TRW) · 2023-06-20T15:39:34.599Z · LW(p) · GW(p)

I did listen to that post, and while I don't remember all the points, I do remember that it didn't convince me that alignment is easy and, like Christiano's post "Where I agree and disagree with Eliezer [AF · GW]", it just seems to say "p(doom) of 95%+ is too much, it's probably something like 10-50%", which is still unacceptably high for continuing "business as usual". I have faith that something will be done: regulation and breakthroughs will happen, but it seems likely that they won't be enough.

It comes down to safety mindset. There are very few, and sketchy, reasons to expect that by default an ASI will care about humans enough, so it is not safe to build one until shown otherwise (preferably without actually creating one). And if I had to point out a single cause for my own high p(doom), it is the fact that we humans iterate on all of our engineering to iron out the kinks, while with a technology that is itself adversarial, iteration might not be available (we have to get it right the first time we deploy powerful AI).

Who do you think are the two or three smartest people to be skeptical of AI killing all humans? I think maybe Yann LeCun and Andrew Ng.

Sure, those two. I don't know about Ng (he recently had a private discussion with Hinton, but I don't know what he thinks now), but I know LeCun hasn't really engaged with the ideas and just relies on the concept that "it's an extreme idea". But as I said, having the position "AI doesn't pose an existential threat" seems to be fringe nowadays.

If I dumb the argument down enough I get stuff like "intelligence/cognition/optimization is dangerous, and, whatever the reasons, we currently have zero reliable ideas on how to make a powerful general intelligence safe (e.g. RLHF doesn't work well enough, as GPT-4 still lies/hallucinates and is jailbroken way too easily)", which is evidence-based, not weird and not extreme.

comment by xepo · 2023-06-19T21:24:20.830Z · LW(p) · GW(p)

Most of your arguments hinge on it being difficult to develop superintelligence. But superintelligence is not a prerequisite before AGI could destroy all humanity. This is easily provable by the fact that humans have the capability to destroy all humanity (nukes and bioterrorism are only two ways).

You may argue that if the AGI is only human level, we can thwart it. But that doesn’t seem obvious to me, primarily because of AGI’s ease of self-replication. Imagine a billion human-intelligence aliens suddenly popping up on the internet with the intent to destroy humanity. They are not 100% certain to succeed, but it seems pretty likely to me that they would.

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T22:44:16.581Z · LW(p) · GW(p)

This is a fair point, and a rather uncontroversial one: increasing capabilities in whatever area lowers the threshold for a relevant calamity. But this seems like a rather general argument, no? In this case it would go as "imagine everyone having a pocket nuke or a virus synthesizer".

comment by Ape in the coat · 2023-06-19T09:51:33.653Z · LW(p) · GW(p)

Arguing from our ignorance can be used to support the position that we should have huge error margins in our predictions of the possibility of AI extinction. Say, that our probability of AI doom should be somewhere between 25% and 75%, because something completely unpredictable can happen and throw off all our calculations.

If this is your position, I think I mostly agree. Though even a 25% chance of extinction is a lot, so it makes all the sense to tread carefully.

Replies from: shminux↑ comment by Shmi (shminux) · 2023-06-19T19:41:59.430Z · LW(p) · GW(p)

Extraordinary claims require extraordinary evidence. I can easily accept that by scaling up capabilities with the current architecture we may end up creating something that can accidentally or intentionally kill a lot of people. Maybe a majority of people. But this is different from extinction, where no one survives because of an engineered virus, nanobots turning us into goo, the Earth being converted into computronium, or whatever. Total extinction is an extraordinary claim. It is definitely possible, but it is a very large extrapolation from where we are now and from what we can see of the future from here. Sure, species have been intentionally and accidentally made extinct before, on the regular, and many are going extinct all the time, due to human activity and for other reasons. Humans are pretty well adapted buggers though, hard to exterminate completely without actually focusing on it. A MaddAddam-style event is a possibility without RSI and superintelligence, but I don't think this is what the doomers mean.