Proof of posteriority: a defense against AI-generated misinformation

post by jchan · 2023-07-17T12:04:29.593Z · 3 comments

Summary Illustration of the two kinds of proofs: car rental case General principle Application: proving that data wasn't generated by AI AI makes proof of posteriority difficult The solution must be analog, not digital Appendix: Why the timestamping approach is more appealing than alternatives None 3 comments

Summary

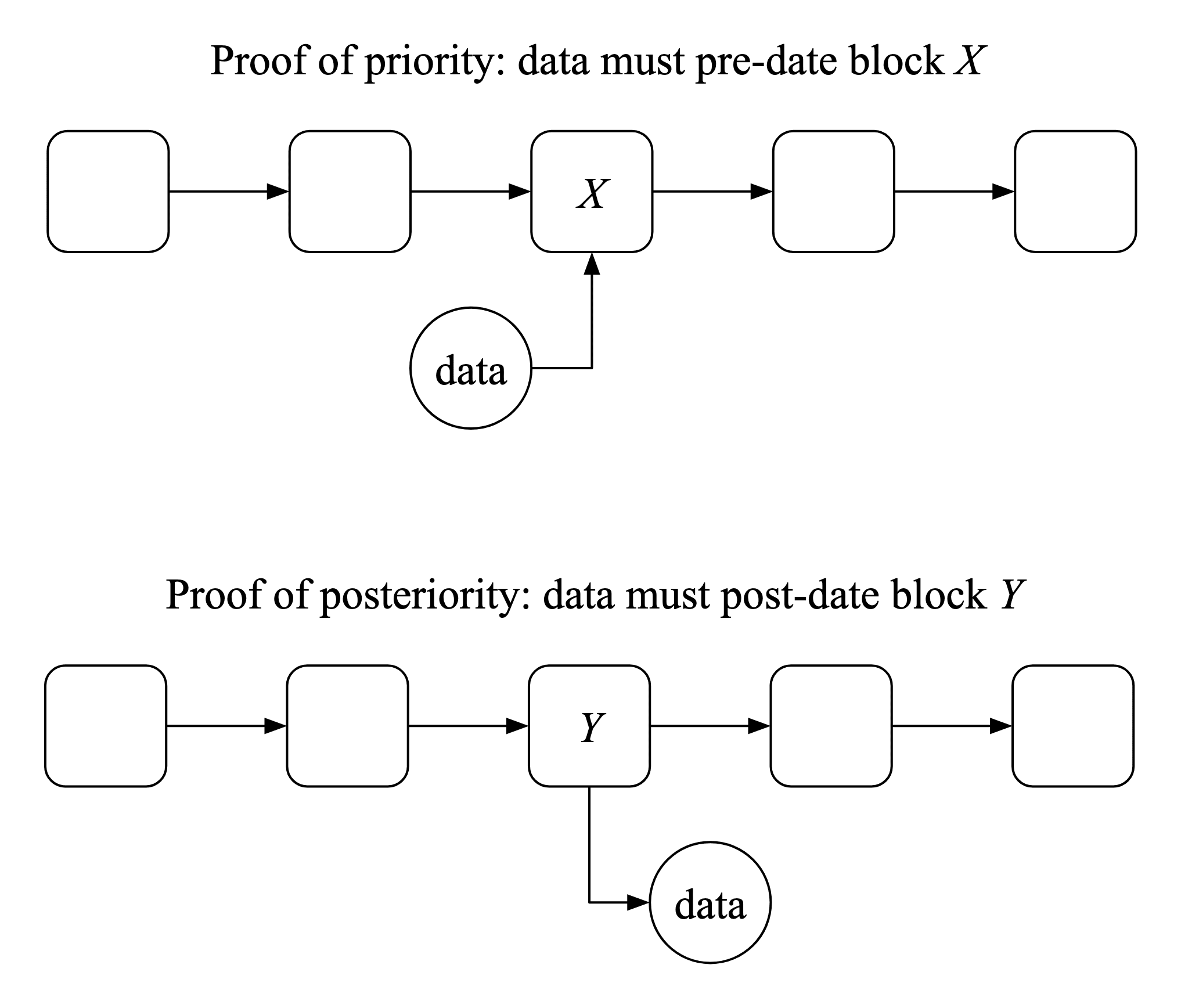

- A proof of priority shows that a piece of data must have been created before a certain time.

- A proof of posteriority shows that a piece of data must have been created after a certain time.

- By combining the two, you can prove that the data must have been created within a certain time interval. If it would be computationally infeasible for AI to generate the data within that time, then this proves that the data is authentic (i.e. depicting real events) and not AI-generated misinformation.

- Proof of priority is easy; proof of posteriority is tricky. Therefore the success of the whole scheme hinges upon the latter.
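To make "combining the two" concrete, here is a minimal sketch that assumes each proof has already been reduced to a single timestamp in seconds. Proofs of posteriority give lower bounds and proofs of priority give upper bounds, so the tightest interval is the latest "after" bound paired with the earliest "before" bound. The function name `creation_interval` is hypothetical and not from the post.

```python
from typing import Iterable, Tuple


def creation_interval(
    after_times: Iterable[float], before_times: Iterable[float]
) -> Tuple[float, float]:
    """Intersect bounds from several proofs (hypothetical timestamps in seconds).

    after_times:  times the data provably came AFTER (proofs of posteriority)
    before_times: times the data provably came BEFORE (proofs of priority)
    Returns (earliest, latest): the tightest window in which it was created.
    """
    earliest = max(after_times)  # the strongest lower bound wins
    latest = min(before_times)   # the strongest upper bound wins
    if earliest > latest:
        raise ValueError("inconsistent proofs: a priority bound precedes a posteriority bound")
    return earliest, latest
```

The width `latest - earliest` is the time budget a would-be forger has; everything else in the scheme is about making that budget too small.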

Illustration of the two kinds of proofs: car rental case

Let's say you're renting from ACME Rent-a-Car. You know that ACME is maximally sleazy and will try to charge you a fee for every dent and scratch, even if it was already like that beforehand. Therefore, before taking possession of the car, you take out your phone and record a video where you walk around the car, pointing out all the existing damage. You expect to use this video as evidence if/when a dispute arises.

But this isn't enough - ACME can accuse you of having made the video at the end of your rental, after you've already caused all the damage. To guard against this accusation, you immediately embed a hash of your video into a Bitcoin transaction, which is incorporated into the public blockchain. Then you can point to this transaction as proof that the video existed before the time you are known to have driven the car off the lot. This is a proof of priority.
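The post doesn't say how the hash gets into the transaction. One common approach, assumed here, is an `OP_RETURN` output, which carries arbitrary data and can never be spent. The sketch below only builds that output script from the video's SHA-256 digest. Funding, signing, and broadcasting the transaction would need a wallet and are left out, and the filename in the usage note is hypothetical.

```python
import hashlib

OP_RETURN = 0x6A  # Bitcoin script opcode marking an unspendable, data-carrying output


def file_digest(path: str) -> bytes:
    """SHA-256 of a file, read in 1 MiB chunks so a long video need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.digest()


def op_return_script(digest: bytes) -> bytes:
    """Output script committing to a 32-byte digest: OP_RETURN <push 32> <digest>."""
    if len(digest) != 32:
        raise ValueError("expected a 32-byte SHA-256 digest")
    # Opcodes 0x01..0x4b push that many following bytes, so 0x20 pushes exactly 32.
    return bytes([OP_RETURN, len(digest)]) + digest
```

For example, `op_return_script(file_digest("walkaround.mp4")).hex()` yields the script to place in an output. Once the transaction is mined, anyone holding the video can recompute the digest and find it on-chain.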

When you return the car, you're faced with the dual problem. The car is in the same condition as when you picked it up, but you're worried that ACME will come after you for damage that occurs later (due to ACME's own recklessness, or some subsequent renter). So you make another walk-around video, pointing out all the undamaged areas. But again this isn't good enough, since ACME might accuse you of having made that video at the beginning of your rental, before you (supposedly) busted up the car. To guard against this accusation, you do the reverse of what you did before - you write the hash of the latest Bitcoin block on a piece of paper, and make sure that this paper is continually in view as you make the video. Then you can point to the block with that hash as proof that the video was made after you returned the car. This is a proof of posteriority.
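On the verifier's side, checking the hash on the paper means confirming that it really is the hash of a block, then reading when that block was made. A Bitcoin block hash is the double SHA-256 of the 80-byte header, displayed in reversed byte order. The header's timestamp sits at byte offset 68. The sketch below assumes the verifier already has the raw header, for example from a node. Header timestamps are only loosely enforced by the network, so the time it returns should be treated as approximate. `check_posteriority` is a hypothetical helper name.

```python
import hashlib
import struct


def block_hash(header: bytes) -> str:
    """Double SHA-256 of an 80-byte header, byte-reversed as block explorers display it."""
    if len(header) != 80:
        raise ValueError("a Bitcoin block header is exactly 80 bytes")
    return hashlib.sha256(hashlib.sha256(header).digest()).digest()[::-1].hex()


def header_timestamp(header: bytes) -> int:
    """Unix time field: little-endian uint32 after version (4) + prev hash (32) + Merkle root (32)."""
    return struct.unpack_from("<I", header, 68)[0]


def check_posteriority(header: bytes, hash_seen_in_video: str) -> int:
    """If the hash visible in the video matches this header, return the block's time,
    i.e. (approximately) the earliest moment the video could have been recorded."""
    if block_hash(header) != hash_seen_in_video.strip().lower():
        raise ValueError("hash in video does not match this block")
    return header_timestamp(header)


# Usage with the well-known genesis block header (fields serialized little-endian).
GENESIS_HEADER = bytes.fromhex(
    "01000000"
    + "00" * 32
    + "3ba3edfd7a7b12b27ac72c3e67768f617fc81bc3888a51323a9fb8aa4b1e5e4a"
    + "29ab5f49"
    + "ffff001d"
    + "1dac2b7c"
)
```

`block_hash(GENESIS_HEADER)` should reproduce the familiar genesis hash beginning `000000000019d668`.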

General principle

Both proofs make use of a public, timestamped source of randomness (e.g. the Bitcoin blockchain) that (a) cannot be predicted in advance, and (b) can be modified so as to become "causally downstream" of whatever data you create. You prove priority by incorporating your data into the randomness, and you prove posteriority by incorporating the randomness into your data.
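The two directions of dependency can be illustrated with a toy model. The `ToyLedger` below is a hypothetical stand-in for a blockchain: a bare hash chain with none of Bitcoin's proof of work or independent participants, so unlike a real chain its heads are predictable to anyone who knows the payloads. Priority folds a digest of the data into the chain. Posteriority embeds the current head into the data. Here the embedding is a plain byte prefix, which is trivially forgeable; the post goes on to argue that this is exactly the weakness for video.

```python
import hashlib


def h(*parts: bytes) -> bytes:
    """SHA-256 of the concatenated parts."""
    return hashlib.sha256(b"".join(parts)).digest()


class ToyLedger:
    """Hypothetical append-only hash chain; each head depends on the previous head and a payload."""

    def __init__(self) -> None:
        self.heads = [h(b"genesis")]

    def append(self, payload: bytes) -> int:
        self.heads.append(h(self.heads[-1], payload))
        return len(self.heads) - 1


def prove_priority(ledger: ToyLedger, data: bytes) -> int:
    """Data -> randomness: every later head now depends on this data."""
    return ledger.append(h(data))


def verify_priority(ledger: ToyLedger, data: bytes, index: int) -> bool:
    return index >= 1 and ledger.heads[index] == h(ledger.heads[index - 1], h(data))


def prove_posteriority(ledger: ToyLedger, raw: bytes):
    """Randomness -> data: the data now depends on the current head."""
    index = len(ledger.heads) - 1
    return ledger.heads[index] + raw, index


def verify_posteriority(ledger: ToyLedger, data: bytes, index: int) -> bool:
    return data.startswith(ledger.heads[index])
```

Running `prove_posteriority` and then `prove_priority` on the same data brackets it between two chain positions, which is the time interval from the summary.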

Application: proving that data wasn't generated by AI

We have already reached (or soon will reach) the point where AI can generate convincing videos of arbitrary events. This fatally undermines the entire information ecosystem to which we've grown accustomed in the modern era, since we can no longer accept the mere existence of a certain string of bits as proof that something happened. If you like living in a society where truth matters, not just power relations, then you'll think this is a bad thing.

But we might be able to salvage some of the "trustless attestation" quality of video recordings if we can combine a proof of priority and a proof of posteriority to constrain the production of the video to such a short period of time that it would be computationally infeasible for AI to generate a similarly realistic video within that time. By "computationally infeasible" I mean: it would require more computing power than exists in the world, or at least, it would cost much more than whatever value is at stake (e.g. the cost of repainting your rental car). Is this possible? That's what I want to investigate.
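The two senses of "computationally infeasible" translate directly into a check. This is a sketch only: every input is an estimate the verifier would have to supply (nothing here measures real AI capability), and the `margin` parameter is a hypothetical way of expressing "much more than".

```python
def forgery_infeasible(
    forge_flops: float,         # estimated compute needed to fake the video
    window_s: float,            # width of the creation window from the two proofs
    world_flops_per_s: float,   # estimated total compute available worldwide
    usd_per_flop: float,        # estimated market price of compute
    value_at_stake_usd: float,  # e.g. the cost of repainting the car
    margin: float = 10.0,       # hypothetical factor standing in for "much more"
) -> bool:
    # Sense 1: not enough compute exists to finish the forgery inside the window.
    if forge_flops > world_flops_per_s * window_s:
        return True
    # Sense 2: it is possible, but it would cost far more than the dispute is worth.
    return forge_flops * usd_per_flop > margin * value_at_stake_usd
```

The second test is the one that matters in everyday cases like the rental car: a forgery doesn't need to be impossible, only uneconomical.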

(I'm focusing on videos specifically, since images are a special case of videos, while the problem of audio recordings seems much less tractable by comparison.)

As a side benefit, note that such a proof will remain effective indefinitely, even if AI and computing power later advance to the point where the video can now be forged within the time limit, as long as everyone remembers and agrees that this capability did not exist at the time the video was made. (One would have to argue that some adversary secretly did have the technology the whole time.)

AI makes proof of posteriority difficult

The technique of writing a block hash on paper and including it in the video probably wouldn't work nowadays. It's easy to imagine making a video like that with just a blank piece of paper, and then, after the hash becomes available, you can quickly doctor the hash into the video to make it look like it was there the whole time. If this doctored video can be rendered at a time ratio of 1:1 or less (i.e. the rendering takes the same time as the length of the video itself, or less), then the proof of posteriority is ineffective (even if you combine it with a proof of priority to constrain the possible production time).
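The 1:1 threshold follows from a simple observation: an honest recorder needs at least the length of the video, plus some overhead, between seeing the hash and committing the result. So the window can never be shorter than the video itself, and any forger who renders at real time or faster always fits inside it. The sketch below states this in code. The overhead figure is a hypothetical input; with Bitcoin it would include waiting roughly ten minutes, on average, for the commitment to be mined.

```python
def doctoring_fits(video_len_s: float, render_ratio: float, window_s: float) -> bool:
    """True if a doctored copy could be rendered inside the window.

    render_ratio = rendering time / video length (1.0 means real time).
    """
    return render_ratio * video_len_s <= window_s


def honest_window(video_len_s: float, overhead_s: float) -> float:
    """Shortest window an honest recorder can achieve: the recording itself plus a
    hypothetical overhead (reading the hash, hashing the file, waiting for a block)."""
    return video_len_s + overhead_s
```

With a 5-minute video and 1 minute of overhead, the window is 360 s: renderers at ratio 1.0 or 0.5 fit, while one at ratio 10 does not.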

In order to make this work, you have to "overload the simulation", i.e. do something that incorporates the hash into the video in such a tightly-entangled manner that simulating it would take longer than doing the real thing. This is where we begin speculating about elaborate mechanical devices that can achieve this effect. For example:

- Write the hash on a transparency which you hold in front of the camera while you continually move, spin, and flip it at random

- Bob the camera up and down in a pattern that encodes the hash

- Shoot the video through a tank of glitter-filled water where a sequence of rising bubbles encodes the hash

- Encode the hash as a string of beads - a transparent bead representing "0" and a mirrored bead "1" - and shake it in front of the camera

- (Can you think of any other ideas?)
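Only the digital half of these devices can be shown in code: turning the block hash into a physical pattern to follow. The sketch below expands a hex hash into bits and maps them onto two of the ideas above: the bead string (transparent for 0, mirrored for 1, as in the post) and up/down camera bobs. The symbols `o`/`*` and the `up`/`down` labels are hypothetical. The part that would actually resist forgery, the analog entanglement with the scene, has no software equivalent.

```python
from typing import List


def hash_to_bits(hash_hex: str) -> List[int]:
    """Expand a hex hash into bits, most significant first (leading zeros kept)."""
    value = int(hash_hex, 16)
    n_bits = len(hash_hex) * 4
    return [(value >> (n_bits - 1 - i)) & 1 for i in range(n_bits)]


def bead_string(bits: List[int]) -> str:
    """0 -> transparent bead ('o'), 1 -> mirrored bead ('*')."""
    return "".join("*" if b else "o" for b in bits)


def bob_pattern(bits: List[int]) -> List[str]:
    """One camera movement per bit."""
    return ["up" if b else "down" for b in bits]
```

A 64-character block hash gives 256 beads or moves, since each hex digit contributes four bits.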

The solution must be analog, not digital

All of these solutions are messy and involve lots of moving parts. If you're approaching the problem from the perspective of a software engineer, you might like to imagine that there could be a software-based solution that dispenses with the need to deal with bubble-filled tanks or the like. However, this is inherently impossible by the very nature of the problem. The only way a proof of posteriority can work is if the randomness (i.e. the block hash) cannot be inserted into the base data (i.e. the video) by any digital means without a large expenditure of computing power.

In other words, a method for proving posteriority must exist in the gap between digital and analog capabilities - it must, in effect, "use the world as a computer" to generate data that would take much longer to generate via a simulation.

Is this even possible? I'm not sure. The solutions I've suggested above are certainly not airtight. For example, while I'm guessing that the string-of-beads method will work because simulating so many reflections and refractions would take a long time, it could be that there's some advanced math that makes this easy, or that the resolution of the camera wouldn't be enough to pick up on the higher-order reflections (beyond some Nth bounce) that cause most of the difficulty.
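The intuition about the beads can be quantified roughly. If light may bounce between any two different beads, a path with k bounces can visit n·(n−1)^(k−1) distinct bead sequences. The sketch below computes that count. It describes naive enumeration only and is not an estimate of real rendering cost: practical renderers sample paths and use acceleration structures, so they can be far cheaper. This is the kind of shortcut the paragraph above warns about.

```python
def bounce_sequences(n_beads: int, order: int) -> int:
    """Ordered bead sequences for a light path with `order` bounces, never hitting
    the same bead twice in a row: n * (n - 1) ** (order - 1)."""
    if order < 1:
        raise ValueError("order must be >= 1")
    return n_beads * (n_beads - 1) ** (order - 1)


def total_paths_up_to(n_beads: int, max_order: int) -> int:
    """Sum of bounce sequences for orders 1 through max_order."""
    return sum(bounce_sequences(n_beads, k) for k in range(1, max_order + 1))
```

With 256 beads (one per bit of a SHA-256 hash), second-order paths already number 65,280, and each further order multiplies the count by 255.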

We can be sure, however, that the best way of proving posteriority is going to be a constantly moving target - as AI and computing power advance, techniques that used to work will become ineffective.

Appendix: Why the timestamping approach is more appealing than alternatives

I've heard some other proposals for how to authenticate media in the face of AI that don't rely on timestamping. However, none of these seem to really address the heart of the issue:

- Analog film: It would be easy to rig up a device that exposes film to an AI-generated image to make it look like the picture was taken with an analog camera.

- Cameras equipped with trusted computing modules that sign any pictures taken: This clearly isn't "trustless", since it requires you to trust the camera manufacturer (that their private key didn't get hacked, or that they're not in on the deception, or that they weren't bribed or coerced, etc.).

3 comments

comment by Dagon · 2023-07-17T15:18:42.912Z

Interesting topic. Note that even the blockchain example is not trustless, it's just distributed trust. This is all about "difficult to arrange this amount of evidence without the claim being true".

I haven't worked it through, but my intuition is that there's a symmetry which can be exploited. Proof of posteriority of a state of the universe IS proof of priority of an earlier state. And vice versa.

comment by Herb Ingram · 2023-07-18T07:13:44.145Z

Unfortunately for this scheme, I would expect rendering time for AI videos to eventually be faster than real time. So, as the post implies, even if we had a reasonably good way to prove posteriority, it may not suffice to certify videos as "non-AI" for long.

On the other hand, as long as rendering AI videos is slower than real time, proof of priority alone might go a long way. You can often argue that prior to some point in time you couldn't reasonably have known what kind of video you should fake.

The "analog requirement" reminds me of physical unclonable functions, which might have some cross-pollination with this issue. I couldn't think of a way to make use of them but maybe someone else will.

comment by Matt Goldenberg (mr-hire) · 2023-07-17T17:21:02.496Z

Why wouldn't you just hash the video file itself?