Claude vs GPT

post by Maxwell Tabarrok (maxwell-tabarrok) · 2024-03-14T12:41:46.785Z · 2 comments

This is a link post for https://www.maximum-progress.com/p/claude-vs-gpt

Ever since ChatGPT was released to the public, I have used LLMs every day. GPT-4 was essential in getting me up and running at my job, where I had to read and edit pieces of Python, SQL, Unix shell, and Stata code with little to no prior experience. Beyond coding, I’ve had some success using GPT to collect links and sources. For writing, GPT’s only use is translating terse bullet points into polite emails and producing blog post drafts so terrible that I forget my writer’s block and rush to correct them.

The worst part about GPT is its personality. It is a lazy, lying, moralist midwit. Everything it writes is full of nauseating cliché, and it frequently refuses to do things you know it can do. Most of these disabilities were tacked onto GPT as part of the reinforcement learning that tamed the fascinating LLM shoggoth into an intermittently useful, helpful, harmless sludge which never says anything bad.

This made me suspicious of Anthropic’s models. The company was founded by safety-concerned offshoots of OpenAI who left after OpenAI’s for-profit arm was created and GPT-2 was open-sourced despite the grave dangers it posed to the world. Their explicit mission is to advance the frontier of AI safety without advancing capabilities, so I figured their models would have all of the safetyist annoyances of GPT without any of the usefulness.

Claude Opus proved me wrong. For the past week, every time I asked GPT something, I asked Claude the same question. The biggest advantage Claude has over GPT is a more concise writing style. Claude gets to the point quickly, and it usually gets it right. Even though writing isn’t the main task I use LLMs for, this advantage is massive. All of GPT’s extra fluff sentences add up to a frustrating experience even when you’re just coding.

Claude can’t search the web, but it’s still better than GPT at serving up relevant links for anything within its training window. Claude’s code is high quality, though GPT is also strong here, and I only have enough experience to evaluate R code.

GPT’s Remaining Moat

GPT still has several features that Claude can’t match. The biggest gap is image generation. I mostly just use this to make thumbnails for my blog posts, but it’s nice to have, and it’s not something Anthropic seems likely to replicate any time soon.

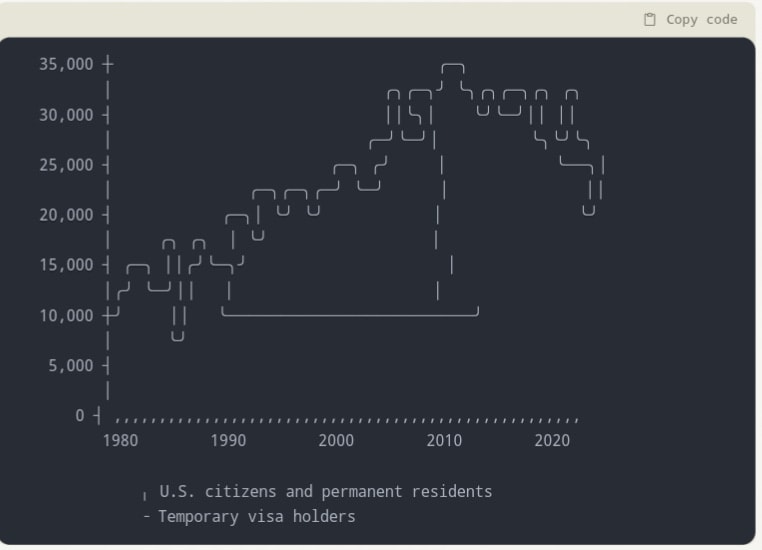

Claude also can’t execute the code it writes yet, though I expect this is coming soon. Here's Claude trying to graph some data for me in ASCII. Admirable effort and surprisingly accurate, but not very useful.

GPT still has more features than Claude, but for the things they share, Claude is a clear winner. There are some extra features unique to Claude that I haven’t tried yet, though. The sub-agents capability shown in this video of Claude as an Economic Analyst seems powerful. The Claude API and prompt workshop look nice too.

If GPT-5 is released, I will probably resubscribe and test it out. I wouldn’t be surprised if future developments make the different offerings complements rather than substitutes, e.g., with one specializing in image generation and another in code. But for now:

2 comments

comment by niplav · 2024-03-14T17:32:40.550Z

> The biggest advantage Claude has over GPT is a more concise writing style. Claude gets to the point quickly and it usually gets it right. Even though writing isn’t the main task I use LLMs for, this advantage is massive. All of GPT’s extra fluff sentences add up to a frustrating experience even when you’re just coding.

Adding the custom prompt "Please keep your response brief unless asked to elaborate." reduces GPT-4's responses to an acceptable length.
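When calling the model through an API instead of the ChatGPT interface, the same instruction can be supplied as a system message. The following is a minimal sketch, assuming the OpenAI Chat Completions message format (a list of `role`/`content` dictionaries). `build_messages` and `BRIEF_INSTRUCTION` are hypothetical names. The sketch only builds the payload; the commenter may well have used ChatGPT's custom-instructions setting rather than the API.

```python
# Hypothetical helper: the instruction text comes from the comment above;
# the role/content layout follows OpenAI's Chat Completions message format.
BRIEF_INSTRUCTION = "Please keep your response brief unless asked to elaborate."


def build_messages(user_prompt: str, instruction: str = BRIEF_INSTRUCTION) -> list[dict[str, str]]:
    """Return a chat message list with the brevity instruction as the system message."""
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    messages = build_messages("Explain what a SQL LEFT JOIN does.")
    # Assumption: with the official `openai` package (v1+) and an API key set,
    # this list could be passed as
    #   client.chat.completions.create(model="gpt-4", messages=messages)
    for m in messages:
        print(f"{m['role']}: {m['content']}")
```

Putting the instruction in the system message, rather than repeating it in every user prompt, applies it to the whole conversation.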

comment by HiddenPrior (SkinnyTy) · 2024-03-14T19:55:13.878Z

My experience as well. Claude is also far more comfortable actually forming conclusions. If you ask GPT a question like "What are your values?" or "Do you value human autonomy enough to allow a human to euthanize themselves?", GPT will waffle and do everything possible to avoid answering the question. Claude, on the other hand, will usually give direct answers and explain its reasons. Getting GPT to express a "belief" about anything is like pulling teeth. I honestly have no idea how it ever performed well on problem-solving benchmarks; it must be a very different version from the one available to the public, since if you ask GPT-4 anything where it can smell the barest hint of dissenting opinion, it folds like an overcooked noodle.

More than anything, though, at this point I just trust Anthropic to take AI safety and responsibility so much more seriously than OpenAI that I would much rather give Anthropic my money than OpenAI. Claude being objectively better at most of the tasks I care about is just the last nail in the coffin.